This article is about MySQL deadlocks and logs. How do you quickly locate MySQL problems in production and fix the resulting data anomalies? It shares experience and methods drawn from two real cases.

Recently our production MySQL had several data anomalies, all of which broke out in the early morning. Because the business scenario is a typical data warehouse application, the load during the day is low and the problems cannot be reproduced then. Some of the anomalies were downright strange, and finding the root cause was quite difficult. So how can we quickly locate MySQL problems in production and fix the anomalies? Below I share some experience and methods based on two real cases.

Case 1: Partial data update failed

One day, colleagues from the channel team reported that a very small number of channels showed 0 in a certain report, while the data for most channels was normal. This data is updated routinely by a statistics program every early morning, so one would expect either everything to be normal or everything to fail. What could make only a few individual values wrong?

The first thing to check was the log of the statistics task, but the log printed by the statistics program showed nothing abnormal, such as a failed SQL update. So what exactly happened in the database at that time? Before checking the MySQL server log, I looked at the database status out of habit:

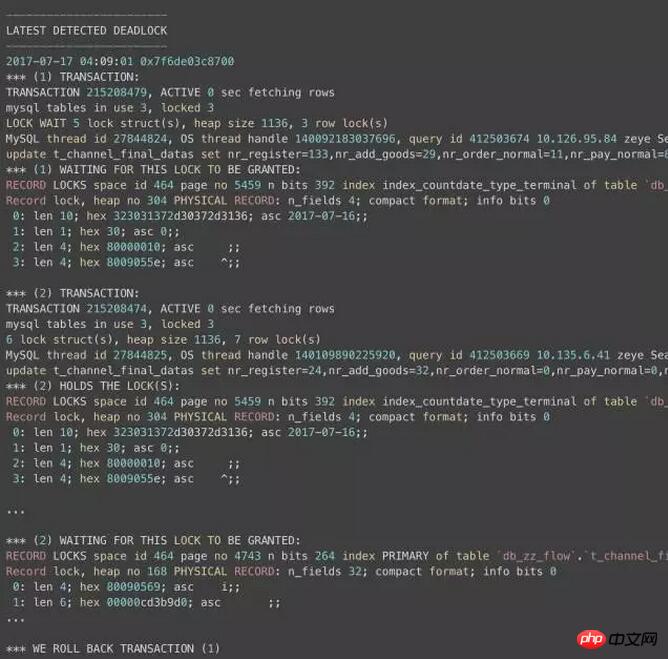

I happened to see a deadlock on exactly this update from the early morning:

Due to space limitations, much of the context is omitted here. The log shows that TRANSACTION 1 and TRANSACTION 2 each hold some row locks and each wait for a lock held by the other. MySQL finally detected the deadlock and chose to roll back TRANSACTION 1: InnoDB currently resolves a deadlock by rolling back the smaller transaction, which in practice is usually the one holding the fewest row-level exclusive locks.
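When the status output is long, it can help to pull out just the deadlock section programmatically. The following is a minimal sketch, assuming the text of SHOW ENGINE INNODB STATUS has already been fetched into a string. The function names are hypothetical; the section header LATEST DETECTED DEADLOCK and the WE ROLL BACK TRANSACTION marker are the strings InnoDB prints in that output.

```python
import re

# A section in the InnoDB status text ends at the next line made only of dashes.
DASHES = re.compile(r"^-{3,}$")
ROLLBACK = re.compile(r"\*\*\* WE ROLL BACK TRANSACTION \((\d+)\)")


def latest_deadlock_section(status_text):
    """Return the body of the LATEST DETECTED DEADLOCK section, or None."""
    lines = status_text.splitlines()
    for i, line in enumerate(lines):
        if line.strip() == "LATEST DETECTED DEADLOCK":
            body = []
            # lines[i + 1] is the dashed rule that closes the header.
            for nxt in lines[i + 2:]:
                if DASHES.match(nxt.strip()):
                    break
                body.append(nxt)
            return "\n".join(body)
    return None


def rolled_back_transaction(status_text):
    """Return the number of the transaction InnoDB rolled back, or None."""
    section = latest_deadlock_section(status_text)
    if section is None:
        return None
    match = ROLLBACK.search(section)
    return int(match.group(1)) if match else None
```

Both functions return None when the status text contains no deadlock section, which is the case described later for the lock wait timeout.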

Then there are 3 questions here:

1. Does an InnoDB row lock lock only one row?

This table uses the InnoDB engine, which supports both row locks and table locks. InnoDB implements row locks by locking index entries. This is where MySQL differs from Oracle, which locks the corresponding data rows in the data block. A consequence of this design is that InnoDB can lock individual rows only when the data is retrieved through an index condition. Otherwise the statement scans the table and locks every row it scans, which in practice behaves like a table lock! Pay special attention to this property in real applications, otherwise it may cause a large number of lock conflicts and hurt concurrency.

Because a row lock is placed on the index entry rather than on the record, two statements that access different rows still conflict if they go through the same index key. When a statement retrieves data with a range condition instead of an equality condition and requests shared or exclusive locks, InnoDB locks the index entries of the existing records that satisfy the condition, and it also takes gap locks on the ranges between them, so multiple rows are affected. Gap locks are not limited to range conditions: if an equality condition requests a lock on a record that does not exist, InnoDB uses a gap lock as well!

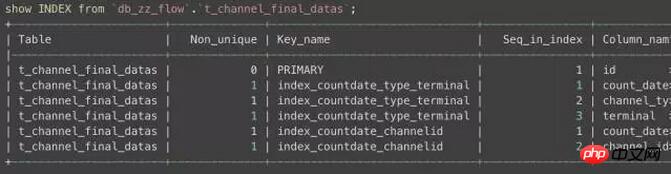

Having said that, let’s take a look at the index of our business table:

You can see that the indexes on this table are poorly designed: there are three indexes, but the update cannot make full use of any of them. Because the update does not hit an index precisely, it has to lock a range of rows, which leads to the deadlock.

Knowing the principle, we carefully built a four-column composite index so that the update hits the InnoDB index precisely. After we changed the index, the deadlock problem was indeed solved.

Note: InnoDB prints not only the transactions and the locks they hold and wait for, but also the records themselves. Unfortunately, this may exceed the length InnoDB reserves for the output (only about 1 MB can be printed, and only the latest deadlock is retained). If you cannot see the complete output, you can create a table named innodb_monitor or innodb_lock_monitor in any database. The full InnoDB status information is then written to the error log every 15 seconds. For example: create table innodb_monitor(a int)engine=innodb; and drop the table when you no longer need the output in the error log. In MySQL 5.7 and later this table-based switch is no longer available; the innodb_status_output and innodb_status_output_locks system variables serve the same purpose.

2. Why do only some update statements fail during rollback?

When a rollback happens, why do only some of the update statements fail, rather than all the updates in the whole transaction?

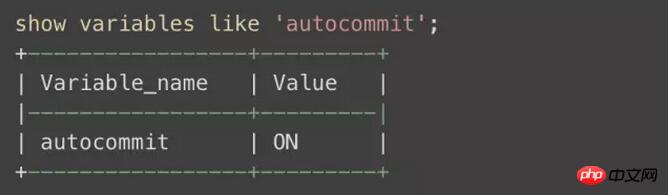

This is because autocommit is enabled by default on our InnoDB instance:

When several update or insert statements are executed, InnoDB commits immediately after each statement, persisting the change and releasing its locks. Each statement is therefore its own transaction, which is why only a few statements failed when the deadlock rolled back a transaction in this example.

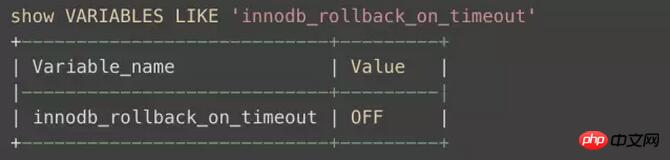
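If the report must be updated all-or-nothing, one option is to turn autocommit off and run the whole batch in a single transaction, retrying it when MySQL reports a deadlock. The sketch below illustrates that idea and is not the statistics program from this case. It assumes a DB-API 2.0 connection with autocommit disabled, and a driver that puts the MySQL error number in the first element of the exception's args (1213 is ER_LOCK_DEADLOCK). The function names are hypothetical.

```python
import time

DEADLOCK_ERRNO = 1213  # MySQL ER_LOCK_DEADLOCK


def is_deadlock(exc):
    """Assumption: the driver stores the MySQL error number in exc.args[0]."""
    return bool(exc.args) and exc.args[0] == DEADLOCK_ERRNO


def run_atomically(conn, statements, max_attempts=3, backoff_seconds=0.5):
    """Run all statements in one transaction; retry the whole batch on deadlock.

    conn: a DB-API 2.0 connection with autocommit disabled.
    statements: a list of (sql, params) pairs.
    Returns the number of attempts that were needed.
    """
    for attempt in range(1, max_attempts + 1):
        cursor = conn.cursor()
        try:
            for sql, params in statements:
                cursor.execute(sql, params)
            conn.commit()  # every statement succeeded: make them all visible
            return attempt
        except Exception as exc:
            conn.rollback()  # discard any partial work from this attempt
            if not is_deadlock(exc) or attempt == max_attempts:
                raise
            time.sleep(backoff_seconds * attempt)  # brief pause before retrying
        finally:
            cursor.close()
```

Errors other than a deadlock are re-raised after the rollback, so a failure shows up in the task log instead of leaving a few rows silently stale.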

Note that another setting can also cause only part of a transaction to be rolled back, and it deserves special attention. InnoDB has a parameter called innodb_rollback_on_timeout.

The official manual describes this:

In MySQL 5.1, InnoDB rolls back only the last statement on a transaction timeout by default. If --innodb_rollback_on_timeout is specified, a transaction timeout causes InnoDB to abort and roll back the entire transaction (the same behavior as in MySQL 4.1). This variable was added in MySQL 5.1.15.

Explanation: if this parameter is off or does not exist, only the last statement of the transaction is rolled back when a timeout occurs. If it is on, the entire transaction is rolled back when it hits a timeout.

3. How to reduce the probability of innodb deadlock?

Deadlocks are difficult to completely eliminate in row lock and transaction scenarios, but lock conflicts and deadlocks can be reduced through table design and SQL adjustment, including:

Use the lowest isolation level you can. For example, if gap locks are the problem, you can change the isolation level of the session or transaction to RC (read committed) to avoid them, but you then need to set binlog_format to row or mixed;

Design indexes carefully and access data through indexes whenever possible, so that locking is more precise and lock conflicts are less likely;

Choose a reasonable transaction size; small transactions are less likely to run into lock conflicts;

When you lock a record set explicitly, request a sufficient lock level in one step. For example, if you intend to modify data, request an exclusive lock directly rather than requesting a shared lock first and an exclusive lock at modification time, which easily causes deadlocks;

When different programs access the same group of tables, agree to access the tables in the same order. Within one table, access the rows in a fixed order as far as possible. This greatly reduces the chance of deadlock;

Prefer equality conditions when accessing data, to avoid the effect of gap locks on concurrent inserts;

Do not request a lock level higher than you actually need, and do not lock explicitly in queries unless necessary;

For certain specific transactions, table locks can be used to speed up processing or reduce the possibility of deadlock.
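The fixed-order advice above can be applied before any SQL is sent. As a minimal sketch, assuming each pending update is described by a (table, primary key, new value) tuple, sorting the batch makes every job touch tables and rows in the same order. The function name and the table names in the example are hypothetical.

```python
def order_updates(updates):
    """Sort (table, primary_key, new_value) tuples by table, then by key,
    so that concurrent jobs acquire their row locks in one agreed order."""
    return sorted(updates, key=lambda u: (u[0], u[1]))


# Hypothetical batch collected in arbitrary order by a statistics job.
batch = [("report_daily", 42, 7), ("channel", 3, 1), ("report_daily", 5, 9)]
ordered = order_updates(batch)
```

Two jobs that both sort their batches this way can still wait on each other, but they cannot wait on each other in a cycle over these rows, which is the condition a deadlock needs.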

Case 2: Weird Lock wait timeout

For several consecutive days, a task failed at 6 am and at 8 am. While running load data local infile, it reported a Java SQL exception: Lock wait timeout exceeded; try restarting transaction. After talking to colleagues on the platform team, we were told this was a problem in our own business database: either the lock wait time was too short or there was a lock conflict. But on reflection, that did not seem right. Hadn't this always worked fine? Besides, these are basically single-table tasks with no concurrent writers to conflict with.

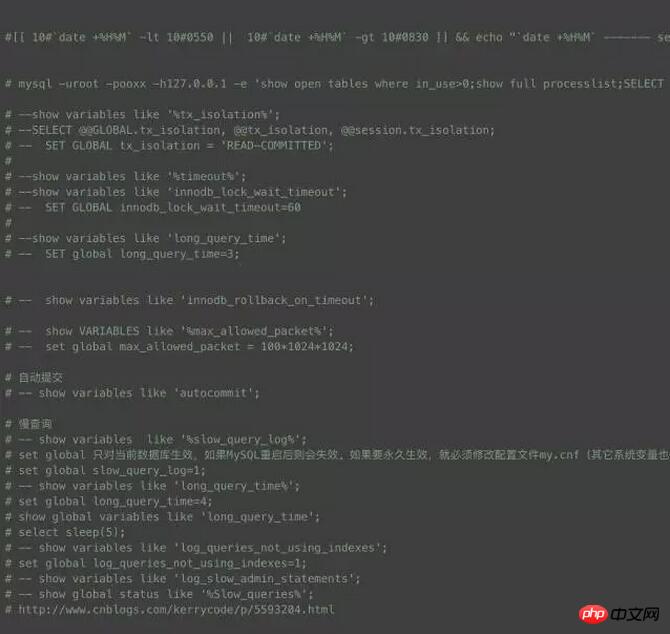

No matter whose problem it is, let’s check if there is any problem with our database first:

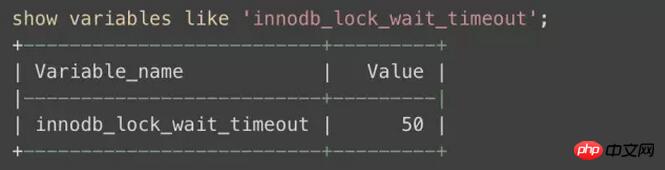

The default lock wait timeout is 50 seconds, which is really not short, so raising it was unlikely to help. I tried it anyway as a last resort, and indeed it made no difference.

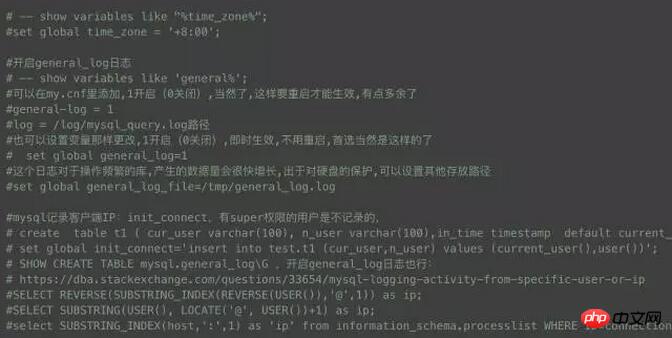

This time SHOW ENGINE INNODB STATUS\G did not show any deadlock information either. I then turned to the MySQL server logs, hoping to see what the database was doing just before and after that moment. Here is a brief overview of MySQL's log files:

(a) Error log: records problems that occur when starting, running or stopping mysqld. It is enabled by default.

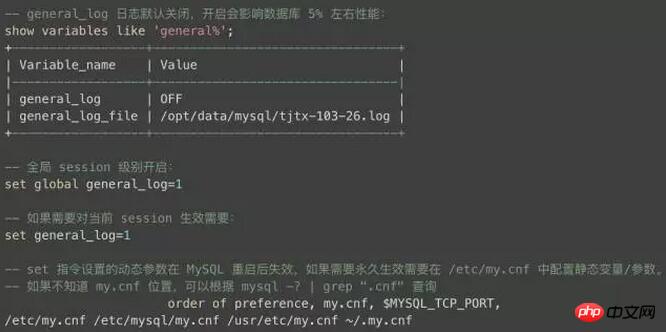

(b) General log: the general query log, which records all statements and commands. Enabling it costs the database roughly 5% in performance.

(c) binlog log: binary format, records all statements that change data, mainly used for slave replication and data recovery.

(d) Slow log: records all queries that take longer than long_query_time seconds to execute and, when log_queries_not_using_indexes is enabled, queries that do not use indexes. It is turned off by default.

(e) Innodb log: innodb redo log, undo log, used to restore data and undo operations.

From this overview, the logs relevant to this problem are most likely (d) and (b). If (d) contains nothing, the only option is to enable (b). However, (b) costs the database some performance, and because it is a full log its volume is huge, so enable it with caution:

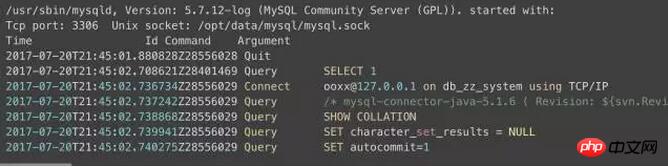

I enabled the full log only for the half hour before and after the time the problem occurred each day, and found no MySQL client request to our business database at all! The log format is as follows, recording all connections and commands:

That basically settled it: the exception was thrown before the client request ever reached our side. After repeated communication with the platform team, they finally confirmed the cause. Before executing the insert, the platform has to fetch the SQL from its own SQL task table and update the task status. That table receives a large number of concurrent inserts and updates on the hour, so some statements timed out while waiting for a lock.

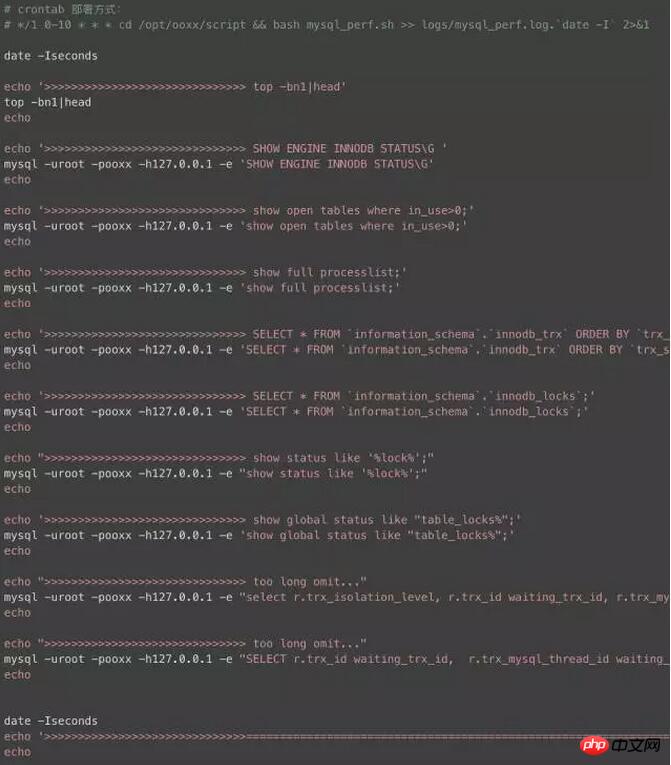

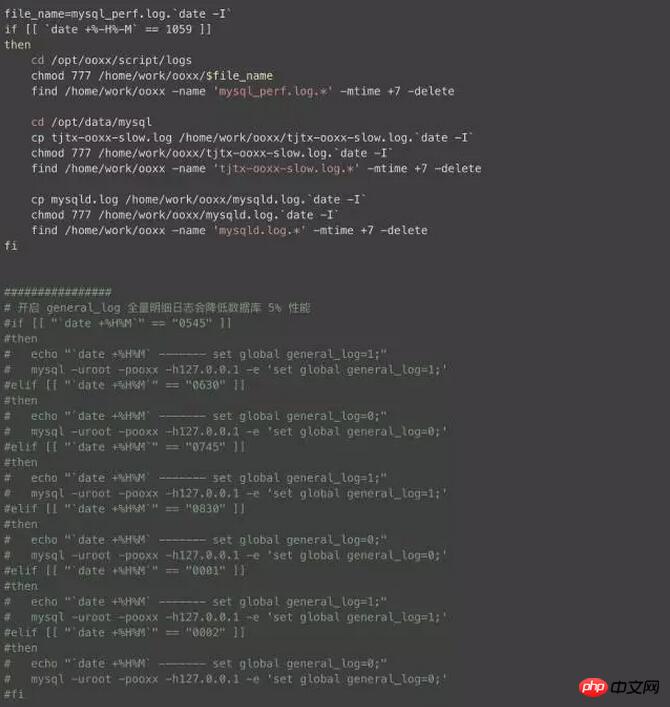

MySQL log analysis script

Because the early morning is the peak period for a data warehouse, many problems occur at that time, and some of the stranger ones are gone once the moment has passed and cannot be reproduced during the day. Capturing the logs we care about so that problems can be located quickly is therefore the top priority. I wrote a small script for this, deployed through crontab: you choose the time window in which logging is enabled, and the log is sampled every minute. Note that the general log should not be turned on lightly, because it can seriously hurt database performance.
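The script itself is not reproduced in this article. As a rough sketch of the time-window idea only, the helper below decides whether the current time falls inside a chosen window and produces the matching general_log statement. The function names and the way the statement is sent to the server are assumptions; SET GLOBAL general_log is the MySQL statement that switches the general query log on and off.

```python
from datetime import time


def in_window(now, start, end):
    """True if now is in [start, end); also handles windows that cross midnight."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def general_log_statement(now, start, end):
    """Return the statement that turns the general log on inside the window
    and off outside it. Executing it on the server is left to the caller."""
    state = "ON" if in_window(now, start, end) else "OFF"
    return f"SET GLOBAL general_log = '{state}'"
```

Run from cron once a minute, for example with a window of half an hour either side of 6 am, this keeps the general log enabled only around the time the problem occurs.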
