First of all, let’s briefly understand the crawler. That is a process of requesting a website and extracting the data you need. As for how to climb and how to climb, it will be the content of learning later, so there is no need to go into it for now. Through our program, we can send requests to the server on our behalf, and then download large amounts of data in batches.

Initiate a request: initiate a request request to the server through the url, Requests can contain additional header information.

Get the response content: If the server responds normally, we will receive a response. The response is the content of the web page we requested, which may include HTML. Json string or binary data (video, picture), etc.

Parse content: If it is HTML code, it can be parsed using a web page parser. If it is Json data, it can be converted into a Json object for parsing. If Is binary data, it can be saved to a file for further processing.

Save data: You can save it to a local file or to a database (MySQL, Redis, Mongodb, etc.)

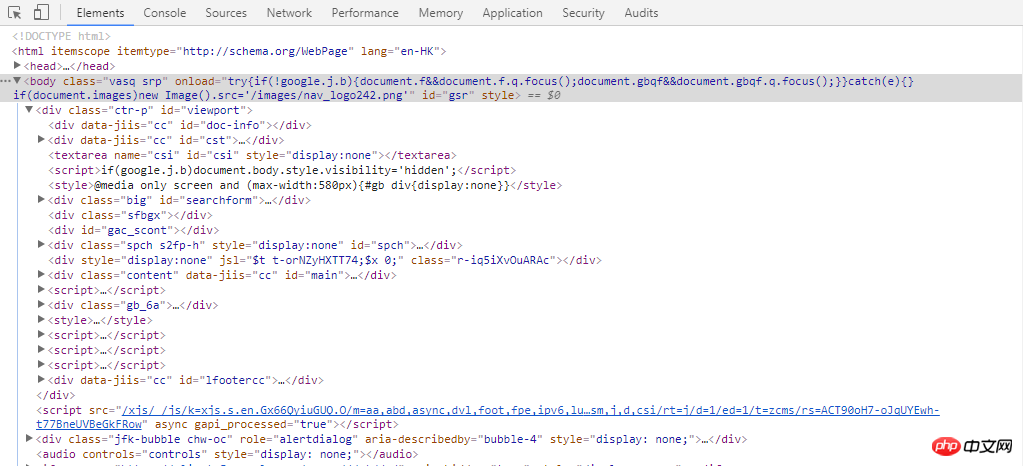

When we send a request to the server through the browser When requesting, what information does this request contain? We can explain it through Chrome's developer tools (if you don't know how to use it, read the notes in this article).

Request method: The most commonly used request methods include get request and post request. The most common post request in development is to submit it through a form. From the user's perspective, the most common one is login verification. When you need to enter some information to log in, this request is a post request.

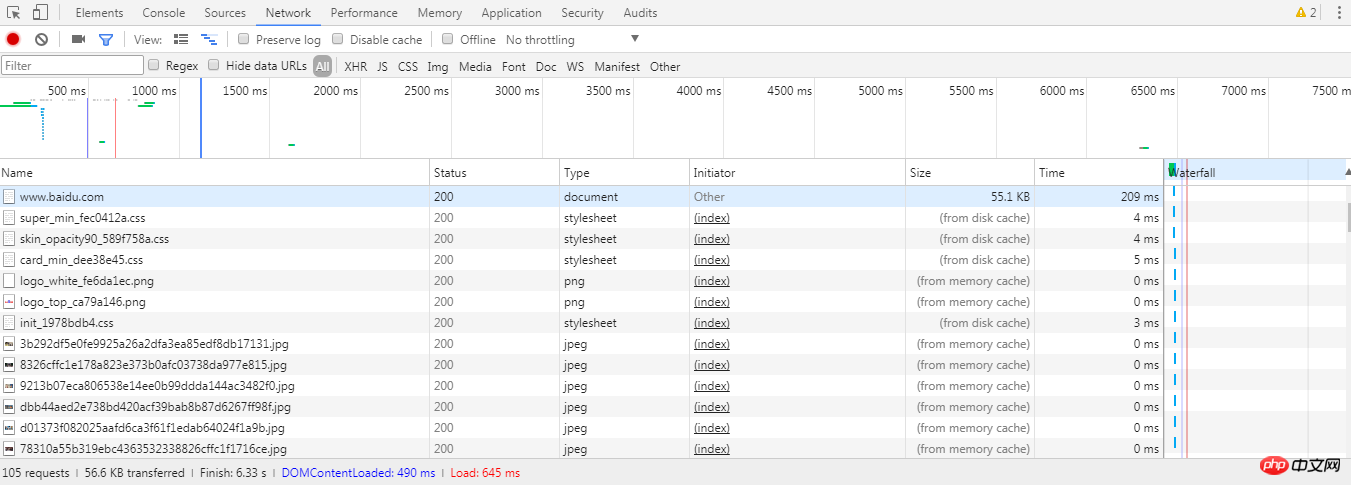

url Uniform Resource Locator: A URL, a picture, a video, etc. can all be defined using URL. When we request a web page, we can view the network tag. The first one is usually a document, which means that this document is an HTML code that is not rendered with external images, css, js, etc. Below this document we will see To a series of jpg, js, etc., this is a request initiated by the browser again and again based on the html code, and the requested address is the url address of the image, js, etc. in the html document

request headers: Request headers, including the request type of this request, cookie information, browser type, etc. This request header is still useful when we crawl web pages. The server will review the information by parsing the request header to determine whether the request is a legitimate request. So when we make a request through a program that disguises the browser, we can set the request header information.

Request body: The post request will package the user information in form-data for submission, so compared to the get request, the content of the Headers tag of the post request There will be an additional information package called Form Data. The get request can be simply understood as an ordinary search carriage return, and the information will be added at the end of the url at ? intervals.

##Response status: The status code can be seen through General in Headers. 200 indicates success, 301 jump, 404 web page not found, 502 server error, etc.

#Response header: includes content type, cookie information, etc.

Response body: The purpose of the request is to get the response body, including html code, Json and binary data.

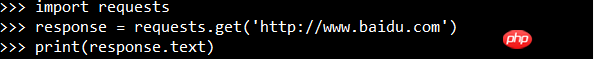

Perform web page requests through Python's request library :

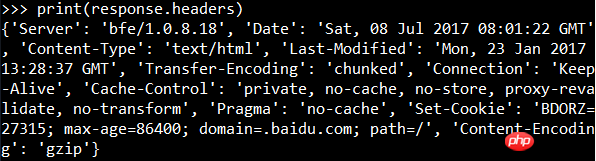

The output result is the web page code that has not yet been rendered, that is, the content of the request body. You can view the response header information:

View status code:

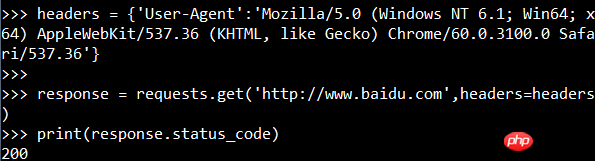

You can also add the request header to the request information:

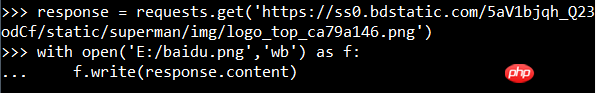

Grab the picture (Baidu logo):

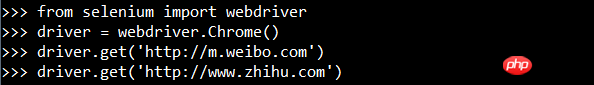

Use Selenium webdriver

Enter print(driver.page_source) and you can see that this time the code is the code after rendering.

##F12 to open the developer tools

##

##

Network tag

##

The above is the detailed content of What is a crawler? What is the basic process of crawler?. For more information, please follow other related articles on the PHP Chinese website!

Tutorial on making word document tables

Tutorial on making word document tables mysql transaction isolation level

mysql transaction isolation level How to completely delete mongodb if the installation fails

How to completely delete mongodb if the installation fails How to set up hibernation in Win7 system

How to set up hibernation in Win7 system Google account registration method

Google account registration method How to set path environment variable

How to set path environment variable How to implement CSS carousel function

How to implement CSS carousel function How to enable TFTP server

How to enable TFTP server