Along the way, this small example will teach you the basic steps of building a crawler.

Generally speaking, building a crawler takes the following steps:

Analyze the requirements (yes, requirements analysis matters; don't tell me your teacher never taught you that)

Analyze the web page source code with the help of F12 (without F12, the page source is such a mess that reading it would be the death of me)

Write the regular expression or XPath expression (the magic tools mentioned earlier)

Write the Python crawler code

Run it:

Well, it asks me for a keyword. Let me think, what should I enter? Hmm, this might reveal a bit too much about me.

Press Enter.

It looks like the download has started! Great! I looked through the downloaded pictures and, wow, my emoticon collection instantly got a lot bigger...

Okay, that's pretty much it.

"I want pictures, but I don’t want to search online"

"It’s best to download them automatically"

......

Those are the requirements. Okay, let's analyze them. At least two functions must be implemented: one is searching for pictures, the other is downloading them automatically.

First, for searching pictures, the easiest idea is to crawl the results of Baidu Images. Okay, let's go to Baidu Images and take a look.

It looks basically like this. Quite pretty.

Let's try searching for something. I type in a word and a whole series of search suggestions comes up. What is this supposed to mean...

Just pick one and press Enter.

Okay, now we see a lot of pictures. It would be great if we could crawl all of them. Notice that the URL contains the keyword.

We try changing the keyword directly in the URL, and sure enough, the page jumps to the new results!

So you can search for pictures of a specific keyword through this URL, which means that in theory we can search without ever opening the web page. The next question is how to implement automatic downloading. From what we learned earlier, we know we can use requests to fetch the URL of each image, download it, and save it as a .jpg file.

So this project looks doable.
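As a rough sketch of the "keyword in the URL" idea, the search URL can be assembled with nothing but the standard library. The helper name `build_search_url` is made up for illustration, and the parameter list is trimmed to the ones in the shorter URL used at the end of this article; whether Baidu still accepts exactly these parameters is an assumption.

```python
from urllib.parse import urlencode

BASE_URL = 'http://image.baidu.com/search/flip'


def build_search_url(keyword):
    """Return a search URL for the given keyword (hypothetical helper)."""
    params = {
        'tn': 'baiduimage',
        'ie': 'utf-8',
        'word': keyword,       # the only part that changes between searches
        'ct': '201326592',
        'v': 'flip',
    }
    # urlencode percent-encodes non-ASCII text as UTF-8 and joins with '&'
    return BASE_URL + '?' + urlencode(params)


print(build_search_url('小黄人'))
```

Letting `urlencode` do the escaping means keywords with Chinese characters or spaces end up in the same percent-encoded form the browser shows in its address bar.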

Okay, on to the next step: analyzing the web page source code. First I switch back to the traditional page. Why? Because Baidu Images currently uses a waterfall layout that loads pictures dynamically, which is a pain to process. The traditional interface with page numbers is much easier to handle.

Another tip: if you can crawl the mobile version of a site, don't crawl the desktop version, because the mobile version's code is usually much cleaner and the content you need is easier to get.

Okay, I've switched back to the traditional version. Having page numbers is still more comfortable.

Let's right-click and view the page source.

What on earth is this? How is anyone supposed to read it!!

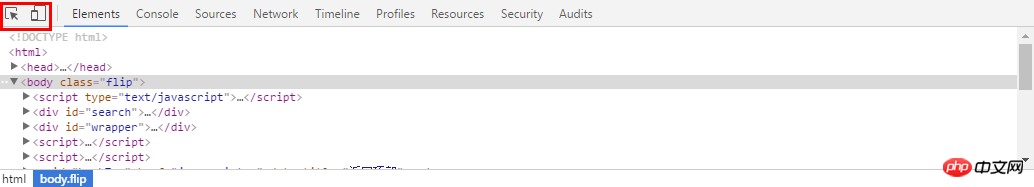

Now it's time for F12, the developer tools! Go back to the previous page and press F12, and the toolbar appears at the bottom. What we need are the two buttons in its upper left corner: one is the element picker that follows the mouse, the other switches to the mobile version. Both are very useful to us. Here we use the first one.

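Then click the part of the page whose source you want to see, and the code panel below jumps straight to that spot. Pretty awesome, right?

We copy this address.

Then we search for it in that mess of source code from before, and there it is! (Gotcha! Thought you could hide from me?) But now we are puzzled again: why does this image have so many addresses, and which one should we use? We can see thumbURL, middleURL, hoverURL, and objURL.

A little analysis shows that the first two are reduced-size versions, hoverURL is the version shown when the mouse hovers over the image, and objURL should be the one we need. If you don't believe it, open each of these addresses and compare: the objURL one is the largest and sharpest.

Now that we have found where the images are, let's analyze the code. Let me check whether every objURL really is an image.

They all seem to end in .jpg, so that should settle it. The search reports 61 matches, which means there should be 61 images.

After what we learned earlier, writing a regular expression like the following shouldn't be hard, right?

    pic_url = re.findall('"objURL":"(.*?)",', html, re.S)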
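To see why the `?` in `(.*?)` matters, here is a small sketch that runs the same pattern on a made-up fragment. The fragment only imitates the shape of the data in the page source: the `example.com` addresses and the `note` field are placeholders, not anything Baidu actually returns.

```python
import re

# Hypothetical fragment shaped like the records in the page source
SAMPLE_HTML = (
    '{"thumbURL":"http://example.com/t1.jpg","middleURL":"http://example.com/m1.jpg",'
    '"hoverURL":"http://example.com/h1.jpg","objURL":"http://example.com/full1.jpg",'
    '"note":"placeholder"},\n'
    '{"thumbURL":"http://example.com/t2.jpg","middleURL":"http://example.com/m2.jpg",'
    '"hoverURL":"http://example.com/h2.jpg","objURL":"http://example.com/full2.jpg",'
    '"note":"placeholder"}'
)

# Non-greedy: (.*?) stops at the first '",' after each "objURL":"
lazy = re.findall('"objURL":"(.*?)",', SAMPLE_HTML, re.S)

# Greedy: (.*) runs on to the last '",' in the text and swallows
# everything in between, so the two records collapse into one match
greedy = re.findall('"objURL":"(.*)",', SAMPLE_HTML, re.S)

print(lazy)
print(len(greedy))
```

The non-greedy version returns one clean address per record, which is also why `len(pic_url)` can be compared with the number of results the page reports.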

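Okay, time to write the crawler code for real. We only use two packages here: re for regular expressions, and requests. Both were introduced earlier; if you haven't read that part, go back and read it!

    # -*- coding: utf-8 -*-
    import re
    import requests

Then we paste in the URL from before, pass it to requests, and write out the regular expression.

    url = 'http://image.baidu.com/search/flip?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fm=result&fr=&sf=1&fmq=1460997499750_R&pv=&ic=0&nc=1&z=&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&ie=utf-8&word=%E5%B0%8F%E9%BB%84%E4%BA%BA'
    html = requests.get(url).text
    pic_url = re.findall('"objURL":"(.*?)",', html, re.S)

In theory there are many images, so we need a loop. We print each result so we can see it, then use requests to fetch the address. Since some image addresses may fail to open, we add a 10-second timeout.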

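    pic_url = re.findall('"objURL":"(.*?)",', html, re.S)
    i = 0
    for each in pic_url:
        print(each)
        try:
            pic = requests.get(each, timeout=10)
        except requests.exceptions.RequestException:
            # Covers timeouts as well as connection errors
            print('Error: this image could not be downloaded')
            continue

Next we save the image. We create a pictures directory in the current directory beforehand and put all the images in it, naming them with numbers.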

        # Still inside the for loop; '/' also works as a separator on Windows
        string = 'pictures/' + str(i) + '.jpg'
        with open(string, 'wb') as fp:
            fp.write(pic.content)
        i += 1

The complete code looks like this:

    # -*- coding: utf-8 -*-
    import re
    import requests

    url = 'http://image.baidu.com/search/flip?tn=baiduimage&ipn=r&ct=201326592&cl=2&lm=-1&st=-1&fm=result&fr=&sf=1&fmq=1460997499750_R&pv=&ic=0&nc=1&z=&se=1&showtab=0&fb=0&width=&height=&face=0&istype=2&ie=utf-8&word=%E5%B0%8F%E9%BB%84%E4%BA%BA'
    html = requests.get(url).text
    # Collect every objURL value found in the page source
    pic_url = re.findall('"objURL":"(.*?)",', html, re.S)
    i = 0
    for each in pic_url:
        print(each)
        try:
            pic = requests.get(each, timeout=10)
        except requests.exceptions.RequestException:
            # Covers timeouts as well as connection errors
            print('Error: this image could not be downloaded')
            continue
        # The pictures directory must already exist
        string = 'pictures/' + str(i) + '.jpg'
        with open(string, 'wb') as fp:
            fp.write(pic.content)
        i += 1

Let's run it and see the result. (What, you're asking which IDE this is because it looks so cool!? Go install PyCharm right now! For PyCharm setup and usage, see this article!)

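Okay, we downloaded 58 images. Wait, weren't there supposed to be 61?

Look: during the run, some images could not be downloaded.

We also see some images that don't display. Opening their addresses shows that they really are gone.

So Baidu has cached some images on its own servers, which is why you can still see them in the search results even though the original links are dead.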
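The gap between links found and images saved can be made visible by counting the failures instead of only printing them. The following is a minimal offline sketch, not the article's code: `download_all` and `fake_fetch` are hypothetical names, the `example.com` addresses are placeholders, and the real HTTP call is replaced by a stand-in so the example runs without a network.

```python
import os
import tempfile


def download_all(urls, fetch, out_dir):
    """Save each URL's bytes as out_dir/<n>.jpg; return (saved, failed_urls).

    `fetch` is any callable that takes a URL and returns bytes, raising
    OSError (or a subclass) when the download fails.
    """
    os.makedirs(out_dir, exist_ok=True)  # create the folder if it is missing
    saved = 0
    failed = []
    for url in urls:
        try:
            data = fetch(url)
        except OSError:
            failed.append(url)  # dead link, timeout, refused connection, ...
            continue
        path = os.path.join(out_dir, str(saved) + '.jpg')
        with open(path, 'wb') as fp:
            fp.write(data)
        saved += 1
    return saved, failed


def fake_fetch(url):
    """Stand-in for a real HTTP call so the sketch runs offline."""
    if 'dead' in url:
        raise ConnectionError('simulated dead link: ' + url)
    return b'placeholder bytes for ' + url.encode('utf-8')


urls = [
    'http://example.com/a.jpg',
    'http://example.com/dead.jpg',
    'http://example.com/b.jpg',
]
out_dir = os.path.join(tempfile.mkdtemp(), 'pictures')
saved, failed = download_all(urls, fake_fetch, out_dir)
print('found', len(urls), 'saved', saved, 'failed', len(failed))
```

With requests installed, `fetch` could be something like `lambda u: requests.get(u, timeout=10).content`. The exceptions requests raises derive from `IOError`, which is the same class as `OSError` in Python 3, so the `except` clause above would catch them too.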

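Okay, the automatic download problem is solved. What about searching for images by keyword? Just change the URL. Here is the code:

    word = input("Input key word: ")  # raw_input() in Python 2
    url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&word=' + word + '&ct=201326592&v=flip'
    result = requests.get(url)

Okay, enjoy your first image-download crawler!! Of course, it isn't limited to Baidu images. Following the same pattern, you should now be able to do a lot: crawl avatars, Taobao product images, or... pictures of pretty girls (covers face). It's all up to your imagination. Of course, this is only a first crawler example. Plain requests can already solve many problems, but it is not very efficient; if you want to crawl large amounts of data efficiently, use Scrapy.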
