We usually measure the throughput of a Web system in QPS (Queries Per Second, the number of requests processed per second). This metric is critical when dealing with high-concurrency scenarios of tens of thousands of requests per second. For example, assume that the average response time for processing a business request is 100 ms, that the system has 20 Apache web servers, and that MaxClients is configured as 500 (the maximum number of Apache connections per server).

Then, the theoretical peak QPS of our Web system is (idealized calculation method):

20*500/0.1 = 100000 (100,000 QPS)

Our system seems very powerful: it can handle 100,000 requests per second, so a flash sale at 50,000 requests per second looks like a "paper tiger". The actual situation is of course not so ideal. In real high-concurrency scenarios the machines are under heavy load, and the average response time increases greatly.

An ordinary P4 server can generally support up to about 100,000 unique IPs per day. If the number of visits exceeds 100,000, a dedicated server is needed. If the hardware is not powerful enough, no amount of software optimization will help. The main factors that affect server speed are the network, hard disk read/write speed, memory size, and CPU processing speed.

As far as the web server is concerned, the more connection processes Apache opens, the more context switches the CPU has to handle. This increases CPU consumption and directly raises the average response time. The MaxClients value mentioned above must therefore be chosen with hardware factors such as CPU and memory in mind; more is definitely not better. You can test with Apache's own ab (ApacheBench) tool to find a suitable value. For storage that operates at the memory level, we choose Redis, because under high concurrency the storage response time is crucial. Network bandwidth is also a factor, but these request packets are generally small and rarely become the bottleneck. Load balancing rarely becomes a system bottleneck either, so we will not discuss it here.

Now the question arises. Assume that, under a high-concurrency load of 50,000 requests per second, the average response time of our system rises from 100 ms to 250 ms (in practice it can be even more):

20 *500/0.25 = 40000 (40,000 QPS)

So, our system is left with 40,000 QPS. Facing 50,000 requests per second, there is a difference of 10,000.
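The two calculations above can be checked with a few lines of PHP. This is only a sketch of the idealized formula used in this article; the function name theoreticalPeakQps is made up for illustration, and response times are passed in milliseconds so that the arithmetic stays in integers.

```php
<?php
// Idealized peak QPS: every connection slot finishes one request per
// average response time. Real systems will fall short of this figure.
function theoreticalPeakQps(int $servers, int $maxClients, int $avgResponseMs): int
{
    // Total connection slots across the cluster.
    $slots = $servers * $maxClients;
    // Each slot completes 1000 / $avgResponseMs requests per second.
    return intdiv($slots * 1000, $avgResponseMs);
}

$idle      = theoreticalPeakQps(20, 500, 100); // 100 ms per request
$loaded    = theoreticalPeakQps(20, 500, 250); // 250 ms per request under load
$shortfall = 50000 - $loaded;                  // demand minus capacity

echo "idle: $idle, loaded: $loaded, shortfall: $shortfall\n";
```

The shortfall is the number of requests per second that find no free connection process, which is the gap the following paragraphs deal with.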

Consider a highway junction: 5 cars arrive and 5 cars pass each second, so the junction operates normally. Suddenly only 4 cars can pass per second while the arriving traffic stays the same. The result is inevitably a traffic jam. (It is as if 5 lanes suddenly became 4.)

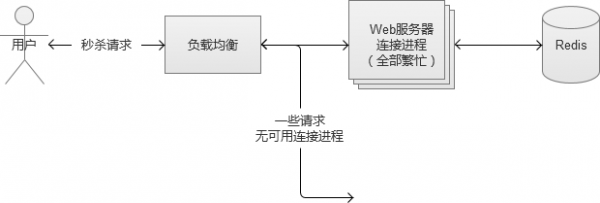

Similarly, in a given second, all 20*500 connection processes are working at full capacity, yet 10,000 new requests still arrive. No connection process is available for them, so the system can be expected to fall into an abnormal state.

In fact, similar situations also occur in ordinary business scenarios without high concurrency. When one business interface has a problem and responds extremely slowly, the response time of the whole Web request becomes very long. This gradually fills up the available connections on the web server, and no connection process is left for other, normal business requests.

An even more frightening problem is user behavior: the less available the system is, the more frequently users click. This vicious cycle eventually leads to an "avalanche" (one web machine goes down, its traffic is spread over the machines that are still working, those machines go down in turn, and the cycle repeats), bringing down the entire Web system.

3. Restart and overload protection

If an "avalanche" occurs in the system, restarting the service rashly will not solve the problem. The most common result is that the service goes down again immediately after starting. In this situation it is best to reject traffic at the ingress layer first and then restart. If services such as Redis or memcached are also down, they need to be "warmed up" on restart, which may take a long time.

In flash sale and rush-buying scenarios, traffic often exceeds what the system was prepared for. Overload protection is therefore necessary: rejecting requests once the system is detected to be fully loaded is itself a protective measure. Filtering on the front end is the simplest approach, but users tend to criticize it. It is more appropriate to place overload protection at the CGI entry layer, so that direct requests from clients are answered quickly with a rejection.
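As a rough sketch of entry-layer overload protection, the class below admits a request only while the number of in-flight requests is under a limit and rejects the rest immediately. The class name OverloadGuard and its methods are hypothetical, and the counter is kept inside the object purely for illustration; separate PHP worker processes would need a shared counter (an assumption, for example in Redis) for this to work in production.

```php
<?php
// Hypothetical entry-layer guard: admits a request only while the number of
// in-flight requests is below a limit. The counter lives in this object for
// illustration; real PHP workers would need shared storage for it.
final class OverloadGuard
{
    private int $inFlight = 0;
    private int $maxInFlight;

    public function __construct(int $maxInFlight)
    {
        $this->maxInFlight = $maxInFlight;
    }

    // Returns true if the request was admitted, false if it should be rejected.
    public function tryEnter(): bool
    {
        if ($this->inFlight >= $this->maxInFlight) {
            return false; // system is full: fail fast instead of waiting
        }
        $this->inFlight++;
        return true;
    }

    // Must be called when an admitted request finishes.
    public function leave(): void
    {
        if ($this->inFlight > 0) {
            $this->inFlight--;
        }
    }
}

$guard = new OverloadGuard(2);
$results = [];
foreach (['a', 'b', 'c'] as $id) {
    // The third request arrives while two are still in flight.
    $results[$id] = $guard->tryEnter() ? 'accepted' : '503 busy';
}
$guard->leave(); // one request completes, freeing a slot
$results['d'] = $guard->tryEnter() ? 'accepted' : '503 busy';
print_r($results);
```

Rejecting early keeps the rejected request cheap: it never occupies a connection process for the full response time.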

Data security under high concurrency

We know that when multiple threads write to the same file, thread-safety problems arise. (Code is thread-safe if, when multiple threads run the same piece of code at the same time, each run produces the same result as a single-threaded run and that result is as expected.) With a MySQL database, you can use its own locking mechanism to solve the problem; however, MySQL is not recommended in large-scale concurrency scenarios. Flash sale and rush-buying scenarios have another problem: overselling. If this is not controlled carefully, more goods are sold than exist. We have all heard of e-commerce rush-buying events where, after the buyer's purchase succeeds, the merchant refuses to recognize the order as valid and will not ship the goods. The cause is not necessarily a dishonest merchant; it may be overselling caused at the technical level of the system.

1. Causes of overselling

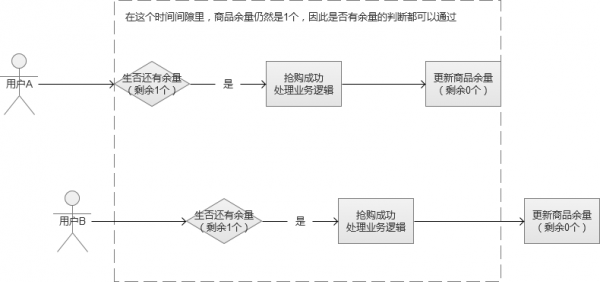

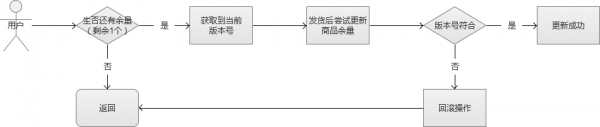

Assume that in a certain rush-buying scenario we have only 100 products in total. At the last moment, 99 have been sold and only one is left. At this point the system receives several concurrent requests. They all read the same stock value (99 sold, 1 remaining), they all pass the stock check, and the result is overselling. (This is the same scenario mentioned earlier in the article.)

In the picture above, concurrent user B also "successfully buys", so one more person obtains the product than there are products. This happens very easily under high concurrency.
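The race described above can be replayed deterministically in a few lines. This is only an illustration: the variable $stock stands in for the database row, and the two "requests" are interleaved by hand rather than run concurrently.

```php
<?php
// Deterministic replay of the race: both requests read the stock before
// either one writes it back. $stock stands in for the database row.
$stock  = 1;   // one item left
$orders = [];

// Step 1: requests A and B both read the current stock.
$seenByA = $stock;
$seenByB = $stock;

// Step 2: each request checks the value it read, then writes its own result.
if ($seenByA > 0) {
    $stock = $seenByA - 1;
    $orders[] = 'A';
}
if ($seenByB > 0) {
    $stock = $seenByB - 1; // overwrites A's update with the same value
    $orders[] = 'B';
}

echo "orders: " . count($orders) . ", stock: $stock\n";
```

Two orders are created for a single item, yet the stored stock still looks consistent, which is why the problem is easy to miss.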

Optimization plan 1: Declare the inventory column number as unsigned. When the inventory is 0, the column cannot become negative, so the update fails and false is returned

<?php
// Optimization plan 1: declare the inventory column `number` as unsigned; when the stock is 0
// the column cannot go negative, so the UPDATE fails and false is returned
include('./mysql.php');

$username = 'wang'.rand(0,1000);

// Sample request values, assumed for illustration (they are not defined in this listing)
$user_id = 1;
$goods_id = 1;
$sku_id = 11;
$price = 10;
$number = 1;

// Generate a unique order number
function build_order_no(){
    return date('ymd').substr(implode('', array_map('ord', str_split(substr(uniqid(), 7, 13), 1))), 0, 8);
}

// Write a log entry
function insertLog($event,$type,$username){
    global $conn;
    $sql="insert into ih_log(event,type,username)
    values('$event','$type','$username')";
    return mysqli_query($conn,$sql);
}

// Insert an order row
function insertOrder($order_sn,$user_id,$goods_id,$sku_id,$price,$username,$number)
{
    global $conn;
    $sql="insert into ih_order(order_sn,user_id,goods_id,sku_id,price,username,number)
    values('$order_sn','$user_id','$goods_id','$sku_id','$price','$username','$number')";
    return mysqli_query($conn,$sql);
}

// Simulate placing an order
// Check whether the stock is greater than 0
$sql="select number from ih_store where goods_id='$goods_id' and sku_id='$sku_id'";
$rs=mysqli_query($conn,$sql);
$row = $rs->fetch_assoc();
if($row['number']>0){ // under high concurrency this check alone still allows overselling
    if($row['number']<$number){
        return insertLog('Insufficient stock',3,$username);
    }
    $order_sn=build_order_no();
    // Decrease the stock
    $sql="update ih_store set number=number-{$number} where sku_id='$sku_id' and number>0";
    $store_rs=mysqli_query($conn,$sql);
    // The query must succeed and actually change a row: an UPDATE that matches no row still returns true
    if($store_rs && mysqli_affected_rows($conn)>0){
        // Create the order
        insertOrder($order_sn,$user_id,$goods_id,$sku_id,$price,$username,$number);
        insertLog('Stock decreased successfully',1,$username);
    }else{
        insertLog('Failed to decrease stock',2,$username);
    }
}else{
    insertLog('Insufficient stock',3,$username);
}
?>

2. Pessimistic lock idea

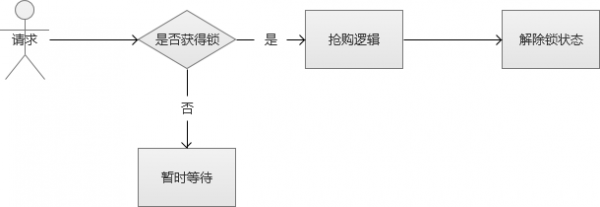

There are many ideas for solving thread safety, and we can start the discussion from the direction of "pessimistic locking".

Pessimistic locking means that, while data is being modified, a lock is held to exclude modifications from other requests. A request that encounters the lock must wait.

Although this approach does solve the thread-safety problem, do not forget that our scenario is "high concurrency". There will be many such modification requests, and each one has to wait for the lock. Some threads may never get a chance to grab the lock, and those requests die waiting. Because there are so many of these requests, the average response time of the system rises sharply, the available connections are exhausted, and the system falls into an abnormal state.

Optimization plan 2: Use MySQL transactions to lock the operated rows

<?php

//优化方案2:使用MySQL的事务,锁住操作的行

include('./mysql.php');

//生成唯一订单号

function build_order_no(){

return date('ymd').substr(implode(NULL, array_map('ord', str_split(substr(uniqid(), 7, 13), 1))), 0, 8);

}

//记录日志

function insertLog($event,$type=0){

global $conn;

$sql="insert into ih_log(event,type)

values('$event','$type')";

mysqli_query($conn,$sql);

}

//模拟下单操作

//库存是否大于0

mysqli_query($conn,"BEGIN"); //开始事务

$sql="select number from ih_store where goods_id='$goods_id' and sku_id='$sku_id' FOR UPDATE";//此时这条记录被锁住,其它事务必须等待此次事务提交后才能执行

$rs=mysqli_query($conn,$sql);

$row=$rs->fetch_assoc();

if($row['number']>0){

//生成订单

$order_sn=build_order_no();

$sql="insert into ih_order(order_sn,user_id,goods_id,sku_id,price)

values('$order_sn','$user_id','$goods_id','$sku_id','$price')";

$order_rs=mysqli_query($conn,$sql);

//库存减少

$sql="update ih_store set number=number-{$number} where sku_id='$sku_id'";

$store_rs=mysqli_query($conn,$sql);

if($store_rs){

echo '库存减少成功';

insertLog('库存减少成功');

mysqli_query($conn,"COMMIT");//事务提交即解锁

}else{

echo '库存减少失败';

insertLog('库存减少失败');

}

}else{

echo '库存不够';

insertLog('库存不够');

mysqli_query($conn,"ROLLBACK");

}

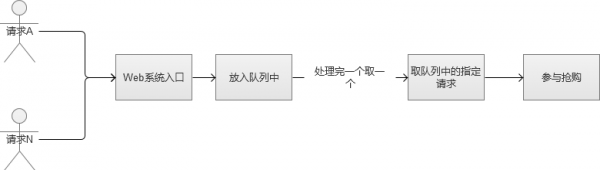

?>3. FIFO queue idea

Now let us modify the scenario slightly: we put requests directly into a queue and process them FIFO (First In, First Out). This way no request is left unable to ever obtain the lock. Does this look like forcibly turning multi-threading into single-threading?

We have now solved the lock problem, and all requests are processed in a "first in, first out" queue. But a new problem arises. In a high-concurrency scenario there are so many requests that the queue's memory may be "blown up" in an instant, and the system falls into an abnormal state. Designing a huge in-memory queue is one option, but the rate at which the system processes queued requests cannot keep up with the rate at which requests flood in. The number of requests in the queue keeps growing, the average response time of the Web system still degrades significantly, and the system still falls into an abnormal state.
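One common way to keep such a queue from exhausting memory is to give it a fixed capacity and reject requests once it is full. The sketch below shows the idea with PHP's built-in SplQueue; the class BoundedRequestQueue and its method names are invented for this example, and a real deployment would need a queue shared between processes rather than one held in a single script.

```php
<?php
// Hypothetical bounded FIFO: requests beyond the capacity are rejected at once
// rather than being allowed to pile up in memory.
final class BoundedRequestQueue
{
    private SplQueue $queue;
    private int $capacity;

    public function __construct(int $capacity)
    {
        $this->queue = new SplQueue();
        $this->capacity = $capacity;
    }

    // Returns false when the queue is full so the caller can fail fast.
    public function offer(string $requestId): bool
    {
        if (count($this->queue) >= $this->capacity) {
            return false;
        }
        $this->queue->enqueue($requestId);
        return true;
    }

    // Returns the oldest waiting request, or null if none is waiting.
    public function poll(): ?string
    {
        return $this->queue->isEmpty() ? null : $this->queue->dequeue();
    }
}

$q = new BoundedRequestQueue(2);
$accepted = [$q->offer('r1'), $q->offer('r2'), $q->offer('r3')];
$first = $q->poll(); // the request that entered first leaves first
var_dump($accepted, $first);
```

The capacity bounds memory use, but it does not make the consumer any faster; requests that do not fit are simply turned away.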

4. The idea of file lock

For applications with few daily IPs or low concurrency, there is generally no need to consider this, and ordinary file operations work without any problem. But under high concurrency, when we read and write files, it is very likely that multiple processes will operate on the same file. If access to the file is not made exclusive at that moment, data can easily be lost.

Optimization plan 4: Use non-blocking file exclusive locks

<?php
// Optimization plan 4: use a non-blocking exclusive file lock
include ('./mysql.php');

// Sample request values, assumed for illustration (they are not defined in this listing)
$user_id = 1;
$goods_id = 1;
$sku_id = 11;
$price = 10;
$number = 1;

// Generate a unique order number
function build_order_no(){
    return date('ymd').substr(implode('', array_map('ord', str_split(substr(uniqid(), 7, 13), 1))), 0, 8);
}

// Write a log entry
function insertLog($event,$type=0){
    global $conn;
    $sql="insert into ih_log(event,type)
    values('$event','$type')";
    mysqli_query($conn,$sql);
}

$fp = fopen("lock.txt", "w+");
if(!flock($fp,LOCK_EX | LOCK_NB)){
    // Another process holds the lock: fail fast instead of waiting
    echo "System busy, please try again later";
    fclose($fp);
    return;
}

// Place the order
$sql="select number from ih_store where goods_id='$goods_id' and sku_id='$sku_id'";
$rs = mysqli_query($conn,$sql);
$row = $rs->fetch_assoc();
if($row['number']>0){ // is the stock greater than 0?
    // Simulate placing an order
    $order_sn=build_order_no();
    $sql="insert into ih_order(order_sn,user_id,goods_id,sku_id,price)
    values('$order_sn','$user_id','$goods_id','$sku_id','$price')";
    $order_rs = mysqli_query($conn,$sql);
    // Decrease the stock
    $sql="update ih_store set number=number-{$number} where sku_id='$sku_id'";
    $store_rs = mysqli_query($conn,$sql);
    if($store_rs){
        echo 'Stock decreased successfully';
        insertLog('Stock decreased successfully');
    }else{
        echo 'Failed to decrease stock';
        insertLog('Failed to decrease stock');
    }
}else{
    echo 'Insufficient stock';
    insertLog('Insufficient stock');
}
flock($fp,LOCK_UN); // release the lock on every path
fclose($fp);
?>

5. Optimistic locking idea

At this point we can discuss "optimistic locking". Compared with pessimistic locking, optimistic locking uses a more relaxed mechanism, usually based on versioned updates. Every request is allowed to attempt the modification, but each first obtains the version number of the data. Only a request whose version number still matches can update successfully; the others are returned as failed. This way we do not need to deal with queues, but the CPU's computational overhead increases. Overall, however, this is a better solution.

Many kinds of software and services support optimistic locking; watch in Redis is one of them. With this implementation, we ensure data safety.
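The version-number idea can be sketched without any external service. In the example below an array stands in for a stored row, and the function tryDecrement is a made-up name for the check-and-write step. In a real database this step would have to be a single atomic statement (an assumption about how it would be deployed, for example an UPDATE that includes the version in its WHERE clause).

```php
<?php
// In-memory stand-in for a row that carries a version number.
$row = ['stock' => 1, 'version' => 7];

// Applies the decrement only if the caller's version still matches the row.
function tryDecrement(array &$row, int $expectedVersion): bool
{
    if ($row['version'] !== $expectedVersion || $row['stock'] <= 0) {
        return false;  // someone else updated first, or sold out
    }
    $row['stock']--;
    $row['version']++; // every successful write bumps the version
    return true;
}

// Requests A and B both read version 7 before either writes.
$versionSeenByA = $row['version'];
$versionSeenByB = $row['version'];

$aWon = tryDecrement($row, $versionSeenByA); // succeeds, version becomes 8
$bWon = tryDecrement($row, $versionSeenByB); // fails: version 7 is stale

var_dump($aWon, $bWon, $row);
```

Request B is told it failed instead of waiting for a lock, so nothing queues up; the cost is that losing requests have done some work for nothing.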

Optimization plan 5: watch in Redis

<?php
$redis = new Redis();
$result = $redis->connect('127.0.0.1', 6379);

$rob_total = 100; // number of items available in the flash sale

// WATCH must come before the read, so that a change made by another client
// after we read the counter causes EXEC to abort
$redis->watch("mywatchkey");
$mywatchkey = (int)$redis->get("mywatchkey"); // number of items already sold
echo $mywatchkey;

if($mywatchkey<$rob_total){
    $redis->multi(); // start a new transaction on the current connection
    // Record the purchase
    $redis->set("mywatchkey",$mywatchkey+1);
    $rob_result = $redis->exec(); // false if the watched key was changed in the meantime
    if($rob_result){
        $redis->hSet("watchkeylist","user_".mt_rand(1, 9999),$mywatchkey);
        $mywatchlist = $redis->hGetAll("watchkeylist");
        echo "Purchase successful!<br/>";
        echo "Remaining: ".($rob_total-$mywatchkey-1)."<br/>";
        echo "User list:<pre>";
        var_dump($mywatchlist);
    }else{
        $redis->hSet("watchkeylist","user_".mt_rand(1, 9999),'meiqiangdao');
        echo "Bad luck, please try again!";exit;
    }
}else{
    $redis->unwatch(); // sold out: drop the watch without running a transaction
}
?>

PHP solves website big data, large traffic and high concurrency

The first thing to talk about is the database. It must have a good structure: try not to use * in queries, avoid correlated subqueries, add indexes for frequent queries, and use sorting to replace non-sequential access. If conditions permit, the MySQL server is best installed on a Linux operating system. As for Apache and Nginx, Nginx is recommended in high-concurrency situations, where it is a good alternative to the Apache server. Nginx consumes less memory; official tests show support for 50,000 concurrent connections, and in real production environments the number of concurrent connections reaches 20,000 to 30,000. Disable unnecessary PHP modules as far as possible and use memcached. Memcached is a high-performance distributed memory object caching system that serves data directly from memory without going to the database, which greatly improves speed. Enable GZIP compression in IIS or Apache to compress website content and greatly reduce website traffic.

Second, prohibit external hotlinking. Hotlinking of pictures or files from external websites often brings a lot of load, so external hotlinking of your own pictures or files should be strictly restricted. Fortunately, hotlinking can currently be controlled simply by checking the Referer header: Apache can block hotlinks through its own configuration, and IIS has third-party ISAPI filters that achieve the same thing. Of course, a forged Referer can also be produced in code to hotlink anyway, but currently few people deliberately forge the Referer to hotlink. You can ignore this case or handle it by non-technical means, such as adding a watermark to pictures.
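Where the web server cannot be configured for this, the same Referer check can be approximated in application code. The helper below is a hypothetical sketch: the function name isAllowedReferer, the host list, and the decision to allow requests with no Referer are all assumptions made for illustration.

```php
<?php
// Hypothetical helper: decides whether a request for a static file should be
// served, based on the host in its Referer header.
function isAllowedReferer(?string $referer, array $allowedHosts): bool
{
    // Empty Referer: direct visits and some privacy tools send none.
    // Allowing it is a policy choice assumed here.
    if ($referer === null || $referer === '') {
        return true;
    }
    $host = parse_url($referer, PHP_URL_HOST);
    if (!is_string($host)) {
        return false; // malformed Referer or no host component
    }
    return in_array(strtolower($host), $allowedHosts, true);
}

$allowed = ['example.com', 'www.example.com']; // placeholder hosts

$ownPage  = isAllowedReferer('https://www.example.com/gallery', $allowed);
$external = isAllowedReferer('http://other.test/post/1', $allowed);
$direct   = isAllowedReferer(null, $allowed);
var_dump($ownPage, $external, $direct);
```

In a request handler the first argument would typically come from $_SERVER['HTTP_REFERER'], which is absent when the client sends no Referer. As noted above, the header can be forged, so this only deters casual hotlinking.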

Third, control the download of large files.

Downloading large files consumes a lot of traffic, and on non-SCSI hard drives, serving a large number of file downloads also consumes CPU, which reduces the website's responsiveness. Therefore, try not to offer downloads of files larger than 2 MB. If such downloads are required, it is recommended to place the large files on another server.

Fourth, use different hosts to divert the main traffic

Place files on different hosts and provide different mirrors for users to download. For example, if you feel that RSS files take up a lot of traffic, use a service such as FeedBurner or FeedSky to serve the RSS output from other hosts. Most of the traffic from other people's access is then concentrated on FeedBurner's hosts, and RSS no longer occupies too many of your resources.

Sixth, use traffic analysis and statistics software.

Installing traffic analysis and statistics software on the website shows immediately where traffic is being consumed and which pages need to be optimized. Solving traffic problems therefore requires accurate statistical analysis, for example with Google Analytics.

Deployment: server separation, database clustering and database/table hashing, mirroring, load balancing

Load balancing classification: 1) DNS round robin 2) Proxy server load balancing 3) Address translation gateway load balancing 4) NAT load balancing 5) Reverse proxy load balancing 6) Hybrid load balancing

Used for unified reading and writing of physical data files;

Used as the storage warehouse for large attachments;

Ensures the IO efficiency and data security of the overall system through the balance and redundancy of its own physical disks;

Features of the solution:

Reasonable distribution of Web pressure through front-end load balancing;

Reasonable distribution of light and heavy data flows through the separation of file/video servers and regular Web servers;

Reasonably allocate database IO pressure through the database server group;

Each Web server usually connects to only one database server, and through DEC's heartbeat detection it can switch automatically to a redundant database server in a very short time;

The introduction of disk arrays not only greatly improves system IO efficiency, but also greatly enhances data security.

Web server:

A large part of the resource usage of the Web server comes from processing Web requests. Under normal circumstances, this is the pressure generated by Apache. With a high number of concurrent connections, Nginx is a good alternative to the Apache server. Nginx ("engine x") is a high-performance HTTP and reverse proxy server developed in Russia. In China, many websites and channels such as Sina, Sohu Pass, NetEase News, NetEase Blog, Kingsoft Xiaoyao.com, Kingsoft iPowerWord, Xiaonei.com, YUPOO Photo Album, Douban, Xunlei Kankan, etc. use Nginx servers.

Advantages of Nginx:

High concurrent connections: The official test can support 50,000 concurrent connections, and in the actual production environment, the number of concurrent connections reaches 20,000 to 30,000.

Low memory consumption: Under 30,000 concurrent connections, the 10 Nginx processes started consume only 150M of memory (15M*10=150M).

Built-in health check function: If a web server in the backend of Nginx Proxy goes down, front-end access will not be affected.

Strategy: Compared with the old Apache, we choose Lighttpd and Nginx, web servers with smaller resource usage and higher load capacity.

Mysql:

MySQL itself has a strong load capacity. MySQL optimization is a very complicated task, because it ultimately requires a good understanding of system optimization. Database work involves a large number of short queries, reads and writes. Apart from software techniques such as indexing and improving query efficiency, which need attention during program development, the main hardware influences on MySQL execution efficiency are disk seeks, disk IO levels, CPU cycles, and memory bandwidth.

Optimize MySQL according to the hardware and software conditions of the server. The core of MySQL optimization lies in the allocation of system resources, which does not mean allocating more resources to MySQL without limit. Among the settings in the MySQL configuration file, these are some of the most noteworthy parameters:

Change the index buffer length (key_buffer)

Change the sequential-read buffer size (read_buffer_size)

Set the maximum number of open tables (table_cache)

Set a time limit for slow long queries (long_query_time)

If conditions permit, it is generally best to install the MySQL server on a Linux operating system rather than on FreeBSD.

Strategy: MySQL optimization requires formulating different optimization plans based on the database reading and writing characteristics of the business system and the server hardware configuration, and the master-slave structure of MySQL can be deployed as needed.

PHP:

1. Load as few modules as possible;

2. On the Windows platform, try to use IIS or Nginx instead of the Apache we usually use;

3. Install an accelerator (these all improve the execution speed of PHP code by caching the precompiled results of PHP code and database results)

eAccelerator: eAccelerator is a free and open source PHP accelerator that provides optimization and dynamic content caching, improving the caching performance of PHP scripts so that the server overhead of PHP scripts in the compiled state is almost completely eliminated.

APC: Alternative PHP Cache (APC) is a free and open optimized code cache for PHP. It provides a free, open and robust framework for caching and optimizing PHP intermediate code.

memcache: memcache is a high-performance, distributed memory object caching system developed by Danga Interactive, which is used to reduce database load and improve access speed in dynamic applications. The main mechanism is to maintain a unified huge hash table in the memory. Memcache can be used to store data in various formats, including images, videos, files and database retrieval results.

XCache: a cache developed by Chinese developers.

Strategy: Install accelerator for PHP.
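The way memcache reduces database load, as described above, is often called the cache-aside pattern: look in the cache first and query the database only on a miss. The sketch below uses a plain PHP array in place of memcached and a closure in place of a database query, so that it runs without any server; ArrayCache and cachedFetch are names invented for this example.

```php
<?php
// Cache-aside sketch. The array stands in for memcached and the closure for a
// database query; both are placeholders for illustration.
final class ArrayCache
{
    private array $items = [];

    public function get(string $key)
    {
        return $this->items[$key] ?? null;
    }

    public function set(string $key, $value): void
    {
        $this->items[$key] = $value;
    }
}

function cachedFetch(ArrayCache $cache, string $key, callable $loadFromDb)
{
    $value = $cache->get($key);
    if ($value === null) { // miss: load once, then remember the result
        $value = $loadFromDb($key);
        $cache->set($key, $value);
    }
    return $value;
}

$dbCalls = 0;
$loader = function (string $key) use (&$dbCalls) {
    $dbCalls++; // count how often the "database" is actually hit
    return "row for $key";
};

$cache  = new ArrayCache();
$first  = cachedFetch($cache, 'goods:1', $loader);
$second = cachedFetch($cache, 'goods:1', $loader);
echo "db calls: $dbCalls\n";
```

The second lookup is served from memory, so the loader runs only once. A real cache would also need an expiry or invalidation policy, which this sketch leaves out.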

Proxy Server (Cache Server):

Squid Cache (Squid for short) is a popular free-software (GNU General Public License) proxy server and web caching server. Squid has a wide range of uses: acting as a front-end cache for web servers to speed them up by caching relevant requests, caching World Wide Web, Domain Name System and other network lookups so that a group of people can share network resources, aiding network security by filtering traffic, and giving a LAN network access through a proxy. Squid is primarily designed to run on Unix-like systems.

Strategy: Installing Squid reverse proxy server can greatly improve server efficiency.

Stress testing: Stress testing is a basic quality assurance behavior that is part of every important software testing effort. The basic idea of stress testing is simple: instead of running manual or automated tests under normal conditions, you run tests under conditions where the number of computers is small or system resources are scarce. Resources that are typically stress tested include internal memory, CPU availability, disk space, and network bandwidth. Concurrency is generally used for stress testing.

Stress testing tools: webbench, ApacheBench, etc.

Vulnerability testing: Vulnerabilities in our system mainly include SQL injection vulnerabilities, XSS (cross-site scripting) attacks, etc. Security also covers system software, such as operating system vulnerabilities and vulnerabilities in MySQL, Apache, etc., which can generally be solved through upgrades.

Vulnerability testing tool: Acunetix Web Vulnerability Scanner
