Value types: the character type

Besides numbers, most of the information computers process is text. Characters include digits, English letters, punctuation and symbols, and so on. The character type provided by C# uses the internationally recognized Unicode character set. A C# char is 16 bits long, which is enough to represent characters from many of the world's languages. Assigning a value to a character variable in C# looks much like it does in C/C++:

    char ch = 'H';

Sometimes we also use the char type when recording information about a person. For example, in Diary 03 I declared char sex; to hold my gender. So does a Chinese character also count as one character? Yes. A common Chinese character occupies 2 bytes, and 1 byte is 8 bits, so it is exactly 16 bits and fits in a single char. That is why char sex = '男'; compiles. A multi-letter literal such as 'male' does not compile, because a char holds exactly one character.
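To check the "one Chinese character is one char" claim, here is a minimal sketch. The class name CharSizeDemo and the helper CodeUnits are made up for illustration. It also shows one caveat I am adding here: rare characters outside the 16-bit range (U+20000 is used as an example) need two char values.

```csharp
using System;

static class CharSizeDemo
{
    // Hypothetical helper: number of 16-bit char values used to store the text.
    public static int CodeUnits(string text) => text.Length;

    static void Main()
    {
        char sex = '男';                              // a common Chinese character fits in one char
        Console.WriteLine(sizeof(char));              // 2 (bytes per char)
        Console.WriteLine((int)sex);                  // the character's numeric code
        Console.WriteLine(CodeUnits("男"));           // 1
        // U+20000 is a rare CJK character outside the 16-bit range,
        // so C# stores it as two char values (a surrogate pair).
        Console.WriteLine(CodeUnits("\U00020000"));   // 2
    }
}
```

So the 16-bit rule works for everyday Chinese text, but a string's Length counts char values, not always whole characters.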

We can also assign a value to a character variable directly with a hexadecimal escape or a Unicode escape, for example:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;

    namespace Example
    {
        class Program
        {
            static void Main(string[] args)
            {
                char c = '\x0032'; // \x is the hexadecimal escape; the 32 here is hexadecimal 32
                char d = '\u0032'; // after \u, 0032 is a Unicode code
                Console.WriteLine("c = {0}\td = {1}", c, d);
            }
        }
    }

Run it:

So the assignments above are equivalent to char c = '2'; char d = '2';

Curious to explore further, I took another look at the code above. \u0032 denotes the character whose Unicode code is 0032, and \x0032 denotes the value 0032 in hexadecimal. Comparing the two, I concluded that a Unicode code is simply written as a hexadecimal value. To check, I changed the code as follows:

    char c = '\x0033';
    char d = '\u0034';

I guessed the result would be c = 3 and d = 4, and the program printed exactly that, so the conjecture holds.
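The same comparison can be made by the program itself instead of by eye. This is only a sketch: EscapeDemo and CodeOf are names I made up. It also shows a difference between the two escapes that I am adding as a caveat: \x accepts one to four hexadecimal digits, while \u always takes exactly four.

```csharp
using System;

static class EscapeDemo
{
    // Hypothetical helper: the numeric code of a character.
    public static int CodeOf(char ch) => ch;

    static void Main()
    {
        char c = '\x0032';   // hexadecimal escape
        char d = '\u0032';   // Unicode escape, always exactly four hex digits
        char e = '2';        // plain literal

        Console.WriteLine(c == d && d == e);           // True: all three are the same character
        Console.WriteLine(CodeOf(e));                  // 50 (decimal)
        Console.WriteLine(CodeOf(e).ToString("X4"));   // 0032 (hexadecimal, padded to four digits)

        // Inside a string, \x keeps reading hex digits (up to four),
        // so a following letter A-F or digit becomes part of the escape.
        string greedy = "\x32A";    // one character, U+032A
        string exact = "\u0032A";   // '2' followed by 'A'
        Console.WriteLine(greedy.Length);   // 1
        Console.WriteLine(exact.Length);    // 2
    }
}
```

Because of that greedy behavior, \u is the safer choice when the escape is followed by other text.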

Letting my thoughts wander, I remembered the ASCII codes I used when learning C. Could ASCII codes be the same as Unicode codes? I dug out my C textbook and found that the decimal ASCII code of '2' is 50, not 32. Since the conjecture above holds, that 32 is hexadecimal, so what is it in decimal? (This was a test for me. When the teacher taught base conversion, I... well, never mind. You only regret not studying when you need the knowledge!) Worked by hand, hexadecimal 32 is 3 × 16 + 2 = 50. We can also write a C# program to do the conversion; the code is as follows:

    using System;
    using System.Collections.Generic;
    using System.Linq;
    using System.Text;

    namespace Example
    {
        class Program
        {
            static void Main(string[] args)
            {
                Console.Write("Enter a hexadecimal number: ");
                string x = Console.ReadLine();

                // Convert.ToInt32(x, 16) parses x as a hexadecimal number; an int prints in decimal.
                Console.WriteLine("Hexadecimal {0} in decimal is: {1}", x, Convert.ToInt32(x, 16));

                // Convert.ToString(50, 16) converts to hexadecimal.
                // Alternatively: int a = 50; a.ToString("X");
                Console.WriteLine("Decimal 50 in hexadecimal is: {0}", Convert.ToString(50, 16));
            }
        }
    }

The result is as follows:

Sure enough, the ASCII code written in hexadecimal and the Unicode code of the character are equal. After consulting some references, I learned the following:

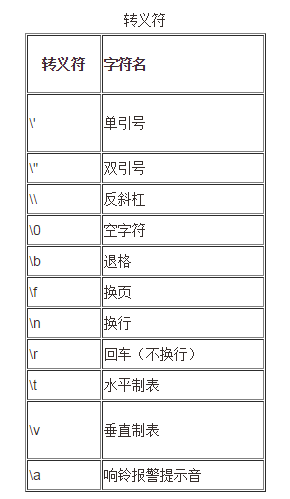

ASCII covers only English letters and some special symbols (tab characters and so on).

Unicode covers not only English letters and special symbols but also Japanese, Korean, Chinese, and more.

Unicode is what is generally used today (which is why, as noted in Diary 04, C# allows variable names in Chinese).
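To confirm the ASCII and Unicode match for more than just the character 2, here is a rough sketch that checks all 128 ASCII codes. The names AsciiUnicodeDemo, AsciiMatchesUnicode and IsAscii are my own, not anything from the earlier diaries.

```csharp
using System;
using System.Text;

static class AsciiUnicodeDemo
{
    // True when every code 0..127 encodes to one ASCII byte equal to the char's code.
    public static bool AsciiMatchesUnicode()
    {
        for (int code = 0; code < 128; code++)
        {
            char ch = (char)code;
            byte[] ascii = Encoding.ASCII.GetBytes(new[] { ch });
            if (ascii.Length != 1 || ascii[0] != code) return false;
        }
        return true;
    }

    // True when the character lies inside the ASCII range.
    public static bool IsAscii(char ch) => ch < 128;

    static void Main()
    {
        Console.WriteLine(AsciiMatchesUnicode());   // True
        Console.WriteLine(IsAscii('2'));            // True
        Console.WriteLine(IsAscii('男'));           // False: Chinese is outside ASCII
    }
}
```

In other words, ASCII behaves like the first 128 entries of Unicode, and everything else (Chinese, Japanese, Korean and so on) comes after it.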