Claude 4 vs GPT-4o vs Gemini 2.5 Pro: Find the Best AI for Coding

In 2025, developers are no longer asking how to use AI tools for coding; they're asking which AI is best for code generation. With top-performing models like Anthropic's Claude 4, OpenAI's GPT-4o, and Google's Gemini 2.5 Pro all available, the competition is tight and the choice is confusing. As the field continues to evolve, it's worth evaluating how these models actually perform when generating code. In this article, we'll compare the programming capabilities and performance of Claude 4 Sonnet, GPT-4o, and Gemini 2.5 Pro to find out which is the best AI coding model.

Table of Contents

- Model Evaluation: Claude 4 vs GPT-4o vs Gemini 2.5 Pro

- Model Overview

- Pricing Comparison

- Benchmark Comparison

- Overall Analysis

- Claude 4 vs GPT-4o vs Gemini 2.5 Pro: Coding Capabilities

- Task 1: Design Playing Cards with HTML, CSS, and JS

- Task 2: Build a Game

- Task 3: Best Time to Buy and Sell Stock

- Final Verdict: Overall Analysis

- Conclusion

Model Evaluation: Claude 4 vs GPT-4o vs Gemini 2.5 Pro

To find the best AI coding model in 2025, we’ll first evaluate Claude 4 Sonnet, GPT-4o, and Gemini 2.5 Pro, based on their architecture, context window, pricing, and benchmark scores.

Model Overview

Each of these models is accessible through cloud services and has multimodal capabilities to varying degrees. In this section, we’ll explore some of the key features of the 3 models and compare what they offer.

| Feature | Claude 4 | GPT-4o | Gemini 2.5 Pro |
|---|---|---|---|
| Open Source | No | No | No |
| Release Date | May 22, 2025 | May 2024 | May 6, 2025 |
| Context Window | 200K | 128K | 1M |
| API Providers | Anthropic API, AWS Bedrock, Google Vertex | OpenAI API, Azure OpenAI | Google Vertex AI, Google AI Studio |
| Input Types Supported | Text, Images | Text, Images, Audio, Video | Text, Images, Audio, Video |

Pricing Comparison

Price is one of the most important factors for teams building apps at scale. Of the models compared here, Claude 4 Opus stands out as the most expensive for both input and output tokens.

| Model | Input Price (per million tokens) | Output Price (per million tokens) |
|---|---|---|
| Claude 4 Opus | $15.00 | $75.00 |
| Claude 4 Sonnet | $3.00 | $15.00 |
| GPT-4o | $5.00 | $20.00 |
| Gemini 2.5 Pro (≤200K) | $1.25 | $10.00 |
| Gemini 2.5 Pro (>200K) | $2.50 | $15.00 |
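To see how these rates translate into a per-request bill, here is a minimal Python sketch that applies the prices from the table above. The model labels and the `estimate_cost` helper are hypothetical names chosen for illustration, not API identifiers. The sketch also assumes that Gemini's 200K threshold is decided by the number of input tokens in the request.

```python
# Prices in USD per million tokens, copied from the pricing table above.
# The dictionary keys are illustrative labels, not official API model IDs.
PRICES = {
    "claude-4-opus": (15.00, 75.00),
    "claude-4-sonnet": (3.00, 15.00),
    "gpt-4o": (5.00, 20.00),
}

# Gemini 2.5 Pro is tiered. Assumption: the tier is picked by input size.
GEMINI_TIERS = {"short": (1.25, 10.00), "long": (2.50, 15.00)}
GEMINI_THRESHOLD = 200_000


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD of one request."""
    if model == "gemini-2.5-pro":
        tier = "short" if input_tokens <= GEMINI_THRESHOLD else "long"
        in_price, out_price = GEMINI_TIERS[tier]
    else:
        in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


if __name__ == "__main__":
    for name in ("claude-4-opus", "claude-4-sonnet", "gpt-4o", "gemini-2.5-pro"):
        print(name, round(estimate_cost(name, 50_000, 5_000), 4))
```

Running the script prints the estimated cost of a request with 50,000 input tokens and 5,000 output tokens for each model, which makes the gap between Opus and the other models easy to compare.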

Benchmark Comparison

Benchmarks illustrate a model's capabilities in areas such as coding and reasoning. The results below reflect each model's performance across several domains, including agentic coding, math, reasoning, and tool use.

| Benchmark | Claude 4 Opus | Claude 4 Sonnet | GPT-4o | Gemini 2.5 Pro |
|---|---|---|---|---|
| HumanEval (Code Gen) | Not Available | Not Available | 74.8% | 75.6% |
| GPQA (Graduate Reasoning) | 83.3% | 83.8% | 83.3% | 83.0% |
| MMLU (World Knowledge) | 88.8% | 86.5% | 88.7% | 88.6% |
| AIME 2025 (Math) | 90.0% | 85.0% | 88.9% | 83.0% |
| SWE-bench (Agentic Coding) | 72.5% | 72.7% | 69.1% | 63.2% |
| TAU-bench (Tool Use) | 81.4% | 80.5% | 70.4% | Not Available |
| Terminal-bench (Coding) | 43.2% | 35.5% | 30.2% | 25.3% |
| MMMU (Visual Reasoning) | 76.5% | 74.4% | 82.9% | 79.6% |

Across these benchmarks, Claude 4 generally excels in coding, GPT-4o in reasoning, and Gemini 2.5 Pro offers strong, balanced performance across different modalities. For more information, please visit here.

Overall Analysis

Here’s what we’ve learned about these advanced closed-source models, based on the above points of comparison:

- We found that Claude 4 excels in coding, math, and tool use, but it is also the most expensive one.

- GPT-4o excels at reasoning and multimodal support, handling different input formats, making it an ideal choice for more advanced and complex assistants.

- Meanwhile, Gemini 2.5 Pro offers a strong and balanced performance with the largest context window and the most cost-effective pricing.

Claude 4 vs GPT-4o vs Gemini 2.5 Pro: Coding Capabilities

Now we will compare the code-writing capabilities of Claude 4, GPT-4o, and Gemini 2.5 Pro. For that, we are going to give the same prompt to all three models and evaluate their responses on the following metrics:

- Efficiency

- Readability

- Comments and Documentation

- Error Handling

Task 1: Design Playing Cards with HTML, CSS, and JS

Prompt: “Create an interactive webpage that displays a collection of WWE Superstar flashcards using HTML, CSS, and JavaScript. Each card should represent a WWE wrestler, and must include a front and back side. On the front, display the wrestler’s name and image. On the back, show additional stats such as their finishing move, brand, and championship titles. The flashcards should have a flip animation when hovered over or clicked.

Additionally, add interactive controls to make the page dynamic: a button that shuffles the cards, and another that shows a random card from the deck. The layout should be visually appealing and responsive for different screen sizes. Bonus points if you include sound effects like entrance music when a card is flipped.

Key Features to Implement:

- Front of card: wrestler’s name and image

- Back of card: stats (e.g., finisher, brand, titles)

- Flip animation using CSS or JS

- “Shuffle” button to randomly reorder cards

- “Show Random Superstar” button

- Responsive design.”

Claude 4’s Response:

GPT-4o’s Response:

Gemini 2.5 Pro’s Response:

Comparative Analysis

In the first task, Claude 4 delivered the most interactive experience with the most dynamic visuals, and it also played a sound effect when a card was clicked. GPT-4o produced a dark-themed layout with smooth transitions and fully functional buttons, but no audio. Gemini 2.5 Pro produced the simplest layout, a basic sequential one with no animation or sound, and its random card feature failed to show the card’s face properly. Overall, Claude takes the lead here, followed by GPT-4o, and then Gemini.

Task 2: Build a Game

Prompt: “Spell Strategy Game is a turn-based battle game built with Pygame, where two mages compete by casting spells from their spellbooks. Each player starts with 100 HP and 100 Mana and takes turns selecting spells that deal damage, heal, or apply special effects like shields and stuns. Spells consume mana and have cooldown periods, requiring players to manage resources and strategize carefully. The game features an engaging UI with health and mana bars, and spell cooldown indicators. Players can face off against another human or an AI opponent, aiming to reduce their rival’s HP to zero through tactical decisions.

Key Features:

- Turn-based gameplay with two mages (PvP or PvAI)

- 100 HP and 100 Mana per player

- Spellbook with diverse spells: damage, healing, shields, stuns, mana recharge

- Mana costs and cooldowns for each spell to encourage strategic play

- Visual UI elements: health/mana bars, cooldown indicators, spell icons

- AI opponent with simple tactical decision-making

- Mouse-driven controls with optional keyboard shortcuts

- Clear in-game messaging showing actions and effects”

Claude 4’s Response:

GPT-4o’s Response:

Gemini 2.5 Pro’s Response:

Comparative Analysis

In the second task, none of the models provided proper graphics: each one displayed a black screen with a minimal interface. However, Claude 4 offered the most functional game and the smoothest controls, with a wide range of attack, defence, and other strategic options. GPT-4o, on the other hand, suffered from performance issues such as lag, and opened in a small window. Gemini 2.5 Pro fell short as well, because its code failed to run and raised errors. Overall, Claude once again takes the lead, followed by GPT-4o, and then Gemini 2.5 Pro.

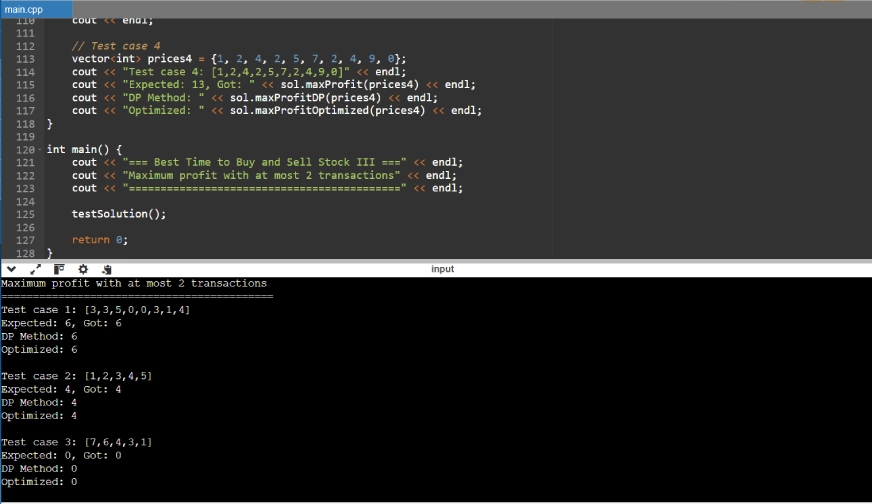

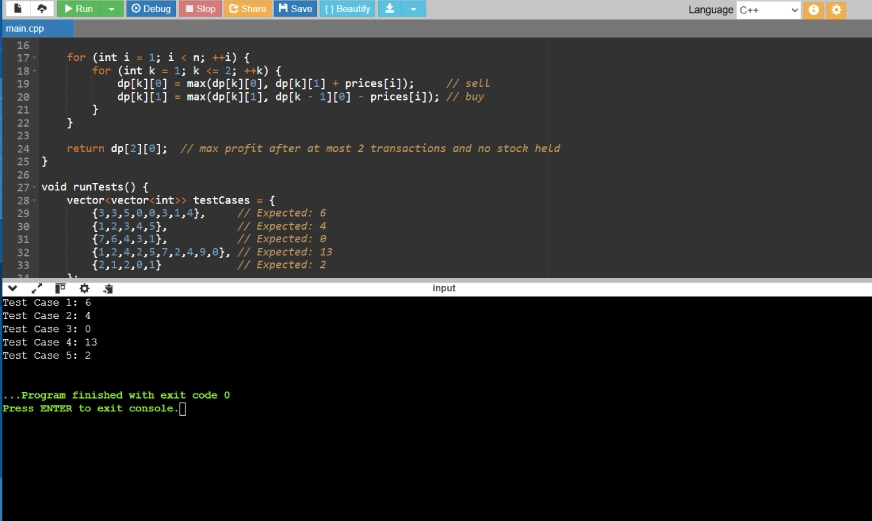

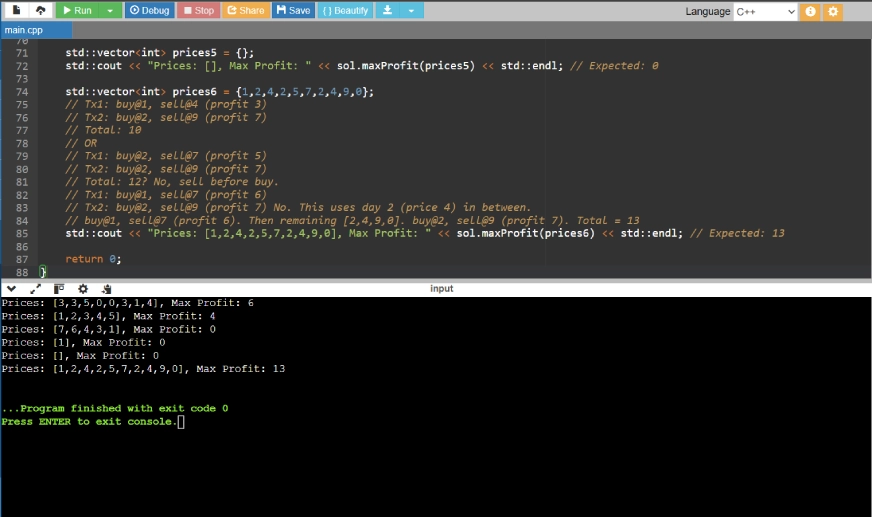
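For reference, the core rule the prompt asks for (spells cost mana and then go on cooldown) can be sketched without Pygame. This is a minimal illustration, not code from any of the three models. The `Spell` and `Mage` classes, their fields, and the "Fireball" values are hypothetical. The sketch also assumes that a cooldown counts down once per `end_turn()` call by the caster.

```python
from dataclasses import dataclass, field


@dataclass
class Spell:
    name: str
    mana_cost: int
    cooldown: int  # number of end_turn() calls before it can be cast again
    damage: int = 0
    heal: int = 0


@dataclass
class Mage:
    name: str
    hp: int = 100
    mana: int = 100
    cooldowns: dict = field(default_factory=dict)  # spell name -> turns left

    def can_cast(self, spell: Spell) -> bool:
        # A spell is castable only with enough mana and no cooldown left.
        return (self.mana >= spell.mana_cost
                and self.cooldowns.get(spell.name, 0) == 0)

    def cast(self, spell: Spell, target: "Mage") -> bool:
        if not self.can_cast(spell):
            return False
        self.mana -= spell.mana_cost
        target.hp = max(0, target.hp - spell.damage)  # HP never drops below 0
        self.hp = min(100, self.hp + spell.heal)      # healing is capped at 100
        self.cooldowns[spell.name] = spell.cooldown
        return True

    def end_turn(self) -> None:
        # Tick every active cooldown down by one, stopping at zero.
        for name in self.cooldowns:
            self.cooldowns[name] = max(0, self.cooldowns[name] - 1)


if __name__ == "__main__":
    fireball = Spell("Fireball", mana_cost=30, cooldown=2, damage=25)
    a, b = Mage("A"), Mage("B")
    print(a.cast(fireball, b), b.hp, a.mana)
```

Keeping the rules in plain classes like this separates game logic from rendering, so a Pygame UI would only need to read `hp`, `mana`, and `cooldowns` to draw the bars and indicators.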

Task 3: Best Time to Buy and Sell Stock

Prompt: “You are given an array prices where prices[i] is the price of a given stock on the ith day.

Find the maximum profit you can achieve. You may complete at most two transactions.

Note: You may not engage in multiple transactions simultaneously (i.e., you must sell the stock before you buy again).

Example:

Input: prices = [3,3,5,0,0,3,1,4]

Output: 6

Explanation: Buy on day 4 (price = 0) and sell on day 6 (price = 3), profit = 3-0 = 3. Then buy on day 7 (price = 1) and sell on day 8 (price = 4), profit = 4-1 = 3.”

Claude 4’s Response:

GPT-4o’s Response:

Gemini 2.5 Pro’s Response:

Comparative Analysis

In the third and final task, the models had to solve the problem using dynamic programming. Among the three, GPT-4o offered the most practical solution: a clean 2D dynamic programming approach with safe initialization, plus test cases. Claude 4 provided a more detailed and educational approach, but a more verbose one. Gemini 2.5 Pro gave a concise method, but initialized its state with INT_MIN, which is risky because arithmetic on that sentinel value can overflow a fixed-width integer. So in this task, GPT-4o takes the lead, followed by Claude 4, and then Gemini 2.5 Pro.
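To illustrate what safe initialization can look like, here is a minimal Python sketch of one common approach to this problem. It is not the output of any of the three models, and it uses a constant-space, four-state variant of the dynamic programming idea rather than a full 2D table. The function name `max_profit_two_transactions` is chosen for illustration. The buy states start from the first day's price instead of a minimum-integer sentinel, so no sentinel arithmetic is needed.

```python
from typing import List


def max_profit_two_transactions(prices: List[int]) -> int:
    """Maximum profit with at most two non-overlapping buy/sell transactions."""
    if not prices:
        return 0

    # Best balance after each step, initialized from day one's price
    # rather than from a minimum-integer sentinel.
    buy1 = buy2 = -prices[0]   # holding stock after the 1st / 2nd buy
    sell1 = sell2 = 0          # holding cash after the 1st / 2nd sell

    for price in prices[1:]:
        buy1 = max(buy1, -price)            # buy the first share today
        sell1 = max(sell1, buy1 + price)    # sell the first share today
        buy2 = max(buy2, sell1 - price)     # reinvest the first profit today
        sell2 = max(sell2, buy2 + price)    # sell the second share today

    return sell2


if __name__ == "__main__":
    print(max_profit_two_transactions([3, 3, 5, 0, 0, 3, 1, 4]))  # prints 6
```

The sketch runs in a single pass with constant extra memory. Because a buy and a sell on the same day cancel out, `sell2` also covers the cases of zero or one transaction.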

Final Verdict: Overall Analysis

Here’s a comparative summary of how well each model has performed in the above tasks.

| Task | Claude 4 | GPT-4o | Gemini 2.5 Pro | Winner |
|---|---|---|---|---|
| Task 1 (Card UI) | Most interactive with animations and sound effects | Smooth dark theme with functional buttons, no audio | Basic sequential layout, card face issue, no animation/sound | Claude 4 |
| Task 2 (Game Control) | Smooth controls, broad strategy options, most functional game | Usable but laggy, small window | Failed to run, interface errors | Claude 4 |
| Task 3 (Dynamic Programming) | Verbose but educational, good for learning | Clean and safe DP solution with test cases, most practical | Concise but unsafe (uses INT_MIN), lacks robustness | GPT-4o |

To check the complete version of all the code files, please visit here.

Conclusion

Across these three diverse tasks, Claude 4 stands out for its interactive UI design and stable logic in modular programming, making it the top performer overall. GPT-4o follows closely with clean, practical code and excels in algorithmic problem solving. Gemini 2.5 Pro, meanwhile, fell behind in UI design and execution stability across all tasks. These observations are based only on the comparison above. Each model has its own strengths, and the right choice depends on the problem you are trying to solve.
