GitHub: https://github.com/chatsapi/ChatsAPI

Library: https://pypi.org/project/chatsapi/

Artificial Intelligence has transformed industries, but deploying it effectively remains a daunting challenge. Complex frameworks, slow response times, and steep learning curves create barriers for businesses and developers alike. Enter ChatsAPI — a groundbreaking, high-performance AI agent framework designed to deliver unmatched speed, flexibility, and simplicity.

In this article, we’ll uncover what makes ChatsAPI unique, why it’s a game-changer, and how it empowers developers to build intelligent systems with unparalleled ease and efficiency.

ChatsAPI is not just another AI framework; it’s a revolution in AI-driven interactions. Here’s why:

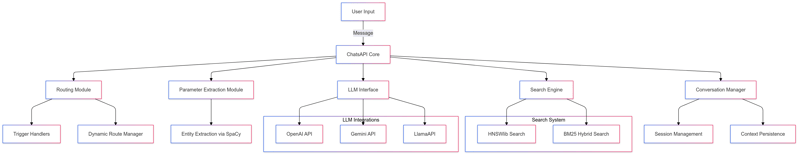

Speed: ChatsAPI is designed to be the world's fastest AI agent framework, answering routing queries in milliseconds without an LLM API call. Its HNSWlib-powered approximate nearest-neighbor search keeps retrieval of routes and knowledge fast, even with large datasets.

Efficiency: The hybrid approach pairs SBERT sentence embeddings, which capture semantic similarity, with BM25 keyword ranking, so matching stays both fast and accurate.
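To make the hybrid idea concrete, here is a minimal pure-Python sketch that blends a BM25 keyword score with a vector-similarity score. It is not ChatsAPI's implementation: the bag-of-words cosine below is only a stand-in for SBERT embeddings, and the function names (`bm25_scores`, `cosine_scores`, `hybrid_rank`) and the weight `alpha` are assumptions made for illustration.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return [t.strip(".,!?") for t in text.lower().split() if t.strip(".,!?")]


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Okapi BM25 score of `query` against each document in `docs`."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in tokenized for term in set(d))
    scores = []
    for d in tokenized:
        tf = Counter(d)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1.0)
            norm = tf[term] + k1 * (1 - b + b * len(d) / avgdl)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def cosine_scores(query, docs):
    """Bag-of-words cosine similarity (stand-in for SBERT embeddings)."""
    q = Counter(tokenize(query))
    q_norm = math.sqrt(sum(x * x for x in q.values()))
    out = []
    for d in docs:
        v = Counter(tokenize(d))
        dot = sum(q[t] * v[t] for t in q)
        norm = q_norm * math.sqrt(sum(x * x for x in v.values()))
        out.append(dot / norm if norm else 0.0)
    return out


def hybrid_rank(query, docs, alpha=0.5):
    """Blend max-normalised BM25 with similarity; best match first."""
    lexical = bm25_scores(query, docs)
    top = max(lexical) or 1.0  # avoid dividing by zero when nothing matches
    semantic = cosine_scores(query, docs)
    blended = [alpha * (lex / top) + (1 - alpha) * sem
               for lex, sem in zip(lexical, semantic)]
    return sorted(range(len(docs)), key=lambda i: blended[i], reverse=True)


routes = [
    "Want to cancel a credit card.",
    "Need help with account settings.",
]
best = hybrid_rank("please cancel my credit card", routes)[0]
print(routes[best])
```

In a real system the cosine term would come from dense sentence embeddings, so paraphrases with no shared words could still match; the blending step stays the same.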

Seamless Integration with LLMs

ChatsAPI supports Large Language Model (LLM) providers such as OpenAI, Gemini, LlamaAPI, and Ollama. It handles much of the work of integrating LLMs into your applications, allowing you to focus on building better experiences.

Dynamic Route Matching

ChatsAPI uses natural language understanding (NLU) to dynamically match user queries to predefined routes with unparalleled precision.

Register routes effortlessly with decorators like @trigger.

Use parameter extraction with @extract to simplify input handling, no matter how complex your use case.
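As a rough illustration of how decorator-based route registration can work, the sketch below builds a tiny registry and dispatches a message to the closest trigger phrase. `ToyRouter` is hypothetical and is not ChatsAPI's code; it uses simple token overlap (Jaccard similarity) where ChatsAPI uses its own NLU matching.

```python
import asyncio


class ToyRouter:
    """Hypothetical stand-in for a decorator-based route registry."""

    def __init__(self):
        self.routes = []  # list of (trigger phrase, handler) pairs

    def trigger(self, phrase):
        """Return a decorator that registers the handler under `phrase`."""
        def decorator(handler):
            self.routes.append((phrase, handler))
            return handler
        return decorator

    @staticmethod
    def _tokens(text):
        """Lowercased word set with basic punctuation stripped."""
        return {t.strip(".,!?") for t in text.lower().split()} - {""}

    def match(self, message):
        """Pick the handler whose trigger shares the most tokens."""
        words = self._tokens(message)

        def jaccard(phrase):
            other = self._tokens(phrase)
            union = words | other
            return len(words & other) / len(union) if union else 0.0

        _, handler = max(self.routes, key=lambda r: jaccard(r[0]))
        return handler

    async def run(self, message):
        """Dispatch `message` to the best-matching handler."""
        return await self.match(message)(message)


router = ToyRouter()


@router.trigger("Hello")
async def greet(input_text):
    return "Hi there!"


@router.trigger("Want to cancel a credit card.")
async def cancel_card(input_text):
    return "Card cancellation started."


print(asyncio.run(router.run("hello!")))
print(asyncio.run(router.run("I want to cancel my credit card")))
```

The decorator returns the original function unchanged, so handlers remain callable and testable on their own; only the registry gains an entry.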

High-Performance Query Handling

Traditional AI systems struggle with either speed or accuracy — ChatsAPI delivers both. Whether it’s finding the best match in a vast knowledge base or handling high volumes of queries, ChatsAPI excels.

Flexible Framework

ChatsAPI adapts to any use case, whether you’re building:

Designed by developers, for developers, ChatsAPI offers:

At its core, ChatsAPI operates through a three-step process:

The result? A system that’s fast, accurate, and ridiculously easy to use.

Customer Support

Automate customer interactions with blazing-fast query resolution. ChatsAPI ensures users get relevant answers instantly, improving satisfaction and reducing operational costs.

Knowledge Base Search

Empower users to search vast knowledge bases with semantic understanding. The hybrid SBERT-BM25 approach ensures accurate, context-aware results.

Conversational AI

Build conversational AI agents that understand and adapt to user inputs in real-time. ChatsAPI integrates seamlessly with top LLMs to deliver natural, engaging conversations.

Other frameworks promise flexibility or performance — but none can deliver both like ChatsAPI. We’ve created a framework that’s:

ChatsAPI empowers developers to unlock the full potential of AI, without the headaches of complexity or slow performance.

Getting started with ChatsAPI is easy:

    pip install chatsapi

    from chatsapi import ChatsAPI

    chat = ChatsAPI()

    @chat.trigger("Hello")
    async def greet(input_text):
        return "Hi there!"

    from chatsapi import ChatsAPI

    chat = ChatsAPI()

    @chat.trigger("Need help with account settings.")
    @chat.extract([
        ("account_number", "Account number (a nine digit number)", int, None),
        ("holder_name", "Account holder's name (a person name)", str, None)
    ])
    async def account_help(chat_message: str, extracted: dict):
        return {"message": chat_message, "extracted": extracted}
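Each entry in the extract spec above is a 4-tuple of name, description, type, and default. The sketch below shows one way such a spec could drive type casting and default handling. It is only an assumption-laden illustration: `extract_fields` and the per-field regexes in `PATTERNS` are hypothetical, and ChatsAPI itself works from the natural-language descriptions rather than from regexes.

```python
import re

# Same 4-tuple layout as the @chat.extract spec above:
# (name, description, type, default)
SPEC = [
    ("account_number", "Account number (a nine digit number)", int, None),
    ("holder_name", "Account holder's name (a person name)", str, None),
]

# Hypothetical regexes, one per field; ChatsAPI does not take these.
PATTERNS = {
    "account_number": r"\b(\d{9})\b",
    "holder_name": r"\bname is ([A-Z][a-z]+(?: [A-Z][a-z]+)*)",
}


def extract_fields(message, spec, patterns):
    """Return a dict of values cast to each field's declared type."""
    extracted = {}
    for name, _description, cast, default in spec:
        match = re.search(patterns[name], message)
        # Fall back to the declared default when nothing matches.
        extracted[name] = cast(match.group(1)) if match else default
    return extracted


result = extract_fields(
    "My name is Jane Doe and my account is 123456789.", SPEC, PATTERNS
)
print(result)
```

A handler registered with `@chat.extract` receives a dict shaped like `result`, keyed by the field names in the spec.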

Run your message (with no LLM)

    # `app` and `RequestModel` are defined in the full example below.
    @app.post("/chat")
    async def message(request: RequestModel, response: Response):
        reply = await chat.run(request.message)
        return {"message": reply}

    import os

    from dotenv import load_dotenv
    from fastapi import FastAPI, Request, Response
    from pydantic import BaseModel

    from chatsapi.chatsapi import ChatsAPI

    # Load environment variables from .env file
    load_dotenv()

    app = FastAPI()  # instantiate FastAPI or your web framework

    chat = ChatsAPI(  # instantiate ChatsAPI
        llm_type="gemini",
        llm_model="models/gemini-pro",
        llm_api_key=os.getenv("GOOGLE_API_KEY"),
    )

    # chat trigger - 1
    @chat.trigger("Want to cancel a credit card.")
    @chat.extract([("card_number", "Credit card number (a 12 digit number)", str, None)])
    async def cancel_credit_card(chat_message: str, extracted: dict):
        return {"message": chat_message, "extracted": extracted}

    # chat trigger - 2
    @chat.trigger("Need help with account settings.")
    @chat.extract([
        ("account_number", "Account number (a nine digit number)", int, None),
        ("holder_name", "Account holder's name (a person name)", str, None)
    ])
    async def account_help(chat_message: str, extracted: dict):
        return {"message": chat_message, "extracted": extracted}

    # request model
    class RequestModel(BaseModel):
        message: str

    # chat conversation
    @app.post("/chat")
    async def message(request: RequestModel, response: Response, http_request: Request):
        session_id = http_request.cookies.get("session_id")
        reply = await chat.conversation(request.message, session_id)
        return {"message": f"{reply}"}

    # set chat session
    @app.post("/set-session")
    def set_session(response: Response):
        session_id = chat.set_session()
        response.set_cookie(key="session_id", value=session_id)
        return {"message": "Session set"}

    # end chat session
    @app.post("/end-session")
    def end_session(response: Response, http_request: Request):
        session_id = http_request.cookies.get("session_id")
        chat.end_session(session_id)
        response.delete_cookie("session_id")
        return {"message": "Session ended"}

await chat.query(request.message)

Traditional LLM (API)-based methods typically take around four seconds per request. In contrast, ChatsAPI processes requests in under one second, often within milliseconds, without making any LLM API calls.

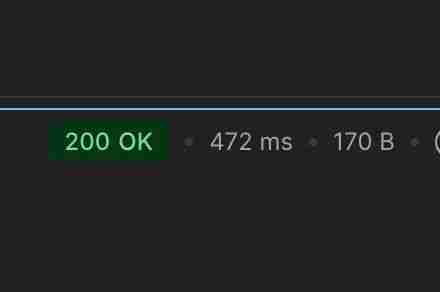

Performing a chat routing task within 472ms (no cache)

Performing a chat routing task within 21ms (after cache)

Performing a chat routing data extraction task within 862ms (no cache)

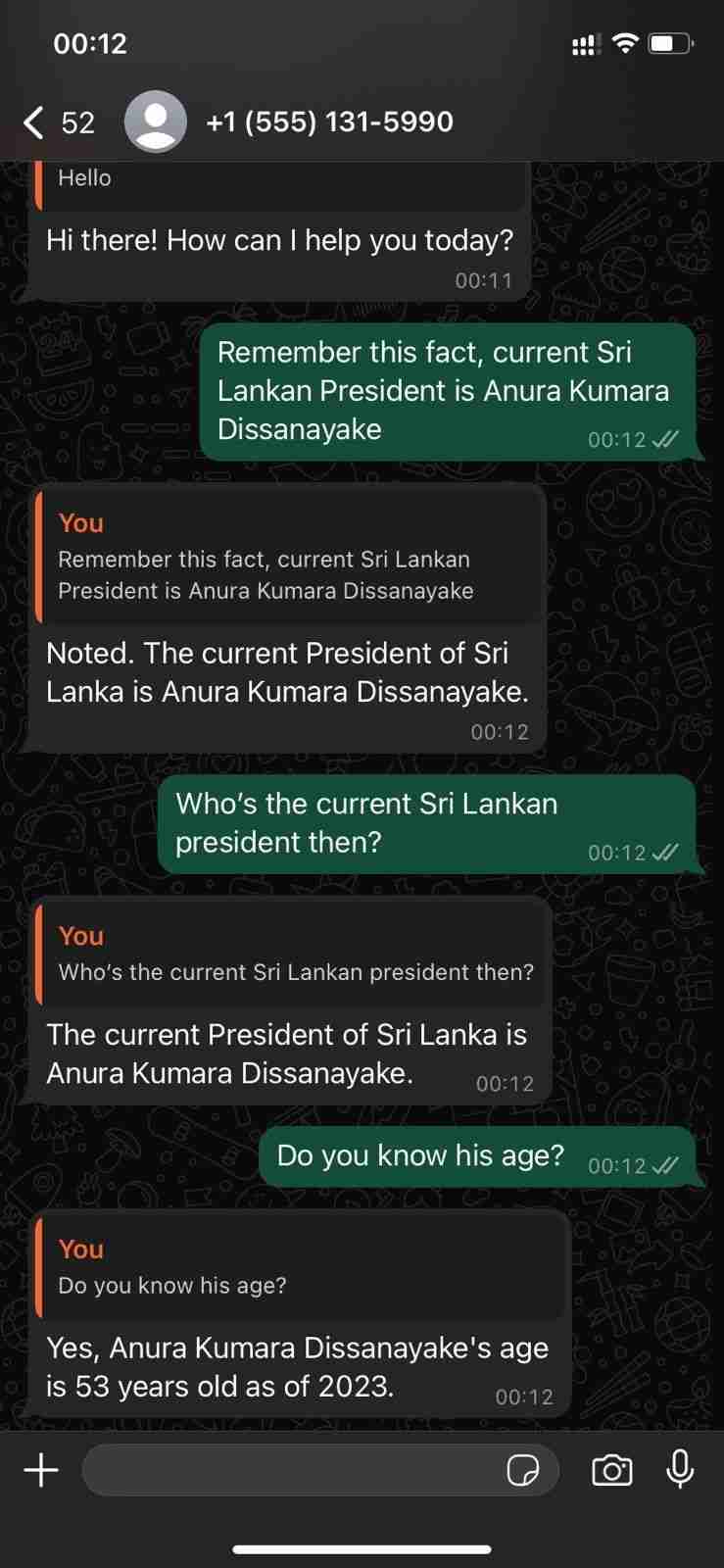

Demonstrating its conversational abilities with WhatsApp Cloud API

ChatsAPI — Feature Hierarchy

ChatsAPI is more than just a framework; it’s a paradigm shift in how we build and interact with AI systems. By combining speed, accuracy, and ease of use, ChatsAPI sets a new benchmark for AI agent frameworks.

Join the revolution today and see why ChatsAPI is transforming the AI landscape.

Ready to dive in? Get started with ChatsAPI now and experience the future of AI development.
