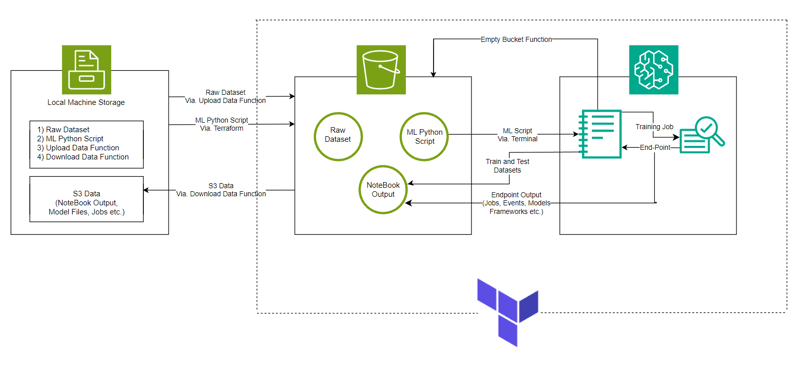

A machine learning model built with AWS SageMaker and its Python SDK to classify HDFS logs, with Terraform automating the infrastructure setup.

Link: GitHub

Language: HCL (Terraform), Python

    import boto3
    import sagemaker
    from sagemaker.amazon.amazon_estimator import get_image_uri

    # Look up the URI of the built-in XGBoost container image for the current region.
    # Set repo_version to the container version you prefer.
    container = get_image_uri(boto3.Session().region_name,
                              'xgboost',
                              repo_version='1.0-1')

    hyperparameters = {
        "max_depth": "5",                 # Maximum depth of a tree. Deeper trees are more complex and more likely to overfit.
        "eta": "0.2",                     # Learning rate. Lower values learn more slowly but more conservatively.
        "gamma": "4",                     # Minimum loss reduction required to split a leaf node further. Controls model complexity.
        "min_child_weight": "6",          # Minimum sum of instance weight (hessian) needed in a child. Higher values reduce overfitting.
        "subsample": "0.7",               # Fraction of the training data sampled for each boosting round. Reduces overfitting.
        "objective": "binary:logistic",   # Learning task and objective. binary:logistic is for binary classification.
        "num_round": 50                   # Number of boosting rounds, i.e. how many trees are added in sequence.
    }

    # A SageMaker estimator that runs the XGBoost container
    estimator = sagemaker.estimator.Estimator(
        image_uri=container,                  # The XGBoost container looked up above; tells SageMaker which algorithm image to use.
        hyperparameters=hyperparameters,      # The settings defined above that guide the training process.
        role=sagemaker.get_execution_role(),  # IAM role SageMaker assumes during training; grants access to resources such as S3.
        train_instance_count=1,               # Number of training instances. Here, a single instance.
        train_instance_type='ml.m5.large',    # Training instance type. ml.m5.large is a general-purpose instance balancing compute, memory, and network.
        train_volume_size=5,                  # Size of the storage volume attached to the training instance, in GB.
        output_path=output_path,              # S3 location where the model artifacts and training output are saved.
        train_use_spot_instances=True,        # Train on spot instances (spare EC2 capacity), which can be much cheaper than on-demand.
        train_max_run=300,                    # Maximum training runtime in seconds (5 minutes).
        train_max_wait=600)                   # Maximum total time in seconds (10 minutes), including time spent waiting for spot capacity.
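
With spot training, SageMaker expects the maximum wait time to be at least as long as the maximum run time, because the wait time covers both waiting for capacity and the training itself. The following is a minimal sketch of a pre-flight check for those two values. `check_spot_limits` is a hypothetical helper written for illustration and is not part of the SageMaker SDK.

```python
def check_spot_limits(max_run: int, max_wait: int) -> int:
    """Return the seconds left over for waiting on spot capacity.

    Hypothetical helper (not part of the SageMaker SDK). Raises ValueError
    when max_wait is shorter than max_run, a combination that SageMaker
    rejects for spot training jobs.
    """
    if max_run <= 0 or max_wait <= 0:
        raise ValueError("max_run and max_wait must be positive")
    if max_wait < max_run:
        raise ValueError(f"max_wait ({max_wait}s) must be >= max_run ({max_run}s)")
    return max_wait - max_run


# Values used in the estimator above: 300 s of training, 600 s in total.
spare_seconds = check_spot_limits(max_run=300, max_wait=600)
print(spare_seconds)  # 300
```

Running a check like this before creating the estimator surfaces a bad combination locally rather than when the training job is submitted.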

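    # Launch the training job with the training and validation channels.
    estimator.fit({'train': s3_input_train, 'validation': s3_input_test})

    # Deploy the trained model to a real-time endpoint on a single instance.
    xgb_predictor = estimator.deploy(initial_instance_count=1, instance_type='ml.m5.large')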
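
Because the objective is binary:logistic, the model's scores are probabilities between 0 and 1 rather than class labels. The sketch below shows one way to turn such scores into 0/1 labels. The helper name `to_labels`, the 0.5 threshold, and the sample scores are assumptions for illustration, not values taken from the project.

```python
from typing import Iterable, List


def to_labels(scores: Iterable[float], threshold: float = 0.5) -> List[int]:
    """Map binary:logistic probabilities to 0/1 class labels."""
    labels = []
    for score in scores:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"expected a probability in [0, 1], got {score}")
        # Scores at or above the threshold are assigned to the positive class.
        labels.append(1 if score >= threshold else 0)
    return labels


# Made-up scores for illustration only; they are not results from this project.
example_scores = [0.03, 0.91, 0.50, 0.48]
print(to_labels(example_scores))  # [0, 1, 1, 0]
```

A threshold other than 0.5 may suit the data better when the two classes are imbalanced.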

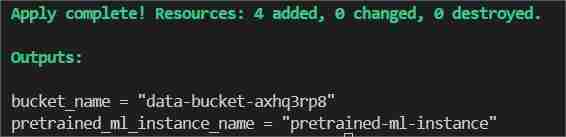

In the terminal, type or paste `terraform init` to initialize the backend.

Then run `terraform plan` to view the plan, or simply `terraform validate` to check the configuration for errors.

Finally, run `terraform apply --auto-approve` in the terminal.
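
The same sequence can be scripted. The sketch below is a hypothetical Python wrapper (the function names are not from the project) that builds the Terraform commands in order and runs them with `subprocess`. It runs both `validate` and `plan` before `apply`, and uses the single-dash `-auto-approve` spelling, which Terraform treats the same as the double-dash form.

```python
import subprocess
from typing import List


def terraform_commands(auto_approve: bool = True) -> List[List[str]]:
    """Build the Terraform invocations from the steps above, in order."""
    apply_cmd = ["terraform", "apply"]
    if auto_approve:
        apply_cmd.append("-auto-approve")
    return [
        ["terraform", "init"],
        ["terraform", "validate"],
        ["terraform", "plan"],
        apply_cmd,
    ]


def run_all(workdir: str, auto_approve: bool = True) -> None:
    """Run each command in workdir and stop at the first failure."""
    for cmd in terraform_commands(auto_approve):
        # check=True raises CalledProcessError on a non-zero exit code.
        subprocess.run(cmd, cwd=workdir, check=True)
```

Calling `run_all(".")` from the project directory would execute the four commands in sequence, assuming the `terraform` binary is on the PATH and AWS credentials are configured.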

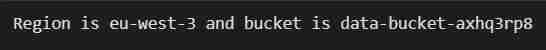

This shows two outputs: bucket_name and pretrained_ml_instance_name. (The third resource is the variable name given to the bucket, since S3 bucket names are global.)
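
If later steps need these values programmatically, they can be read from `terraform output -json`, which wraps each output in an object with a `value` field. The following is a minimal sketch of flattening that JSON in Python. The helper name and the sample values are placeholders, not real resource names from the project.

```python
import json
from typing import Any, Dict


def parse_terraform_outputs(output_json: str) -> Dict[str, Any]:
    """Flatten `terraform output -json` text into {name: value}."""
    raw = json.loads(output_json)
    return {name: entry["value"] for name, entry in raw.items()}


# Placeholder values for illustration; real names are produced at apply time.
sample = """
{
  "bucket_name": {"sensitive": false, "type": "string", "value": "example-bucket"},
  "pretrained_ml_instance_name": {"sensitive": false, "type": "string", "value": "example-notebook"}
}
"""
outputs = parse_terraform_outputs(sample)
print(outputs["bucket_name"])  # example-bucket
```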

and change it to the path where the project directory is present and save it.

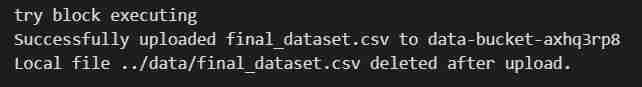

to upload dataset to S3 Bucket.
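
For readers who want to see what an upload step can look like in Python, here is a minimal sketch built around boto3's `upload_file` method. It is not the project's own upload script: the helper name, the bucket name, and the file name are placeholders, and the S3 client is passed in so the function can be exercised without AWS access.

```python
import os
from typing import Any


def upload_dataset(s3_client: Any, bucket: str, local_path: str, key: str = "") -> str:
    """Upload local_path to s3://bucket/key and return the S3 URI.

    s3_client is expected to behave like boto3.client("s3"); only its
    upload_file(Filename, Bucket, Key) method is used.
    """
    if not os.path.isfile(local_path):
        raise FileNotFoundError(local_path)
    # Default the object key to the file name when none is given.
    key = key or os.path.basename(local_path)
    s3_client.upload_file(local_path, bucket, key)
    return f"s3://{bucket}/{key}"


# Usage (requires AWS credentials; file and bucket names are placeholders):
#   import boto3
#   upload_dataset(boto3.client("s3"), "example-bucket", "dataset.csv")
```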

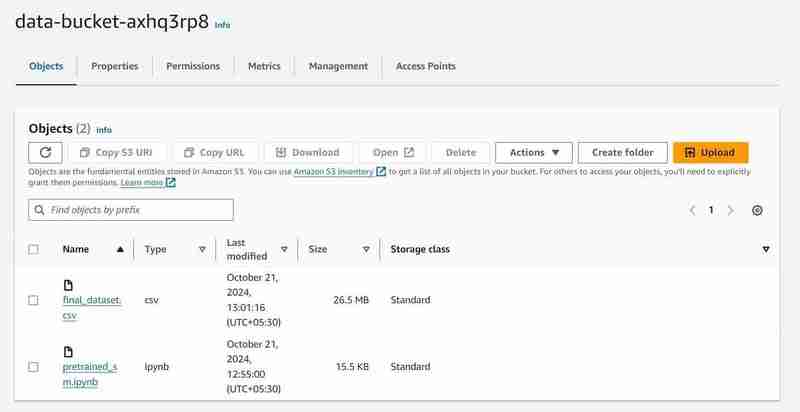

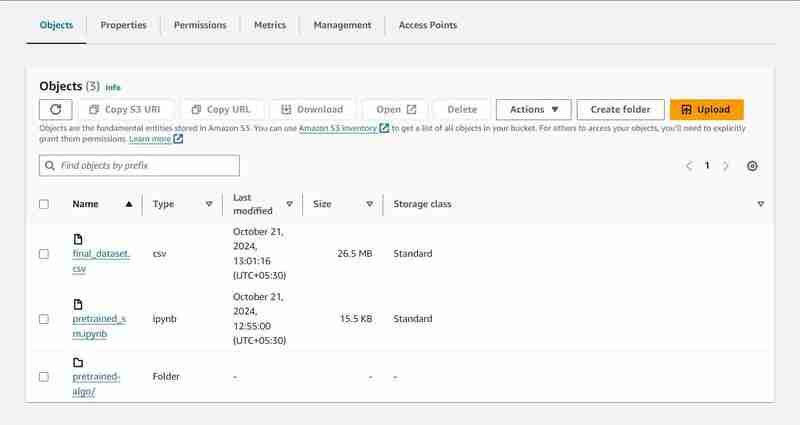

S3 bucket named 'data-bucket-' with two objects uploaded: a dataset and the pretrained_sm.ipynb file containing the model code.

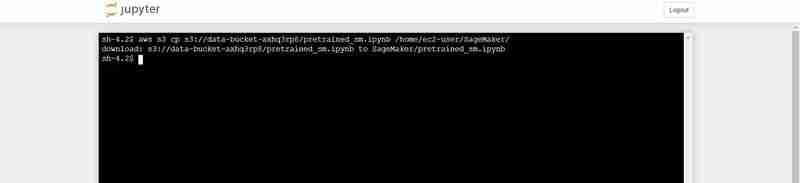

Terminal command to copy pretrained_sm.ipynb from S3 into the notebook's Jupyter environment

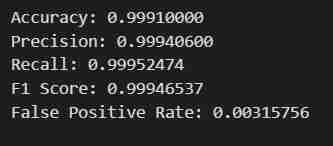

Output of the code cell execution

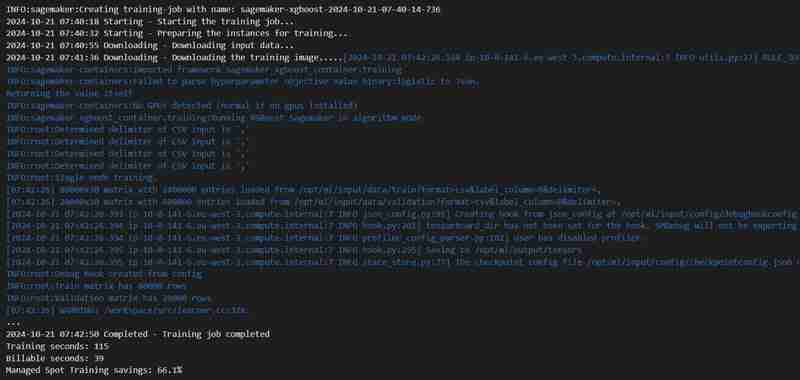

Execution of the 8th cell

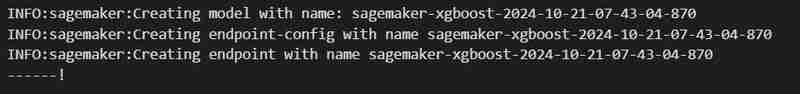

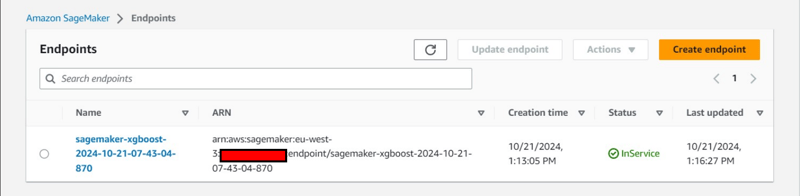

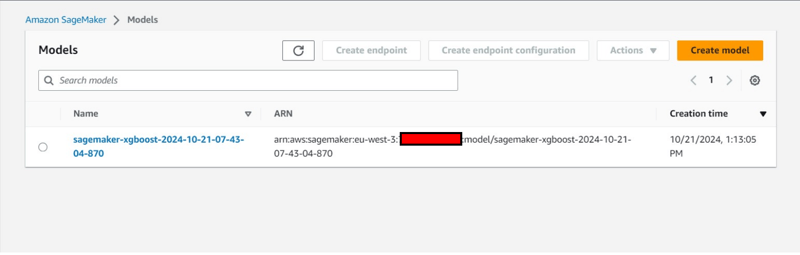

Execution of the 23rd cell

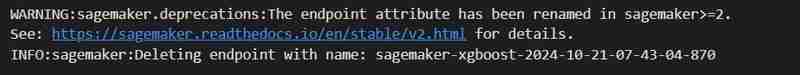

Execution of the 24th code cell

Additional Console Observation:

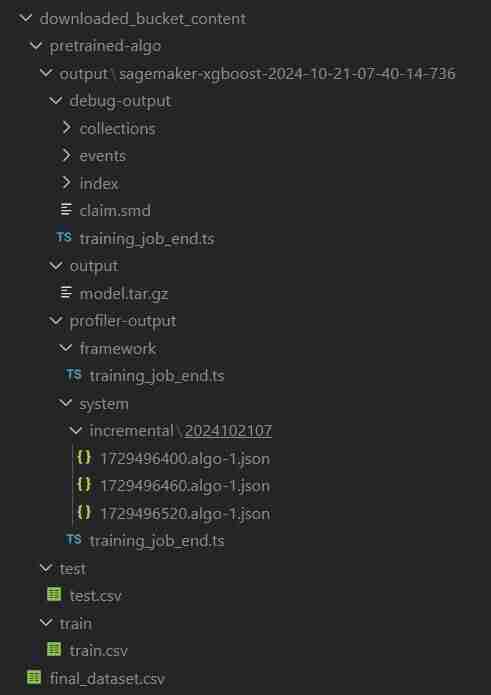

ClassiSage/downloaded_bucket_content

ClassiSage/.terraform

ClassiSage/ml_ops/\_\_pycache\_\_

ClassiSage/.terraform.lock.hcl

ClassiSage/terraform.tfstate

ClassiSage/terraform.tfstate.backup

NOTE:

If you liked the idea and implementation of this machine learning project, which uses AWS S3 and SageMaker for HDFS log classification and Terraform for IaC (infrastructure setup automation), kindly consider liking this post and starring the project repository on GitHub after checking it out.