The AIxiv column is where this site publishes academic and technical content. Over the past few years, the column has carried more than 2,000 reports covering top laboratories at major universities and companies around the world, helping to promote academic exchange and dissemination. If you have excellent work to share, feel free to submit it or contact us for coverage. Submission email: liyazhou@jiqizhixin.com; zhaoyunfeng@jiqizhixin.com.

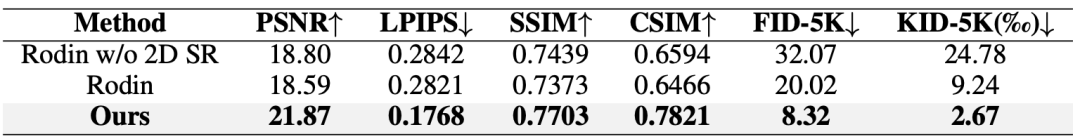

In 3D generative modeling, the two current families of 3D representations each have a drawback: one relies on implicit decoders with limited fitting capability, while the other lacks a clearly defined spatial structure and is hard to integrate with mainstream 3D diffusion techniques. Researchers from the University of Science and Technology of China, Tsinghua University, and Microsoft Research Asia proposed GaussianCube, an explicit, structured 3D representation with strong fitting capability that can be applied seamlessly to current mainstream 3D diffusion models.

GaussianCube starts with a novel density-constrained Gaussian fitting algorithm that fits 3D assets with high accuracy while using a fixed number of free Gaussians. These Gaussians are then rearranged into a predefined voxel grid using an optimal transport algorithm. Thanks to this structure, researchers can directly use a standard 3D U-Net as the backbone for diffusion modeling, without complex network design.

More importantly, the new fitting algorithm makes the representation far more compact: at similar fitting quality, it needs only one-tenth to one-hundredth of the parameters required by traditional structured representations. This compactness significantly reduces the complexity of 3D generative modeling. The researchers ran extensive experiments on unconditional and conditional 3D object generation, digital avatar creation, and text-to-3D content synthesis. Numerical results show that GaussianCube improves on previous baseline algorithms by up to 74%.

As shown below, GaussianCube not only generates high-quality 3D assets but also delivers highly attractive visual results, demonstrating its potential as a universal representation for 3D generation.

The method in this article can generate high-quality and diverse 3D models.

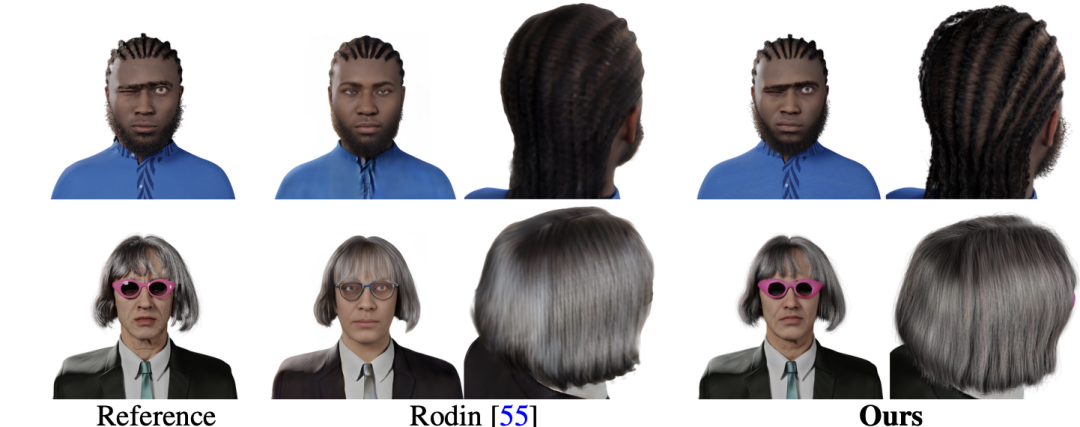

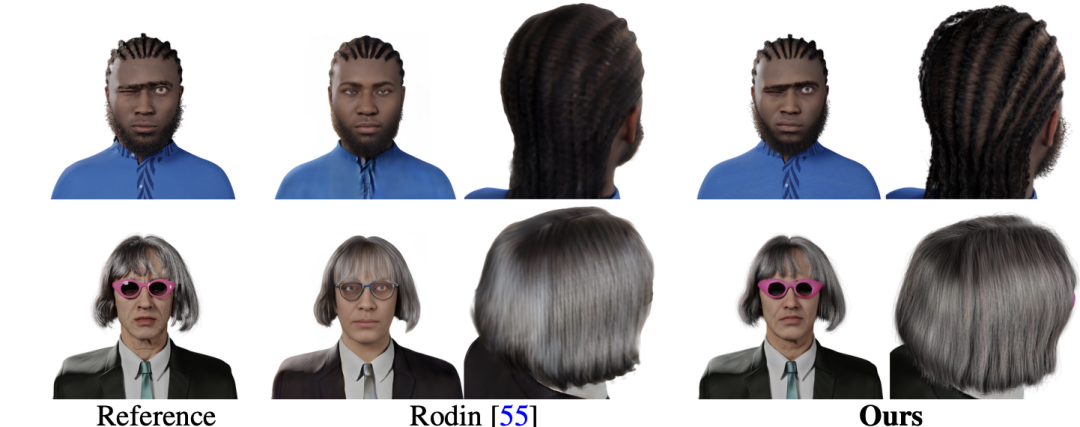

## Figure 2. Digital avatar creation from an input portrait. The method in this article largely preserves the identity of the input portrait and models hairstyle and clothing in detail.

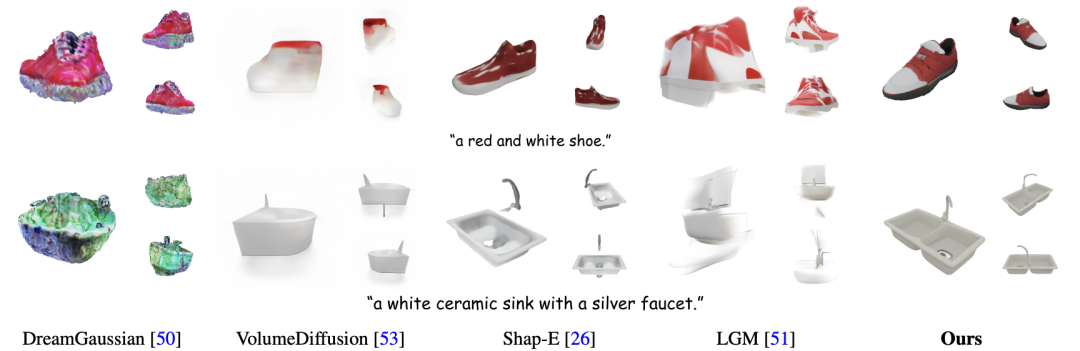

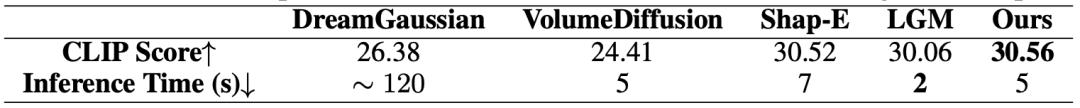

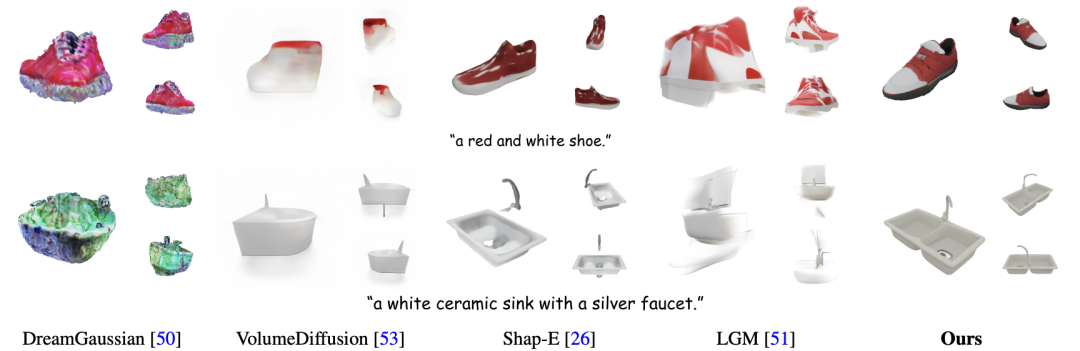

## Figure 3. 3D assets created from input text. The method in this article produces results consistent with the text and can model complex geometric structures and detailed materials.

## Figure 4. Class-conditional generation results. The 3D assets generated by the method in this article have clear semantics and high-quality geometry and materials.

- Paper title: GaussianCube: A Structured and Explicit Radiance Representation for 3D Generative Modeling

- Project homepage: https://gaussiancube.github.io/

- Paper link: https://arxiv.org/pdf/2403.19655

- Open source code: https://github.com/GaussianCube/GaussianCube

- Demo video: https://www.bilibili.com/video/BV1zy411h7wB/

Are you still using traditional NeRF for 3D generative modeling? Most previous work on 3D generative modeling has used a variant of Neural Radiance Fields (NeRF) as the underlying 3D representation, typically combining an explicit structured feature representation with an implicit feature decoder. In 3D generative modeling, however, all 3D objects must share the same implicit feature decoder, which greatly weakens NeRF's fitting ability. In addition, the volume rendering that NeRF relies on is computationally very expensive, which leads to slow rendering and extremely high GPU memory consumption.

Recently, another 3D representation, 3D Gaussian Splatting (3DGS), has attracted much attention. With its strong fitting capability, efficient computation, and fully explicit nature, 3DGS has been widely used in 3D reconstruction tasks. However, it lacks a well-defined spatial structure, so it cannot be applied directly in current mainstream generative modeling frameworks.

The research team therefore proposed GaussianCube, an innovative 3D representation that is both structured and fully explicit, with strong fitting capability. The method first ensures a high-accuracy fit with a fixed number of free Gaussians, and then efficiently organizes these Gaussians into a structured voxel grid. This explicit, structured representation lets researchers adopt standard 3D network architectures such as U-Net, without the complex, customized network designs required by unstructured or implicitly decoded representations.

At the same time, the organization produced by the optimal transport algorithm preserves the spatial relationships between adjacent Gaussian kernels as much as possible, so that classic 3D convolutional networks alone can extract features efficiently. More importantly, previous studies have found that diffusion models perform poorly on high-dimensional data distributions. GaussianCube significantly reduces the number of parameters required while maintaining high-quality reconstruction, which greatly eases the burden of distribution modeling on the diffusion model and brings significant gains in modeling capability and efficiency to 3D generative modeling.

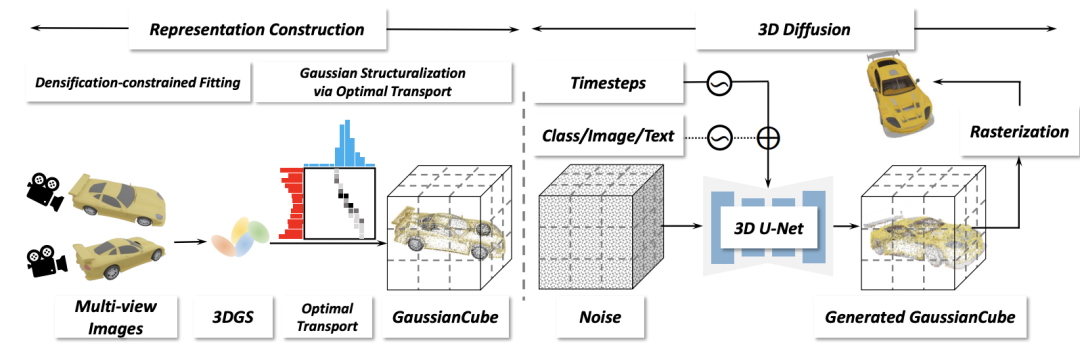

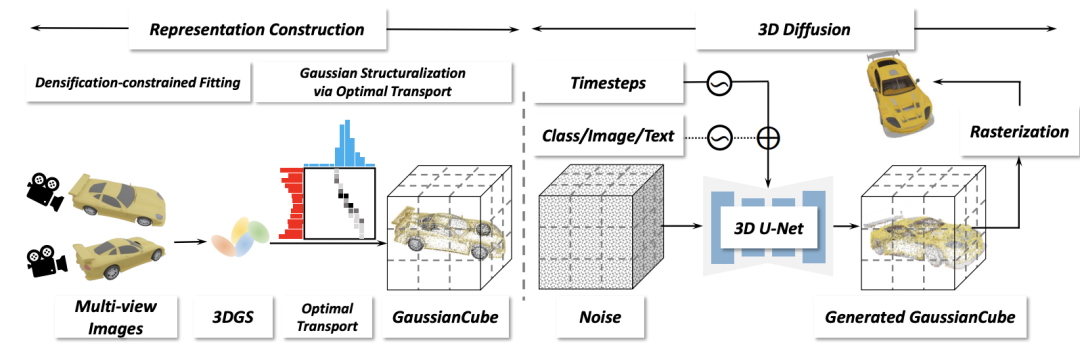

The framework of this article consists of two main stages: representation construction and 3D diffusion. In the representation construction stage, given multi-view renderings of a 3D asset, density-constrained Gaussian fitting produces a fixed number of 3D Gaussians. These Gaussians are then structured into a GaussianCube via optimal transport. In the 3D diffusion stage, the researchers train a 3D diffusion model to generate GaussianCubes from Gaussian noise.

In the representation construction stage, the researchers need to create, for each 3D asset, a representation that is suitable for generative modeling. Generative modeling usually requires the modeled data to have a uniform, fixed length, but the adaptive density control in the original 3DGS fitting algorithm uses a different number of Gaussian kernels for different objects, which poses a great challenge. A very simple solution would be to remove adaptive density control altogether, but the researchers found that this severely reduces fitting accuracy. The paper instead proposes a novel density-constrained fitting algorithm that keeps the pruning operation of the original adaptive density control but applies a new constraint to the splitting and cloning operations.

Specifically, assuming that the current iteration contains $N_c$ Gaussians, the researchers identify candidates for splitting or cloning by selecting those Gaussians whose view-space position gradient magnitude exceeds a predefined threshold $\tau$; the number of these candidates is denoted $N_d$. To avoid exceeding the predefined maximum of $N_{\max}$ Gaussians, only the $\min(N_d, N_{\max} - N_c)$ candidates with the largest view-space position gradients are selected for splitting or cloning. After fitting is complete, the researchers pad the set with Gaussians of $\alpha = 0$ to reach the target count $N_{\max}$ without affecting the rendering results. Thanks to this strategy, the representation reaches high quality with several orders of magnitude fewer parameters than existing work of similar quality, which significantly reduces the modeling difficulty for diffusion models.
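The budgeted selection described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `select_for_densification` and `pad_with_transparent`, the plain-list inputs, and the variable names `n_c` (current count), `n_d` (candidate count), and `n_max` (maximum count) are hypothetical, and the sketch assumes that each split or clone adds exactly one Gaussian to the total.

```python
def select_for_densification(grad_norms, tau, n_max):
    """Return indices of Gaussians allowed to split or clone in this step.

    grad_norms: view-space position gradient magnitude of each Gaussian.
    tau:        gradient threshold for becoming a candidate.
    n_max:      maximum number of Gaussians allowed (hypothetical name).
    """
    n_c = len(grad_norms)                      # current number of Gaussians
    candidates = [i for i, g in enumerate(grad_norms) if g > tau]
    n_d = len(candidates)                      # number of candidates
    # Assumption: each split or clone adds one Gaussian, so the remaining
    # budget is n_max - n_c (clamped at zero).
    budget = max(0, min(n_d, n_max - n_c))
    # Keep only the candidates with the largest gradients.
    candidates.sort(key=lambda i: grad_norms[i], reverse=True)
    return candidates[:budget]


def pad_with_transparent(opacities, n_max):
    """Append alpha = 0 Gaussians so the final count is exactly n_max."""
    return opacities + [0.0] * (n_max - len(opacities))


# Five Gaussians, room for only two more: three exceed tau, two are kept.
chosen = select_for_densification([0.1, 0.9, 0.5, 0.05, 0.7], tau=0.2, n_max=7)
padded = pad_with_transparent([0.8, 0.6], n_max=4)
```

Because the padded Gaussians are fully transparent, they contribute nothing to the rendered image, which is why every object can end up with the same fixed count.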

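However, the Gaussians obtained by the fitting algorithm above still have no explicit spatial arrangement, which prevents the subsequent diffusion model from modeling the data efficiently. The researchers therefore propose mapping the Gaussians onto a predefined structured voxel grid so that they have an explicit spatial structure. Intuitively, the goal of this step is to "move" each Gaussian into a voxel while preserving the spatial neighbor relationships among the Gaussians as much as possible.

The researchers model this as an optimal transport problem and use the Jonker-Volgenant algorithm to obtain the mapping. They then organize the Gaussians into the corresponding voxels according to the optimal transport solution to obtain the GaussianCube, and replace each Gaussian's original position with its offset from the center of its voxel, which reduces the solution space of the diffusion model. The final GaussianCube representation is not only structured but also preserves the structural relationships between neighboring Gaussians as much as possible, which strongly supports efficient feature extraction for 3D generative modeling.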
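The voxel assignment step can be illustrated on a toy problem. The sketch below is an assumption-laden illustration rather than the paper's code: it solves the one-to-one assignment by brute force over permutations instead of the Jonker-Volgenant algorithm (feasible only for a handful of Gaussians), it assumes squared Euclidean distance to the voxel center as the transport cost, and all function names are hypothetical. For realistic sizes, a dedicated solver such as SciPy's `scipy.optimize.linear_sum_assignment` would be the usual choice for the same problem.

```python
from itertools import permutations, product


def voxel_centers(n, lo=-1.0, hi=1.0):
    """Centers of an n x n x n voxel grid covering the cube [lo, hi]^3."""
    step = (hi - lo) / n
    axis = [lo + (k + 0.5) * step for k in range(n)]
    return list(product(axis, repeat=3))


def sq_dist(p, q):
    """Squared Euclidean distance (assumed transport cost)."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def assign_to_voxels(positions, centers):
    """Brute-force optimal one-to-one assignment; tiny inputs only.

    Returns a list where entry i is the voxel index given to Gaussian i.
    """
    n = len(positions)
    assert n == len(centers), "one Gaussian per voxel"
    cost = [[sq_dist(p, c) for c in centers] for p in positions]
    best = min(permutations(range(n)),
               key=lambda perm: sum(cost[i][perm[i]] for i in range(n)))
    return list(best)


def to_offsets(positions, centers, assignment):
    """Replace each position by its offset from the assigned voxel center."""
    return [tuple(pk - ck for pk, ck in zip(p, centers[j]))
            for p, j in zip(positions, assignment)]


# Eight Gaussians near the centers of a 2 x 2 x 2 grid, listed in reverse order.
centers = voxel_centers(2)
positions = [(c[0] + 0.1, c[1], c[2]) for c in reversed(centers)]
assignment = assign_to_voxels(positions, centers)
offsets = to_offsets(positions, centers, assignment)
```

In this toy case each Gaussian lands in the voxel it sits closest to, and the stored offsets are small values around zero instead of absolute coordinates, which is the reduction of the solution space the text refers to.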

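In the 3D diffusion stage, the article uses a 3D diffusion model to model the distribution of GaussianCubes. Because GaussianCube is spatially structured, no complex network or training design is needed: standard 3D convolutions are sufficient to extract and aggregate the features of neighboring Gaussians. The researchers therefore use a standard U-Net for diffusion and directly replace the original 2D operators (convolution, attention, upsampling, and downsampling) with their 3D counterparts.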
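To make concrete why a plain 3D convolution can aggregate neighboring Gaussians once they sit on a voxel grid, here is a minimal pure-Python sketch of a zero-padded, single-channel 3D convolution (strictly, a cross-correlation, as in most deep learning libraries). It is only an illustration: the function name `conv3d_single_channel`, the single scalar feature per voxel, and the all-ones kernel are assumptions, and a real model would use a framework layer such as PyTorch's `torch.nn.Conv3d` with learned multi-channel kernels.

```python
def conv3d_single_channel(grid, kernel):
    """Zero-padded 3D cross-correlation; output has the same shape as grid.

    grid:   n x n x n nested lists, one scalar feature per voxel.
    kernel: k x k x k nested lists with odd k.
    """
    n = len(grid)
    r = len(kernel) // 2                       # kernel radius
    out = [[[0.0] * n for _ in range(n)] for _ in range(n)]
    for x in range(n):
        for y in range(n):
            for z in range(n):
                acc = 0.0
                for dx in range(-r, r + 1):
                    for dy in range(-r, r + 1):
                        for dz in range(-r, r + 1):
                            xx, yy, zz = x + dx, y + dy, z + dz
                            # Voxels outside the grid count as zero.
                            if 0 <= xx < n and 0 <= yy < n and 0 <= zz < n:
                                acc += grid[xx][yy][zz] * kernel[dx + r][dy + r][dz + r]
                out[x][y][z] = acc
    return out


# One non-zero feature at a corner voxel, summed over 3 x 3 x 3 neighborhoods.
n = 4
grid = [[[0.0] * n for _ in range(n)] for _ in range(n)]
grid[0][0][0] = 2.0
box = [[[1.0] * 3 for _ in range(3)] for _ in range(3)]
out = conv3d_single_channel(grid, box)
```

Only voxels adjacent to the corner pick up its feature, so after the optimal transport step a small kernel mixes information between Gaussians that are also close in 3D space.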

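The 3D diffusion model also supports several conditioning signals to control generation, including class-label conditional generation, digital avatar creation from an image, and 3D asset generation from text. This multimodal conditional generation greatly broadens the model's range of applications and provides a powerful tool for future 3D content creation.

## Experimental results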

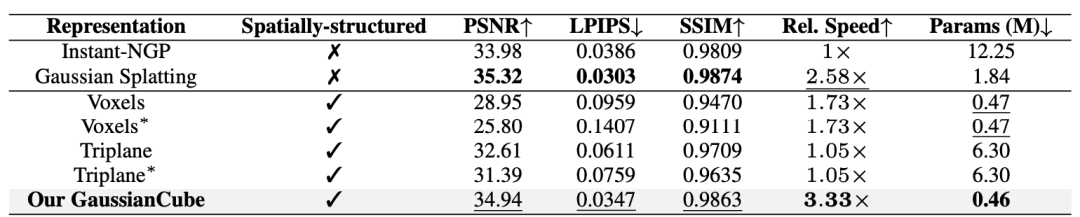

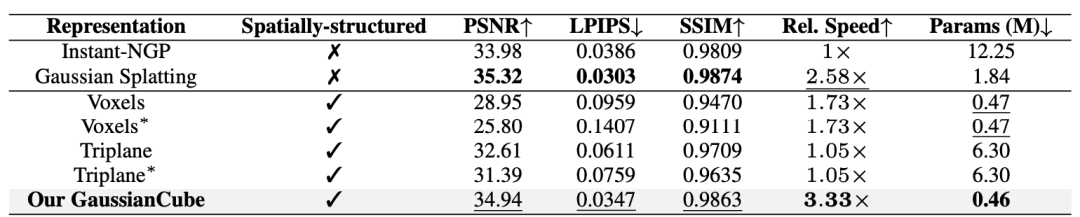

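The researchers first verified the fitting capability of GaussianCube on the ShapeNet Car dataset. The results show that, compared with baseline methods, GaussianCube achieves high-accuracy 3D object fitting with the fastest speed and the fewest parameters.

Table 1. Numerical comparison of different 3D representations on ShapeNet Car in terms of spatial structure, fitting quality, relative fitting speed, and number of parameters. ∗ indicates that an implicit feature decoder is shared across objects. All methods are evaluated at 30K iterations.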

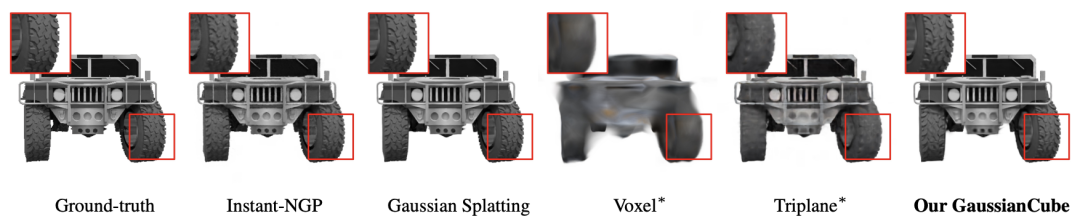

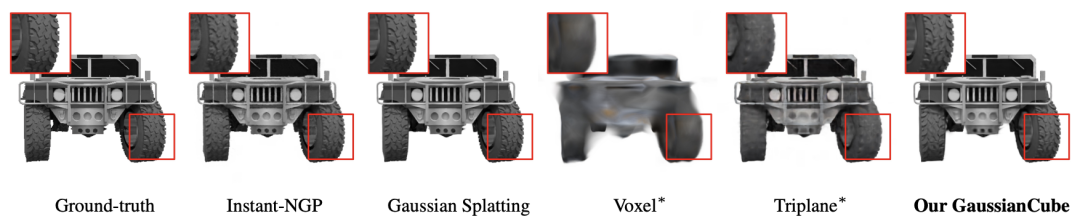

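Figure 8. Comparison of the fitting capability of different 3D representations on ShapeNet Car. ∗ indicates that an implicit feature decoder is shared across objects. All methods are evaluated at 30K iterations.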

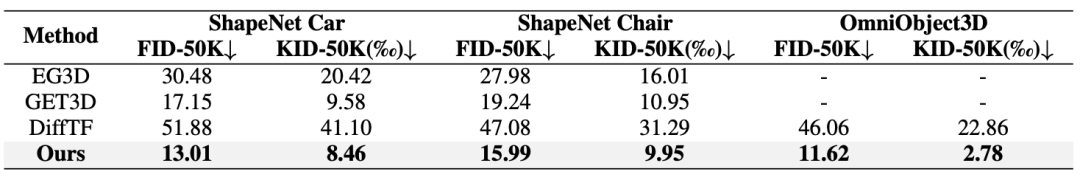

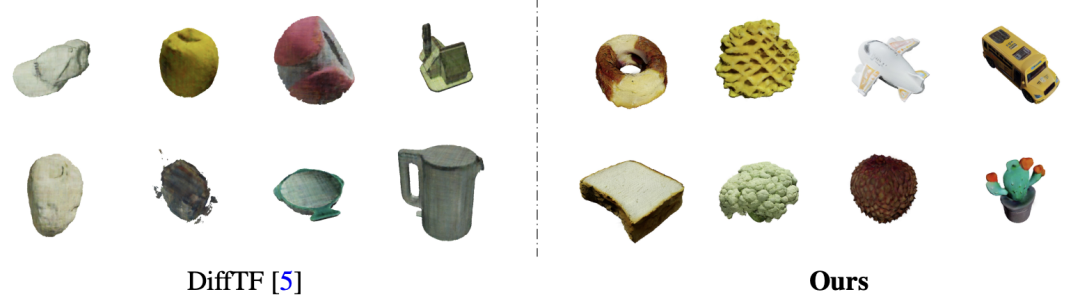

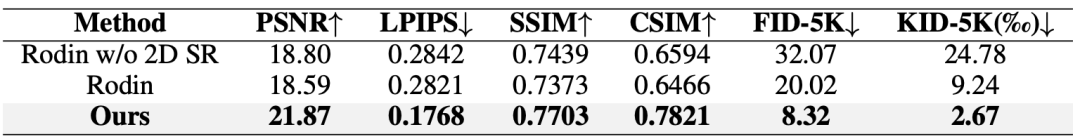

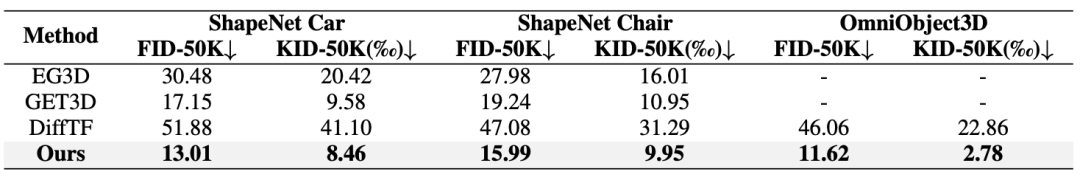

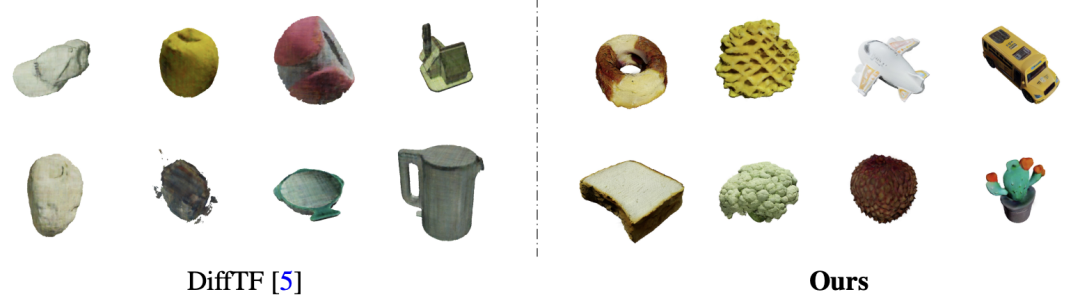

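The researchers then verified the generative capability of the GaussianCube-based diffusion model on a range of datasets, including ShapeNet, OmniObject3D, a synthetic digital avatar dataset, and Objaverse. The results show that the model achieves leading results, in both numerical metrics and visual quality, on unconditional and class-conditional object generation, digital avatar creation, and text-to-3D synthesis. In particular, GaussianCube improves on previous baseline algorithms by up to 74%.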

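Quantitative comparison of unconditional and conditional generation.

Qualitative comparison of unconditional generation. The method in this article can generate precise geometry and detailed materials.

The method in this article restores the identity features, expression, accessories, and hair details of the input portrait more accurately.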

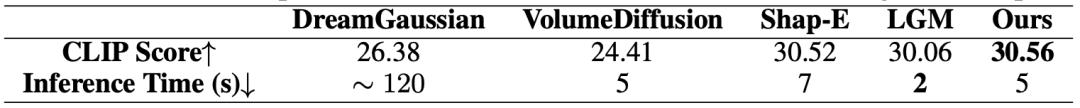

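Table 4. Quantitative comparison of creating 3D assets from input text. Inference time is measured on a single A100. Shap-E and LGM achieve CLIP Scores similar to the method in this article, but they rely, respectively, on millions of training samples (this method is trained on only about one hundred thousand 3D assets) and on a 2D text-to-image diffusion model prior.

Figure 12. Qualitative comparison of creating 3D assets from input text. The method in this article can generate high-quality 3D assets from the input text.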
