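Offline reinforcement learning (Offline RL) is currently one of the most active subfields of reinforcement learning. It does not interact with the environment; instead, it aims to learn a target policy from previously logged data. In domains where collecting data is expensive or dangerous but large amounts of logged data may already exist (for example, robotics, industrial control, and autonomous driving), offline RL is especially attractive compared with online reinforcement learning (Online RL).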

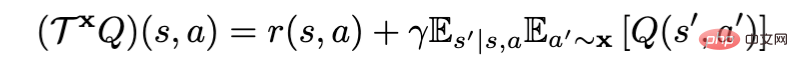

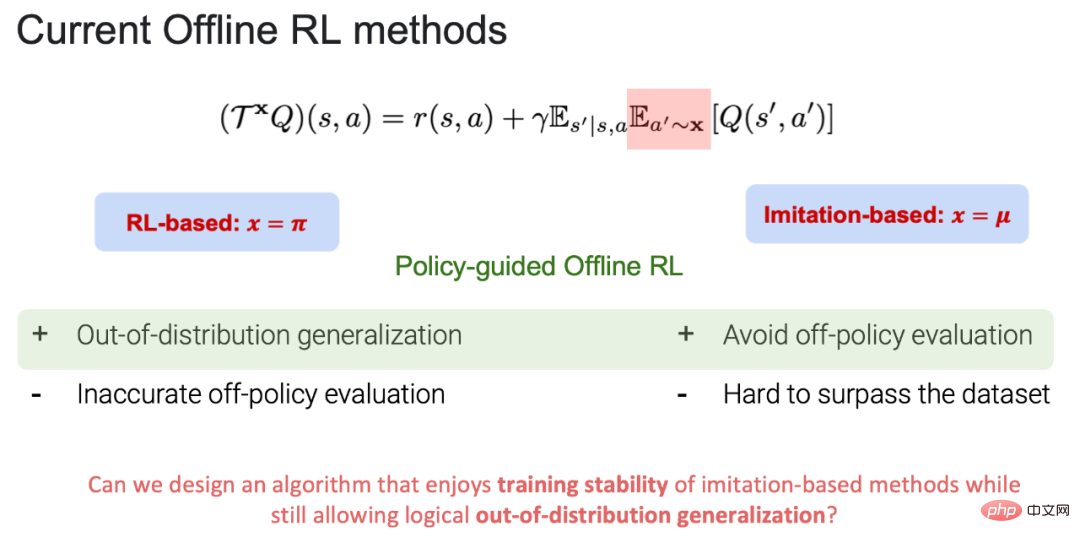

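When policy evaluation is performed with the Bellman policy evaluation operator, current offline RL algorithms can be divided by the choice of x into RL-based (x = π) and imitation-based (x = μ), where π is the target policy and μ is the behavior policy (note: the target policy is the policy being learned and updated; the behavior policy is the policy that produced the offline data). Both families have their own strengths and weaknesses: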

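1. RL-based. Advantage: it can generalize beyond the data and ultimately learn a target policy that surpasses the behavior policy. Disadvantage: it has to trade off accurate value estimation during policy evaluation (which calls for more behavior regularization) against policy improvement (which calls for less). If an out-of-distribution action is selected during policy evaluation, the action-value function (state-action value) cannot be estimated accurately, and learning the target policy ultimately fails.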

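2. Imitation-based. Advantage: policy evaluation only ever uses in-distribution actions, which makes training stable and avoids out-of-distribution evaluation, so the method can learn a policy close to the best one in the behavior data. Disadvantage: because only in-distribution actions are taken, it is hard to surpass the behavior policies present in the original data.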
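
The difference between the two families can be seen in how the one-step evaluation target is built. The sketch below is a toy tabular illustration, not code from the paper: the Q-table, the single logged transition, and the choice of a greedy target policy for the x = π case are all assumptions made for the example.

```python
# Toy tabular illustration; every name and number here is hypothetical.
GAMMA = 0.9

# Q[state][action]. Action 1 never appears in the data for state "s1",
# so its value is an unchecked (here: wildly optimistic) guess.
Q = {
    "s0": {0: 1.0, 1: 1.2},
    "s1": {0: 2.0, 1: 50.0},  # action 1 is out-of-distribution in s1
}

# One logged transition (s, a, r, s', a'), where a' is the action the
# behavior policy mu actually took in s'.
transition = {"s": "s0", "a": 0, "r": 0.5, "s_next": "s1", "a_next": 0}


def rl_based_target(q, t, gamma=GAMMA):
    """x = pi, assuming a greedy target policy: a' = argmax_a Q(s', a)."""
    return t["r"] + gamma * max(q[t["s_next"]].values())


def imitation_based_target(q, t, gamma=GAMMA):
    """x = mu: a' is the logged next action, so only in-sample values are used."""
    return t["r"] + gamma * q[t["s_next"]][t["a_next"]]


print(rl_based_target(Q, transition))         # roughly 45.5, driven by the unseen action
print(imitation_based_target(Q, transition))  # roughly 2.3, uses only logged actions
```

The RL-based target inherits the error of the unseen action, while the imitation-based target stays within the data but can never credit an action that was not logged.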

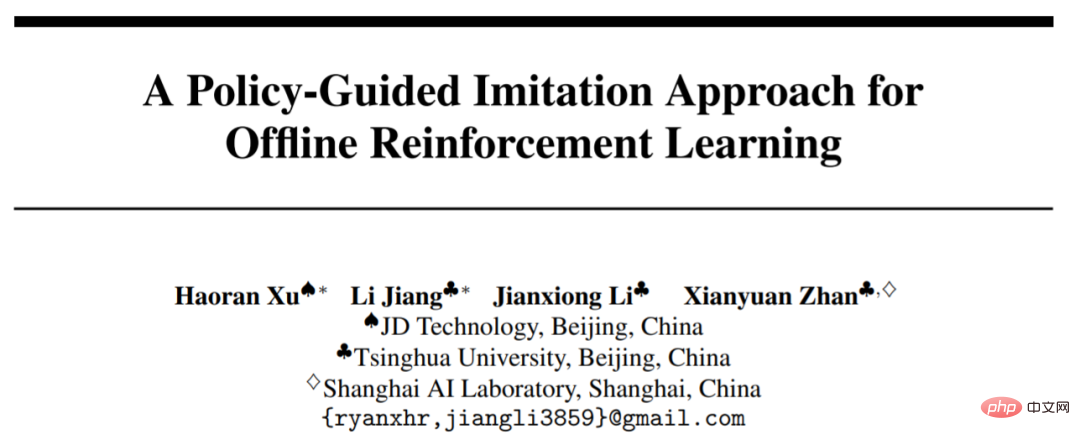

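POR builds on this observation: it avoids the trade-off in policy evaluation while keeping the ability to generalize beyond the data. The work was accepted to NeurIPS 2022 and invited for an oral presentation. Both the paper and the code are open source.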

#state-stitching vs. action-stitching

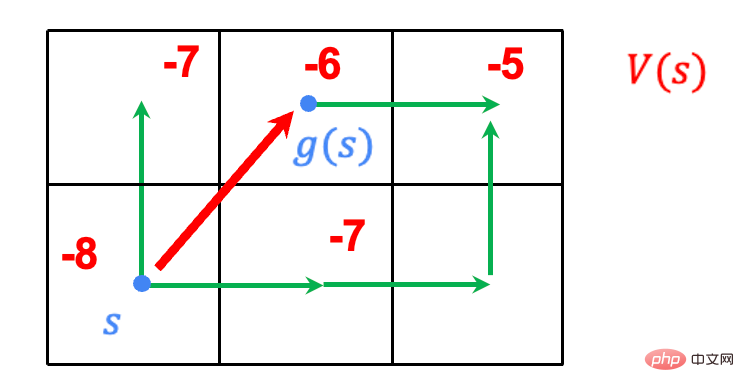

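Previous imitation-based algorithms all use action-stitching: they piece together trajectories available in the data to learn the target policy. The blue trajectory in the video is the best trajectory that can be learned under action-stitching, but such methods cannot generalize effectively beyond the data and therefore cannot learn a target policy that surpasses the behavior policy in the data. POR, by contrast, uses a decoupled learning paradigm that lets the target policy generalize effectively and thereby outperform the behavior policy.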

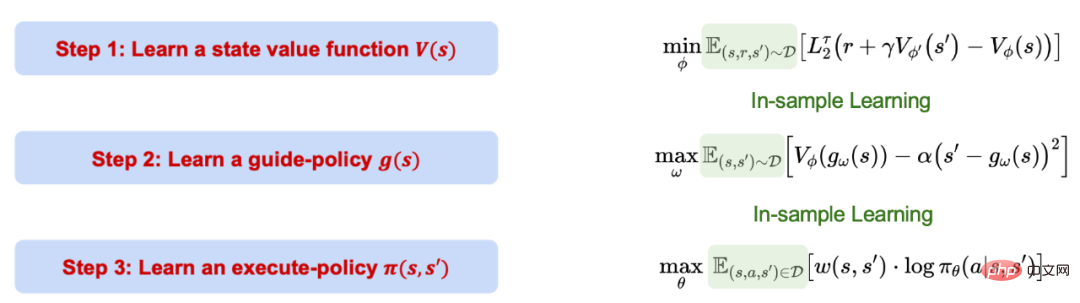

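Policy-guided Offline RL (POR) learns in three steps that are decoupled from one another and do not affect each other. Notably, POR is imitation-based throughout, that is, it relies on in-sample learning and never evaluates the value of actions outside the data distribution.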

1. Learn an upper confidence bound of the value function using quantile regression.

2. Use the learned value function to learn a guide-policy that, given the current state, generates the optimal in-sample next state (s'). The latter term of its objective acts as a constraint that keeps the generated state consistent with the MDP.

3. Use all samples in the data to learn an execute-policy that, given the current state (s) and the next state (s'), outputs the correct action for moving from s to s'.
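
The three steps can be sketched as three separate loss functions. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation: step 1 uses the standard pinball loss for quantile regression as the text describes, while the exact forms of `guide_loss` and `execute_loss`, and the parameters `tau` and `alpha`, are hypothetical choices made for the example.

```python
def pinball_loss(residual, tau):
    """Quantile-regression (pinball) loss for residual = target - prediction."""
    return tau * residual if residual >= 0 else (tau - 1.0) * residual


def value_loss(v_s, targets, tau=0.9):
    """Step 1: with tau > 0.5, under-estimates are penalized more than
    over-estimates, so the minimizer sits at an upper quantile of the targets."""
    return sum(pinball_loss(t - v_s, tau) for t in targets) / len(targets)


def guide_loss(value_of_generated_state, log_prob_of_logged_next_state, alpha=1.0):
    """Step 2 (assumed form): prefer high-value next states, with a
    log-likelihood term on the logged s' acting as the constraint."""
    return -(value_of_generated_state + alpha * log_prob_of_logged_next_state)


def execute_loss(predicted_action, logged_action):
    """Step 3 (assumed form): supervised regression onto the logged action
    that moved the agent from s to s'."""
    return sum((p - a) ** 2 for p, a in zip(predicted_action, logged_action))


# Usage: among candidate values 0..10, find the one minimizing the step-1 loss
# when the bootstrapped targets for a state are spread evenly over 0..10.
targets = list(range(11))
best_upper = min(range(11), key=lambda v: value_loss(v, targets, tau=0.9))
best_median = min(range(11), key=lambda v: value_loss(v, targets, tau=0.5))
print(best_upper, best_median)  # 9 5
```

Because each loss touches only logged quantities (targets, logged next states, logged actions), no out-of-distribution action is ever evaluated.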

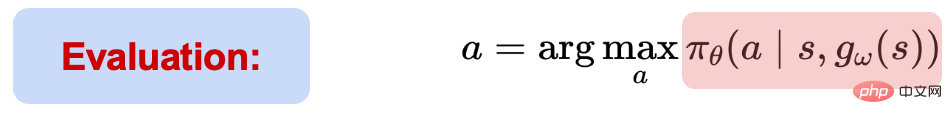

At test time, the guide-policy first proposes the optimal next state (s') from the current state (s). Given (s, s'), the execute-policy then selects and executes an action. Although all of POR's learning is in-sample, the generalization ability of the neural networks lets it generalize beyond the data and ultimately achieve state-stitching.
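
The two-stage action selection described above can be sketched as follows. The function `por_act` and both toy policies are hypothetical stand-ins for trained networks, and the 1-D dynamics s' = s + a is an assumption made only so the example is self-contained.

```python
from typing import Callable, List

State = List[float]
Action = List[float]


def por_act(state: State,
            guide_policy: Callable[[State], State],
            execute_policy: Callable[[State, State], Action]) -> Action:
    """Pick an action in two stages: propose s', then find the action reaching it."""
    next_state = guide_policy(state)          # s' proposed from s
    return execute_policy(state, next_state)  # action chosen from (s, s')


def toy_guide(state: State) -> State:
    """Hypothetical guide: move at most one unit toward a goal at x = 5."""
    x = state[0]
    step = max(-1.0, min(1.0, 5.0 - x))
    return [x + step]


def toy_execute(state: State, next_state: State) -> Action:
    """Hypothetical executor: assumes s' = s + a, so the action is the displacement."""
    return [next_state[0] - state[0]]


print(por_act([3.0], toy_guide, toy_execute))  # [1.0]
print(por_act([4.5], toy_guide, toy_execute))  # [0.5]
print(por_act([7.0], toy_guide, toy_execute))  # [-1.0]
```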

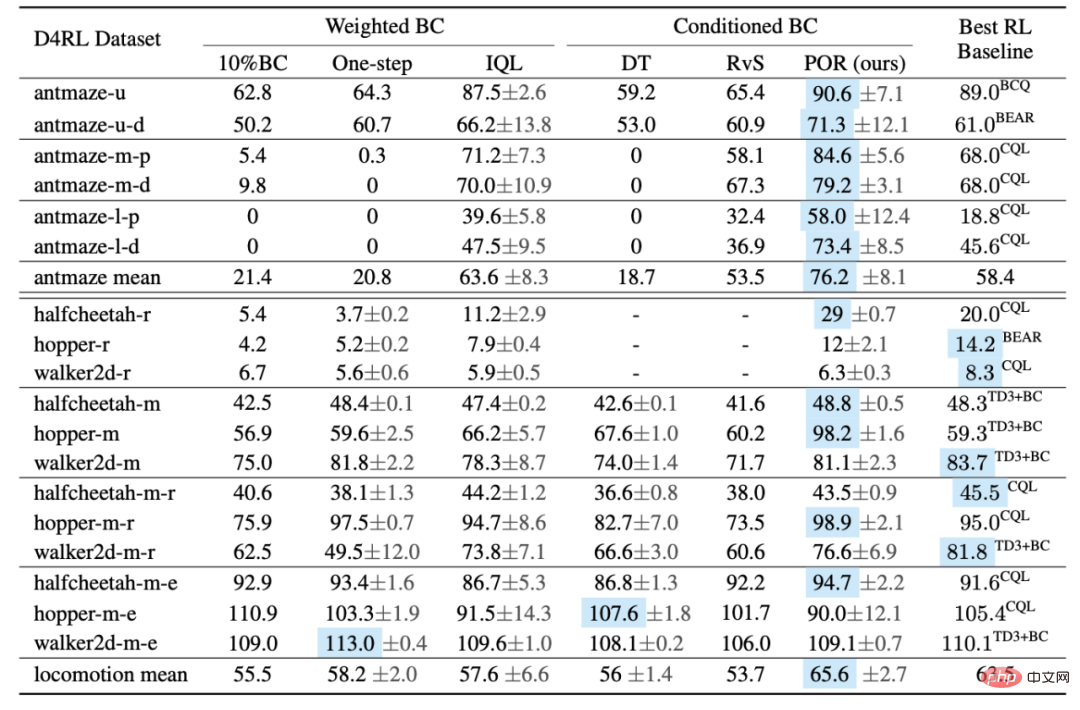

The authors compared POR with other algorithms on the D4RL benchmark. According to the table, POR performs very well on suboptimal data and achieves the best performance among the compared algorithms on the more difficult AntMaze tasks.

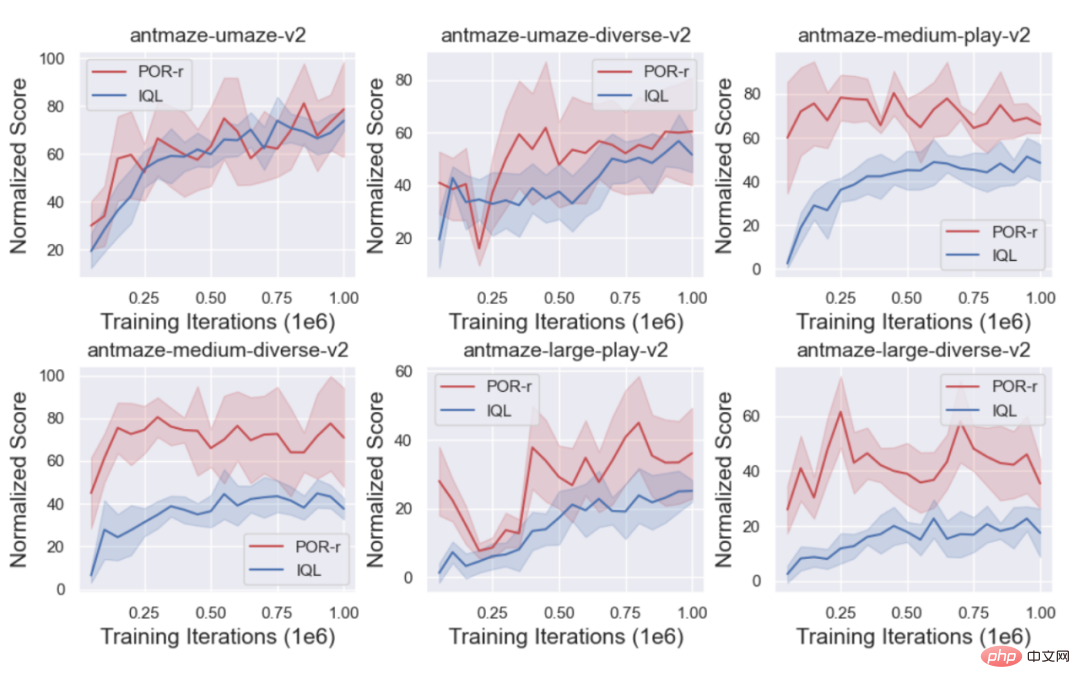

The authors also compared the training curves of POR (state-stitching) and IQL (action-stitching) to show the advantage of state-stitching.

1. Relearning the guide-policy to improve performance.

Large amounts of suboptimal or even random data (D_o) often exist in the real world. Adding them directly to the original dataset being learned from (D_e) may lead to a poor policy. A decoupled algorithm, however, can train different components on different datasets to improve performance. Value-function learning benefits from as much data as possible, because more data makes the value estimate more accurate; policy learning, by contrast, should not bring in D_o.

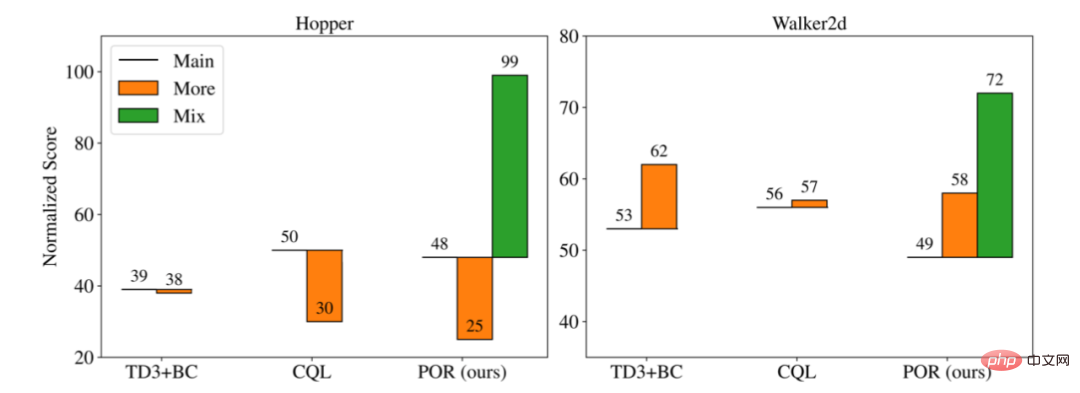

The authors compared three algorithms under the following training settings:

1. Main: learn on the original dataset (D_e) without introducing additional suboptimal data.

2. More: mix the original dataset (D_e) with the new dataset (D_o) and learn from the combined dataset.

3. Mix: a decoupled algorithm can train different components on different datasets, so only POR supports the Mix setting.

As the figure above shows, when new suboptimal data is added and everything is trained together (More), performance may end up better or worse than with the original dataset alone (Main). With decoupled training that relearns the guide-policy on the combined data (D_o and D_e), however, the authors' experiments show that more data strengthens the guide-policy's selectivity and generalization ability while the behavior policy stays unchanged, which in turn improves the execute-policy.
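
The three settings amount to a choice of which data each component is trained on. The helper below is a hypothetical sketch: the function name and the component keys are invented for illustration, and the Mix assignment (value function and guide-policy on the combined data, execute-policy on D_e only) is an assumption read from the description above, not a confirmed detail of the authors' setup.

```python
def assign_datasets(setting, d_e, d_o):
    """Return which transitions each component would be trained on."""
    combined = d_e + d_o
    if setting == "main":
        # Original data only, for every component.
        return {"value": d_e, "guide": d_e, "execute": d_e}
    if setting == "more":
        # Everything is trained on the merged dataset.
        return {"value": combined, "guide": combined, "execute": combined}
    if setting == "mix":
        # Assumption: only a decoupled method can split the data like this.
        return {"value": combined, "guide": combined, "execute": d_e}
    raise ValueError(f"unknown setting: {setting!r}")


# Usage with placeholder transition IDs standing in for real transitions.
d_e = ["e1", "e2"]
d_o = ["o1"]
for name in ("main", "more", "mix"):
    plan = assign_datasets(name, d_e, d_o)
    print(name, {part: len(data) for part, data in plan.items()})
```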

2. When facing a new task, only the guide-policy needs to be relearned, because the execute-policy is task-agnostic.

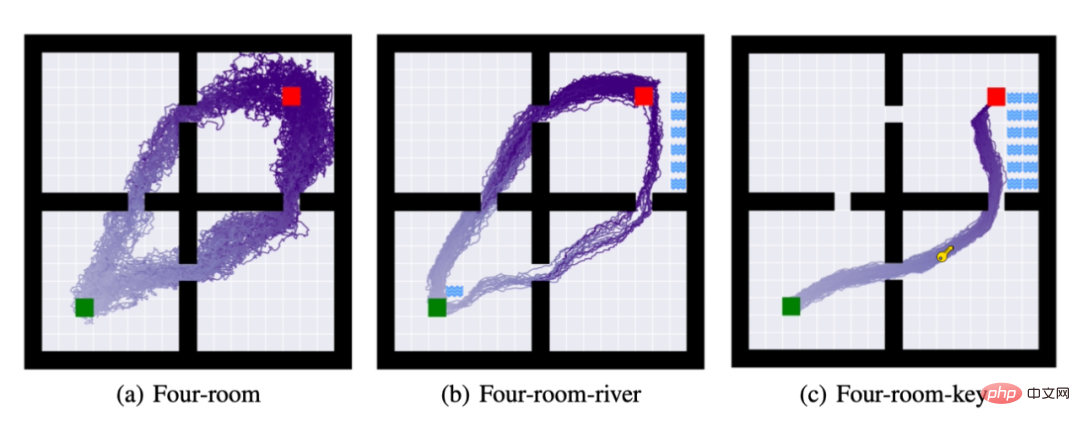

To this end, the authors designed three tasks. (a) Four-room: the agent must move from the green square to the red square. (b) In addition to completing task (a), the agent must not touch the river. (c) In addition to the requirements of tasks (a) and (b), the agent must obtain the key to complete the task.

The figure above shows the trajectories from 50 rollouts of the policy after learning has finished. For tasks (b) and (c), the authors reuse the execute-policy from task (a) and relearn only the guide-policy. As the figure shows, decoupled learning can transfer to a new task with as few computing resources as possible.
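
Reusing one component across tasks can be sketched as swapping a single field of the agent. The class `DecoupledAgent`, the `transfer` helper, and the grid-world policies below are hypothetical; the sketch assumes deterministic grid dynamics where the action equals the displacement between cells.

```python
from dataclasses import dataclass, replace
from typing import Callable, Tuple

Cell = Tuple[int, int]


@dataclass(frozen=True)
class DecoupledAgent:
    guide_policy: Callable[[Cell], Cell]           # task-specific: proposes s'
    execute_policy: Callable[[Cell, Cell], Cell]   # task-agnostic: (s, s') -> action

    def act(self, state: Cell) -> Cell:
        return self.execute_policy(state, self.guide_policy(state))


def transfer(agent: DecoupledAgent, new_guide_policy) -> DecoupledAgent:
    """Keep the execute-policy as is and swap in a guide-policy for the new task."""
    return replace(agent, guide_policy=new_guide_policy)


def displacement(state: Cell, next_state: Cell) -> Cell:
    """Hypothetical executor: the action is the move between adjacent cells."""
    return (next_state[0] - state[0], next_state[1] - state[1])


def guide_task_a(state: Cell) -> Cell:
    """Hypothetical guide for the first task: head right."""
    return (state[0] + 1, state[1])


def guide_task_b(state: Cell) -> Cell:
    """Hypothetical guide for a new task: head up instead, e.g. to take a detour."""
    return (state[0], state[1] + 1)


agent_a = DecoupledAgent(guide_task_a, displacement)
agent_b = transfer(agent_a, guide_task_b)
print(agent_a.act((0, 0)))  # (1, 0)
print(agent_b.act((0, 0)))  # (0, 1)
print(agent_b.execute_policy is agent_a.execute_policy)  # True
```

Only the guide-policy would need new training in this arrangement, which is where the compute saving comes from.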

The above covers the decoupled learning algorithm proposed by JD Technology and Tsinghua University as a new paradigm for offline reinforcement learning.