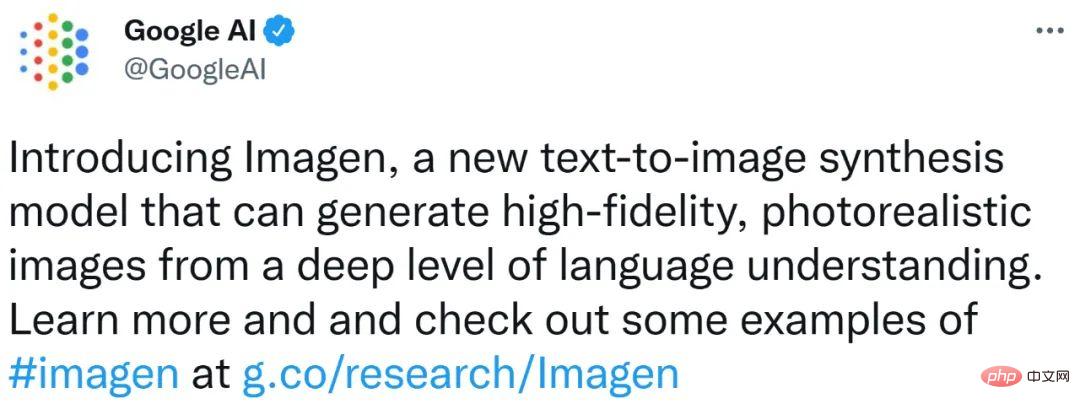

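In recent years, multimodal learning has drawn growing attention, particularly in two directions: text-to-image synthesis and image-text contrastive learning. Some AI models have attracted wide public attention for their applications in creative image generation and editing, such as OpenAI's text-to-image models DALL·E and DALL-E 2, and NVIDIA's GauGAN and GauGAN2.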

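Not to be outdone, Google released its own text-to-image model, Imagen, at the end of May, which appears to push the boundaries of caption-conditional image generation even further.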

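Given nothing more than a description of a scene, Imagen can generate high-quality, high-resolution images, whether or not the scene makes sense in the real world. The figure below shows several examples of images Imagen generated from text, with the corresponding caption beneath each image.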

These impressive generated images naturally raise the question: how does Imagen actually work?

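Recently, developer educator Ryan O'Connor wrote a long post on the AssemblyAI blog, "How Imagen Actually Works", which explains in detail how Imagen works: it gives an overview of Imagen and analyzes its high-level components and how they relate to one another.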

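In this part, the author presents Imagen's overall architecture with a high-level explanation of how it works, and then examines each of Imagen's components in more depth. The animation below shows Imagen's workflow.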

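First, the caption is fed into a text encoder. This encoder converts the text caption into a numerical representation that encapsulates the semantic information in the text. Imagen's text encoder is a Transformer encoder, which uses self-attention to ensure that the text encoding captures how the words in the caption relate to one another.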
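To make "self-attention" concrete, here is a minimal single-head sketch in NumPy. It is illustrative only: the toy sizes and random projection weights are assumptions, and Imagen's real text encoder (the T5 encoder discussed later) is a large, pretrained, multi-layer, multi-head Transformer. The key point is that every token's output is a weighted mix of all tokens, so relationships between words end up in the encoding.

```python
import numpy as np

def self_attention(x: np.ndarray, w_q: np.ndarray, w_k: np.ndarray, w_v: np.ndarray):
    """Single-head scaled dot-product self-attention over one caption.

    x: (seq_len, d_model) token embeddings.
    Returns (outputs, weights); weights[i, j] is how much token i attends to token j.
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v           # project tokens to queries, keys, values
    scores = q @ k.T / np.sqrt(k.shape[-1])       # pairwise token-to-token compatibility
    scores -= scores.max(axis=-1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over the keys
    return weights @ v, weights                   # each output mixes information from all tokens

rng = np.random.default_rng(0)
seq_len, d_model = 5, 8  # toy example: 5 caption tokens, 8-dim embeddings (assumed sizes)
x = rng.normal(size=(seq_len, d_model))
# Hypothetical random weights; a trained encoder would learn these.
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape, attn.shape)
```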

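If Imagen attended only to individual words and not to how they relate to one another, it could still produce high-quality images that capture each element of the caption, but those images would not reflect the caption's meaning appropriately. As the example figure shows, ignoring the relationships between words can produce a drastically different result.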
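A toy illustration of this point, using hypothetical captions and random word vectors (not anything from Imagen): if a caption is reduced to the average of its word vectors, word order and the relationships between words are thrown away, so "a dog chasing a cat" and "a cat chasing a dog" collapse to the same representation.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["a", "dog", "chasing", "cat"]
# Hypothetical toy word vectors; a real encoder would learn its embeddings.
embed = {w: rng.normal(size=4) for w in vocab}

def bag_of_words(caption: str) -> np.ndarray:
    """Average the word vectors, ignoring word order and inter-word relationships."""
    return np.mean([embed[w] for w in caption.split()], axis=0)

a = bag_of_words("a dog chasing a cat")
b = bag_of_words("a cat chasing a dog")
print(np.allclose(a, b))  # the two captions become indistinguishable
```

A contextual encoder such as the self-attention Transformer described above avoids this collapse because each word's vector depends on its neighbors and position.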

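Although the text encoder produces a useful representation of the caption input to Imagen, we still need a way to generate an image that uses this representation, namely an image generator. For this, Imagen uses a diffusion model, a type of generative model that has become popular in recent years thanks to its state-of-the-art performance on a number of tasks.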

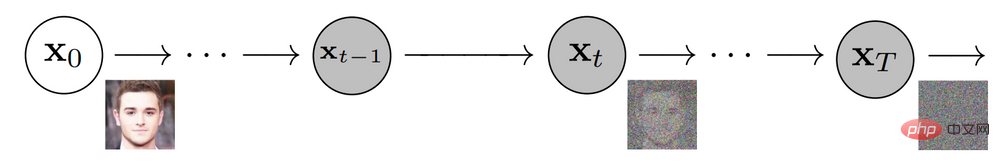

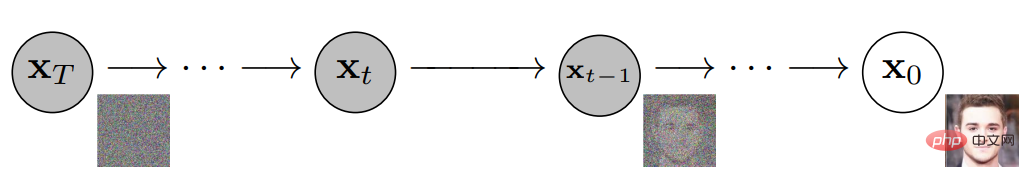

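Diffusion models are trained by corrupting the training data with added noise and then learning to recover the data by reversing this noising process. Given an input image, the diffusion process iteratively corrupts it with Gaussian noise over a series of time steps, ultimately leaving pure Gaussian noise, or "TV static". The accompanying figure shows this iterative noising process.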
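The forward (noising) process can be sketched with the closed-form expression from the DDPM formulation, which jumps directly to step t instead of adding noise one step at a time. Assumptions: the linear beta schedule from the DDPM paper (Imagen's own schedule may differ), 1000 time steps, and a random array standing in for an image scaled to [-1, 1].

```python
import numpy as np

# Assumed DDPM-style linear noise schedule; not necessarily what Imagen uses.
T = 1000
betas = np.linspace(1e-4, 0.02, T)       # per-step noise variances
alphas_bar = np.cumprod(1.0 - betas)     # fraction of original signal variance left after t steps

def q_sample(x0: np.ndarray, t: int, rng: np.random.Generator) -> np.ndarray:
    """Sample x_t from the forward process in one shot.

    x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps,  eps ~ N(0, I)
    This matches the distribution of applying t small Gaussian-noise steps in sequence.
    """
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alphas_bar[t]) * x0 + np.sqrt(1.0 - alphas_bar[t]) * eps

rng = np.random.default_rng(0)
x0 = rng.uniform(-1, 1, size=(64, 64, 3))  # stand-in for a 64x64 RGB image in [-1, 1]
x_noisy = q_sample(x0, T - 1, rng)         # at the last step almost no signal remains
print(alphas_bar[0], alphas_bar[T // 2], alphas_bar[-1])
```

Because `alphas_bar` shrinks toward zero, the final sample is essentially pure Gaussian noise, which is the "TV static" endpoint described above.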

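The diffusion model then works backward, learning how to isolate and remove the noise at each time step so as to undo the corruption that was just applied. Once training is complete, the model can be "cut in half": we keep only the learned denoising direction, start from randomly sampled Gaussian noise, and use the diffusion model to gradually denoise it into an image, as the accompanying figure illustrates.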
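Generation can be sketched as DDPM-style ancestral sampling: start from Gaussian noise and repeatedly subtract the noise the model predicts. Assumptions: the same linear schedule as the original DDPM paper, and `predict_noise` is a hypothetical placeholder for the trained denoising network (a U-Net in Imagen); it returns zeros here only so the loop runs, so the output is not a meaningful image.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)   # assumed DDPM linear schedule
alphas = 1.0 - betas
alphas_bar = np.cumprod(alphas)

def predict_noise(x_t: np.ndarray, t: int) -> np.ndarray:
    """Hypothetical stand-in for the trained denoiser.

    A real model would estimate the noise contained in x_t at step t.
    """
    return np.zeros_like(x_t)

def sample(shape, rng: np.random.Generator) -> np.ndarray:
    """Ancestral sampling: begin at x_T ~ N(0, I) and denoise one step at a time."""
    x = rng.standard_normal(shape)
    for t in reversed(range(T)):
        eps_hat = predict_noise(x, t)
        # Mean of x_{t-1}: remove the predicted noise and rescale.
        mean = (x - betas[t] / np.sqrt(1.0 - alphas_bar[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            # Inject fresh noise with variance beta_t (one of the variance choices in DDPM).
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(shape)
        else:
            x = mean  # no noise is added at the final step
    return x

img = sample((64, 64, 3), np.random.default_rng(0))
print(img.shape)
```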

In summary, the trained diffusion model starts from Gaussian noise and iteratively generates images similar to the training images. Note that there is no control over what the output image actually depicts: we simply feed Gaussian noise into the model, and it outputs a random image that looks as if it belongs to the training dataset.

However, the goal is to create images that encapsulate the semantic information of the caption input to Imagen, so we need a way to incorporate the caption into the diffusion process. How can this be done?

As mentioned above, the text encoder produces a representative caption encoding, which is in fact a sequence of vectors. To inject this encoded information into the diffusion model, these vectors are pooled together and the diffusion model is conditioned on the result. By conditioning on this vector, the diffusion model learns how to adapt its denoising process to produce images that match the caption well. The accompanying figure visualizes this process.
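A minimal sketch of the pool-then-condition idea. Assumptions, all labeled: mean pooling over tokens, a hypothetical learned projection `w_proj`, and adding the result to a sinusoidal time-step embedding that the denoiser would receive at every step. The Imagen paper reportedly combines a pooled text embedding with the time-step embedding and also uses cross-attention over the full token sequence; this sketch shows only a simplified pooled path and is not Imagen's code.

```python
import numpy as np

def timestep_embedding(t: int, dim: int) -> np.ndarray:
    """Transformer-style sinusoidal embedding of the diffusion time step."""
    half = dim // 2
    freqs = np.exp(-np.log(10000.0) * np.arange(half) / half)
    angles = t * freqs
    return np.concatenate([np.sin(angles), np.cos(angles)])

def conditioning_vector(text_encoding: np.ndarray, t: int, w_proj: np.ndarray) -> np.ndarray:
    """Fold the caption encoding into the per-step conditioning signal.

    text_encoding: (num_tokens, d_text) sequence of vectors from the text encoder.
    Tokens are mean-pooled (assumption), projected to the time-embedding size
    with the hypothetical matrix w_proj, and added to the time-step embedding.
    """
    pooled = text_encoding.mean(axis=0)  # (d_text,)
    return timestep_embedding(t, w_proj.shape[1]) + pooled @ w_proj

rng = np.random.default_rng(0)
text_encoding = rng.normal(size=(12, 32))  # toy caption: 12 tokens, 32-dim encoding
w_proj = rng.normal(size=(32, 16)) * 0.1   # hypothetical learned projection
cond = conditioning_vector(text_encoding, t=500, w_proj=w_proj)
print(cond.shape)
```

Because the same caption vector is added at every time step, the denoiser can steer each denoising step toward images consistent with the caption.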

Because the image generator, or base model, outputs a small 64x64 image, Imagen uses super-resolution models to intelligently upsample it to the final 1024x1024 version.

For the super-resolution model, Imagen again uses a diffusion model. The overall process is essentially the same as for the base model, except that it is conditioned not only on the caption encoding but also on the smaller image being upsampled. The accompanying figure visualizes the whole process.
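One common way to condition a super-resolution diffusion model on the smaller image is to resize it to the target resolution and stack it onto the noisy image along the channel axis; this is an assumption about the mechanism (as in SR3-style cascaded models), and nearest-neighbor resizing is chosen only for simplicity. Caption conditioning would be added separately, as in the base model.

```python
import numpy as np

def upsample_nearest(img: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbor upsampling of an (H, W, C) image by an integer factor."""
    return img.repeat(factor, axis=0).repeat(factor, axis=1)

def sr_denoiser_input(x_t: np.ndarray, low_res: np.ndarray) -> np.ndarray:
    """Build the input for one super-resolution denoising step.

    x_t: noisy high-resolution image at the current time step, (H, W, C).
    low_res: the smaller image being upsampled, (h, w, C).
    The low-res image is resized to (H, W) and concatenated on the channel axis,
    so the network sees both what it must denoise and what the image should look like.
    """
    factor = x_t.shape[0] // low_res.shape[0]
    return np.concatenate([x_t, upsample_nearest(low_res, factor)], axis=-1)

rng = np.random.default_rng(0)
low_res = rng.uniform(-1, 1, size=(64, 64, 3))  # stand-in for the base model's 64x64 output
x_t = rng.standard_normal((256, 256, 3))        # noisy 256x256 sample at some time step
inp = sr_denoiser_input(x_t, low_res)
print(inp.shape)
```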

The output of this super-resolution model is not the final output but a medium-sized (256x256) image. To upscale this image to the final 1024x1024 resolution, a second super-resolution model is used. The two super-resolution architectures are roughly the same, so the second one is not described again. The output of the second super-resolution model is Imagen's final output.
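The overall cascade can be summarized as a three-stage pipeline: 64x64 base generation, then 64 to 256, then 256 to 1024. In this sketch `base_model` and `super_resolution` are hypothetical placeholders that only reproduce the output shapes (the super-resolution stand-in just repeats pixels); in Imagen each stage is a caption-conditioned diffusion model.

```python
import numpy as np

def base_model(caption_encoding: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for the caption-conditioned 64x64 base diffusion model."""
    return rng.uniform(-1, 1, size=(64, 64, 3))

def super_resolution(img: np.ndarray, caption_encoding: np.ndarray, out_size: int) -> np.ndarray:
    """Hypothetical stand-in for a caption- and image-conditioned SR diffusion model.

    Placeholder behavior: plain pixel repetition, no actual denoising.
    """
    factor = out_size // img.shape[0]
    return img.repeat(factor, axis=0).repeat(factor, axis=1)

def imagen_cascade(caption_encoding: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    img = base_model(caption_encoding, rng)                # 64x64
    img = super_resolution(img, caption_encoding, 256)     # 64x64 -> 256x256
    img = super_resolution(img, caption_encoding, 1024)    # 256x256 -> 1024x1024
    return img

rng = np.random.default_rng(0)
caption_encoding = rng.normal(size=(12, 32))  # toy caption encoding (assumed shape)
final = imagen_cascade(caption_encoding, rng)
print(final.shape)
```

Splitting the work this way lets the base model focus on composition at low resolution while the super-resolution stages add detail.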

It is difficult to say exactly why Imagen performs better than DALL-E 2. However, a significant portion of the performance gap stems from differences in how captions and prompts are encoded. DALL-E 2 uses a contrastive objective (essentially CLIP) to determine how closely a text encoding relates to an image. The text and image encoders adjust their parameters so that the cosine similarity of matching caption-image pairs is maximized, while the cosine similarity of non-matching caption-image pairs is minimized.
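The contrastive objective described above can be sketched as the symmetric cross-entropy (InfoNCE) loss over a batch of caption-image pairs, in the style of CLIP. Assumptions: random toy embeddings instead of real encoder outputs, and a fixed temperature of 0.07 (CLIP learns its temperature during training).

```python
import numpy as np

def log_softmax(z: np.ndarray, axis: int) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)  # numerical stability
    return z - np.log(np.exp(z).sum(axis=axis, keepdims=True))

def clip_loss(text_emb: np.ndarray, image_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric contrastive loss for a batch of N caption-image pairs.

    Row i of text_emb matches row i of image_emb; every other combination in the
    batch is treated as a non-matching pair.
    """
    t = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    v = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    logits = t @ v.T / temperature   # (N, N) scaled cosine similarities
    idx = np.arange(len(logits))
    loss_text = -log_softmax(logits, axis=1)[idx, idx].mean()   # caption -> image
    loss_image = -log_softmax(logits, axis=0)[idx, idx].mean()  # image -> caption
    return float((loss_text + loss_image) / 2)

rng = np.random.default_rng(0)
text = rng.normal(size=(4, 16))
matched = text + 0.05 * rng.normal(size=(4, 16))  # image embeddings aligned with their captions
unmatched = rng.normal(size=(4, 16))              # unrelated image embeddings
print(clip_loss(text, matched), clip_loss(text, unmatched))
```

Minimizing this loss raises the diagonal (matching) similarities and lowers the off-diagonal ones, which is exactly the maximize/minimize behavior described above.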

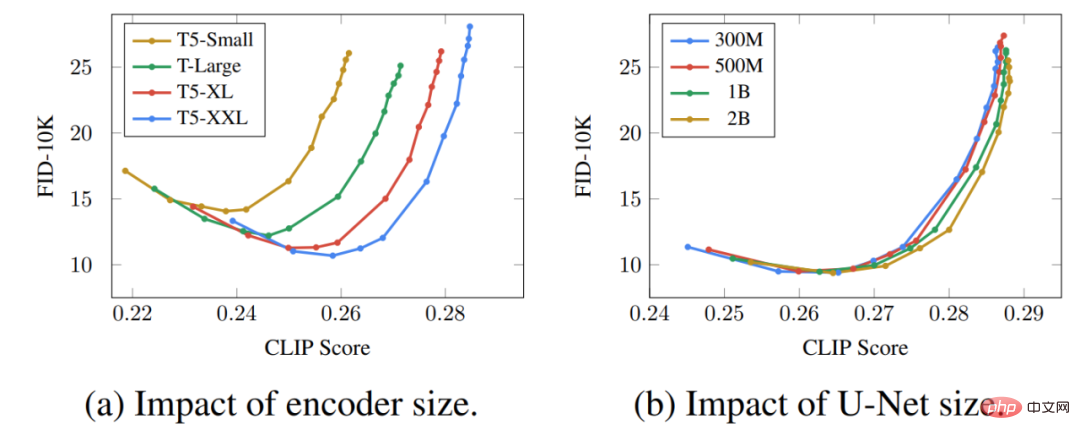

Much of this gap likely stems from the fact that Imagen's text encoder is much larger, and trained on more data, than DALL-E 2's text encoder. As evidence for this hypothesis, we can examine how Imagen's performance changes as the text encoder is scaled up. Here are the Pareto curves for Imagen's performance:

The effect of scaling up the text encoder is surprisingly large, while the effect of scaling up the U-Net is surprisingly small. This result suggests that relatively simple diffusion models can produce high-quality results as long as they are conditioned on a strong encoding.

Given that the T5 text encoder is much larger than the CLIP text encoder, and that text-only natural-language training data is necessarily richer than image-caption pairs, much of the performance gap is likely attributable to this difference.

In addition, the author lists several key takeaways about Imagen, including the following:

These insights provide valuable directions for researchers working on diffusion models, and they are useful beyond the text-to-image subfield.
