Why must Python big data use Numpy Array?

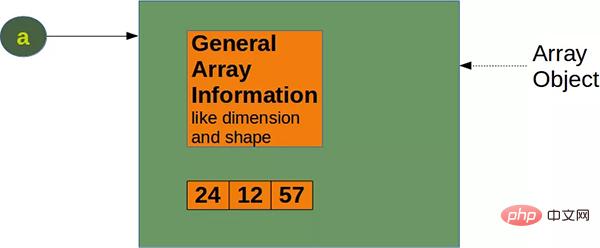

NumPy is the core library for scientific computing in Python. It provides a highly efficient array object, along with tools for working with these arrays. A NumPy array holds many values, all of the same type.
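You can see the "same type" rule in an array's `dtype`. In the minimal sketch below (the values are arbitrary), mixing integers and a float produces a single `float64` array. Assigning a float into an integer array converts it silently instead of storing a float.

```python
import numpy as np

# Integers and a float in one array: everything is converted to one common type.
mixed = np.array([1, 2.5, 3])
print(mixed.dtype)      # float64
print(mixed.tolist())   # [1.0, 2.5, 3.0]

# An integer array keeps its integer type, so the float 2.7 is truncated to 2.
ints = np.array([1, 2, 3])
ints[0] = 2.7
print(ints.dtype, ints.tolist())
```

The integer dtype printed on the last line depends on the platform and NumPy version (for example `int64` or `int32`). The stored values are `[2, 2, 3]` in either case.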

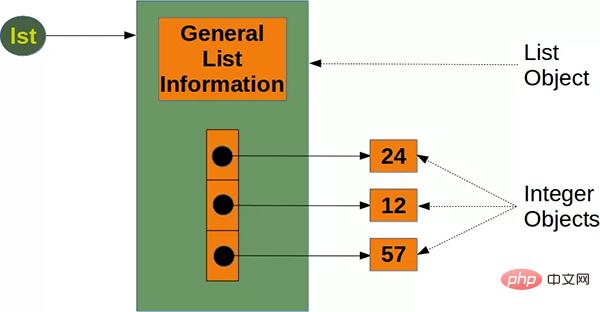

Python's built-in `list` type is one of the most commonly used data types. A list can grow or shrink and can hold elements of different types, which is very convenient.
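A list, by contrast, accepts any mix of objects and can change size in place. Here is a small illustrative sketch with arbitrary contents:

```python
# A list can hold objects of different types...
items = [1, "two", 3.0]
# ...and can grow or shrink in place.
items.append([4, 5])
items.pop(0)

print(items)                               # ['two', 3.0, [4, 5]]
print([type(x).__name__ for x in items])   # ['str', 'float', 'list']
```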

So what is the difference between lists and NumPy arrays, and why should we use NumPy arrays when processing big data? In short: performance.

NumPy data structures have the advantage in three areas:

1. Memory size: NumPy data structures take up less memory.

2. Performance: NumPy's core is implemented in C, so operations on arrays run faster than equivalent Python loops over lists.

3. Built-in operations: NumPy provides optimized algebraic and other operations that work on whole arrays at once.
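Point 3 can be sketched as follows. The snippet computes a total, a mean and a dot product with NumPy's built-in methods. The `prices` and `weights` values are hypothetical, made up for illustration. A pure-Python version of the dot product is included for comparison, and it needs an explicit loop.

```python
import numpy as np

# Hypothetical example data
prices = np.array([10.0, 10.5, 9.8, 11.2])
weights = np.array([0.1, 0.2, 0.3, 0.4])

total = prices.sum()          # sum of all prices (about 41.5)
average = prices.mean()       # arithmetic mean (about 10.375)
weighted = prices @ weights   # dot product (about 10.52)

# The same dot product with plain lists requires a Python-level loop
weighted_py = sum(p * w for p, w in zip(prices.tolist(), weights.tolist()))
print(total, average, weighted, weighted_py)
```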

The following paragraphs explain these advantages of NumPy arrays over lists for big data processing.

Used appropriately, NumPy arrays can reduce memory usage considerably compared with lists: by up to 20 times, depending on the element type.
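The saving depends on the element type. The sketch below prints the data-buffer size of a one-million-element array for several integer types. These dtypes were chosen here for illustration and are not taken from the original article.

A list spends roughly 8 + 28 = 36 bytes per small integer:
- An `int16` array (2 bytes per element) comes out near the 20x figure (36 / 2 = 18).
- `int64` (8 bytes per element) saves closer to 4.5x.

Smaller types only work if every value fits in their range.

```python
import numpy as np

n = 1_000_000
# Smaller integer types hold a narrower range of values:
# int8: -128..127, int16: -32768..32767, int32: about +/-2.1 billion.
sizes = {}
for dtype in (np.int64, np.int32, np.int16, np.int8):
    arr = np.zeros(n, dtype=dtype)
    sizes[arr.dtype.name] = arr.nbytes   # n * itemsize bytes of raw data

for name, nbytes in sizes.items():
    print(f"{name}: {nbytes:,} bytes ({nbytes // n} per element)")
```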

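In a native Python list, each element is a reference to a separate object. On a 64-bit CPython build, each reference takes 8 bytes, and the object it points to takes space of its own: a small integer object occupies 28 bytes. The size of a list of $n$ integers is therefore approximately:

$$\text{size}_{\text{list}} \approx h_{\text{list}} + 8n + 28n \ \text{bytes}$$

where $h_{\text{list}}$ is the fixed overhead of the list object itself.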
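You can check these per-element costs with `sys.getsizeof`. This sketch assumes a 64-bit CPython build, and exact sizes vary between Python versions and platforms. In CPython, `[None] * k` allocates exactly `k` slots, so the difference between two such lists isolates the cost of one reference. Calling `sys.getsizeof` on an int shows the size of the object the reference points to.

```python
import sys

# [None] * k allocates exactly k slots in CPython, so the difference is one reference.
per_reference = sys.getsizeof([None] * 10) - sys.getsizeof([None] * 9)

# Size of one int object (typically 28 bytes for small ints on 64-bit CPython).
per_int = sys.getsizeof(1000)

print(per_reference, per_int)
```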

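NumPy avoids most of this overhead, because an array stores the raw values in a single data buffer instead of keeping a separate object for each element. For example, a NumPy array of $n$ 64-bit integers requires approximately:

$$\text{size}_{\text{array}} \approx h_{\text{array}} + 8n \ \text{bytes}$$

where $h_{\text{array}}$ is the fixed overhead of the array object.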

The larger the array, the more memory you save. A list spends about 8 + 28 = 36 bytes per integer, while an `int64` array spends 8, a difference of about 28 bytes per element. For an array with 1 billion elements, that adds up to roughly 28 GB.
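The sketch below compares both layouts directly, assuming 64-bit CPython and an `int64` array. For the list, it counts the list object plus every int it references. For the array, it counts only the raw data buffer (`nbytes`) and ignores the array's small fixed header. CPython shares cached small ints (-5 to 256), so the list figure slightly over-counts those. The saving grows roughly linearly with the number of elements.

```python
import sys
import numpy as np

def list_bytes(values):
    # The list object (header + one reference per slot) plus each int object it refers to.
    return sys.getsizeof(values) + sum(sys.getsizeof(v) for v in values)

savings = []
for n in (1_000, 100_000, 1_000_000):
    lst = list(range(n))
    arr = np.arange(n, dtype=np.int64)
    lb = list_bytes(lst)
    saved = lb - arr.nbytes   # arr.nbytes == n * 8
    savings.append(saved)
    print(f"n={n:,}: list ~{lb:,} B, array {arr.nbytes:,} B, saved ~{saved:,} B")
```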

The following script builds two sequences of the same length and adds them element by element. It does this once with native Python lists and once with NumPy arrays, so you can see the performance gap between them.

```python
import time

import numpy as np

size_of_vec = 1000

def pure_python_version():
    t1 = time.perf_counter()
    # Build two native Python lists and add them element by element
    X = list(range(size_of_vec))
    Y = list(range(size_of_vec))
    Z = [X[i] + Y[i] for i in range(len(X))]
    return time.perf_counter() - t1

def numpy_version():
    t1 = time.perf_counter()
    # Build two NumPy arrays and add them in a single vectorized operation
    X = np.arange(size_of_vec)
    Y = np.arange(size_of_vec)
    Z = X + Y
    return time.perf_counter() - t1

t1 = pure_python_version()
t2 = numpy_version()

print(t1, t2)
print("Numpy is in this example " + str(t1 / t2) + " faster!")
```

The results are as follows:

```
0.00048732757568359375 0.0002491474151611328
Numpy is in this example 1.955980861244019 faster!
```

In this run, NumPy was about 1.96 times faster than the pure-Python version.

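Notice also that NumPy arrays can be added directly with `X + Y`, which adds them element by element. Native lists cannot do this: `+` concatenates two lists instead, so element-wise addition needs an explicit loop or comprehension. This is the advantage of NumPy's built-in operations.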
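The difference is easy to see side by side. In this small sketch with arbitrary values, `+` on two lists joins them together, whereas `+` on two arrays adds matching elements.

```python
import numpy as np

a_list = [1, 2, 3]
b_list = [10, 20, 30]

concatenated = a_list + b_list                          # [1, 2, 3, 10, 20, 30]
summed_list = [x + y for x, y in zip(a_list, b_list)]   # [11, 22, 33], needs a loop

a = np.array(a_list)
b = np.array(b_list)
summed_array = a + b                                    # array([11, 22, 33]), no loop

print(concatenated, summed_list, summed_array)
```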

A single timing is easily distorted by noise, so we repeat the experiment several times to check that the advantage holds consistently.

```python
import numpy as np
from timeit import Timer

size_of_vec = 1000

# Inputs are created once, outside the timed functions
X_list = list(range(size_of_vec))
Y_list = list(range(size_of_vec))
X = np.arange(size_of_vec)
Y = np.arange(size_of_vec)

def pure_python_version():
    # Element-wise addition with a Python-level loop
    Z = [X_list[i] + Y_list[i] for i in range(len(X_list))]

def numpy_version():
    # Element-wise addition done inside NumPy's compiled code
    Z = X + Y

timer_obj1 = Timer("pure_python_version()",
                   "from __main__ import pure_python_version")
timer_obj2 = Timer("numpy_version()",
                   "from __main__ import numpy_version")

# Total time for 10 calls of each function
print(timer_obj1.timeit(10))
print(timer_obj2.timeit(10))  # NumPy: much smaller

# Three rounds of 10 calls each, to check that the gap is consistent
print(timer_obj1.repeat(repeat=3, number=10))
print(timer_obj2.repeat(repeat=3, number=10))
```

The results are as follows:

```
0.0029753120616078377
0.00014940369874238968
[0.002683573868125677, 0.002754641231149435, 0.002803879790008068]
[6.536301225423813e-05, 2.9387418180704117e-05, 2.9171351343393326e-05]
```

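The NumPy timings (the second and fourth lines) are consistently far smaller than the pure-Python ones, by roughly 20 to 100 times, so the advantage is not a one-off. Unlike the first script, this version creates the inputs outside the timed functions and times repeated calls, so it measures only the addition itself.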

So if you work with large datasets, such as financial or stock market data, NumPy can save a lot of memory and deliver much better performance.

