Crawler & Problem Solving & Thinking

I started learning Python only recently, and I have been looking for small tasks to practice on, hoping to keep exercising my problem-solving ability along the way. This little crawler comes from a course on MOOC. What I record here are the problems I ran into while learning, how I solved them, and some thoughts that go beyond the crawler itself.

The task is to write a small crawler. The main reason I chose it for practice is that big data is so hot right now, just like the current weather in Wuhan. Data is to "big data" what weapons are to soldiers, or what bricks and tiles are to high-rise buildings. Without data, "big data" is just a castle in the air that cannot be put into practice. Where does the data come from? There are two sources: you can take it from yourself, or you can take it from others. The first needs no explanation. The second means taking it from others, and "others" here refers to the Internet.

First of all, we need to understand what a crawler is: a program or script that automatically captures World Wide Web information according to certain rules (from Baidu Encyclopedia). In other words, it visits a page, saves the content of that page, filters out the content you are interested in from the saved page, and then stores that content separately. In real life we often do this by hand: on a boring afternoon, we enter an address in the browser to open a page, come across an article or paragraph of interest, select it, and copy and paste it into a Word document. If we repeat this not for one page but for millions of pages, the amount of data we hold grows larger and larger. We call this process "data collection".
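The visit, save, filter, and store steps described above can be sketched in a few lines of Python. This is only a minimal illustration using the standard library, not the crawler from the course: the names `TitleAndLinkParser`, `fetch_page`, `extract_interesting`, and `crawl` are made up for this example, and treating the page title and links as the "content of interest" is an assumption.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TitleAndLinkParser(HTMLParser):
    """Collects the page title and every href found in <a> tags."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:  # skip <a> tags that have no href
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch_page(url, timeout=10):
    """Visit the page and return its HTML as text (assumes UTF-8)."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


def extract_interesting(html):
    """Filter the saved page down to the parts we care about."""
    parser = TitleAndLinkParser()
    parser.feed(html)
    return {"title": parser.title.strip(), "links": parser.links}


def crawl(urls, fetch=fetch_page):
    """Repeat the same steps for every URL and store each result separately."""
    results = {}
    for url in urls:
        results[url] = extract_interesting(fetch(url))
    return results
```

Calling `crawl` with a list of addresses would do for each page what we did by hand in the browser. The `fetch` parameter is there so the download step can be swapped out, for example to try the filtering logic on a saved HTML string without touching the network.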

The advantages of a crawler are automation and batch processing. There is a common misunderstanding here. Before I came into contact with crawlers, I thought they could crawl things that I "couldn't see". Later I realized that crawlers crawl exactly the things that I "can see".

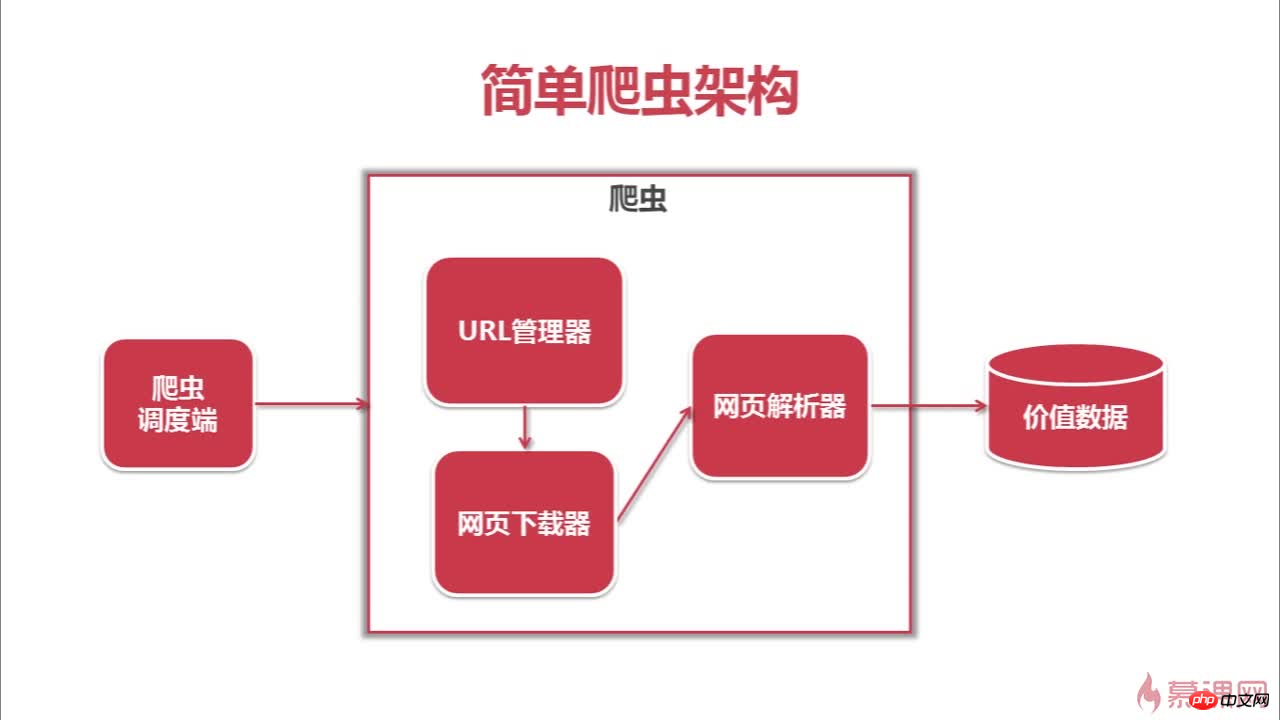

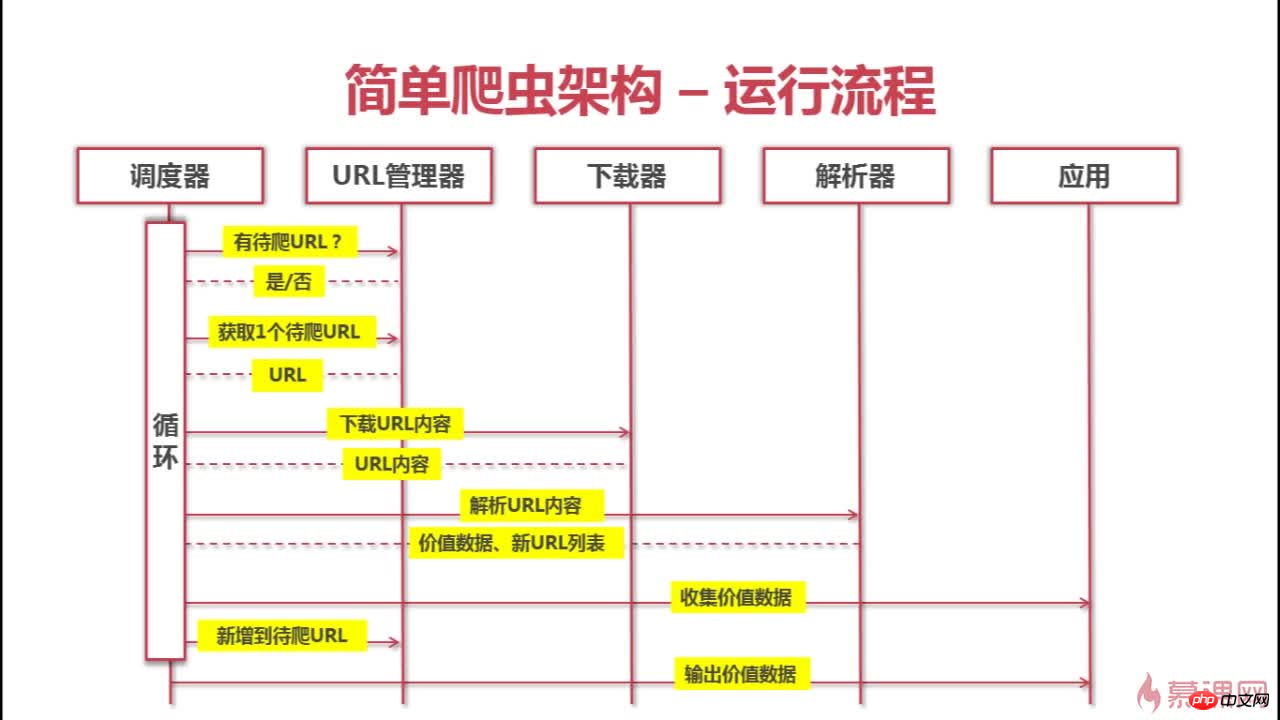

The following is the architecture and crawling process of this crawler
