How to operate js array to remove duplicates

Array deduplication is a perennial topic that comes up both in interviews and in day-to-day work. There are many ways to do it, and it is hard to say which one is best; choose according to your actual needs. This article lists some common deduplication methods, along with their advantages, disadvantages and applicable occasions. Please correct me if there are any mistakes.

1. Double loop comparison method:

const array = [1, NaN, '1', null, /a/, 1, undefined, null, NaN, '1', {}, /a/, [], undefined, {}, []];function uniqueByCirculation(arr) {

const newArr = []; let isRepet = false; for(let i=0;i < arr.length; i++) { for(let j=0;j < newArr.length; j++) { if(arr[i] === newArr[j]) {

isRepet = true;

}

}; if(!isRepet) {

newArr.push(arr[i]);

};

}; return newArr;

}const uniquedArr = uniqueByCirculation(array);

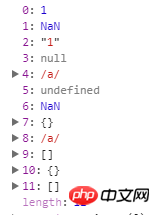

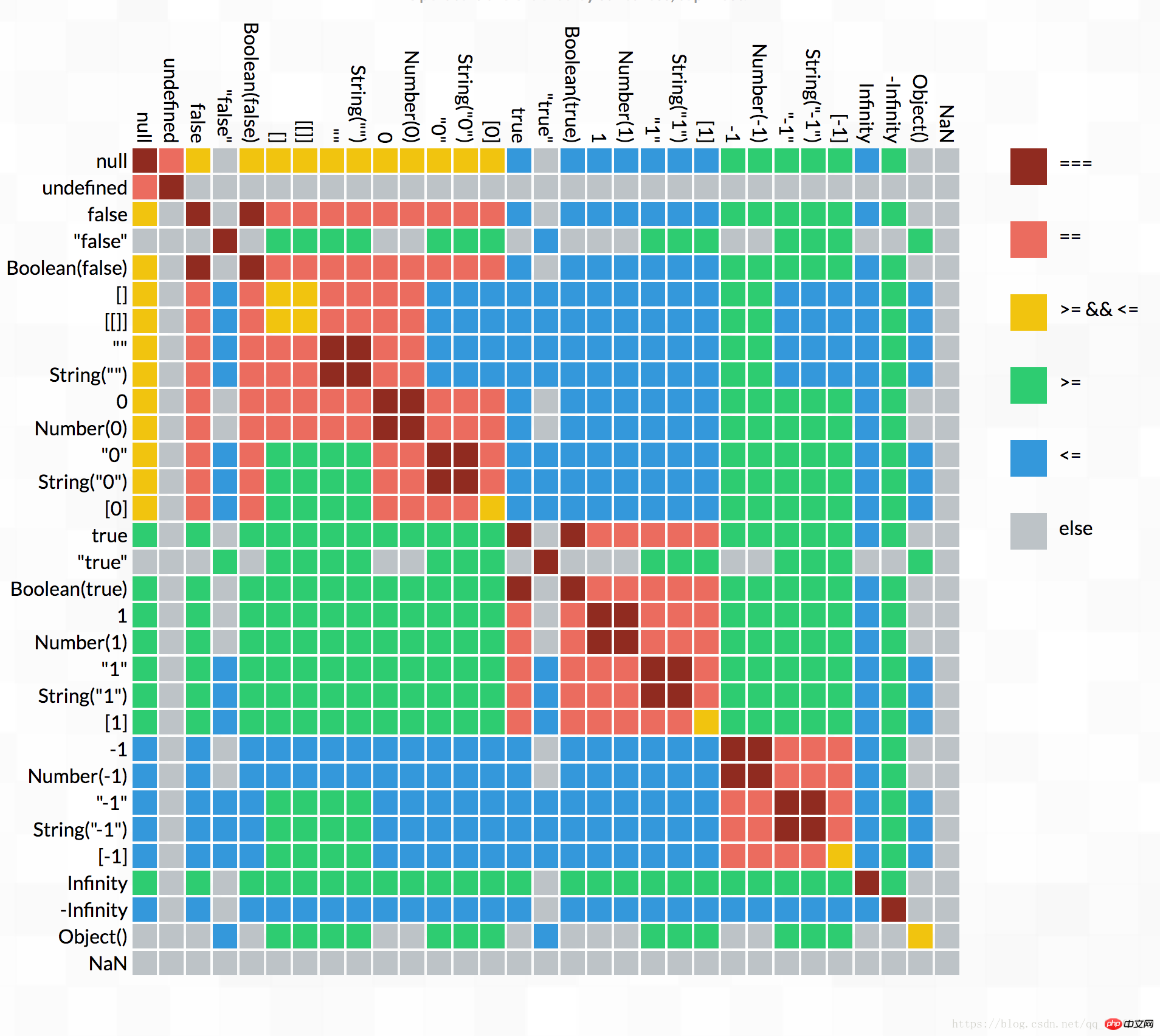

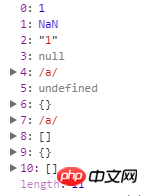

console.log(uniquedArr); Result:  , from the result it can be seen that Array, Object and RegExp are retained. NaN is not deduplicated. The reason involves the comparison mechanism of "===". For details, please read:

, from the result it can be seen that Array, Object and RegExp are retained. NaN is not deduplicated. The reason involves the comparison mechanism of "===". For details, please read:

The time complexity of this method is O(N²) in the worst case and the space complexity is O(N). Applicable occasions: the data types are simple and the amount of data is small.
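To see how the cost of the double loop grows with the input, here is a minimal sketch that counts the "===" comparisons it performs. The names countComparisons and distinct are hypothetical helpers introduced only for this illustration; the loop body mirrors the double-loop approach with a counter added.

```javascript
// Counts how many === comparisons the double-loop approach performs.
// countComparisons and distinct are illustrative names, not part of the article's code.
function countComparisons(arr) {
  const newArr = [];
  let comparisons = 0;
  for (let i = 0; i < arr.length; i++) {
    let isRepeat = false;
    for (let j = 0; j < newArr.length; j++) {
      comparisons++;
      if (arr[i] === newArr[j]) {
        isRepeat = true;
        break;
      }
    }
    if (!isRepeat) newArr.push(arr[i]);
  }
  return comparisons;
}

// Build an array of n distinct numbers: the worst case, since nothing is ever skipped.
const distinct = n => Array.from({ length: n }, (_, i) => i);

console.log(countComparisons(distinct(10)));  // 45
console.log(countComparisons(distinct(100))); // 4950
```

With N distinct elements the count is N(N-1)/2, so making the input ten times larger makes the work roughly a hundred times larger. That is why this approach is best kept for small arrays.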

2. indexOf method:

const array = [1, NaN, '1', null, /a/, 1, undefined, null, NaN, '1', {}, /a/, [], undefined, {}, []];function uniqueByIndexOf(arr) { return arr.filter((e, i) => arr.indexOf(e) === i);

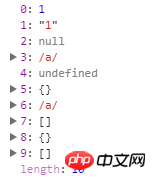

}const uniquedArr = uniqueByIndexOf(array);console.log(uniquedArr); Result:

The code is simple and crude. Judging from the results, NaN is gone, and Array, Object, and RegExp are retained. , this is because Array.indexOf(NaN) always returns -1, and the value returned by other complex types is always equal to its own index, so this result is obtained. The time and space complexity of this method is the same as that of the double loop, and the applicable situations are similar. Of course, compared with the two, this method is recommended first, after all, the code is short.
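If losing NaN is not acceptable, one possible adjustment is to swap indexOf for findIndex with a comparison that treats NaN as equal to itself. This is a sketch under that assumption, not the article's method; sameValueZero and uniqueByFindIndex are hypothetical names.

```javascript
const sample = [1, NaN, '1', NaN, 1];

// indexOf uses strict equality, so it can never find NaN;
// includes uses the SameValueZero comparison, so it can.
console.log(sample.indexOf(NaN));  // -1
console.log(sample.includes(NaN)); // true

// Hypothetical helper: equal if strictly equal, or if both values are NaN
// (NaN is the only value that is not equal to itself).
const sameValueZero = (a, b) => a === b || (a !== a && b !== b);

// Same filter idea as the indexOf version, but NaN is kept once.
function uniqueByFindIndex(arr) {
  return arr.filter((e, i) => arr.findIndex(x => sameValueZero(x, e)) === i);
}

console.log(uniqueByFindIndex(sample)); // [ 1, NaN, '1' ]
```

The cost is unchanged: findIndex still scans the array for each element, so this variant only changes how NaN is treated, not the complexity.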

3. Object[key] method (my own deep-deduplication version):

const array = [1, '1', NaN, 1, '1',NaN, -0, +0, 0, null, /a/, null, /a/, [], {}, [], {}, [1,2,[2,3]], [1,2,[2,3]], [1,2,[3,2]], undefined,

{a:1,b:[1,2]}, undefined, {b:[2,1],a:1}, [{a:1},2], [2,{a:1}], {a:{b:1,d:{c:2,a:3},c:1},c:1,d:{f:1,b:2}}, {a:{b:1,d:{c:2,a:3},c:1},c:1,d:{f:1,b:2}}];function uniqueByObjectKey(arr) {

const obj = {}; const newArr = []; let key = '';

arr.forEach(e => { if(isNumberOrString(e)) { // 针对number与string和某些不适合当key的元素进行优化

key = e + typeof e;

}else { if(e&&isObject(e)){ // 解决同key同value对象的去重

e = depthSortObject(e);

}

key = JSON.stringify(e) + String(e); //JSON.stringify(e)为了应对数组或对象有子内容,String(e)为了区分正则和空对象{}

} if(!obj[key]) {

obj[key] = key;

newArr.push(e);

}

}); return newArr;

}function isNumberOrString(e){

return typeof e === 'number' || typeof e === 'string';

}function isObject(e){

return e.constructor === Object;

}function depthSortObject(obj){

if(obj.constructor !== Object){ return;

} const newobj = {}; for(const i in obj){

newobj[i] = obj[i].constructor === Object ?

sortObject(depthSortObject(obj[i])) : obj[i];

}

return newobj;

}function sortObject(obj){

const newObj = {}; const objKeys = Object.keys(obj)

objKeys.sort().map((val) => {

newObj[val] = obj[val];

}); return newObj;

}const uniquedArr = uniqueByObjectKey(array);

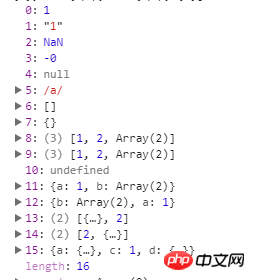

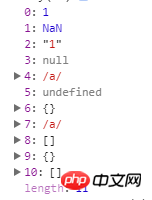

console.log(uniquedArr); Result:  This method got the result of "deep deduplication*", because I I added some type judgments to the function to change the key. If I directly force the object [original key], the numbers and strings will be rounded off, and many types cannot be used as keys. I was fooled by JSON.stringify(/a/) in the process of writing the function. I always thought it was a problem with {}~~. Later, when I output the key, I discovered that JSON.string(/a/) === '{}', and String([]) === ", so I have to use both. Convert and then add to avoid accidents. The space complexity is O(N) and the time complexity is O(N). It is suitable for situations where you want to get the weight in depth (*: the name of the deep deduplication is my own, because the object is itself. Unordered, that is, {a:1,b:2} should be equivalent to {b:2,a:1}, so deep deduplication will be performed even if the internal key:value of the Object are the same)

This method got the result of "deep deduplication*", because I I added some type judgments to the function to change the key. If I directly force the object [original key], the numbers and strings will be rounded off, and many types cannot be used as keys. I was fooled by JSON.stringify(/a/) in the process of writing the function. I always thought it was a problem with {}~~. Later, when I output the key, I discovered that JSON.string(/a/) === '{}', and String([]) === ", so I have to use both. Convert and then add to avoid accidents. The space complexity is O(N) and the time complexity is O(N). It is suitable for situations where you want to get the weight in depth (*: the name of the deep deduplication is my own, because the object is itself. Unordered, that is, {a:1,b:2} should be equivalent to {b:2,a:1}, so deep deduplication will be performed even if the internal key:value of the Object are the same)
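The conversions that motivate the combined key can be checked directly. The sketch below uses a hypothetical helper, makeKey, that mirrors the JSON.stringify(e) + String(e) expression from the function; it is only an illustration of the key format.

```javascript
// Hypothetical helper mirroring the key expression used for non-number, non-string values.
const makeKey = e => JSON.stringify(e) + String(e);

// JSON.stringify alone cannot tell a RegExp from an empty object...
console.log(JSON.stringify(/a/)); // {}
console.log(JSON.stringify({}));  // {}
// ...and String alone cannot tell objects apart, and gives '' for an empty array.
console.log(String({}));          // [object Object]
console.log(String([]) === '');   // true

// Concatenating both conversions separates these cases.
console.log(makeKey(/a/)); // {}/a/
console.log(makeKey({}));  // {}[object Object]
console.log(makeKey([]));  // []

// Nested array content is part of the key, so element order matters.
console.log(makeKey([1, 2, [2, 3]]) === makeKey([1, 2, [3, 2]])); // false

// Without the typeof suffix, 1 and '1' collapse into one property name.
const plain = {};
plain[1] = 'number';
plain['1'] = 'string';
console.log(Object.keys(plain)); // [ '1' ]
```

One limitation worth keeping in mind: only plain objects are passed through the key-sorting step, so objects nested inside arrays keep their original key order and would be treated as different if their keys were written in a different order.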

4. ES6 Set method:

    const array = [1, NaN, '1', null, /a/, 1, undefined, null, NaN, '1', {}, /a/, [], undefined, {}, []];

    function uniqueByES6Set(arr) {
      return Array.from(new Set(arr)); // or: return [...new Set(arr)];
    }

    const uniquedArr = uniqueByES6Set(array);
    console.log(uniquedArr);

Result: [1, NaN, '1', null, /a/, undefined, {}, /a/, [], {}, []].

From the result, the duplicate Array, Object and RegExp values are retained, while NaN is deduplicated. This method relies on Set, the data structure introduced in ES6, which stores unique values and iterates them in insertion order. For details on the Set structure, please refer to: Set and Map - Ruan Yifeng. The space complexity is O(N); the time complexity is O(N) on average in typical engines, where Set lookups are hash-based. This method is very fast; when complex objects should be retained, it is best to use it directly.
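A few small checks make the Set behaviour concrete. This is a minimal sketch with made-up sample values; it only uses the standard Set constructor, has, size and spread.

```javascript
// Set compares values with SameValueZero: NaN equals NaN, and 0 equals -0.
const s = new Set([3, 1, NaN, 3, NaN, 0, -0, 2]);

// Iteration follows insertion order, with later duplicates dropped.
console.log([...s]);     // [ 3, 1, NaN, 0, 2 ]
console.log(s.has(NaN)); // true

// Objects are compared by reference: the same reference twice counts once,
// while a separate literal with identical content counts as a new value.
const shared = {};
console.log(new Set([shared, shared, {}]).size); // 2
```

This is why the duplicate {}, [] and /a/ literals in the test array all survive: each literal creates a new object, and Set never looks inside it.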

5. ES6 Map method:

    const array = [1, NaN, '1', null, /a/, 1, undefined, null, NaN, '1', {}, /a/, [], undefined, {}, []];

    function uniqueByES6Map(arr) {
      const map = new Map();
      // map.set() returns the Map itself (truthy), so unseen elements pass the filter.
      return arr.filter(e => (map.has(e) ? false : map.set(e, 'map')));
    }

    const uniquedArr = uniqueByES6Map(array);
    console.log(uniquedArr);

Result: the same as when using Set. This method mainly relies on Map, another data structure introduced in ES6. A Map stores key-value pairs, and its distinguishing feature is that a key can be of any type. Because data is mapped through hash addresses, each lookup takes O(1) time on average, so the whole pass is O(N) time with O(N) space. However, the actual overhead is larger than that of Set, since a value is stored for every key. It is likewise suitable when complex objects should be retained.
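Because a Map accepts any value as a key, the same pattern can be extended to deduplicate by a derived key, for example an id field on each record. The sketch below is an assumption-laden illustration rather than part of the original method: uniqueBy, keyFn and the users sample data are all hypothetical.

```javascript
// Hypothetical helper: keep the first element seen for each key returned by keyFn.
function uniqueBy(arr, keyFn) {
  const seen = new Map();
  for (const item of arr) {
    const key = keyFn(item);
    if (!seen.has(key)) {
      seen.set(key, item); // first occurrence wins
    }
  }
  // Map iterates in insertion order, so the original order is preserved.
  return [...seen.values()];
}

// Made-up sample records for illustration.
const users = [
  { id: 1, name: 'a' },
  { id: 2, name: 'b' },
  { id: 1, name: 'c' },
];

console.log(uniqueBy(users, u => u.id));
// [ { id: 1, name: 'a' }, { id: 2, name: 'b' } ]
```

Here the stored value does real work, which is the case where Map's extra overhead compared with Set pays off.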

This article covers five common ways to deduplicate arrays. In general, using ES6's Set directly is the fastest. If you want to deduplicate an array of objects by content, you can use the third method. Of course, with slight changes the third method can also produce the same results as the other methods.


