This article summarizes common interview questions on the basics of Java concurrency, as a reference for readers preparing for interviews.

1. What are threads and processes?

1.1. What is a process?

A process is one execution of a program and is the basic unit in which the system runs programs, so a process is dynamic. Running a program on the system is the life of a process, from creation through execution to termination.

In Java, starting the main method actually starts a JVM process, and the thread that runs main is one thread in that process, called the main thread.

As shown in the figure below, Task Manager on Windows lists the processes currently running on the system (each one a running .exe file).

1.2. What is a thread?

Threads are similar to processes, but a thread is a smaller unit of execution than a process. A process can create multiple threads while it runs. Unlike processes, the threads of one process share that process's heap and method area, but each thread has its own program counter, virtual machine stack and native method stack. As a result, creating a thread or switching between threads costs the system much less than doing the same with processes, which is why threads are also called lightweight processes.

Java programs are inherently multi-threaded programs. We can use JMX to see what threads an ordinary Java program has. The code is as follows.

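    import java.lang.management.ManagementFactory;
    import java.lang.management.ThreadInfo;
    import java.lang.management.ThreadMXBean;

    public class MultiThread {
        public static void main(String[] args) {
            // Get the Java thread management MXBean
            ThreadMXBean threadMXBean = ManagementFactory.getThreadMXBean();
            // Monitor and synchronizer details are not needed; fetch only thread and stack information
            ThreadInfo[] threadInfos = threadMXBean.dumpAllThreads(false, false);
            // Iterate over the thread info and print only each thread's ID and name
            for (ThreadInfo threadInfo : threadInfos) {
                System.out.println("[" + threadInfo.getThreadId() + "] " + threadInfo.getThreadName());
            }
        }
    }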

The output of the above program is as follows (the output content may be different, don’t worry too much about the role of each thread below, just know that the main thread executes the main method):

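    [5] Attach Listener // adds events
    [4] Signal Dispatcher // thread that dispatches signals sent to the JVM
    [3] Finalizer // thread that calls objects' finalize methods
    [2] Reference Handler // thread that clears references
    [1] main // main thread, the program entry point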

From the output above we can see that a running Java program consists of the main thread and several other threads running at the same time.

2. Please briefly describe the relationship, differences, advantages and disadvantages between threads and processes?

The relationship between processes and threads from the perspective of JVM

2.1. Illustration of the relationship between processes and threads

The following figure shows the Java memory areas. With it, we can discuss the relationship between threads and processes from the perspective of the JVM. If you are not familiar with the Java memory areas (runtime data areas), you can read this article: "Probably the clearest article that explains the Java memory area"

As the figure above shows, a process can contain multiple threads. The threads share the process's heap and method area (metaspace from JDK 1.8 onward), but each thread has its own program counter, virtual machine stack and native method stack.

Summary: A thread is a smaller unit of execution that a process is divided into. The biggest difference between them is that processes are basically independent of one another, whereas threads are not necessarily so, because threads in the same process are very likely to affect each other. Threads have low execution overhead but are less conducive to resource management and protection; processes are the opposite.

The following expands on this point. Consider these questions: why are the program counter, virtual machine stack and native method stack private to each thread? Why are the heap and method area shared by threads?

2.2. Why is the program counter private?

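The program counter mainly has the following two functions. First, the bytecode interpreter reads instructions in order by changing the program counter, which is how control flow such as sequential execution, branches, loops and exception handling is implemented. Second, under multi-threading the program counter records where the current thread has executed to, so that the thread knows where to resume when it is switched back in. The program counter is therefore private mainly so that each thread can be restored to the correct execution position after a thread switch.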

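2.3. Why are the virtual machine stack and native method stack private?

Each Java method call pushes a stack frame onto the calling thread's virtual machine stack; the frame holds that call's local variables and operand stack. The native method stack plays the same role for native methods. So, to ensure that a thread's local variables cannot be accessed by other threads, the virtual machine stack and native method stack are thread private.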

2.4. A brief understanding of the heap and method area in one sentence

The heap and method area are shared by all threads. The heap is the largest block of memory in the process and is mainly used to store newly created objects (almost all objects are allocated here). The method area mainly stores loaded class information, constants, static variables, code compiled by the just-in-time compiler, and similar data.
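To make the split between shared and private memory concrete, here is a minimal sketch. The class name SharedHeapDemo and the helper runWorkers are hypothetical names chosen for illustration; the counter is an AtomicInteger so that concurrent increments from several threads are not lost.

```java
import java.util.concurrent.atomic.AtomicInteger;

class SharedHeapDemo {
    // A heap object reachable from a static field: every thread sees the same instance.
    private static final AtomicInteger sharedCounter = new AtomicInteger();

    // Hypothetical helper: starts the workers, waits for them, returns the shared total.
    static int runWorkers(int threadCount, int incrementsPerThread) {
        sharedCounter.set(0);
        Thread[] workers = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            workers[i] = new Thread(() -> {
                // Local variable: lives in this thread's own stack frame,
                // so no other thread can read or modify it.
                int localCount = 0;
                while (localCount < incrementsPerThread) {
                    localCount++;
                    sharedCounter.incrementAndGet(); // shared heap state
                }
            });
            workers[i].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return sharedCounter.get();
    }

    public static void main(String[] args) {
        System.out.println("shared total = " + runWorkers(4, 1000)); // shared total = 4000
    }
}
```

Each worker counts to 1000 in its own localCount without any interference, while all four contribute to the single sharedCounter object on the heap.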

3. Talk about the difference between concurrency and parallelism?

4. Why use multi-threading?

Let’s talk about it in general:

Let’s go deeper into the bottom layer of the computer:

5. What problems may be caused by using multi-threading?

The purpose of concurrent programming is to improve a program's execution efficiency and running speed. However, concurrent programming does not always make a program faster, and it can introduce many problems, such as memory leaks, context switching, deadlocks, and resources left idle because of hardware and software limits.

6. Talk about the life cycle and states of threads?

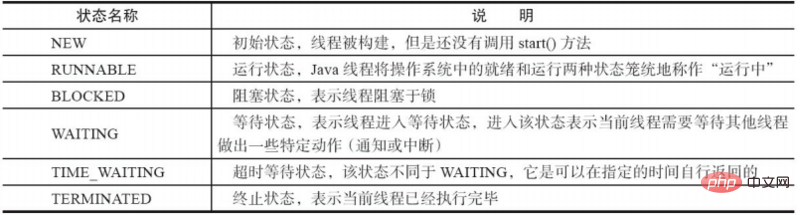

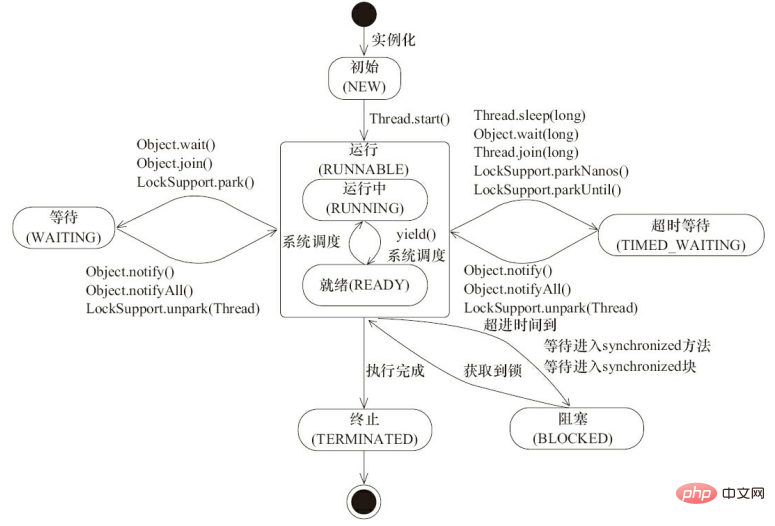

At any given moment in its life cycle, a Java thread can be in only one of 6 different states (image source: "The Art of Java Concurrent Programming", Section 4.1.4).

Threads do not stay in one state throughout their life cycle; they switch between states as the code executes. The state changes of Java threads are shown in the figure below (image source: "The Art of Java Concurrent Programming", Section 4.1.4):

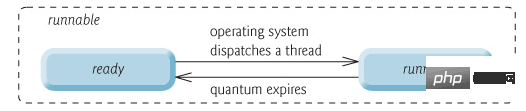

As the figure above shows, a newly created thread is in the NEW state. After start() is called, the thread is ready to run and is in the READY state. A READY thread enters the RUNNING state once it obtains a CPU time slice.

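The operating system hides the READY and RUNNING states from the Java virtual machine (JVM), which can only see the RUNNABLE state (image source: HowToDoInJava: Java Thread Life Cycle and Thread States), so Java generally refers to these two states collectively as the RUNNABLE state.

After a thread calls wait(), it enters the WAITING state. A waiting thread depends on a notification from another thread to return to the runnable state. The TIMED_WAITING state adds a timeout to the waiting state; for example, sleep(long millis) or wait(long millis) puts a Java thread into TIMED_WAITING, and when the timeout expires the thread returns to RUNNABLE. When a thread calls a synchronized method without having obtained the lock, it enters the BLOCKED state. After the thread finishes executing the run() method of its Runnable, it enters the TERMINATED state.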
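The state transitions described above can be observed with Thread.getState(). The following is a minimal sketch, not a definitive test of the scheduler: ThreadStateDemo, awaitState and observeStates are hypothetical names, and awaitState simply polls until the target thread reports the expected state.

```java
import java.util.ArrayList;
import java.util.List;

class ThreadStateDemo {

    // Hypothetical helper: polls until the thread reports the expected state (5 s limit).
    static void awaitState(Thread thread, Thread.State expected) {
        long deadline = System.nanoTime() + 5_000_000_000L;
        while (thread.getState() != expected) {
            if (System.nanoTime() > deadline) {
                throw new IllegalStateException("thread never reached " + expected);
            }
            Thread.yield();
        }
    }

    static List<Thread.State> observeStates() {
        List<Thread.State> seen = new ArrayList<>();
        Object lock = new Object();
        try {
            Thread sleeper = new Thread(() -> {
                try {
                    Thread.sleep(10_000);
                } catch (InterruptedException e) {
                    // interrupted by the observer below; just finish
                }
            });
            seen.add(sleeper.getState());                    // NEW: created, not started
            seen.add(Thread.currentThread().getState());     // RUNNABLE: the caller is running
            sleeper.start();
            awaitState(sleeper, Thread.State.TIMED_WAITING); // inside sleep(long)
            seen.add(sleeper.getState());
            sleeper.interrupt();
            sleeper.join();
            seen.add(sleeper.getState());                    // TERMINATED: run() has returned

            Thread waiter = new Thread(() -> {
                synchronized (lock) {
                    try {
                        lock.wait();
                    } catch (InterruptedException e) {
                        // not expected in this sketch
                    }
                }
            });
            waiter.start();
            awaitState(waiter, Thread.State.WAITING);        // inside wait()
            seen.add(waiter.getState());

            Thread blocked = new Thread(() -> {
                synchronized (lock) {
                    // nothing to do; this thread only contends for the monitor
                }
            });
            synchronized (lock) {
                blocked.start();
                awaitState(blocked, Thread.State.BLOCKED);   // waiting for the monitor we hold
                seen.add(blocked.getState());
                lock.notifyAll();                            // let the waiter finish
            }
            waiter.join();
            blocked.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("observer was interrupted", e);
        }
        return seen;
    }

    public static void main(String[] args) {
        // [NEW, RUNNABLE, TIMED_WAITING, TERMINATED, WAITING, BLOCKED]
        System.out.println(observeStates());
    }
}
```

Note that the Java API exposes only RUNNABLE; READY and RUNNING from the figure are not distinguishable through Thread.getState().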

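7. What is a context switch?

In multi-threaded programs the number of threads is generally greater than the number of CPU cores, and a CPU core can be used by only one thread at any moment. So that all of these threads can make progress, the CPU allocates a time slice to each thread and rotates among them. When a thread's time slice is used up, it returns to the ready state and gives the core to another thread; this process is one context switch.

In short: before the current task's CPU time slice ends and the CPU switches to another task, the task saves its state so that the state can be loaded again the next time the CPU switches back to it. The process from saving a task to loading it again is one context switch.

Context switching is usually computationally intensive. That is, it requires considerable processor time: with tens or hundreds of switches per second, each switch takes time on the order of nanoseconds. Context switching therefore consumes a lot of CPU time for the system and may in fact be the most time-consuming operation in the operating system.

Compared with other operating systems (including other Unix-like systems), Linux has many advantages, one of which is that its context switches and mode switches take very little time.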

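8. What is a thread deadlock? How can deadlock be avoided?

8.1. Understanding thread deadlock

Multiple threads are blocked at the same time, and one or all of them are waiting for a resource to be released. Because the threads are blocked indefinitely, the program cannot terminate normally.

As shown in the figure below, thread A holds resource 2 and thread B holds resource 1, and each wants to acquire the other's resource, so the two threads wait for each other and enter a deadlock.

The following example illustrates thread deadlock; the code simulates the deadlock in the figure above (code from "The Beauty of Concurrent Programming", 《并发编程之美》):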

    public class DeadLockDemo {
        private static final Object resource1 = new Object(); // resource 1
        private static final Object resource2 = new Object(); // resource 2

        public static void main(String[] args) {
            // Thread names are kept as in the original: "线程 1" means "thread 1"
            new Thread(() -> {
                synchronized (resource1) {
                    System.out.println(Thread.currentThread() + "get resource1");
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    System.out.println(Thread.currentThread() + "waiting get resource2");
                    synchronized (resource2) {
                        System.out.println(Thread.currentThread() + "get resource2");
                    }
                }
            }, "线程 1").start();

            // "线程 2" means "thread 2"; it takes the two locks in the opposite order
            new Thread(() -> {
                synchronized (resource2) {
                    System.out.println(Thread.currentThread() + "get resource2");
                    try {
                        Thread.sleep(1000);
                    } catch (InterruptedException e) {
                        e.printStackTrace();
                    }
                    System.out.println(Thread.currentThread() + "waiting get resource1");
                    synchronized (resource1) {
                        System.out.println(Thread.currentThread() + "get resource1");
                    }
                }
            }, "线程 2").start();
        }
    }

Output

    Thread[线程 1,5,main]get resource1
    Thread[线程 2,5,main]get resource2
    Thread[线程 1,5,main]waiting get resource2
    Thread[线程 2,5,main]waiting get resource1

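Thread A (线程 1 in the code) acquires the monitor lock of resource1 through synchronized (resource1) and then sleeps for 1s via Thread.sleep(1000); so that thread B (线程 2 in the code) gets to run and acquire the monitor lock of resource2. When both threads wake up, each tries to acquire the resource held by the other, so they end up waiting for each other, which is a deadlock. The example above satisfies the four necessary conditions for deadlock.

Anyone who has studied operating systems knows that a deadlock requires all four of the following conditions:

Mutual exclusion: a resource can be held by only one thread at any moment.

Request and hold: a thread that is blocked while requesting a resource keeps the resources it already holds.

No preemption: a resource a thread has acquired cannot be taken away by other threads before the thread is done with it; only the thread itself releases it.

Circular wait: the threads form a cycle in which each one waits for a resource held by the next.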
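A deadlock like the one in DeadLockDemo can also be confirmed from inside the JVM. The sketch below is an illustration under assumptions, not part of the original example: DeadlockDetectDemo, lockBoth and deadlockDetected are hypothetical names, a CountDownLatch replaces the 1s sleep so that both threads are guaranteed to hold their first lock, and detection uses ThreadMXBean.findMonitorDeadlockedThreads(), which returns null when no monitor deadlock exists.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.ThreadMXBean;
import java.util.concurrent.CountDownLatch;

class DeadlockDetectDemo {

    // Takes `first`, waits until both threads hold their first lock, then requests `second`.
    private static void lockBoth(Object first, Object second, CountDownLatch bothHoldFirst) {
        synchronized (first) {
            bothHoldFirst.countDown();
            try {
                bothHoldFirst.await();
            } catch (InterruptedException e) {
                return;
            }
            synchronized (second) {
                // not reached: the other thread holds `second` and never releases it
            }
        }
    }

    private static boolean contains(long[] ids, long id) {
        for (long candidate : ids) {
            if (candidate == id) {
                return true;
            }
        }
        return false;
    }

    // Hypothetical helper: creates a two-thread deadlock and reports whether the JVM sees it.
    static boolean deadlockDetected() {
        Object resource1 = new Object();
        Object resource2 = new Object();
        CountDownLatch bothHoldFirst = new CountDownLatch(2);
        Thread a = new Thread(() -> lockBoth(resource1, resource2, bothHoldFirst), "demo-A");
        Thread b = new Thread(() -> lockBoth(resource2, resource1, bothHoldFirst), "demo-B");
        a.setDaemon(true); // daemon threads: the stuck threads do not keep the JVM alive
        b.setDaemon(true);
        a.start();
        b.start();

        ThreadMXBean threadMXBean = ManagementFactory.getThreadMXBean();
        long deadline = System.nanoTime() + 5_000_000_000L; // give up after 5 s
        while (System.nanoTime() < deadline) {
            long[] ids = threadMXBean.findMonitorDeadlockedThreads(); // null when none found
            if (ids != null && contains(ids, a.getId()) && contains(ids, b.getId())) {
                return true;
            }
            Thread.yield();
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println("deadlock detected: " + deadlockDetected());
    }
}
```

The two daemon threads stay deadlocked until the JVM exits, which is acceptable for a demonstration but not something to copy into production code.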

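8.2. How can thread deadlock be avoided?

Breaking any one of the four conditions for deadlock is enough.

Breaking the mutual exclusion condition

We cannot break this condition, because the whole point of using a lock is to make the threads mutually exclusive (critical resources require mutually exclusive access).

Breaking the request-and-hold condition

Request all resources at once.

Breaking the no-preemption condition

When a thread that holds some resources requests further resources and cannot get them, it can voluntarily release the resources it holds.

Breaking the circular wait condition

Prevent it by requesting resources in order: acquire resources in a fixed order and release them in the reverse order.

If we change the code of thread 2 to the following, the deadlock no longer occurs.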

    new Thread(() -> {
        synchronized (resource1) {
            System.out.println(Thread.currentThread() + "get resource1");
            try {
                Thread.sleep(1000);
            } catch (InterruptedException e) {
                e.printStackTrace();
            }
            System.out.println(Thread.currentThread() + "waiting get resource2");
            synchronized (resource2) {
                System.out.println(Thread.currentThread() + "get resource2");
            }
        }
    }, "线程 2").start();

Output

    Thread[线程 1,5,main]get resource1
    Thread[线程 1,5,main]waiting get resource2
    Thread[线程 1,5,main]get resource2
    Thread[线程 2,5,main]get resource1
    Thread[线程 2,5,main]waiting get resource2
    Thread[线程 2,5,main]get resource2

    Process finished with exit code 0

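Why does the code above avoid the deadlock?

Thread 1 first acquires the monitor lock of resource1, so thread 2 cannot acquire it. Thread 1 then goes on to acquire the monitor lock of resource2, which succeeds. When thread 1 releases the monitor locks of resource1 and resource2, thread 2 can acquire them and run. This breaks the circular wait condition, so the deadlock is avoided.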
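The ordered-locking fix breaks circular wait. The idea listed under "breaking the no-preemption condition" (release what you hold when the next resource is unavailable) cannot be expressed with synchronized, but it can be sketched with java.util.concurrent.locks. This is a minimal sketch under assumptions: TryLockDemo, withBothLocks and runOpposingThreads are hypothetical names, and the 10 ms timeout and random back-off are arbitrary illustrative values.

```java
import java.util.concurrent.ThreadLocalRandom;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantLock;

class TryLockDemo {

    // Hypothetical helper: runs `action` while holding both locks.
    // If the second lock cannot be obtained in time, the first is released and we retry.
    static void withBothLocks(Lock first, Lock second, Runnable action) {
        try {
            while (true) {
                first.lock();
                try {
                    if (second.tryLock(10, TimeUnit.MILLISECONDS)) {
                        try {
                            action.run();
                            return;
                        } finally {
                            second.unlock();
                        }
                    }
                } finally {
                    first.unlock(); // give up what we hold instead of waiting forever
                }
                // Random back-off so two threads are unlikely to retry in lockstep.
                Thread.sleep(ThreadLocalRandom.current().nextInt(1, 10));
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Two threads take the locks in opposite order, as in the deadlock example.
    static int runOpposingThreads() {
        Lock lock1 = new ReentrantLock();
        Lock lock2 = new ReentrantLock();
        AtomicInteger completed = new AtomicInteger();
        Thread t1 = new Thread(() -> withBothLocks(lock1, lock2, completed::incrementAndGet));
        Thread t2 = new Thread(() -> withBothLocks(lock2, lock1, completed::incrementAndGet));
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for workers", e);
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println("completed = " + runOpposingThreads()); // completed = 2
    }
}
```

Even though the two threads request the locks in opposite order, neither can block forever, because a thread that fails to get its second lock always releases its first one before retrying.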

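9. What are the differences and similarities between the sleep() and wait() methods?

10. Why is run() executed when we call start(), and why can't we call run() directly?

This is another classic Java multi-threading interview question, and it is asked often. It is simple, yet many people cannot answer it!

Creating a Thread with new puts the thread in the new state. Calling start() starts the thread and puts it in the ready state; once it is allocated a time slice it can begin running. start() performs the thread's preparation work and then automatically executes the content of run(); this is real multi-threaded work. Calling run() directly instead executes run as an ordinary method on the main thread (the calling thread) rather than in a new thread, so it is not multi-threaded work.

Summary: calling start starts the thread and puts it in the ready state, whereas calling run is just an ordinary method call on the Thread object and still executes in the main thread.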
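The difference is easy to see by recording which thread actually executes run(). In this minimal sketch, StartVsRunDemo and threadNameSeenByRun are hypothetical names, and "worker" is an arbitrary thread name chosen for the demonstration.

```java
class StartVsRunDemo {

    // Hypothetical helper: returns the name of the thread that actually executed run().
    static String threadNameSeenByRun(boolean useStart) {
        String[] seen = new String[1];
        Thread worker = new Thread(() -> seen[0] = Thread.currentThread().getName(), "worker");
        if (useStart) {
            worker.start(); // a new thread is created and run() executes on it
            try {
                worker.join(); // wait for it, which also makes seen[0] visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException("interrupted while waiting for worker", e);
            }
        } else {
            worker.run(); // ordinary method call: run() executes on the calling thread
        }
        return seen[0];
    }

    public static void main(String[] args) {
        System.out.println("start(): " + threadNameSeenByRun(true));  // start(): worker
        System.out.println("run():   " + threadNameSeenByRun(false)); // run():   main
    }
}
```

With run() called directly, the Thread object is never started at all; the name reported is that of whichever thread made the call, which is main when the program is launched normally.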
