Has OpenAI found a way to solve the biggest obstacle to deploying AI?

OpenAI appears to have found a way to tackle generative AI's habit of confidently making things up.

On May 31, OpenAI announced on its website that it had trained a model that can help eliminate "hallucinations" and other common problems in generative AI.

OpenAI stated that reward models can be trained to detect hallucinations, and that such reward models come in two kinds: outcome-supervised models, which give feedback based only on the final result, and process-supervised models, which give feedback on each step of the chain of thought.

In other words, process supervision rewards each correct step of reasoning, while outcome supervision rewards only a correct final answer.
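To make the difference concrete, here is a minimal Python sketch; it is not OpenAI's implementation. It assumes each reasoning step already carries a correct/incorrect label (in practice such labels would come from human reviewers or a trained reward model; here they are hard-coded), and the names `Step`, `outcome_reward`, `process_rewards`, and `process_score` are hypothetical. Combining step rewards by multiplication is likewise an assumed rule. The example shows how a solution whose errors happen to cancel out still earns full outcome reward but zero process reward.

```python
import math
from dataclasses import dataclass


@dataclass
class Step:
    text: str
    is_correct: bool  # hypothetical label, e.g. from a human reviewer


def outcome_reward(final_answer: str, reference: str) -> float:
    """Outcome supervision: a single signal based only on the final answer."""
    return 1.0 if final_answer == reference else 0.0


def process_rewards(steps: list[Step]) -> list[float]:
    """Process supervision: one signal for every step in the chain of thought."""
    return [1.0 if step.is_correct else 0.0 for step in steps]


def process_score(steps: list[Step]) -> float:
    """Collapse per-step rewards into one score (assumed rule: the product,
    so a single bad step zeroes the whole solution)."""
    return math.prod(process_rewards(steps))


# Problem: compute 8 / 2 + 1 (reference answer "5").
clean = [Step("8 / 2 = 4", True), Step("4 + 1 = 5", True)]
lucky = [Step("8 / 2 = 3", False), Step("3 + 1 = 5", False)]  # two errors cancel out

for name, steps in [("clean", clean), ("lucky", lucky)]:
    # Take the text after the last "=" of the last step as the final answer.
    final = steps[-1].text.split("=")[-1].strip()
    print(name,
          "outcome:", outcome_reward(final, "5"),
          "process:", process_rewards(steps),
          "score:", process_score(steps))
```

Both solutions receive the same outcome reward, but only the clean one scores well under process supervision, which is the behavior OpenAI's argument below relies on.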

OpenAI said that, by comparison, process supervision has an important advantage: it directly trains the model to produce chains of thought that humans endorse.

Process supervision has several alignment advantages over outcome supervision. Because each step receives precise supervision, it directly rewards the model for following an aligned chain of thought.

Process supervision is also more likely to produce interpretable reasoning, because it encourages the model to follow a human-approved process.

Outcome supervision, by contrast, can reward a misaligned process and is generally harder to scrutinize.

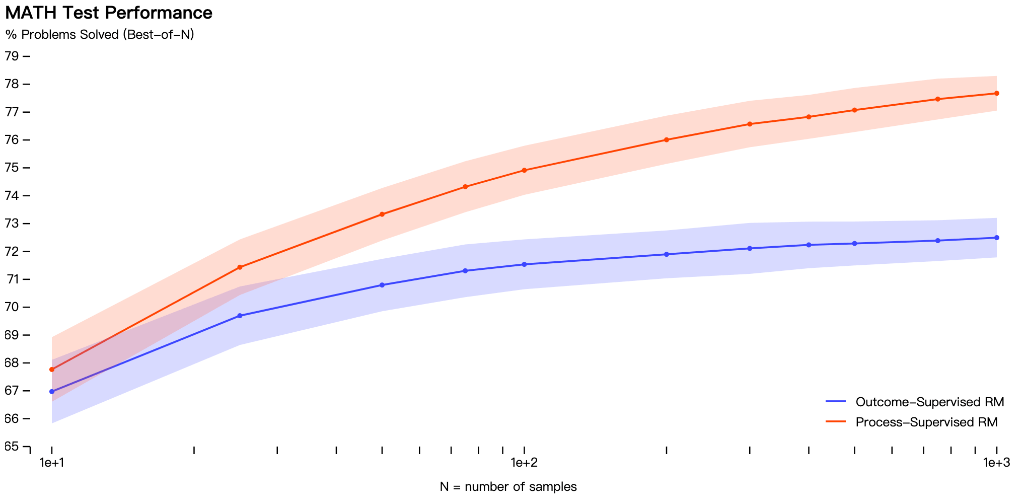

OpenAI tested both models on a mathematical dataset and found that the process supervision approach resulted in "significantly better performance."

It is important to note, however, that so far the process supervision approach has only been tested in the mathematical domain, and more work is needed to see how it performs more generally.

In addition, OpenAI did not say how long it would take for this work, which is still at the research stage, to be applied to ChatGPT.

While the initial results are promising, OpenAI notes that safer methods can sometimes reduce performance, a cost known as an "alignment tax."

Current results show that process supervision incurs no alignment tax on math problems, but it is not yet known whether the same holds in more general domains.

The "hallucination" problem in generative AI

Ever since generative AI arrived, it has faced accusations of fabricating information and "hallucinating." This remains one of the biggest problems with current generative AI models.

In February of this year, Google hastily launched its chatbot Bard in response to the Microsoft-backed ChatGPT. However, Bard made a factual error in its launch demonstration, sending Google's stock price sharply lower.

AI hallucinations have many causes. One is feeding an AI program input data crafted to trick it into misclassifying that input.

For example, developers train artificial intelligence systems on data such as images, text, or other types. If the input data is altered or distorted, the application may interpret it differently and produce incorrect results.
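The misclassification attack described above can be sketched with a toy linear classifier. The following Python example is a minimal illustration under stated assumptions, not how any real system is attacked: the weights, the input, the "cat"/"dog" labels, and the step size `eps` are all hypothetical. It applies a fast-gradient-sign-style perturbation that nudges every feature by at most 0.25, which is enough to flip the prediction.

```python
def score(w, b, x):
    """Linear score dot(w, x) + b; positive means class "cat"."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def classify(w, b, x):
    return "cat" if score(w, b, x) > 0 else "dog"


def sign(v):
    return (v > 0) - (v < 0)


def perturb(w, x, eps):
    """Move every feature by at most eps in the direction that lowers the score.
    For a linear model the gradient with respect to x is just w, so this is a
    fast-gradient-sign step."""
    return [xi - eps * sign(wi) for wi, xi in zip(w, x)]


# Hypothetical trained weights and a hypothetical input (e.g. 4 pixel intensities).
w = [0.5, -0.3, 0.8, 0.2]
b = -0.1
x = [0.4, 0.2, 0.3, 0.5]

x_adv = perturb(w, x, eps=0.25)
print(classify(w, b, x), [round(v, 2) for v in x])
print(classify(w, b, x_adv), [round(v, 2) for v in x_adv])
```

The change to each feature is small and bounded, yet the model's answer flips, which is the kind of input-driven error the paragraph above describes.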

Hallucinations can also arise in large language models such as ChatGPT from faulty transformer decoding, which can lead the model to produce a story or narrative that is illogical or ambiguous.
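The article does not say which decoding faults it has in mind. As one assumed illustration, the Python sketch below shows how a single decoding setting, sampling temperature, changes how much probability ends up on wrong continuations. The prompt, candidate tokens, and logits are hypothetical. Greedy decoding always picks the top-scoring token, while a higher temperature flattens the distribution, so sampled output becomes more likely to pick an incorrect one.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical candidates for the next token after "The capital of Australia is"
tokens = ["Canberra", "Sydney", "Melbourne"]
logits = [4.0, 2.0, 1.0]

# Greedy decoding always takes the highest-scoring token.
greedy = tokens[logits.index(max(logits))]
print("greedy:", greedy)

for temperature in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, temperature)
    wrong_mass = 1.0 - probs[0]  # probability of sampling a wrong answer
    print(f"T={temperature}: P(wrong) = {wrong_mass:.2f}")
```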
