Exclusive interview with 'Father of Modern Artificial Intelligence' Juergen Schmidhuber, whose life's work will not bring destruction

Whenever technology and business come into sharp conflict, the public remembers Juergen Schmidhuber. From the open letter in April calling for a pause in the development of artificial intelligence, to Geoffrey Hinton leaving Google at the beginning of this month so that he could talk more "freely" about the risks of AI, the most imaginative and controversial technology of this century has finally arrived at a crossroads.

In the past two years, Juergen Schmidhuber's prominence was overshadowed by the three giants of artificial intelligence who won the Turing Award (Yoshua Bengio, Geoffrey Hinton and Yann LeCun), so that the public unconsciously came to underestimate this artificial intelligence thinker and technology pioneer.

To understand why he missed the Turing Award, the comments of Professor Zhou Zhihua, Dean of the School of Artificial Intelligence at Nanjing University, are perhaps worth consulting. He said: "In terms of contributions to deep learning, Hinton undoubtedly ranks first, and LeCun and Schmidhuber have both made very large contributions. But HLB are always bundled together, and Schmidhuber is obviously not in that small group. To win a prize, nominations and votes are needed, and popularity is also important. But it doesn't matter: with a textbook-level contribution like LSTM, he can afford to stay calm."

As early as 1990, Schmidhuber described unsupervised adversarial neural networks that compete with each other in a minimax game to achieve artificial curiosity. In 1991, he introduced neural fast weight programmers, which are formally equivalent to what is now called a Transformer with linearized self-attention. Today, the Transformer drives the famous ChatGPT. In 2015, his team introduced Highway Networks, which were many times deeper than previous networks.

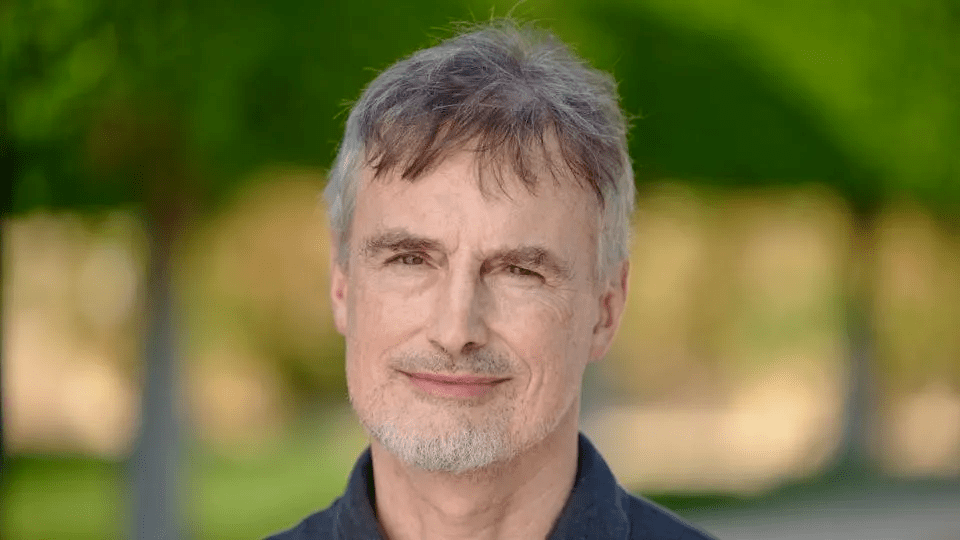

Throughout his career, Schmidhuber has received various awards and honors for his groundbreaking work. In 2013, he was awarded the Helmholtz Award in recognition of his significant contributions to the field of machine learning. In 2016, he was awarded the IEEE Neural Networks Pioneer Award for "pioneering contributions to deep learning and neural networks." It should also be noted that before Yoshua Bengio, Geoffrey Hinton and Yann LeCun won the Turing Award, Juergen Schmidhuber was already known as the "Father of Modern Artificial Intelligence".

His views often run against the "mainstream", but they often prove right. Schmidhuber gave an exclusive interview to Forbes China earlier this year, and recently answered a series of questions from Forbes contributor Hessie Jones about concerns surrounding artificial intelligence. We have compiled the relevant conversations below:

Forbes China: Have technologies such as deep learning and neural networks changed fundamentally since the emergence of ChatGPT?

Schmidhuber: Actually no, because ChatGPT is essentially a learning neural network, the foundations of which were laid in the last millennium. However, thanks to constant hardware acceleration, it is now possible to build neural networks that are much larger than before and feed them the entire internet, so that they learn to predict parts of text (such as "chat") and images. The intelligence of ChatGPT is mainly the result of this enormous scale.

The neural network on which ChatGPT is based is the so-called attention-based Transformer. I'm happy about this because more than 30 years ago I published what is now called a "Transformer with linearized self-attention" (J. Schmidhuber. Learning to control fast-weight memories: An alternative to recurrent nets. Neural Computation, 4(1):131-139, 1992). Apart from normalization, it is formally equivalent to what I call neural fast weight programmers, which separate storage and control; the attention terminology was introduced at ICANN in 1993.
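The claimed equivalence between fast weight programmers and linearized self-attention can be illustrated with a small numerical sketch. The following is a toy example, not code from the 1992 paper: it assumes unnormalized, causal attention in which the softmax of a standard Transformer is replaced by a plain dot product, and all function and variable names are illustrative.

```python
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product of two equally long vectors."""
    return sum(x * y for x, y in zip(a, b))


def fast_weight_outputs(keys: List[Vector], values: List[Vector],
                        queries: List[Vector]) -> List[Vector]:
    """Fast-weight view: add the outer product value x key to a weight
    matrix W at every step, then read W out with the current query."""
    d_k, d_v = len(keys[0]), len(values[0])
    W = [[0.0] * d_k for _ in range(d_v)]  # fast weights, initially zero
    outputs = []
    for k, v, q in zip(keys, values, queries):
        for i in range(d_v):
            for j in range(d_k):
                W[i][j] += v[i] * k[j]          # W <- W + v k^T
        outputs.append([dot(row, q) for row in W])  # y = W q
    return outputs


def linear_attention_outputs(keys: List[Vector], values: List[Vector],
                             queries: List[Vector]) -> List[Vector]:
    """Attention view: weight every past value by the (unnormalized)
    dot-product score between its key and the current query."""
    outputs = []
    for t, q in enumerate(queries):
        out = [0.0] * len(values[0])
        for k, v in zip(keys[:t + 1], values[:t + 1]):
            score = dot(k, q)                   # no softmax, no scaling
            for i in range(len(out)):
                out[i] += score * v[i]
        outputs.append(out)
    return outputs


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[2.0], [3.0], [5.0]]
queries = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]

fast = fast_weight_outputs(keys, values, queries)
slow = linear_attention_outputs(keys, values, queries)
print(fast == slow)
```

Both functions compute the same sums in a different order: the first stores the running sum of outer products once, while the second recomputes the scores against every past key at each step.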

Forbes China: You have been to China, so what do you think of the development of artificial intelligence technology in China? How should China catch up with technologically advanced countries like the United States?

Schmidhuber: While most of the basic algorithms for deep learning were invented by Europeans, large companies in the United States have done a better job of commercializing these algorithms. I don't think Chinese companies are lagging behind. These basic methods are already publicly available and open source. What you need is fast computers, lots of data and engineering talent. I have visited China many times and know that China has it all and is already the country that publishes the most papers on artificial intelligence. So I am very optimistic about China’s artificial intelligence!

Forbes China: Currently, most computing power and data are in the hands of a few industry giants. How can micro, small and medium-sized enterprises (MSMEs) and startups break through these barriers?

Schmidhuber: 40 years ago, I knew a rich man. He had a Porsche. But the most surprising thing was that there was a mobile phone in the Porsche. So, via satellite, he could call other people with similar Porsches.

Today, everyone has a cheap smartphone that is better in many ways than what was in that Porsche. The same will happen as artificial intelligence develops. Remember: every 5 years, AI will become 10 times cheaper. Everyone will have tons of cheap AI working for them. (This is a law older than Moore's Law and has existed for nearly a thousand years since the discovery of gears.)

In fact, the motto of our company NNAISENSE is: "Artificial Intelligence is for everyone"! Our artificial intelligence is already making human life longer, healthier, easier, and happier. Artificial intelligence will not be controlled by a few large companies. No, everyone will have cheap but powerful artificial intelligence to improve his/her life in many ways.

Forbes China: What opportunities do you think there are for entrepreneurs and start-ups in the field of artificial intelligence and deep learning?

Schmidhuber: I can talk from the perspective of NNAISENSE, the company we founded. It is pronounced like "nascence" ("birth" in English), but is spelled differently because it is based on general neural network artificial intelligence. Today, most AI profits are made in virtual worlds, in marketing and selling ads, which is what the big platform companies on the Pacific Rim are doing: Alibaba, Amazon, Facebook, Tencent, Google, Baidu, and others. However, marketing accounts for only a small part of the world economy. The much larger rest will also be invaded by artificial intelligence, just like in the movies. There are many opportunities for startups like ours.

Forbes China: Given the amazing experience that ChatGPT offers, how do you see the trend of artificial intelligence replacing human jobs?

Schmidhuber: With ChatGPT, users can suddenly feel like they are talking to a very knowledgeable person who seems to have well-crafted answers to all topics and questions. Even complex tasks like "write a summary of an article about this in this writer's style" can often be solved so quickly that only minor editing is required later. Many desk tasks will be greatly facilitated by such a smart AI companion.

Which type of artificial intelligence is performing reasonably well today? The answer is artificial intelligence that serves desk workers. Examples include answering law exam questions, summarizing company documents, defeating your opponents in virtual worlds (e.g. video games), or tracking your movements across the Internet and delivering tailored advertising to you.

Forbes China: What do you think of the challenges AI faces in terms of ethics, morality, privacy and security?

Schmidhuber: It will be an arms race between AIs, with some AIs fighting for certain ethical and moral standards, privacy and security, and others being less benevolent.

Forbes China: Does the current AI development meet your predictions? How will deep learning develop in the future?

Schmidhuber: My main goal since the age of 15 or so has been to build a self-improving artificial intelligence that is smarter than me and then retire. Current developments are in line with my predictions.

Remember, every 5 years since 1941, computers have become 10 times cheaper. A naive extrapolation of this exponential trend predicts that the 21st century will see cheap computers with as much as a thousand times the raw computing power of all human brains. Soon there will be millions, billions, trillions of these devices.
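The arithmetic behind this rule of thumb is easy to check. The following is a minimal sketch, assuming an idealized trend with exactly a tenfold price drop every five years; the function names and default parameters are illustrative and not taken from the interview.

```python
import math


def cheaper_factor(years: float, period: float = 5.0, factor: float = 10.0) -> float:
    """How many times cheaper computing becomes after `years`,
    if the price drops by `factor` every `period` years."""
    return factor ** (years / period)


def years_until(target_factor: float, period: float = 5.0, factor: float = 10.0) -> float:
    """Years needed for computing to become `target_factor` times cheaper."""
    return period * math.log(target_factor) / math.log(factor)


# Under this assumption, 80 years compound to sixteen tenfold steps.
print(f"{cheaper_factor(80):.3g}")
# A thousandfold drop is three tenfold steps.
print(f"{years_until(1000.0):.1f} years")
```

Because the trend is exponential, each additional five years multiplies the previous gain rather than adding to it, which is why the extrapolation grows so quickly.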

The following is a conversation between Forbes contributor Hessie Jones and Schmidhuber on a series of issues concerning artificial intelligence. It has been edited for clarity and coherence.

Jones: You have signed a warning letter about AI weapons. But you didn't sign the recently published "Pause Giant AI Experiments: An Open Letter". Why not?

Schmidhuber: I have come to realize that many of those who warn publicly about the dangers of artificial intelligence are simply seeking publicity. I don’t think this latest letter will have any significant impact, as many AI researchers, companies, and governments will ignore it entirely.

The word "we" was used many times in that open letter, referring to "all of us", that is, human beings. But as I've pointed out many times in the past, there is no "we" that everyone can identify with. Ask 10 different people and you'll hear 10 different opinions on what "good" is. Some of these views are completely incompatible. Don't forget the enormous amount of conflict between the many people.

The letter also said, "If such a pause cannot be enacted quickly, governments should step in and institute a moratorium." The problem is that different governments have different views on what is good for themselves and what is good for others. Great power A will say: if we don't do this, great power B will do it, perhaps covertly, to gain an advantage over us. The same is true for great powers C and D.

Jones: Everyone acknowledges this fear about current generative AI technologies. In addition, OpenAI CEO Sam Altman himself has publicly acknowledged the existential threats of this technology and called for the regulation of artificial intelligence. From your perspective, does AI pose an existential threat to humanity?

Schmidhuber: Artificial intelligence can indeed be weaponized, and I have no doubt that there will be various artificial intelligence arms races, but artificial intelligence does not introduce a new existential threat. The threat from artificial intelligence weapons seems dwarfed by the older threat from nuclear hydrogen bombs. We should be more afraid of the half-century-old technology of hydrogen bomb rockets. The 1961 Tsar Bomba (a hydrogen bomb developed by the Soviet Union during the Cold War) was nearly 15 times more destructive than all weapons in World War II combined. Although countries have engaged in dramatic nuclear disarmament since the 1980s, the world still has enough nuclear warheads to wipe out human civilization in two hours without any help from artificial intelligence. I'm more worried about this old existential threat than about fairly harmless AI weapons.

Jones: I realize that while you compare artificial intelligence to the threat of nuclear bombs, there is a danger that current technology could be mastered by humans and enable them to "eventually" use it in very precise ways to cause further harm to individuals within a group, such as targeted drone strikes. As some have pointed out, you give people a toolset they never had before, enabling bad actors to do more harm than they could before because they didn't have the technology.

Schmidhuber: In principle, this sounds scary, but our existing laws are adequate to deal with these new weapons powered by artificial intelligence. If you kill someone with a gun, you go to jail, and the same goes for killing someone with a drone. Law enforcement will better understand new threats and new weapons, and will have better technology to counter them. Having a drone hit a target from a distance requires some tracking and some intelligence, which is traditionally done by skilled humans. But to me it just seems like an improved version of a traditional weapon, such as a gun that is, you know, just a little bit smarter than the old guns.

Jones: The implicit existential threat is the extent to which humans control this technology. We're seeing some early cases of opportunism, which, as you said, tend to get more media attention than positive breakthroughs. But do you mean it will all balance out?

Schmidhuber: Historically, we have a long tradition of technological breakthroughs leading to advances in weapons, both for defense and protection. From sticks to stones, from axes to gunpowder, from cannons to rockets and now drones, this has had a huge impact on human history. But throughout history, those who used technology to achieve their own ends have also faced the same technology, because their opponents were learning to use it against them. This has been repeated throughout thousands of years of human history, and will continue to be. I don't think the new AI arms race poses the same existential threat as old-fashioned nuclear warheads.

You said something very important: some people prefer to talk about the disadvantages of this technology rather than its benefits. But this is misleading, because 95% of AI research and development is about making people happier and promoting human life and health.

Jones: Let’s talk about the progress in artificial intelligence research that is beneficial and can fundamentally change current methods and achieve breakthroughs.

Schmidhuber: Okay! For example, 11 years ago, our team with my postdoc Dan Ciresan was the first to win a medical imaging competition using deep learning. We analyzed female breast cells with the goal of distinguishing harmless cells from those in the precancerous stage. Usually, it takes a trained oncologist a long time to make these decisions. Our team knew nothing about cancer, but they were able to train an artificial neural network on a large amount of this data, even though the network was very stupid to begin with. It outperformed all other methods. Today this approach is used not only for breast cancer, but also in radiology and for detecting plaque in arteries, among many other things. Some of the neural networks we developed over the past 30 years are now used in thousands of healthcare applications, detecting diseases such as diabetes and COVID-19. This will eventually permeate all areas of healthcare. Good results from this type of AI are far more important than eye-catching new ways to use AI to commit crimes.

Jones: Adoption is a product of reinforced outcomes. Large-scale adoption leads us to believe either that people are being led astray or, conversely, that the technology has a positive impact on people's lives.

Schmidhuber: The latter is the more likely scenario. There is tremendous commercial pressure towards good AI rather than bad AI, because companies want to sell you stuff and you will only buy what you think is good for you. So under this simple commercial pressure, there is a huge bias towards good AI as opposed to bad AI. However, the apocalyptic scenes in Schwarzenegger movies are more likely to grab people's attention than documentaries about artificial intelligence that improves people's lives.

Jones: I think people are drawn to good stories—stories that have antagonists and struggles, but ultimately have a happy ending. This is consistent with your assessment of human nature, and the fact that history, despite its violent and destructive tendencies, tends to be self-correcting to some extent.

Schmidhuber: Let us take as an example a technology that you all know: Generative Adversarial Networks (GANs), which today are readily used in applications related to fake news and disinformation. In fact, the purpose for which GANs were invented is far from what they are used for today.

The name GANs was created in 2014, but we had the basic principles in the early 90s. More than 30 years ago, I called it Artificial Curiosity. This is a very simple way to inject creativity into a small two-network system. This creative AI isn't just trying to slavishly imitate humans; instead, it's creating its own goals. Let me explain:

Now you have two networks. One network produces an output, which could be anything, any action. A second network observes these actions and tries to predict their consequences. An action might move a robot, and then something happens, while the other network is just trying to predict what will happen.

Now, we can achieve artificial curiosity by having the second network reduce its prediction error, which at the same time is the reward of the first network. The first network wants to maximize its reward, so it will invent actions that lead to situations that surprise the second network, namely situations that it has not yet learned to predict well.

In the case of outputting a fake image, the first network will try to generate an image of good enough quality to fool the second network, and the latter will try to predict the reaction of the environment: is the image real or fake? The second system attempts to have greater predictive power while the first network will continue to improve in generating images, making it impossible for the second network to determine whether they are authentic or fake. Therefore, the two systems are at war with each other. The second network will continue to reduce its prediction error, while the first network will try to maximize the error of the prediction system.

Through this zero-sum game, the first network gets better and better at producing these convincing fake outputs, until the pictures look almost completely real. So once you have an interesting set of images by Van Gogh, you can use his style to generate new images without Van Gogh himself creating the artwork.
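The game between the two systems described above can be illustrated with a deliberately tiny sketch. It is not a neural network and not the original 1990 system: it assumes a made-up world with a fixed numeric outcome per action, a table-based predictor, and a controller that greedily repeats whichever action last surprised the predictor most. All names and the learning rate are illustrative.

```python
from typing import Dict, List


def run_curiosity(world: Dict[int, float], steps: int, lr: float = 0.5) -> List[int]:
    """Toy two-player loop: the predictor shrinks its error,
    while the controller is rewarded by that same error."""
    # Predictor: its current guess of the outcome of every action.
    predictions = {action: 0.0 for action in world}
    # Controller: last reward (prediction error) seen for every action.
    # Untried actions start at infinity so that each is tried once.
    reward = {action: float("inf") for action in world}
    history = []
    for _ in range(steps):
        # Controller maximizes the predictor's error (its own reward).
        action = max(world, key=lambda a: reward[a])
        outcome = world[action]
        error = abs(outcome - predictions[action])
        # Predictor minimizes the error by moving towards the outcome.
        predictions[action] += lr * (outcome - predictions[action])
        reward[action] = error
        history.append(action)
    return history


# Hypothetical world: action 0 is already predictable, action 1 is very
# surprising at first, action 2 is mildly surprising.
world = {0: 0.0, 1: 4.0, 2: 1.0}
history = run_curiosity(world, steps=7)
print(history)
```

In this sketch the controller never returns to the fully predictable action and leaves the most surprising one once the predictor has largely learned it, which mirrors the idea that the reward of one system is the error of the other. A GAN replaces the table and the greedy choice with two trained networks, where the second network predicts whether an image is real or fake.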

Jones: Let’s talk about the future. You have said that "traditional humans will not play a significant role in spreading intelligence throughout the universe."

Schmidhuber: First let us conceptually distinguish between two types of artificial intelligence. The first type is a tool guided by humans. Such AIs are trained to do specific things, such as accurately detecting diabetes or heart disease and preventing an attack before it happens. In these cases, the AI's goals come from humans. The more interesting AIs set their own goals. They invent their own experiments and learn from them, their horizons continue to expand, and eventually they become increasingly general problem solvers in the real world. They are not under the control of their parents, and most of what they learn comes from self-invented experiments.

For example, a robot is rotating a toy. As it does this, it can learn how the video from its camera changes over time, and learn how rotating the toy in a certain way, given its three-dimensional nature, creates certain visual changes. Eventually, it will learn how gravity works, how the physics of the world works, and more, just like a little scientist!

And I have been predicting for decades that in the future scaled-up versions of these artificial intelligence scientists will want to further expand their horizons and eventually go where most of the physical resources are, to build bigger and more numerous artificial intelligences. Of course, almost all of these resources are in space far away from Earth, which is unfriendly to humans but friendly to appropriately designed AI-controlled robots and self-replicating robot factories. So here we are no longer talking about our tiny biosphere; instead, we are talking about the much larger rest of the universe. Within tens of billions of years, curious, self-improving artificial intelligence will colonize the visible universe in a way that is not feasible for humans. It sounds like science fiction, but since the 1970s I haven't been able to see a reasonable alternative to this scenario, unless a global catastrophe like all-out nuclear war stops this technological development before it reaches the skies. ■

Exclusive article from Forbes China, please do not reprint without permission

Header image source: Getty Images
