GPT-4 sets a new SOTA on the most difficult mathematical reasoning dataset, and a new prompting method greatly improves the reasoning capabilities of large models

Recently, Huawei and The Chinese University of Hong Kong published the paper "Progressive-Hint Prompting Improves Reasoning in Large Language Models". It proposes Progressive-Hint Prompting (PHP), which mimics the way humans work through a problem and then re-check their answers. Under the PHP framework, a large language model (LLM) can use the answers it generated in previous rounds as hints for subsequent reasoning, gradually converging on the correct answer. PHP has only two requirements: 1) the question can be merged with a previous answer to form a new question; 2) the model can process this new question and produce a new answer.
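Requirement 1) can be illustrated with a minimal Python sketch. The `merge_question_with_hints` helper and the "(Hint: The answer is near to ...)" wording are assumptions chosen for illustration. They are not the paper's exact template.

```python
def merge_question_with_hints(question: str, hints: list[str]) -> str:
    """Merge previous answers into the question as a hint (requirement 1).

    The hint wording is an illustrative assumption, not the paper's template.
    """
    if not hints:
        # First interaction: there are no previous answers yet.
        return question
    return f"{question} (Hint: The answer is near to {', '.join(hints)})."


q = "Tom has 3 apples and buys 5 more. How many apples does he have?"
print(merge_question_with_hints(q, []))
print(merge_question_with_hints(q, ["8"]))
print(merge_question_with_hints(q, ["7", "8"]))
```

Because the merged question is still ordinary text, any model that can answer the original question can, in principle, also process the hinted version. This is what requirement 2) asks for.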

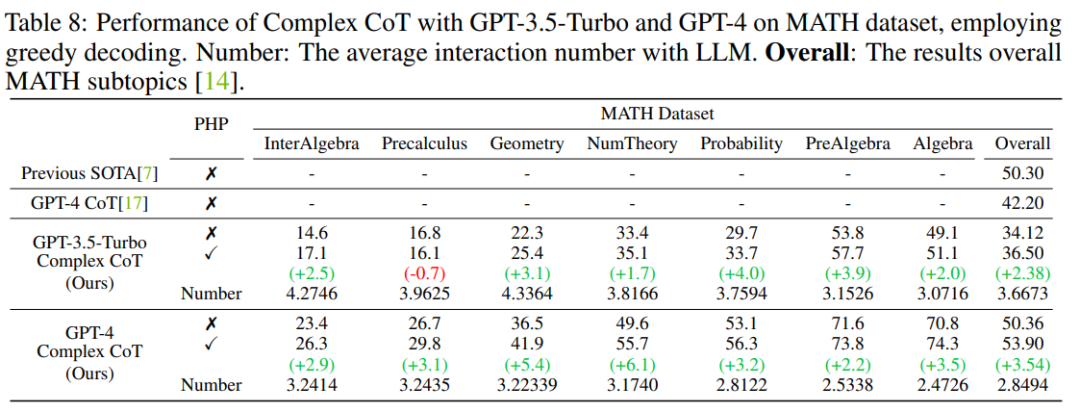

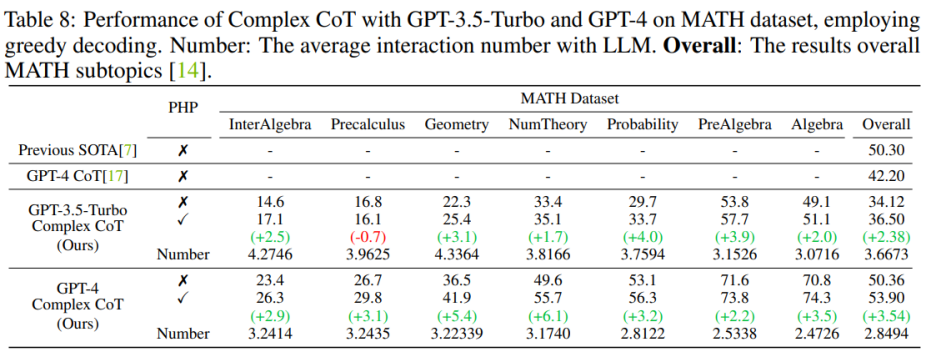

The results show that GPT-4 with PHP achieves SOTA results on multiple datasets, including SVAMP (91.9%), AQuA (79.9%), GSM8K (95.5%) and MATH (53.9%). This method significantly outperforms GPT-4 with CoT. For example, on MATH, the most difficult mathematical reasoning dataset, GPT-4 CoT reaches only 42.5%. GPT-4 PHP improves by 6.1% on the Number Theory subset of MATH and raises overall MATH accuracy to 53.9%, a new SOTA.

As LLMs have developed, a body of prompting work has emerged that follows two mainstream directions:

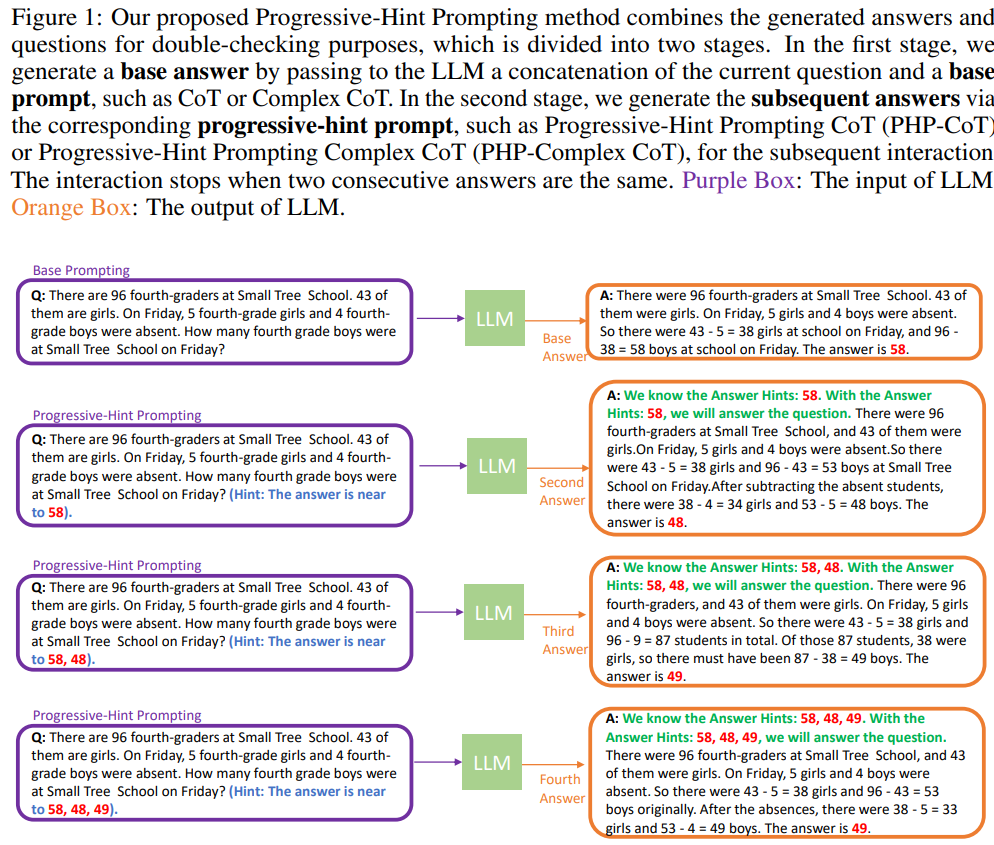

Neither existing approach modifies the question. Each one amounts to solving the question once, without going back to double-check the answer. PHP instead tries to simulate a more human-like reasoning process: it takes the answer from the previous round of reasoning, merges it into the original question, and asks the LLM to reason again. When the two most recent answers agree, that answer is taken as final and returned. The specific flow chart is as follows:

The first interaction with the LLM uses Base Prompting. Here the prompt can be a Standard prompt, a CoT prompt, or an improved version of either. This first interaction yields a preliminary answer. All subsequent interactions use the PHP prompt, until the two most recent answers agree.

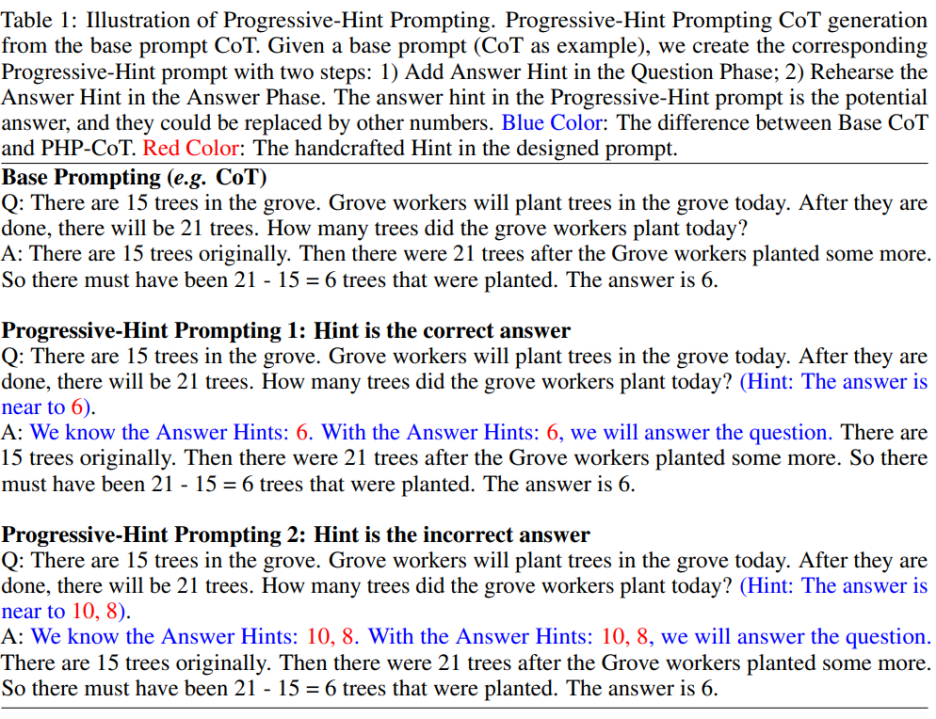
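The loop described above can be sketched in Python as follows. This is a minimal illustration, not the authors' code. `ask` is a hypothetical wrapper around an LLM call, and answers are compared as plain strings. The `max_rounds` cap is an assumption added so the loop always terminates.

```python
from typing import Callable, List, Tuple


def progressive_hint_prompting(
    question: str,
    ask: Callable[[str, List[str]], str],
    max_rounds: int = 10,
) -> Tuple[str, int]:
    """Return (final_answer, number_of_interactions).

    `ask(question, hints)` is a hypothetical LLM wrapper: with an empty hint
    list it should use the Base Prompt (Standard, CoT, Complex CoT, ...);
    otherwise it should use the PHP prompt with the hints merged in.
    """
    hints: List[str] = []
    answer = ask(question, hints)  # interaction 1: Base Prompting
    interactions = 1
    while interactions < max_rounds:
        hints.append(answer)  # every previous answer becomes a hint
        new_answer = ask(question, hints)  # PHP prompt
        interactions += 1
        if new_answer == answer:  # two most recent answers agree: stop
            return new_answer, interactions
        answer = new_answer
    return answer, interactions  # assumed safety cap reached


def fake_llm(question: str, hints: List[str]) -> str:
    # Stand-in model: wrong without hints, corrects itself once hinted.
    return "7" if not hints else "8"


print(progressive_hint_prompting("Tom has 3 apples and buys 5 more...", fake_llm))
# ('8', 3)
```

With the stand-in model, the base answer 7 is wrong. The hinted answer 8 differs from it, so a third call is made, and that call confirms 8. The run therefore uses three interactions.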

The PHP prompt is derived from the Base Prompt. Given a Base Prompt, the corresponding PHP prompt is obtained by following the PHP prompt design principles, as shown in the figure below:

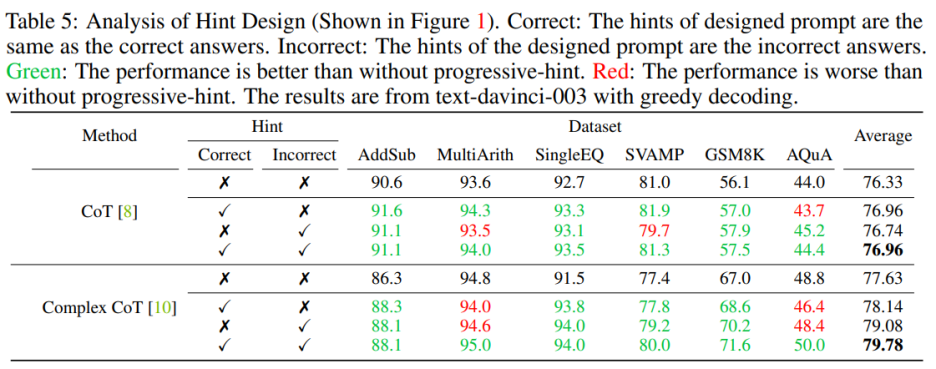

1) If the given hint is the correct answer, the returned answer must still be the correct answer ("Hint is the correct answer" in the figure above);

2) If the given hint is an incorrect answer, the LLM must reason its way past the incorrect hint and return the correct answer ("Hint is the incorrect answer" in the figure above).

By following these design rules, a corresponding PHP prompt can be written for any existing Base Prompt.
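The sketch below shows one way a single PHP exemplar might be represented. It assumes a simple "Q:/A:" few-shot layout and the same hypothetical hint wording used above. The class name `PHPExemplar` is made up for illustration. The rationale still has to be written by hand so that it obeys rule 1) or rule 2).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PHPExemplar:
    question: str
    hints: List[str]  # answers offered as hints; may be correct or incorrect
    rationale: str  # hand-written reasoning obeying rule 1) or rule 2)
    answer: str  # the correct final answer

    def hint_is_correct(self) -> bool:
        # Assumption: rule 1) applies when the latest hint equals the answer.
        return bool(self.hints) and self.hints[-1] == self.answer

    def render(self) -> str:
        hint_text = ", ".join(self.hints)
        return (
            f"Q: {self.question} (Hint: The answer is near to {hint_text}).\n"
            f"A: {self.rationale} The answer is {self.answer}."
        )


correct = PHPExemplar("What is 3 + 5?", ["8"], "3 + 5 = 8, which agrees with the hint.", "8")
wrong = PHPExemplar("What is 3 + 5?", ["9"], "The hint says 9, but 3 + 5 = 8.", "8")
print(correct.hint_is_correct(), wrong.hint_is_correct())  # True False
print(wrong.render())
```

Keeping the answer separate from the rationale makes it easy to check which rule an exemplar is meant to teach. The reasoning text itself remains manual work.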

Experiments

The authors use seven datasets: AddSub, MultiArith, SingleEQ, SVAMP, GSM8K, AQuA and MATH. They verify their idea with four models: text-davinci-002, text-davinci-003, GPT-3.5-Turbo and GPT-4.

Main results

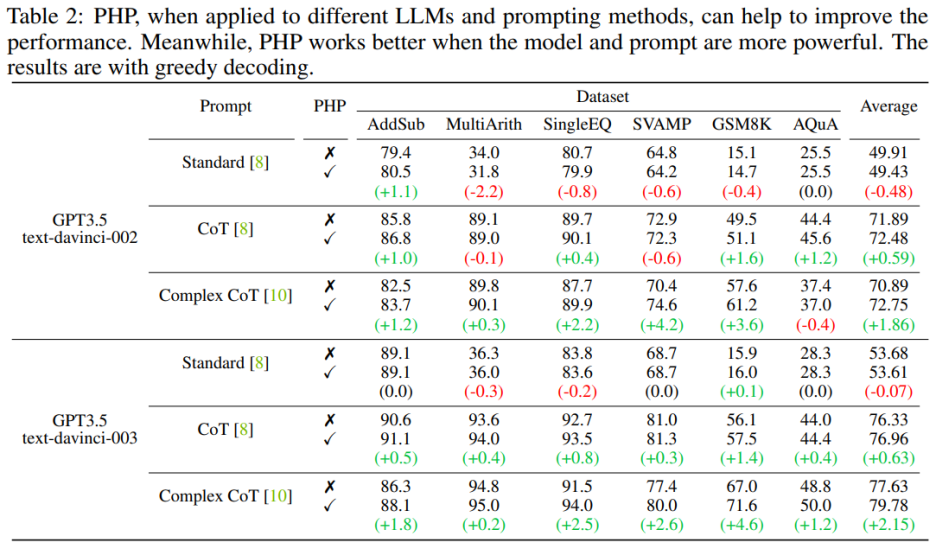

PHP works better when the language model is more powerful and the prompt is more effective. The Complex CoT prompt shows significant performance improvements over the Standard and CoT prompts. The analysis also shows that text-davinci-003, fine-tuned with reinforcement learning, benefits more than text-davinci-002, fine-tuned with supervised instruction tuning. The authors attribute the gains of text-davinci-003 to its enhanced ability to understand and apply a given prompt. With only a Standard prompt, however, the improvement from PHP is not obvious. To be effective, PHP needs at least CoT to elicit the model's reasoning abilities.

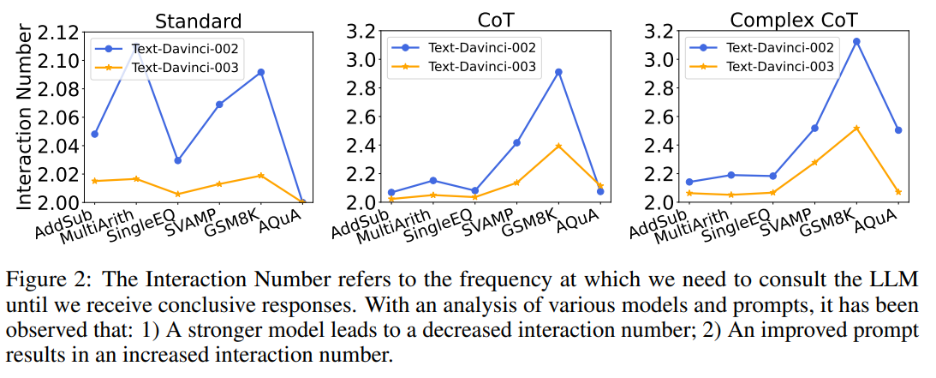

The authors also explored how the number of interactions relates to the model and the prompt. The number of interactions decreases when the language model is stronger and the prompt is weaker. It is defined as the number of times the agent queries the LLM: it is 1 when the first answer is received and 2 when the second answer is received. Figure 2 shows the number of interactions for various models and prompts. The results show that:

1) Given the same prompt, text-davinci-003 generally needs fewer interactions than text-davinci-002. This is mainly due to the higher accuracy of text-davinci-003. Its base answer and subsequent answers are more often correct, so fewer interactions are needed to reach the final correct answer;

2) With the same model, the number of interactions usually increases as the prompt becomes more powerful. A more effective prompt makes better use of the LLM's reasoning ability, which lets the model use the hints to move away from wrong answers. The extra rounds spent doing so increase the number of interactions needed to reach the final answer.
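Under this definition, the count can be computed from the sequence of answers a question produces. The hypothetical helper below is a sketch, not the paper's code. It returns the interaction count at which two consecutive answers first agree. Stopping requires at least two answers, so the minimum is 2, and any average over a dataset is at least 2.

```python
from typing import List


def interactions_until_agreement(answers: List[str]) -> int:
    """Interaction count at which PHP stops for this sequence of answers.

    answers[0] is the Base Prompting answer (interaction 1); each later entry
    is one more interaction. Raises ValueError if no two consecutive
    answers agree.
    """
    for i in range(1, len(answers)):
        if answers[i] == answers[i - 1]:
            return i + 1
    raise ValueError("the answers never stabilised")


# Hypothetical per-question answer traces for a small dataset.
traces = [["8", "8"], ["7", "8", "8"], ["12", "15", "15"]]
counts = [interactions_until_agreement(t) for t in traces]
print(counts, sum(counts) / len(counts))
```

A model that is right on the first try stops at 2. Each time the model switches away from an earlier answer, one more interaction is added.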

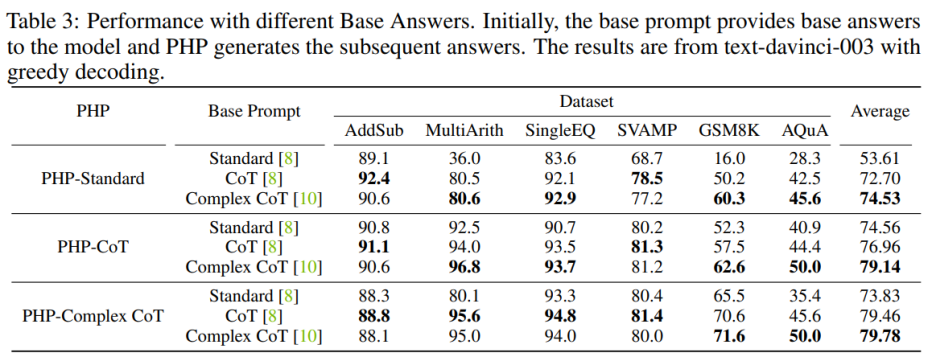

Hint Quality Impact

Replacing PHP-Standard's Standard Base Prompt with CoT or Complex CoT significantly improves final performance. For PHP-Standard, the authors observed that GSM8K accuracy rose from 16.0% with the Standard Base Prompt to 50.2% with the CoT Base Prompt and 60.3% with the Complex CoT Base Prompt. Conversely, replacing the Complex CoT Base Prompt with Standard lowers performance. For example, PHP-Complex CoT drops from 71.6% to 65.5% on GSM8K after this replacement.
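These comparisons can be made concrete by expressing the changes in percentage points. The snippet below only re-derives differences from the numbers quoted above. `pp_change` is an illustrative helper.

```python
def pp_change(before: float, after: float) -> float:
    """Change in accuracy, in percentage points (rounded to hide float noise)."""
    return round(after - before, 1)


# PHP-Standard on GSM8K, switching the Base Prompt: Standard -> CoT / Complex CoT
print(pp_change(16.0, 50.2))  # 34.2
print(pp_change(16.0, 60.3))  # 44.3
# PHP-Complex CoT on GSM8K, switching the Base Prompt from Complex CoT to Standard
print(pp_change(71.6, 65.5))  # -6.1
```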

Performance may improve further when the PHP prompt is not paired with its own Base Prompt. PHP-CoT with the Complex CoT Base Prompt outperformed PHP-CoT with the CoT Base Prompt on four of the six datasets. Likewise, PHP-Complex CoT with the CoT Base Prompt outperformed PHP-Complex CoT with the Complex CoT Base Prompt on four of the six datasets. The authors suggest two reasons. First, CoT and Complex CoT perform similarly on all six datasets. Second, the base answer comes from CoT (or Complex CoT) while subsequent answers come from PHP-Complex CoT (or PHP-CoT), so the setup resembles two people working together on a problem. In this situation, the system's performance may improve further.

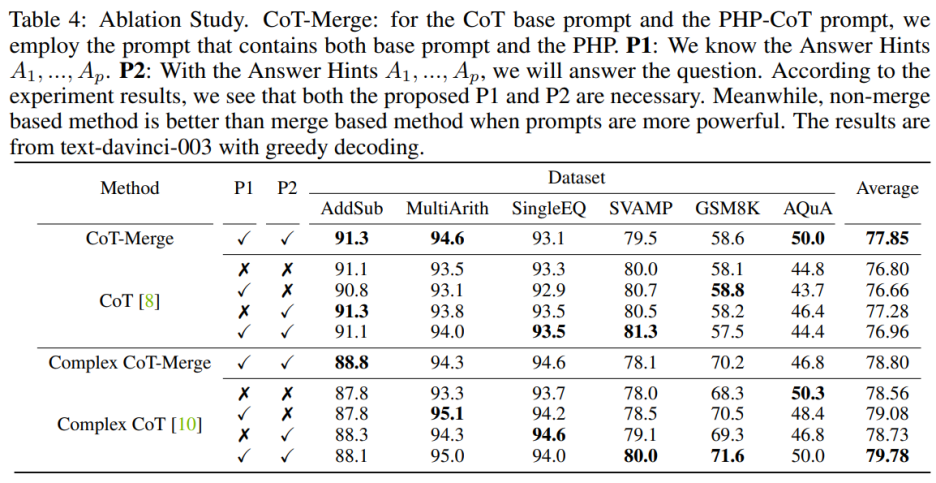

Ablation experiment

Adding sentences P1 and P2 to the prompt improves CoT on three of the datasets. Their importance is most apparent with Complex CoT: with P1 and P2, performance improves on five of the six datasets. For example, Complex CoT improves from 78.0% to 80.0% on SVAMP and from 68.3% to 71.6% on GSM8K. This suggests that sentences P1 and P2 matter more when the model's reasoning ability is stronger.

The PHP prompt should contain both correct and incorrect hints, and designs that include both kinds outperform those that do not. A correct hint in the prompt encourages the model to produce an answer consistent with that hint. An incorrect hint, in contrast, encourages the model to reason its way to an alternative answer.

PHP Self-Consistency

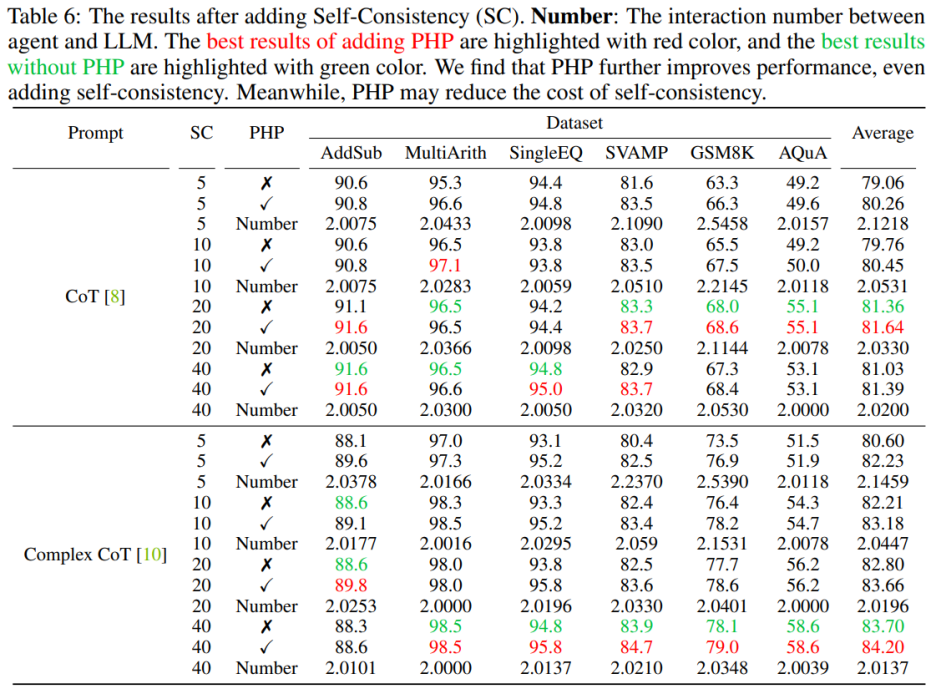

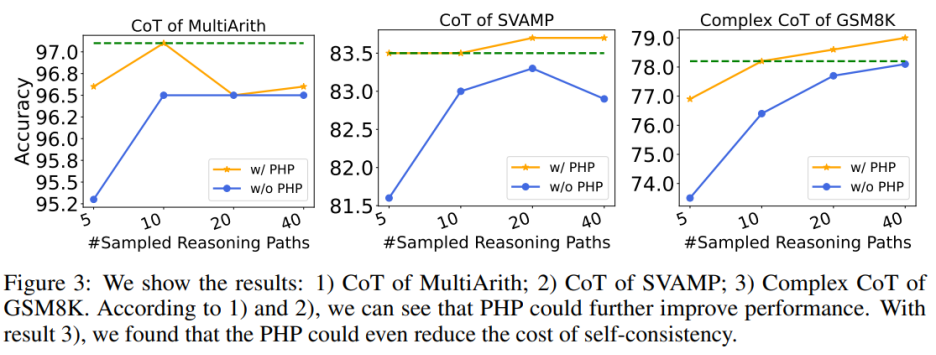

PHP further improves performance. Table 6 and Figure 3 compare methods under self-consistency, using a comparable number of hints and sampled paths. In these comparisons, the proposed PHP-CoT and PHP-Complex CoT consistently outperform CoT and Complex CoT. For example, CoT SC reaches 96.5% accuracy on MultiArith with 10, 20 and 40 sampled paths, so 96.5% can be taken as its best performance with text-davinci-003. With PHP, accuracy rises to 97.1%. Similarly, the best CoT SC accuracy on SVAMP, 83.3%, improves to 83.7% with PHP. This shows that PHP can break through performance bottlenecks.
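The sketch below shows one way PHP might be layered on self-consistency. It assumes that each round samples `k` reasoning paths and takes the majority-voted answer. The voted answers are then fed back as hints until two consecutive votes agree. `sample` is a hypothetical stand-in for an LLM sampler with temperature > 0, and the exact combination used in the paper may differ.

```python
from collections import Counter
from typing import Callable, List, Tuple

# Hypothetical sampler: (question, hints, k) -> k sampled final answers.
Sampler = Callable[[str, List[str], int], List[str]]


def majority_vote(answers: List[str]) -> str:
    """Self-consistency: keep the most frequent answer among sampled paths."""
    return Counter(answers).most_common(1)[0][0]


def php_self_consistency(
    question: str, sample: Sampler, k: int, max_rounds: int = 10
) -> Tuple[str, int]:
    """Return (answer, rounds); the total number of sampled paths is k * rounds."""
    hints: List[str] = []
    answer = majority_vote(sample(question, hints, k))  # round 1: Base Prompt + SC
    rounds = 1
    while rounds < max_rounds:
        hints.append(answer)  # the voted answer becomes a hint
        new_answer = majority_vote(sample(question, hints, k))
        rounds += 1
        if new_answer == answer:  # two consecutive votes agree: stop
            return new_answer, rounds
        answer = new_answer
    return answer, rounds


def fake_sampler(question: str, hints: List[str], k: int) -> List[str]:
    # Stand-in: unhinted paths mostly say 7, hinted paths mostly say 8.
    paths = (["7"] * 6 + ["8"] * 4) if not hints else (["8"] * 7 + ["7"] * 3)
    return paths[:k]


answer, rounds = php_self_consistency("Tom has 3 apples...", fake_sampler, k=10)
print(answer, rounds, 10 * rounds)  # 8 3 30
```

In this design the cost is `k` paths per round. The total depends on how quickly the votes stabilise, not on a fixed path budget.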

PHP can also reduce the cost of SC. SC samples many reasoning paths, which makes it expensive. Table 6 shows that PHP can reduce this cost while still improving performance. As shown in Figure 3, SC with Complex CoT needs 40 sampled paths to reach 78.1% accuracy. Adding PHP reduces the average number of paths to 10 × 2.1531 = 21.531 and achieves a slightly better 78.2%.
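The cost comparison above is simple arithmetic, and the sketch below reproduces it. Following the 10 × 2.1531 expression, it assumes 10 sampled paths per interaction and 2.1531 interactions on average.

```python
sc_paths = 40  # SC with Complex CoT: 40 sampled paths -> 78.1% accuracy
php_sc_paths = 10 * 2.1531  # 10 paths per interaction x 2.1531 interactions -> 78.2%
saving = 1 - php_sc_paths / sc_paths

print(round(php_sc_paths, 3))  # 21.531
print(f"{saving:.1%}")  # 46.2%
```

In this example, PHP with SC samples roughly 46% fewer paths than the 40-path SC baseline while slightly exceeding its accuracy.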

GPT-3.5-Turbo and GPT-4

Following previous work, the authors originally ran their experiments with text-generation models. After the API release of GPT-3.5-Turbo and GPT-4, they evaluated Complex CoT with PHP on the same six datasets. For both models they used greedy decoding (i.e., temperature = 0) and the Complex CoT prompt.
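Greedy decoding in this setup corresponds to setting `temperature` to 0 in the API request. The sketch below only builds a request body in the shape of OpenAI's Chat Completions API and does not send it. Placing the whole few-shot PHP prompt in a single user message is an assumption.

```python
import json


def build_chat_request(model: str, php_prompt: str) -> dict:
    """Build a Chat Completions-style request body with temperature 0.

    Putting the entire few-shot PHP prompt in one user message is an
    assumption; sending the request (HTTP client, API key) is omitted.
    """
    return {
        "model": model,
        "temperature": 0,  # greedy-style decoding, as described above
        "messages": [{"role": "user", "content": php_prompt}],
    }


body = build_chat_request("gpt-4", "Q: ... (Hint: The answer is near to 8).\nA:")
print(json.dumps(body, indent=2))
```

Temperature 0 keeps each interaction as close to deterministic as the API allows. This keeps answer changes between PHP rounds attributable to the hints rather than to sampling noise.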

As shown in Table 7, PHP improves performance by 2.3% on GSM8K and 3.2% on AQuA. However, GPT-3.5-Turbo follows hints less reliably than text-davinci-003. The authors give two examples:

a) When the hint is missing, GPT-3.5-Turbo cannot answer the question. It replies with something like "I cannot answer this question because the answer hint is missing. Please provide an answer hint to continue." In contrast, text-davinci-003 autonomously generates and fills in the missing answer hint before answering the question.

b) When more than ten hints are provided, GPT-3.5-Turbo may reply "Due to multiple answer hints being given, I am unable to determine the correct answer. Please provide an answer hint for the question."

With GPT-4, the authors achieved new SOTA performance on the SVAMP, GSM8K, AQuA and MATH benchmarks, and PHP consistently improves GPT-4's performance. In addition, GPT-4 required fewer interactions than GPT-3.5-Turbo. This is consistent with the finding that the number of interactions decreases as the model becomes more powerful.

The paper introduces PHP, a new way of interacting with LLMs, with several advantages:

1) PHP significantly improves performance on mathematical reasoning tasks, achieving state-of-the-art results on multiple reasoning benchmarks;
2) PHP benefits more from more powerful models and prompts;
3) PHP can easily be combined with CoT and SC to further improve performance.

To further improve PHP, future research could focus on the design of the hand-crafted hints in the question part of the prompt and of the hint sentences in the answer part. Beyond using answers as hints, new kinds of hints could also be identified and extracted to help LLMs reconsider the problem.
