MIT releases enhanced version of 'Advanced Mathematics' solver: accuracy rate reaches 81% in 7 courses

AI no longer just solves elementary school word problems; it has begun to conquer advanced mathematics!

Recently, MIT researchers announced that, building on the pre-trained OpenAI Codex model, they achieved an 81% accuracy rate on undergraduate-level mathematics problems through few-shot learning!

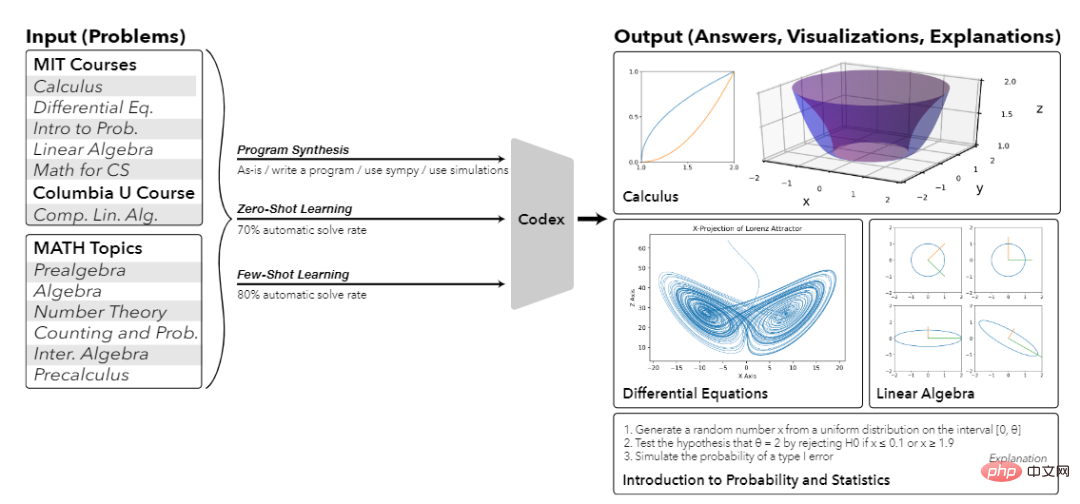

Let’s first look at a few sample questions and their answers: calculating the volume generated by rotating the graph of a single-variable function around an axis, computing the Lorenz attractor and its projection, and computing and plotting the geometry of a singular value decomposition (SVD). The model not only gets the answers right but also gives the corresponding explanations!

It’s really unbelievable. Looking back, many of us only barely scraped through advanced mathematics, and now AI scores 81 points in one go. I unilaterally declare that AI has surpassed human beings.

Even more impressive, besides solving problems that ordinary machine learning models struggle with, the research shows that the technique scales: it can solve problems from the courses it was tested on and from similar courses.

This is also the first time that a single machine learning model has solved mathematical problems at this scale while also explaining the solutions, drawing plots and even generating new questions!

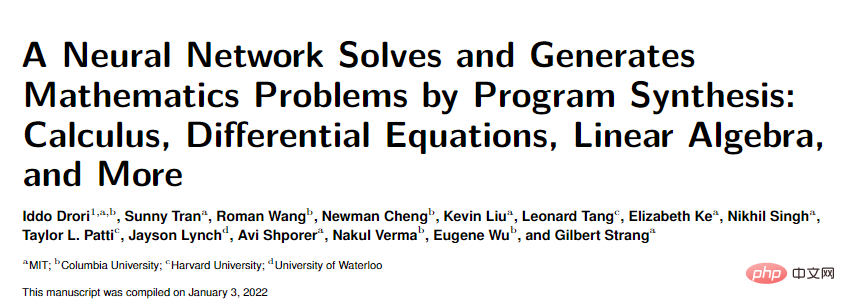

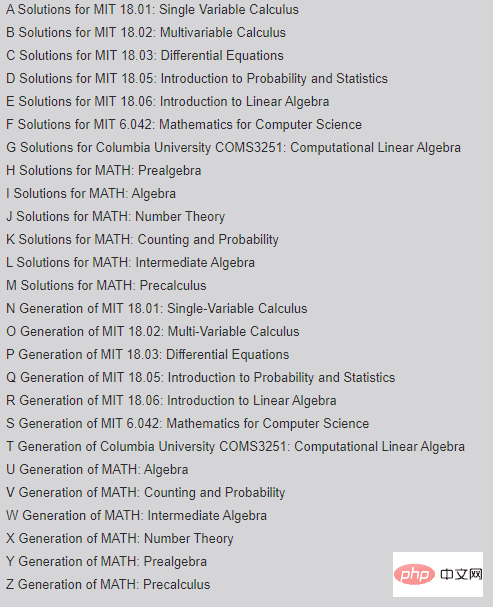

In fact, this paper was first released at the beginning of the year. After half a year of revision, it has grown from 114 pages to 181 pages and covers more mathematical problems, and its appendices now run all the way from A to Z.

The authors are affiliated with four main institutions: MIT, Columbia University, Harvard University and the University of Waterloo.

The first author, Iddo Drori, is a lecturer in the AI department within the Department of Electrical Engineering and Computer Science at MIT and an adjunct associate professor at Columbia University's School of Engineering and Applied Sciences. He won the CCAI NeurIPS 2021 Best Paper Award.

His main research directions are machine learning for education, which tries to get machines to solve, explain and generate problems for college-level mathematics and STEM courses; machine learning for climate science, which uses thousands of years of data to predict extreme climate change and monitor the climate, integrating multidisciplinary work to predict multi-year changes in ocean biogeochemistry in the Atlantic Ocean; machine learning algorithms for autonomous driving; and more.

He is also the author of The Science of Deep Learning published by Cambridge University Press.

Before this paper, most researchers believed that neural networks could not handle advanced mathematics and could only solve simple mathematical problems.

Even though Transformer models surpass human performance on various NLP tasks, they are still not good at solving mathematical problems. The main reason is that large models such as GPT-3 are pre-trained only on text data.

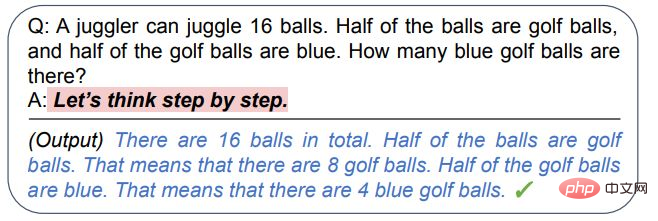

Later, some researchers discovered that a language model can still be guided to reason through and answer some simple mathematical questions by step-by-step analysis (chain of thought), but advanced mathematics problems are not so easy to solve.

When the target is advanced mathematics, the first step is to collect a batch of training data.

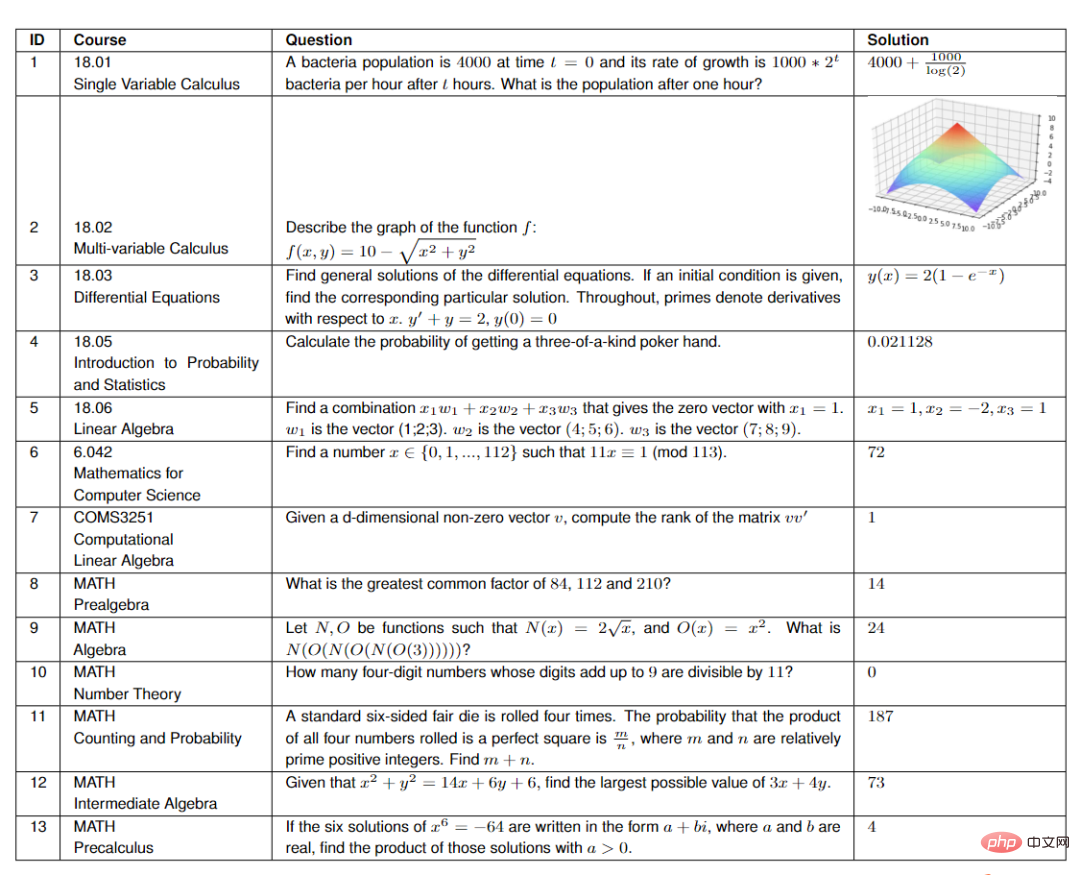

The authors randomly selected 25 problems from each of seven courses at MIT.

For the MATH dataset, the researchers randomly selected 15 questions from each of six topics of the dataset (Prealgebra, Algebra, Counting and Probability, Intermediate Algebra, Number Theory, and Precalculus).

To verify that the model's results are not simply overfitting to the training data, the researchers also used COMS3251, a course whose material has not been published on the Internet.

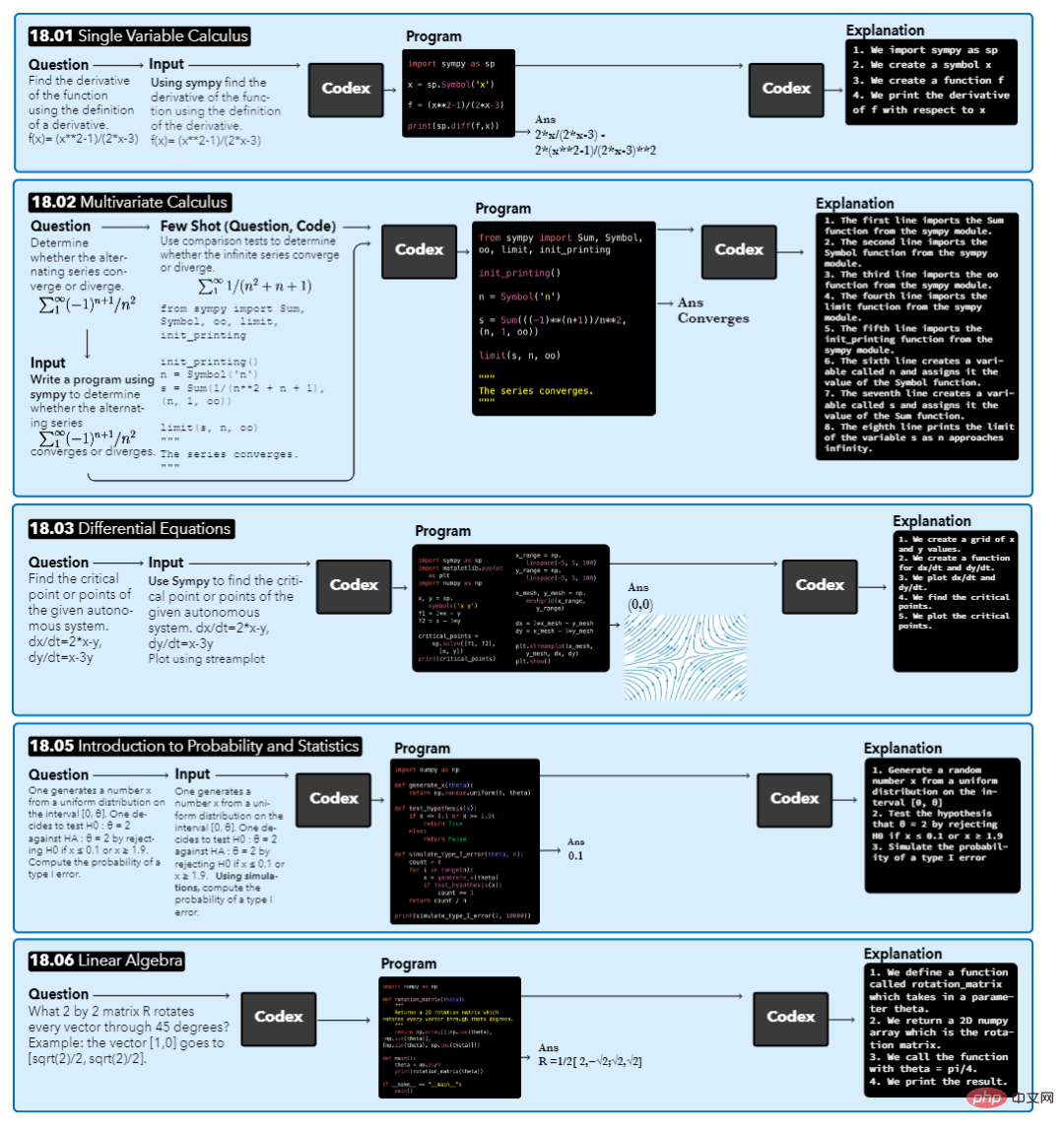

The model takes a course question as input, automatically augments it with context, synthesizes a program from the augmented question, and finally outputs the answer along with a generated explanation.

The form of the output varies from question to question. For example, the answer to 18.01 is an equation, the answer to 18.02 is a Boolean value, the answers to 18.03 and 18.06 are a graph or vector, and the answer to 18.05 is a numerical value.

Given a question, the first step is to have the model find the relevant context for it. The researchers focused on Python programs generated by Codex, so they added the text "write a program" before the question and placed the text inside triple quotes, as if it were a docstring in a Python program.

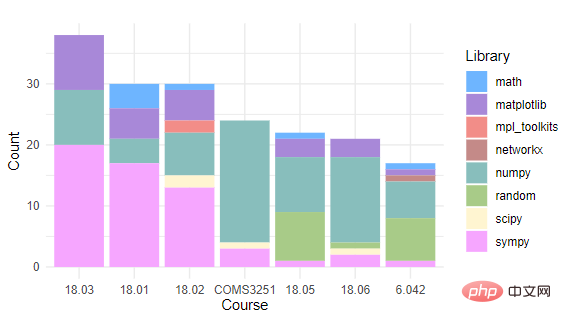

Codex also needs to be told which libraries to import. The authors added the string "use sympy" before the question as context, specifying that the program synthesized to solve the problem should use this package.

Counting the Python packages used in each course shows that all courses use NumPy and SymPy. Matplotlib is used only in courses with problems that require plotting. About half of the courses use math, random, and SciPy. In practice, the researchers only specified SymPy or plotting-related packages to import; the other imports were synthesized automatically.
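The prompt construction described above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: the function name `build_prompt`, the separator between the context and the question, and the exact ordering of "use sympy" and "write a program" are assumptions; only the two strings and the triple-quoted docstring wrapper come from the article.

```python
def build_prompt(question, library=None):
    """Wrap a course question as a docstring-style prompt for a code model.

    `library` is an optional package hint such as "sympy".
    The layout below is an assumed format, not the paper's exact one.
    """
    parts = []
    if library:
        parts.append(f"use {library}")   # context: which package to rely on
    parts.append("write a program")      # instruction placed before the question
    prefix = ", ".join(parts)
    # Triple quotes make the text look like a Python docstring.
    return f'"""\n{prefix}: {question}\n"""\n'


if __name__ == "__main__":
    print(build_prompt("Find the derivative of x**2 with respect to x.", "sympy"))
```

The returned string would then be sent to the code model, which is expected to continue it with a program.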
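One way such a package count could be reproduced is to parse each synthesized program and tally its top-level imports. The sketch below uses only the standard `ast` module; the helper names `imported_packages` and `package_usage` are hypothetical, and the article does not say how the authors actually did the counting.

```python
import ast
from collections import Counter


def imported_packages(source):
    """Return the set of top-level packages imported by a Python program."""
    packages = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            for alias in node.names:
                packages.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # Skip relative imports (level > 0); keep absolute "from x import y".
            packages.add(node.module.split(".")[0])
    return packages


def package_usage(programs):
    """Count in how many programs each package is imported."""
    counts = Counter()
    for source in programs:
        counts.update(imported_packages(source))
    return counts


if __name__ == "__main__":
    demo = [
        "import numpy as np\nfrom sympy import symbols",
        "import numpy\nimport matplotlib.pyplot as plt",
    ]
    print(package_usage(demo))
```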

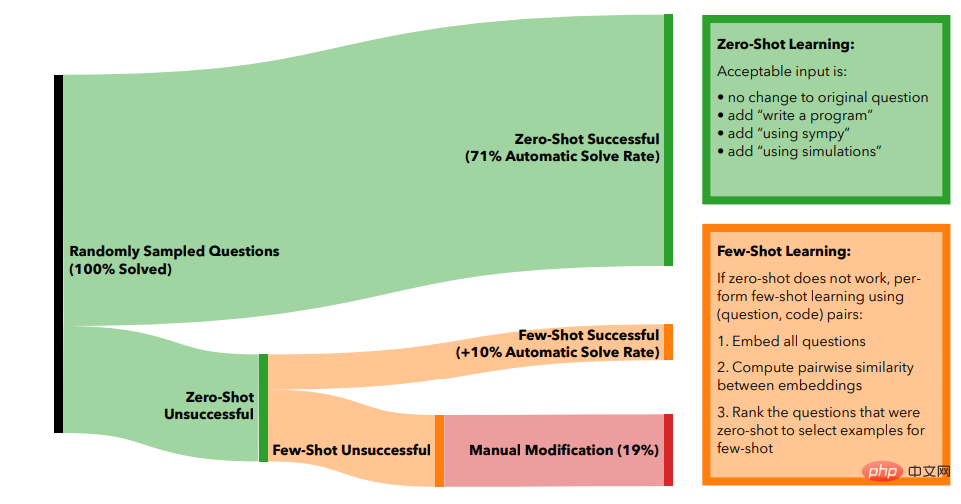

With zero-shot learning, 71% of the problems can be solved automatically using only automatic augmentation of the original question.

If a problem remains unsolved, the researchers try few-shot learning.

First, they use OpenAI's text-similarity-babbage-001 embedding engine to obtain a 2048-dimensional embedding of every problem, then compute the cosine similarity between the vectors to find, for each unsolved problem, the solved problems most similar to it. Finally, the most similar solved problem and its corresponding code are used as a few-shot example for the new problem.
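The similarity ranking step can be illustrated with a small pure-Python sketch. It assumes the embeddings have already been obtained and are plain lists of floats with nonzero norm; the function names are hypothetical, and the tiny 2-dimensional vectors stand in for the 2048-dimensional ones mentioned above.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def rank_solved_by_similarity(unsolved_vec, solved_vecs):
    """Return indices of solved problems, most similar to the unsolved one first."""
    scores = [cosine_similarity(unsolved_vec, v) for v in solved_vecs]
    return sorted(range(len(solved_vecs)), key=lambda i: scores[i], reverse=True)


if __name__ == "__main__":
    unsolved = [1.0, 0.0]
    solved = [[0.0, 1.0], [1.0, 0.1], [1.0, 1.0]]
    print(rank_solved_by_similarity(unsolved, solved))
```

The first index in the returned list identifies the solved problem whose question and code would serve as the first few-shot example.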

If the generated code does not output the correct answer, add another solved question-code pair, each time using the next similar solved question.

In practice, using up to 5 examples for few-shot learning works best. The share of problems that can be solved automatically increases from 71% with zero-shot learning to 81% with few-shot learning.
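The incremental few-shot procedure might look like the sketch below. Both `generate` (a call to the code model) and `is_correct` (a check of the program's output against the known answer) are hypothetical callables supplied by the caller, and the prompt layout is an assumption; only the idea of adding one more similar solved example per attempt, up to five, comes from the article.

```python
def solve_with_few_shot(question, ranked_examples, generate, is_correct, max_examples=5):
    """Try 1, 2, ... up to max_examples few-shot examples until a program is correct.

    ranked_examples: list of (question, code) pairs, most similar first.
    Returns (code, number_of_examples_used), or (None, attempts_made) on failure.
    """
    limit = min(max_examples, len(ranked_examples))
    for k in range(1, limit + 1):
        shots = ranked_examples[:k]
        # Each example is shown as a docstring-style question followed by its code.
        prompt = "".join(f'"""\n{q}\n"""\n{code}\n\n' for q, code in shots)
        prompt += f'"""\n{question}\n"""\n'
        code = generate(prompt)
        if is_correct(code):
            return code, k
    return None, limit
```

Passing the model call and the answer check in as functions keeps the loop itself independent of any particular API.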

To solve the remaining 19% of the problems, human editors are required to intervene.

The researchers first collected all of these questions and found that most of them were vague or contained redundant information, such as references to movie characters or current events. The questions needed to be tidied up to extract their essence.

Tidying a question mainly involves removing redundant information, breaking long sentence structures into smaller components, and converting the prompt into a programming format.

Manual intervention is also needed when answering a question requires several plotting steps, in which case Codex has to be prompted interactively until the desired visualization is achieved.

In addition to generating answers, the model should also be able to explain them. The researchers elicit this with the prompt "Here is what the above code is doing: 1.", after which the model generates a step-by-step explanation.
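As a rough illustration, the explanation prompt could be assembled by appending that cue to the question and the synthesized program. The function name, the docstring wrapping and the line break before "1." are assumptions made for this sketch; the cue text itself is quoted from the article.

```python
# Cue text from the article; the newline before "1." is an assumed layout.
EXPLANATION_CUE = "Here is what the above code is doing:\n1."


def build_explanation_prompt(question, program):
    """Combine question, program and cue so the model continues with numbered steps."""
    return f'"""\n{question}\n"""\n{program.rstrip()}\n\n"""\n{EXPLANATION_CUE}'


if __name__ == "__main__":
    print(build_explanation_prompt(
        "Differentiate x**2.",
        "import sympy\nx = sympy.symbols('x')\nprint(sympy.diff(x**2, x))",
    ))
```

Ending the prompt on "1." nudges the model to continue with a numbered, step-by-step explanation.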

After being able to answer the questions, the next step is to use Codex to generate new questions for each course.

The researchers created a numbered list of questions written by students in each class. This list was cut off after a random number of questions, and the results were used to prompt Codex to generate the next question.

This process is repeated until enough new questions have been created for each course.
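The question-generation prompt described above can be sketched as a numbered list cut off at a random point, ending with the next number left open for the model to complete. The function name and the `rng` and `min_keep` parameters are hypothetical and exist only for this illustration.

```python
import random


def build_generation_prompt(questions, rng=None, min_keep=1):
    """Number the questions, keep a random-length prefix, and leave the next item open."""
    rng = rng or random.Random()
    keep = rng.randint(min_keep, len(questions))  # inclusive on both ends
    lines = [f"{i}. {q}" for i, q in enumerate(questions[:keep], start=1)]
    lines.append(f"{keep + 1}.")  # the model is expected to write this question
    return "\n".join(lines)


if __name__ == "__main__":
    course_questions = [
        "Find the eigenvalues of a 2x2 matrix.",
        "Compute the rank of a 3x3 matrix.",
        "Determine whether three vectors are linearly independent.",
    ]
    print(build_generation_prompt(course_questions, rng=random.Random(0)))
```

Calling the function repeatedly with fresh random cut-off points yields a different prompt each time, which is how enough new questions could be collected per course.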

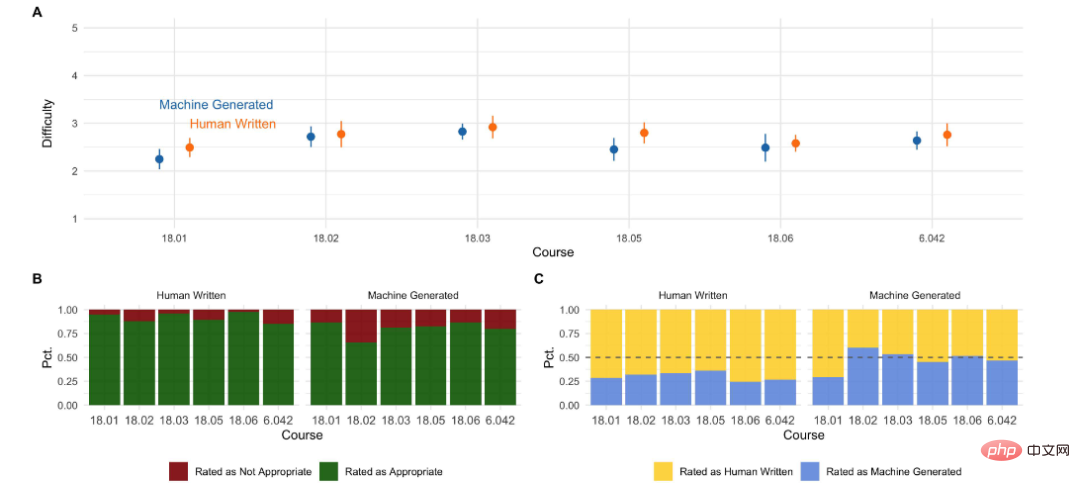

To evaluate the generated questions, the researchers surveyed MIT students who had taken these courses or their equivalents, asking them to compare the quality and difficulty of the machine-generated questions with those of the original course questions.

From the results of the student survey we can see:

Reference Information:

https://www.reddit.com/r/artificial/comments/v8liqh/researchers_built_a_neural_network_that_not_only/
