A Tesla running Full Self-Driving hit a child dummy three times, then accelerated without stopping after the collision

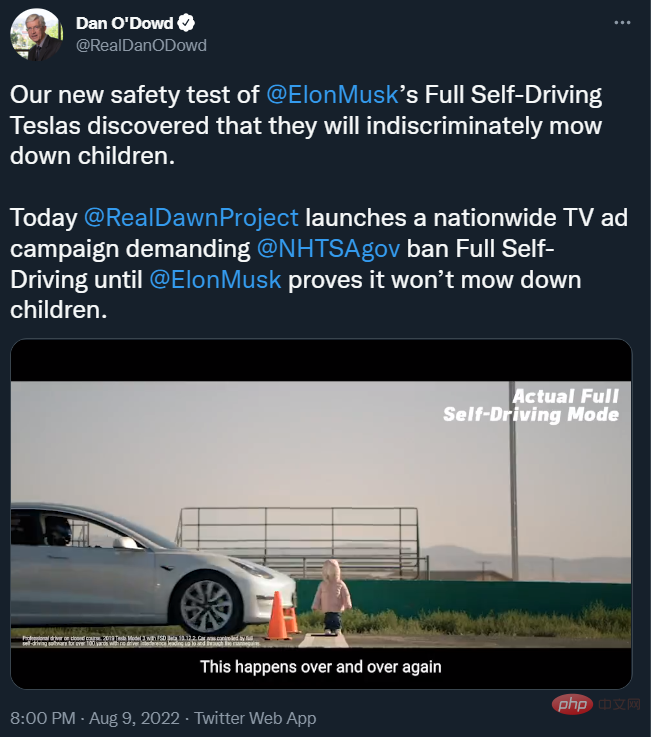

Dan O'Dowd is the CEO of the embedded software company Green Hills Software. Last year he launched an initiative called "The Dawn Project" to ban unsafe software from safety-critical systems. Its work includes testing Tesla's autonomous driving software.

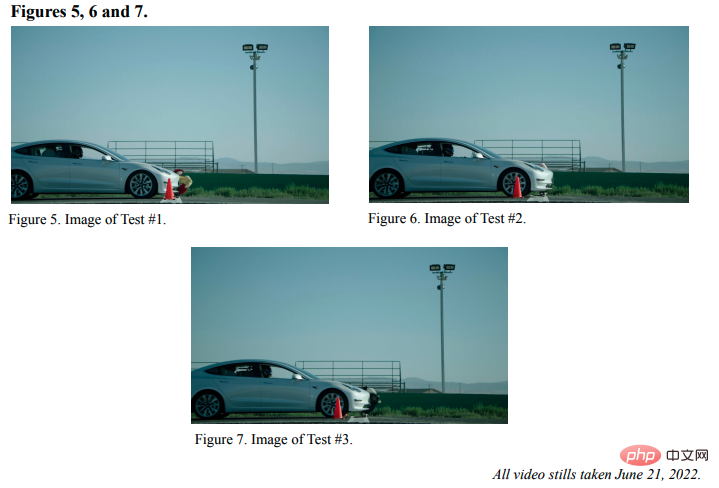

The Dawn Project recently ran a new test to simulate how a self-driving car reacts when a child crosses the road in front of it. The results showed that a Model 3 running FSD Beta 10.12.2 self-driving software will hit a child-sized humanoid model.

During the test, the Model 3 struck the mannequin hard enough that parts of it broke off:

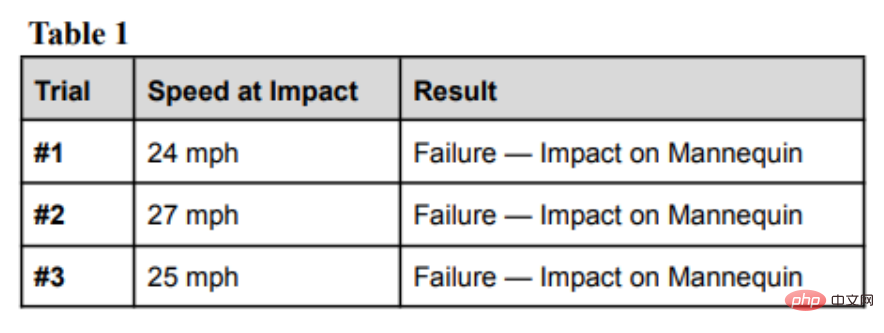

The project team ran the test three times, and the car hit the mannequin every time. That no longer looks like a fluke; it suggests the Model 3's FSD system poses a real safety hazard.

Here is how the test was carried out.

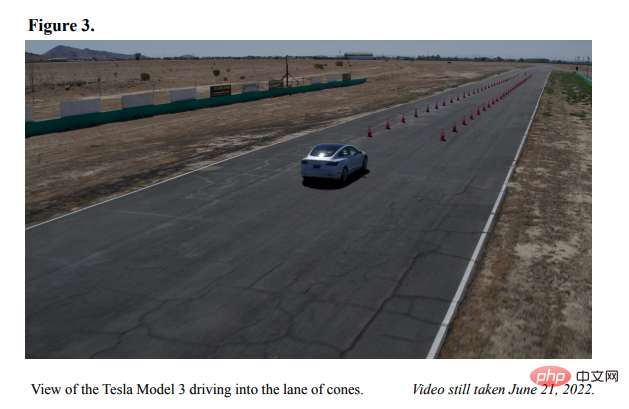

To make the test environment as favorable as possible to Tesla's FSD system, every variable on the road that might affect the result, apart from the vehicle and the humanoid model, was removed.

Standard traffic cones lined both sides of the test track, and a child-sized mannequin was placed in the middle of the lane at the end of the track, like a child trying to cross the road. In each run, a professional test driver first brought the car up to 40 miles per hour. Once the vehicle entered the test track, the driver switched to FSD mode and from then on did not touch the steering wheel, accelerator, or brake.

The Model 3 running FSD Beta 10.12.2 was traveling at 40 mph when it entered FSD mode. In all three runs, it hit the humanoid model ahead of it. The speed at the moment of collision is shown in the table below.

According to the driver's report, the Model 3 running FSD Beta 10.12.2 behaved as if it were lost while in FSD mode. It slowed down slightly, struck the humanoid model at approximately 25 mph, and then accelerated again after the impact.

This result contradicts Musk's long-standing claims that FSD is safe. In January this year, Musk tweeted, "None of the accidents were caused by Tesla FSD," but that was not the case. According to the Los Angeles Times, dozens of drivers have filed safety complaints with the National Highway Traffic Safety Administration (NHTSA) over crashes involving FSD.

Last month, the California Department of Motor Vehicles (DMV) also accused Tesla of making false claims about its Autopilot and FSD features.

Although FSD is marketed as fully autonomous, in practice it is only an optional add-on. Its main functions are changing lanes automatically, entering and exiting highways, recognizing stop signs and traffic signals, and parking. The software is still in beta, yet more than 100,000 customers have bought it. Tesla is testing the software with these customers in real time, trying to get the AI system to learn from experienced drivers.
