Crawler parsing method five: XPath

Crawlers can be written in many languages, but Python-based crawlers are more concise and convenient, and crawling has become an essential part of the Python ecosystem. There are also many ways to parse the pages a crawler fetches. The previous article covered the fourth parsing method, PyQuery. This one covers another: XPath.

Basic use of XPath in Python crawlers

1. Introduction

XPath is a language for finding information in XML documents. XPath can be used to traverse elements and attributes in XML documents. XPath is a major element of the W3C XSLT standard, and both XQuery and XPointer are built on XPath expressions.

2. Installation

pip3 install lxml

3. Use

1. Import

from lxml import etree

2. Basic usage

from lxml import etree

# HTML source to parse (its content is not shown here)
wb_data = """ """
html = etree.HTML(wb_data)
print(html)
result = etree.tostring(html)
print(result.decode("utf-8"))

As the output shows, the html we print is actually a Python object (an lxml element), while etree.tostring(html) serializes it back to HTML markup, with lxml filling in the tags that were missing, such as html and body.
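To see the tag completion in action, here is a minimal sketch. The article's own wb_data is not shown, so the markup below is a hypothetical stand-in with no html or body wrapper and one li that is never closed.

```python
from lxml import etree

# Hypothetical sample markup (not the article's original data): there is no
# <html> or <body> wrapper, and the last <li> is never closed.
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second item</a>
  </ul>
</div>
"""

html = etree.HTML(wb_data)   # parse with lxml's lenient HTML parser
print(type(html))            # an lxml element object, not a string

# Serialize the tree back to markup; the parser has filled in the gaps.
result = etree.tostring(html, pretty_print=True).decode("utf-8")
print(result)
```

The serialized result should contain the html and body wrappers and a closing tag for each li, even though the input had neither.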

3. Get the content of a tag (basic use). Note that to select the a tags themselves, you must not add a trailing slash after a; a path that ends in / is invalid and raises an error.

Method one

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a')
print(html)
for i in html_data:
    print(i.text)

first item
second item
third item
fourth item
fifth item
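One detail of this first approach is worth a small sketch: an element's .text holds only the text that comes before its first child element. The markup below is a hypothetical stand-in for wb_data, with a nested b tag added purely to show the difference.

```python
from lxml import etree

# Hypothetical stand-in for the article's wb_data (the original is not shown).
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second <b>bold</b> item</a></li>
  </ul>
</div>
"""

html = etree.HTML(wb_data)
links = html.xpath('/html/body/div/ul/li/a')   # a list of <a> elements

# .text holds only the text before the first child element.
direct_text = [a.text for a in links]

# itertext() walks the element and its descendants, so nested text is included.
full_text = ["".join(a.itertext()) for a in links]

print(direct_text)
print(full_text)
```

When the tags contain only plain text, as in the article's example, .text is enough; itertext() is the safer choice once inline markup appears inside the tag.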

Method two (append /text() directly to the path of the tag whose content you want)

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a/text()')
print(html)
for i in html_data:
    print(i)

first item
second item
third item
fourth item
fifth item

4. Open and read an HTML file

# Open the HTML file with parse
html = etree.parse('test.html')
html_data = html.xpath('//*')

# The result is a list, so iterate over it
print(html_data)
for i in html_data:
    print(i.text)
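One caveat: etree.parse uses lxml's XML parser unless told otherwise, so a file that is not well-formed XML (an unclosed tag, for example) raises an error. A minimal sketch of the workaround follows; the file content and the temporary path are assumptions made for illustration.

```python
import os
import tempfile

from lxml import etree

# Hypothetical file content; the unclosed <li> trips the strict XML parser.
content = "<div><ul><li><a href='link1.html'>first item</a></ul></div>"

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "test.html")
    with open(path, "w", encoding="utf-8") as f:
        f.write(content)

    # Default parser: strict XML, fails on the mismatched tags.
    try:
        etree.parse(path)
        strict_ok = True
    except etree.XMLSyntaxError:
        strict_ok = False

    # Passing an HTMLParser makes parse() tolerate real-world HTML.
    tree = etree.parse(path, etree.HTMLParser())
    texts = tree.xpath('//a/text()')

print(strict_ok)
print(texts)
```

If test.html is already well-formed, the plain etree.parse('test.html') call from the article works as written.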

html = etree.parse('test.html')
html_data = etree.tostring(html, pretty_print=True)
res = html_data.decode('utf-8')
print(res)

Print:

5. Print an attribute of the a tags under a specified path (iterate over the result to read each attribute value)

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a/@href')
for i in html_data:
    print(i)

Print:

link1.html
link2.html
link3.html
link4.html
link5.html

6. xpath() returns a list of results (Element objects, or strings for text() and attribute queries), so you still need to iterate over the list to get the content. Here we find the text of the a tag whose href attribute equals link2.html, using an absolute path.

html = etree.HTML(wb_data)
html_data = html.xpath('/html/body/div/ul/li/a[@href="link2.html"]/text()')
print(html_data)
for i in html_data:
    print(i)

Print:

['second item']

second item
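The attribute predicate can be sketched on a small hypothetical document (the article's wb_data is not shown). It also shows what happens when nothing matches: xpath() returns an empty list instead of raising an error.

```python
from lxml import etree

# Hypothetical stand-in for the article's wb_data.
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second item</a></li>
    <li><a href="link3.html">third item</a></li>
  </ul>
</div>
"""

html = etree.HTML(wb_data)

# [@href="..."] keeps only the <a> elements whose href equals the given value.
match = html.xpath('/html/body/div/ul/li/a[@href="link2.html"]/text()')

# A value that does not occur yields an empty list, not an exception.
missing = html.xpath('/html/body/div/ul/li/a[@href="nope.html"]/text()')

print(match)
print(missing)
```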

7. Everything above used absolute paths, where each query starts from the root. Next we use relative paths, for example to find the content of the a tags under every li tag.

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a/text()')
print(html_data)
for i in html_data:
    print(i)

Print:

['first item', 'second item', 'third item', 'fourth item', 'fifth item']
first item
second item
third item
fourth item
fifth item

8. Earlier we used an absolute path (starting with /) to read the href attribute of every a tag. Next we use a relative path to get the href values of the a tags under the li tags. The example writes a//@href, which matches href attributes on a and on anything nested inside it; a/@href is enough when only the a tag's own attribute is wanted.

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a//@href')
print(html_data)
for i in html_data:
    print(i)

Print:

['link1.html', 'link2.html', 'link3.html', 'link4.html', 'link5.html']
link1.html
link2.html
link3.html
link4.html
link5.html
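The difference between /@href and //@href is easiest to see on an element that has no href of its own. A minimal sketch on hypothetical markup (not the article's wb_data):

```python
from lxml import etree

# Hypothetical stand-in for the article's wb_data.
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second item</a></li>
    <li><a href="link3.html">third item</a></li>
  </ul>
</div>
"""

html = etree.HTML(wb_data)

# On <a> both forms agree, because nothing nested inside <a> carries an href.
own = html.xpath('//li/a/@href')
deep = html.xpath('//li/a//@href')

# On <ul> they differ: /@href looks only at <ul> itself,
# //@href also descends into the <a> tags below it.
ul_own = html.xpath('//ul/@href')
ul_deep = html.xpath('//ul//@href')

print(own, deep)
print(ul_own, ul_deep)
```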

9. Filtering on a specific attribute works the same way with a relative path as with an absolute path.

html = etree.HTML(wb_data)
html_data = html.xpath('//li/a[@href="link2.html"]')
print(html_data)
for i in html_data:
    print(i.text)

Print:

[<Element a at 0x...>]
second item
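Because this query returns Element objects instead of strings, each result can be inspected further. A minimal sketch on hypothetical markup (the article's wb_data is not shown):

```python
from lxml import etree

# Hypothetical stand-in for the article's wb_data.
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second item</a></li>
  </ul>
</div>
"""

html = etree.HTML(wb_data)
elements = html.xpath('//li/a[@href="link2.html"]')   # list of Element objects

for a in elements:
    # tag name, one attribute via get(), and the element's text
    print(a.tag, a.get("href"), a.text)
```

get() returns None when the attribute is absent, which avoids a KeyError when some tags lack the attribute.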

10. Find the text of the a tag inside the last li tag

html = etree.HTML(wb_data)
html_data = html.xpath('//li[last()]/a/text()')
print(html_data)
for i in html_data:
    print(i)

Print:

['fifth item']
fifth item

11. Find the text of the a tag inside the second-to-last li tag

html = etree.HTML(wb_data)
html_data = html.xpath('//li[last()-1]/a/text()')
print(html_data)
for i in html_data:
    print(i)

Print:

['fourth item']
fourth item
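The positional predicates can be sketched together on a hypothetical five-item list (standing in for the article's wb_data, which is not shown). XPath positions start at 1, and the same predicates work when selecting @href instead of text().

```python
from lxml import etree

# Hypothetical stand-in for the article's wb_data.
wb_data = """
<div>
  <ul>
    <li><a href="link1.html">first item</a></li>
    <li><a href="link2.html">second item</a></li>
    <li><a href="link3.html">third item</a></li>
    <li><a href="link4.html">fourth item</a></li>
    <li><a href="link5.html">fifth item</a></li>
  </ul>
</div>
"""

html = etree.HTML(wb_data)

last = html.xpath('//li[last()]/a/text()')             # last <li>
second_last = html.xpath('//li[last()-1]/a/text()')    # one before the last
first = html.xpath('//li[1]/a/text()')                 # positions start at 1
first_two = html.xpath('//li[position()<3]/a/text()')  # first two <li> tags
last_href = html.xpath('//li[last()]/a/@href')         # attribute, not text

print(last, second_last, first, first_two, last_href)
```

Positions are counted among the li siblings of each parent, so a page with several lists returns one "last" item per list.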

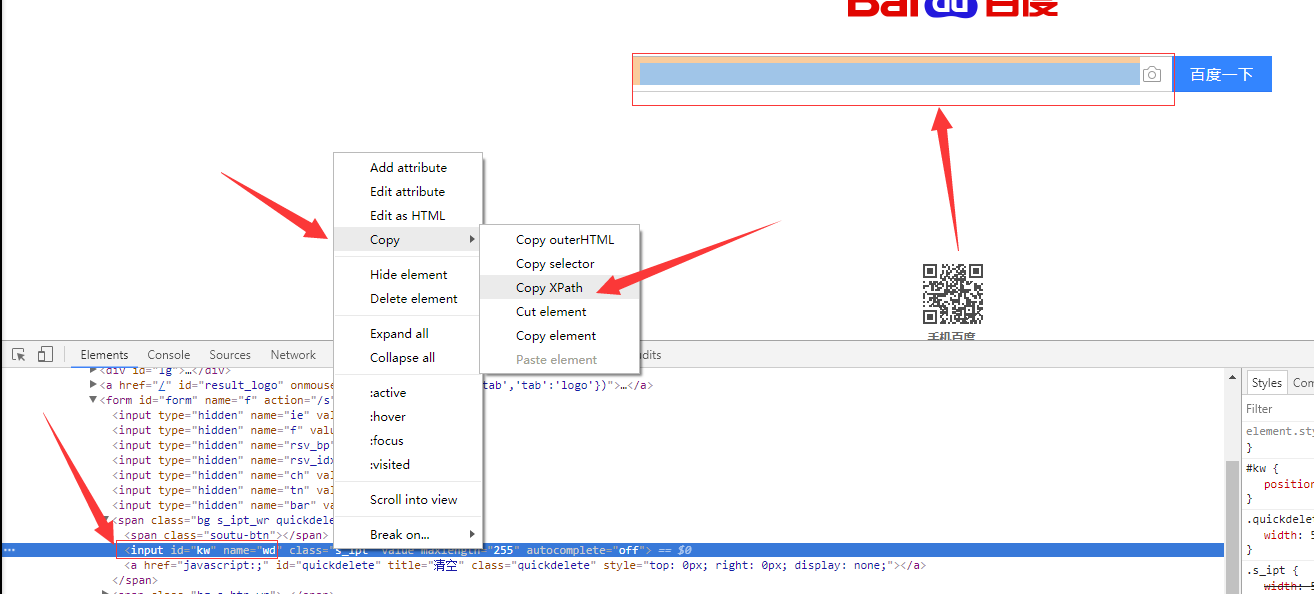

12. To get the XPath of a particular tag on a page, you can do it as shown in the figure:

//*[@id="kw"]

Explanation: use a relative path to search all tags and select the one whose id attribute equals kw.
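As a minimal sketch, the same expression can be run with lxml against a small page. The markup below is hypothetical and only assumes that some element carries id="kw".

```python
from lxml import etree

# Hypothetical page fragment with an element whose id is "kw".
page = """
<html><body>
  <form>
    <input id="kw" name="wd" type="text" value="">
    <input id="su" type="submit" value="Search">
  </form>
</body></html>
"""

root = etree.HTML(page)

# * matches any tag name; the predicate keeps only the element with id="kw".
nodes = root.xpath('//*[@id="kw"]')

for node in nodes:
    print(node.tag, dict(node.attrib))
```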

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# HtmlXPathSelector is deprecated in favor of Selector, so only Selector is imported.
from scrapy.selector import Selector
from scrapy.http import HtmlResponse

html = """"""
response = HtmlResponse(url='http://example.com', body=html, encoding='utf-8')

# hxs = HtmlXPathSelector(response)  # legacy API
# print(hxs)
# hxs = Selector(response=response).xpath('//a')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[2]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[@href="link.html"][@id="i1"]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[contains(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath('//a[starts-with(@href, "link")]')
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]')
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]/text()').extract()
# print(hxs)
# hxs = Selector(response=response).xpath(r'//a[re:test(@id, "i\d+")]/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('/html/body/ul/li/a/@href').extract()
# print(hxs)
# hxs = Selector(response=response).xpath('//body/ul/li/a/@href').extract_first()
# print(hxs)

# ul_list = Selector(response=response).xpath('//body/ul/li')
# for item in ul_list:
#     v = item.xpath('./a/span')
#     # or
#     # v = item.xpath('a/span')
#     # or
#     # v = item.xpath('*/a/span')
#     print(v)
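The predicates used in the Scrapy snippet can also be tried with lxml alone. This is a sketch under assumptions: the article's html string is empty, so the markup below is hypothetical, and the regex is anchored with ^ and $ for clarity. Unlike Scrapy selectors, lxml needs the EXSLT regular-expressions namespace passed in explicitly for re:test.

```python
from lxml import etree

# Hypothetical markup; the article's own html string is not shown.
html = """
<ul>
  <li><a id="i1" href="link.html">first item</a></li>
  <li><a id="i2" href="llink.html">second item</a></li>
  <li><a href="llink2.html">third item<span>vv</span></a></li>
</ul>
"""

root = etree.HTML(html)

# Namespace prefix required by lxml for the re:test() extension function.
RE_NS = {"re": "http://exslt.org/regular-expressions"}

with_id = root.xpath('//a[@id]/@href')                        # <a> tags that have an id
contains = root.xpath('//a[contains(@href, "link")]/@href')   # href contains "link"
starts = root.xpath('//a[starts-with(@href, "link")]/@href')  # href starts with "link"
regex = root.xpath(r'//a[re:test(@id, "^i\d+$")]/@id', namespaces=RE_NS)

# A path starting with ./ is evaluated relative to each <li> in turn.
spans = [s.text for li in root.xpath('//li') for s in li.xpath('./a/span')]

print(with_id, contains, starts, regex, spans)
```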
