How to call and implement the least squares method in Python

The linear least squares method can be understood as an extension of solving systems of equations. The difference is that when the number of unknowns is far smaller than the number of equations, the system is overdetermined and generally has no exact solution. The essence of the least squares method is to assign values to the unknowns so that the error is minimized.

The least squares method is a classic and extremely widely used algorithm; most of us first met the name in high school. I have previously written about the principle of linear least squares and implemented it in Python (least squares and its Python implementation), about how to call nonlinear least squares in scipy (nonlinear least squares; supplementary content at the end of the article), and about least squares for sparse matrices (sparse matrix least squares method).

The following describes the linear least squares method implemented in numpy and scipy, and compares the speed of the two.

In numpy, least squares is implemented by lstsq(a, b), which solves for x in a@x=b, where a is an M×N matrix. When b is a vector with M entries, this is equivalent to solving a system of linear equations. For a system Ax=b, if A is a full-rank square matrix, the solution can be written as $x = A^{-1}b$; otherwise, provided A has full column rank, the least squares solution can be written as $x = (A^{T}A)^{-1}A^{T}b$.
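As a rough check of the formula above, the following sketch compares the normal-equation solution with the result of lstsq on a small overdetermined system. The names A_over and b_over and the 6×3 size are chosen only for illustration, and the random matrix is assumed to have full column rank.

```python
import numpy as np

rng = np.random.default_rng(0)
A_over = rng.random((6, 3))   # 6 equations, 3 unknowns (illustrative size)
b_over = rng.random(6)

# Normal equations: solve (A^T A) x = A^T b instead of forming the inverse
x_normal = np.linalg.solve(A_over.T @ A_over, A_over.T @ b_over)

# The same problem solved by numpy's least squares routine
x_lstsq = np.linalg.lstsq(A_over, b_over, rcond=None)[0]

print(np.allclose(x_normal, x_lstsq))
```

For ill-conditioned matrices the two can differ noticeably, because forming $A^{T}A$ squares the condition number; that is one reason to prefer lstsq in practice.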

When b is an M×K matrix, a separate solution x is computed for each column of b.
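A small sketch of the multi-column case, assuming M=5, N=3 and K=2 (sizes and variable names picked only for illustration): each column of the returned array is the solution for the matching column of the right-hand side.

```python
import numpy as np

rng = np.random.default_rng(1)
a_mat = rng.random((5, 3))        # M=5, N=3
x_true = rng.random((3, 2))       # one column of unknowns per right-hand side
b_mat = a_mat @ x_true            # M×K right-hand side with K=2

x_cols = np.linalg.lstsq(a_mat, b_mat, rcond=None)[0]
print(x_cols.shape)               # (3, 2): one solution column per column of b_mat
```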

lstsq returns 4 values: the fitted x, the fitting error (the sum of squared residuals), the rank of matrix a, and the singular values of matrix a.
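To make the four return values concrete, here is a minimal sketch that fits a straight line to four made-up data points. The data and the names t_obs, y_obs and design are hypothetical.

```python
import numpy as np

t_obs = np.array([0.0, 1.0, 2.0, 3.0])
y_obs = np.array([1.1, 2.9, 5.2, 6.8])             # made-up measurements
design = np.column_stack([t_obs, np.ones_like(t_obs)])   # columns: slope, intercept

coef, residuals, rank, sing_vals = np.linalg.lstsq(design, y_obs, rcond=None)

print(coef)        # fitted slope and intercept
print(residuals)   # sum of squared residuals, as a length-1 array
print(rank)        # rank of the design matrix
print(sing_vals)   # singular values of the design matrix
```

Note that numpy returns an empty residuals array when the matrix is rank-deficient or when there are no more equations than unknowns.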

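```python
import numpy as np

np.random.seed(42)
M = np.random.rand(4, 4)
x = np.arange(4)
y = M @ x
# rcond=None avoids the FutureWarning raised by numpy versions before 2.0
xhat = np.linalg.lstsq(M, y, rcond=None)
print(xhat[0])
# [0. 1. 2. 3.] (up to floating-point rounding)
```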

scipy.linalg also provides the least squares function. The function name is also lstsq, and its parameter list is

lstsq(a, b, cond=None, overwrite_a=False, overwrite_b=False, check_finite=True, lapack_driver=None)

Here a and b are the A and b of Ax=b. Both have overwrite switches (overwrite_a and overwrite_b); setting them to True allows the function to overwrite the input data, which can save running time. The function also supports finiteness checking through check_finite, an option shared by many functions in scipy.linalg. Its return values are the same as those of the least squares function in numpy.

cond is a floating-point parameter giving the cutoff for small singular values, which determines the effective rank of a. Singular values smaller than cond times the largest singular value are treated as zero.

lapack_driver is a string option that selects which LAPACK driver is used: 'gelsd' (the default), 'gelsy', or 'gelss'.
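The effect of cond and lapack_driver can be sketched on a deliberately rank-deficient matrix. The matrix A_def below is a made-up example whose third column is the sum of the first two, and the cutoff 1e-10 is an arbitrary illustrative choice.

```python
import numpy as np
import scipy.linalg as sl

# Third column = first column + second column, so the true rank is 2
A_def = np.array([[1.0, 0.0, 1.0],
                  [0.0, 1.0, 1.0],
                  [1.0, 1.0, 2.0],
                  [2.0, 1.0, 3.0]])
b_def = A_def @ np.array([1.0, 2.0, 0.0])   # a right-hand side that can be matched exactly

# Singular values below cond * (largest singular value) are treated as zero
x_gelsd, _, rank_gelsd, _ = sl.lstsq(A_def, b_def, cond=1e-10, lapack_driver='gelsd')
x_gelsy, _, rank_gelsy, _ = sl.lstsq(A_def, b_def, cond=1e-10, lapack_driver='gelsy')

print(rank_gelsd, rank_gelsy)
print(np.allclose(A_def @ x_gelsd, b_def), np.allclose(A_def @ x_gelsy, b_def))
```

Because the system is rank-deficient, there are infinitely many exact solutions, so the two drivers are only compared here through the reported rank and through how well each solution reproduces b_def.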

```python
import scipy.linalg as sl

xhat1 = sl.lstsq(M, y)
print(xhat1[0])
# [0. 1. 2. 3.]
```

Finally, make a speed comparison between the two sets of least squares functions

```python
from timeit import timeit

N = 100
A = np.random.rand(N, N)
b = np.arange(N)
timeit(lambda: np.linalg.lstsq(A, b), number=10)
# 0.015487500000745058
timeit(lambda: sl.lstsq(A, b), number=10)
# 0.011151800004881807
```

Here the two are close. Even when the matrix dimension is enlarged to 500, they remain about the same.

```python
N = 500
A = np.random.rand(N, N)
b = np.arange(N)
timeit(lambda: np.linalg.lstsq(A, b), number=10)
# 0.389679799991427
timeit(lambda: sl.lstsq(A, b), number=10)
# 0.35642060000100173
```

Python calls the nonlinear least squares method

Introduction and constructor

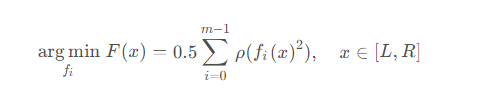

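In scipy, the purpose of the nonlinear least squares method is to find a set of parameters x that minimizes the sum of squares of the residual functions, which can be expressed as the following formula

$$\min_x \; \frac{1}{2}\sum_{i} \rho\left(f_i(x)^2\right)$$

where ρ represents the loss function. It is applied to each squared residual $f_i(x)^2$ and can be understood as a preprocessing of $f_i(x)$, for example to reduce the influence of outliers.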
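The role of the loss function can be illustrated with a small sketch. It fits a straight line to made-up data containing three injected outliers, once with the default loss and once with loss='soft_l1'. The data, the f_scale value of 0.1 and all variable names are assumptions chosen for illustration.

```python
import numpy as np
from scipy.optimize import least_squares

t_line = np.linspace(0, 10, 21)
y_line = 2.0 * t_line + 1.0                   # exact line: slope 2, intercept 1
y_line[[3, 10, 17]] += [20.0, 25.0, 15.0]     # inject three large outliers

def line_residuals(p):
    # residuals f_i(p) of the model p[0] * t + p[1]
    return p[0] * t_line + p[1] - y_line

fit_plain = least_squares(line_residuals, x0=[1.0, 0.0])
fit_robust = least_squares(line_residuals, x0=[1.0, 0.0],
                           loss='soft_l1', f_scale=0.1)

print(fit_plain.x)    # pulled away from (2, 1) by the outliers
print(fit_robust.x)   # stays much closer to (2, 1)
```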

scipy.optimize encapsulates the nonlinear least squares function least_squares, which is defined as

least_squares(fun, x0, jac, bounds, method, ftol, xtol, gtol, x_scale, f_scale, loss, jac_sparsity, max_nfev, verbose, args, kwargs)

Among them, fun and x0 are required parameters: fun is the function that computes the residuals, and x0 is the initial value of the function input. Neither has a default value, so both must be supplied.

bounds is the solution interval; the default is (−∞,∞). When verbose is 1, a termination report is printed; when verbose is 2, more information is printed during the run. In addition, the following parameters control the tolerances and scaling, and are relatively simple.
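A minimal sketch of a call with the required arguments plus bounds and verbose is shown here. The exponential-decay model, the noise-free data and the bound values are hypothetical and only serve as an example.

```python
import numpy as np
from scipy.optimize import least_squares

def decay_residuals(p, t, y):
    # residuals of the model amp * exp(-rate * t)
    amp, rate = p
    return amp * np.exp(-rate * t) - y

t_data = np.linspace(0, 4, 30)
y_data = 2.5 * np.exp(-1.3 * t_data)   # noise-free data generated with amp=2.5, rate=1.3

res = least_squares(decay_residuals, x0=[1.0, 1.0],
                    bounds=([0.0, 0.0], [10.0, 10.0]),   # lower and upper bounds per parameter
                    args=(t_data, y_data),               # extra arguments passed on to fun
                    verbose=1)                           # print a termination report
print(res.x, res.success)
```

The result object also carries fields such as res.cost and res.nfev, which can help when judging how the solver terminated.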

| Parameter | Default | Remarks |
|---|---|---|
| ftol | $10^{-8}$ | Function tolerance |
| xtol | $10^{-8}$ | Independent variable tolerance |
| gtol | $10^{-8}$ | Gradient tolerance |
| x_scale | 1.0 | Characteristic scale of the variable |
| f_scale | 1.0 | Residual margin value |
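As an illustration of these parameters, the sketch below tightens the three tolerances on the Rosenbrock function written in residual form. The starting point and the tolerance value 1e-10 are arbitrary choices for the example.

```python
import numpy as np
from scipy.optimize import least_squares

def rosenbrock_residuals(x):
    # Rosenbrock function written as two residuals; the minimum is at (1, 1)
    return np.array([10.0 * (x[1] - x[0] ** 2), 1.0 - x[0]])

res_tol = least_squares(rosenbrock_residuals, x0=[2.0, 2.0],
                        ftol=1e-10, xtol=1e-10, gtol=1e-10,   # tighter than the defaults
                        x_scale=1.0)
print(res_tol.x)        # estimated minimizer
print(res_tol.status)   # code telling which termination condition was met
print(res_tol.nfev)     # number of function evaluations
```

f_scale is left out here because it only has an effect when a loss other than the default 'linear' is selected.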
