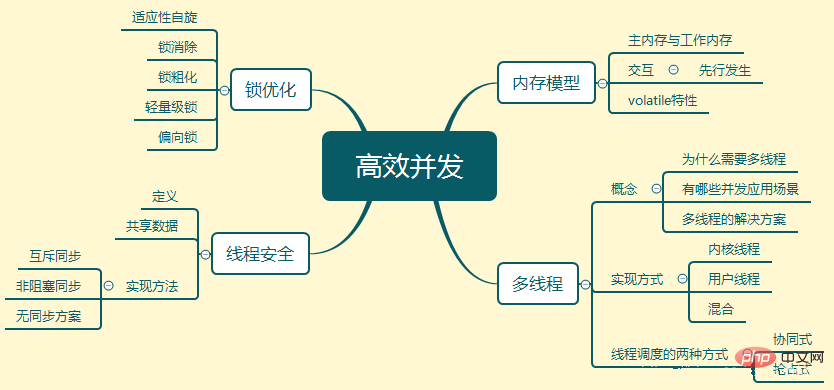

Memory model

A memory model is an abstraction of the process of reading from and writing to a particular memory or cache under a particular operating protocol. Its main goal is to define the access rules for the variables in a program.

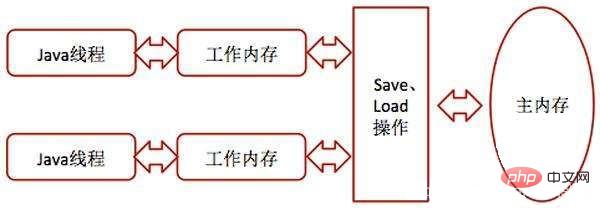

Main memory and working memory

All variables are stored in main memory. Each thread also has its own working memory, which holds copies of the main-memory variables that the thread uses. All of a thread's operations on a variable, such as reads and assignments, must be performed in working memory; a thread cannot read or write variables in main memory directly.

Inter-memory interaction operations

Copy from main memory to working memory: perform read and load operations sequentially.

Synchronize from working memory back to main memory: perform store and write operations sequentially.

Characteristics of volatile

volatile is a more lightweight synchronization mechanism than synchronized, although it is not a full replacement because it does not provide mutual exclusion. Its main features are as follows:

Ensure the visibility of this variable to all threads

What does it mean? It means that when a thread modifies the value of this variable, the new value is immediately known to other threads. Ordinary variables cannot do this. The transfer of an ordinary variable's value between threads has to go through main memory. For example, thread A modifies the value of an ordinary variable and then writes it back to main memory. The new value becomes visible to thread B only when B reads from main memory after A has completed the write-back.

Disable instruction reordering optimization

This matters because instruction reordering can interfere with the concurrent execution of a program.
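The visibility guarantee can be illustrated with a stop flag. The following is a minimal sketch, not code from the original article: the class name `VolatileFlagDemo`, the field `stopRequested`, and the timing values are all assumptions chosen for illustration. A worker thread spins until another thread sets a volatile flag.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical demo class; the names are illustrative only.
final class VolatileFlagDemo {
    // Without volatile, the worker is not guaranteed to ever observe the update.
    private static volatile boolean stopRequested = false;

    /** Starts a spinning worker, sets the flag, and reports whether the worker stopped. */
    static boolean runDemo() {
        stopRequested = false;
        Thread worker = new Thread(() -> {
            while (!stopRequested) {
                Thread.onSpinWait(); // busy-wait hint, available since Java 9
            }
        });
        worker.setDaemon(true); // never keep the JVM alive because of this demo
        worker.start();
        try {
            TimeUnit.MILLISECONDS.sleep(50); // arbitrary delay before requesting the stop
            stopRequested = true;            // volatile write, visible to the worker
            worker.join(2000);               // wait up to two seconds for the worker to exit
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return !worker.isAlive();
    }

    public static void main(String[] args) {
        System.out.println("worker stopped: " + runDemo());
    }
}
```

Note that volatile only makes the flag visible; it would not make a compound update such as a counter increment atomic.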

Multithreading

Why do you need multithreading?

The gap between the computing speed of the computer and the speed of its storage and communication subsystem is too large. A lot of time is spent on disk I/O, network communication, and database access. Using multi-threading can better utilize the CPU.

What are the concurrent application scenarios?

Make full use of your computer processor

A server provides services to multiple clients at the same time

How can the processor's internal computing units be fully utilized?

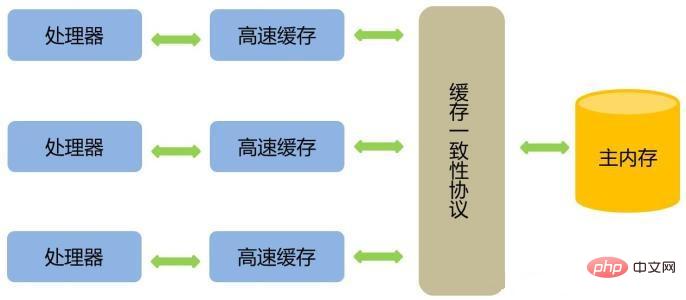

Add a layer of cache

Copy the data needed for an operation into the cache so that the operation can run quickly. When the operation is completed, the result is synchronized from the cache back to memory, so the processor does not have to wait for slow memory reads and writes. However, this raises a problem: how to ensure cache coherence.

Optimize the input code with out-of-order execution

Thread implementation

Implementation using kernel threads

Kernel threads are threads directly supported by the operating system kernel.

Implementation using user threads

The creation, synchronization, destruction and scheduling of user threads are done entirely in user mode without the help of the kernel, and the kernel is unaware that the threads exist. This implementation is rarely used.

Hybrid implementation using user threads plus lightweight processes

User threads and lightweight processes are used together.

Thread scheduling

Thread scheduling refers to the process by which the system allocates processor usage rights to threads. There are two main types: cooperative and preemptive.

Cooperative

The execution time of the thread is controlled by the thread itself. After the thread finishes executing its work, it will actively notify the system to switch to another thread.

The advantage is that it is simple to implement and there is no thread synchronization problem. The disadvantage is that if a thread is programmed incorrectly and never tells the system to switch threads, the program will stay blocked there, which can easily bring the whole system down.

Preemptive

Each thread is allocated execution time by the system, and a thread does not decide for itself when it is switched out. This is the thread scheduling method used by Java.

Thread safety

When multiple threads access an object, if calling the object produces correct results without the caller having to consider how those threads are scheduled and interleaved in the runtime environment, and without any additional synchronization or other coordination on the caller's side, then the object is thread-safe.

Classification of shared data

Immutable

Immutable shared data, such as data declared final, is always thread-safe. If the shared data is a primitive-type variable, it is enough to use the final keyword when defining it.

If the shared data is an object, you must ensure that the object's behavior never changes its state. The simplest way is to declare all of the object's state-carrying fields as final. For example, the String class is an immutable class.
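As a minimal sketch of the idea above, the following class keeps all of its state in final fields and never changes it after construction. `ImmutablePoint` is a hypothetical class invented for this illustration; it is not from the article or the JDK.

```java
// Hypothetical immutable value class, for illustration only.
final class ImmutablePoint {
    private final int x;
    private final int y;

    ImmutablePoint(int x, int y) {
        this.x = x;
        this.y = y;
    }

    int x() { return x; }

    int y() { return y; }

    // A "modifying" operation returns a new object and leaves this one unchanged.
    ImmutablePoint translate(int dx, int dy) {
        return new ImmutablePoint(x + dx, y + dy);
    }
}
```

Because no method can change an existing instance, any number of threads can share one without synchronization.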

Absolutely thread-safe

Most classes in the Java API that describe themselves as thread-safe are not absolutely thread-safe. For example, Vector is a thread-safe collection and all of its methods are declared synchronized, yet in a multi-threaded environment some sequences of calls on it still require additional synchronization by the caller.

Relative thread safety

Relative thread safety is what we usually call thread safety. It can only guarantee that individual operations on this object are thread-safe. However, for some specific sequences of consecutive calls, it may be necessary to use additional synchronization means on the calling side to ensure the correctness of the calls.

Most thread-safe classes belong to this type.
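The following sketch shows what caller-side synchronization can look like for a check-then-act sequence on a Vector. The class `VectorCompoundOps` and its methods are hypothetical helpers written for this illustration. Each Vector method is synchronized on the Vector itself, so locking the same object around the whole sequence makes the pair of calls atomic.

```java
import java.util.Vector;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical helper class, for illustration only.
final class VectorCompoundOps {
    /** Removes and returns the last element, or null if the vector is empty. */
    static Integer removeLast(Vector<Integer> vector) {
        // isEmpty(), size() and remove() are individually thread-safe, but another
        // thread could empty the vector between them unless we hold its lock.
        synchronized (vector) {
            if (vector.isEmpty()) {
                return null;
            }
            return vector.remove(vector.size() - 1);
        }
    }

    /** Drains a vector from several threads and returns how many elements were removed. */
    static int drainConcurrently(int elements, int threads) {
        Vector<Integer> vector = new Vector<>();
        for (int i = 0; i < elements; i++) {
            vector.add(i);
        }
        AtomicInteger removed = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                while (removeLast(vector) != null) {
                    removed.incrementAndGet();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return removed.get();
    }
}
```

Without the synchronized block, two threads could both pass the emptiness check and one of them could then fail with an out-of-bounds exception.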

Thread compatible

The object itself is not thread-safe, but it can be used safely in a concurrent environment if the caller uses synchronization correctly. Most classes that are not thread-safe fall into this category.

Thread hostile

Thread-hostile code cannot be used concurrently in a multi-threaded environment no matter what synchronization the caller applies. Examples are System.setIn() and System.setOut(): one replaces the standard input stream and the other the standard output stream, and the two cannot be performed "alternately".

Implementation method

Method 1: Mutually exclusive synchronization - pessimistic concurrency strategy
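In Java, the most basic means of mutually exclusive synchronization is the synchronized keyword, which lets only one thread at a time execute code guarded by the same lock. The sketch below is an illustration under that assumption; `SynchronizedCounter` and its methods are hypothetical names, not code from the article.

```java
// Hypothetical counter protected by mutual exclusion, for illustration only.
final class SynchronizedCounter {
    private int count = 0;

    // Only one thread at a time can run a synchronized method on this object.
    synchronized void increment() {
        count++;
    }

    synchronized int get() {
        return count;
    }

    /** Increments one shared counter from several threads and returns the final value. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        SynchronizedCounter counter = new SynchronizedCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }
}
```

The strategy is called pessimistic because the lock is taken on every call, whether or not another thread is actually competing for the counter.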

Method 2: Non-blocking synchronization - Optimistic concurrency strategy

Perform the operation first. If no other thread is competing for the shared data, the operation succeeds; if there is contention for the shared data and a conflict occurs, a compensating measure is taken, most commonly retrying until the operation succeeds.
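A compare-and-swap (CAS) retry loop is a common form of this optimistic strategy. The sketch below uses `AtomicInteger.compareAndSet` from the JDK; the wrapper class `CasCounter` and its methods are hypothetical and exist only for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical lock-free counter, for illustration only.
final class CasCounter {
    private final AtomicInteger value = new AtomicInteger();

    /** Adds one without locking and returns the new value. */
    int increment() {
        while (true) {
            int current = value.get();
            int next = current + 1;
            // Succeeds only if no other thread changed the value since we read it.
            if (value.compareAndSet(current, next)) {
                return next;
            }
            // Conflict detected: the compensating measure here is simply to retry.
        }
    }

    int get() {
        return value.get();
    }

    /** Increments one shared counter from several threads and returns the final value. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        CasCounter counter = new CasCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return counter.get();
    }
}
```

In practice `AtomicInteger.incrementAndGet()` does the same job; the explicit loop is written out only to show the try, detect conflict, retry pattern.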

Method 3: No synchronization scheme

If a method does not involve shared data, it needs no synchronization measures at all. Examples are reentrant code and thread-local storage.

(1) Reentrant code

If a method's return result is predictable, so that it returns the same result whenever it is given the same input, then it meets the reentrancy requirement.
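As a small illustration, the method below depends only on its parameter and local variables, so it touches no shared state and always returns the same result for the same input. `ReentrantExample.sumOfSquares` is a hypothetical name chosen for this sketch.

```java
// Hypothetical example of reentrant code, for illustration only.
final class ReentrantExample {
    /** Returns 1*1 + 2*2 + ... + n*n using only parameters and local variables. */
    static long sumOfSquares(int n) {
        long sum = 0; // local variable: each call, on any thread, has its own copy
        for (int i = 1; i <= n; i++) {
            sum += (long) i * i;
        }
        return sum;
    }
}
```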

(2) Thread local storage

If the data required by a piece of code must be shared with other code, check whether the code that shares the data is guaranteed to execute in the same thread. If so, the visibility of the shared data can be limited to that one thread, and no synchronization is needed to prevent data contention between threads.
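In Java this is typically done with `java.lang.ThreadLocal`. The sketch below is a minimal illustration; the class `ThreadLocalDemo`, the field `BUFFER` and the method names are assumptions made for this example. Each thread gets its own StringBuilder, so the two threads never see each other's data.

```java
// Hypothetical demo class, for illustration only.
final class ThreadLocalDemo {
    // Every thread that calls BUFFER.get() receives its own StringBuilder instance.
    private static final ThreadLocal<StringBuilder> BUFFER =
            ThreadLocal.withInitial(StringBuilder::new);

    /** Appends to the calling thread's private buffer and returns its contents. */
    static String appendAndGet(String text) {
        StringBuilder sb = BUFFER.get();
        sb.append(text);
        return sb.toString();
    }

    /** Runs two threads that use the same ThreadLocal and returns what each one saw. */
    static String[] runDemo() {
        String[] results = new String[2];
        Thread a = new Thread(() -> {
            appendAndGet("a");
            results[0] = appendAndGet("a");
        });
        Thread b = new Thread(() -> results[1] = appendAndGet("b"));
        a.start();
        b.start();
        try {
            a.join(); // join() makes the workers' writes to results visible here
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return results;
    }
}
```

No lock is needed around the StringBuilder even though it is not thread-safe, because only one thread can ever reach each instance.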

Lock optimization

Adaptive spin

Blocking or waking up a Java thread requires the operating system to switch CPU state, and this state transition costs processor time. If the code in a synchronized block is very simple, the state transition may well take longer than the user code itself.

In order to solve this problem, we can let the subsequent thread requesting the lock "wait a moment", execute a busy loop, and spin. No processor execution time is given up at this time. If the spin exceeds the limited number of times and the lock is still not successfully obtained, the traditional method will be used to suspend the thread.
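The JVM does this internally for synchronized blocks, so application code normally does not write it by hand. Purely as a user-level analogy, the sketch below tries a lock a bounded number of times in a busy loop and only then falls back to blocking. The class `SpinThenBlock`, the constant `MAX_SPINS` and its value are assumptions for illustration and do not reflect how any particular JVM is implemented.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical user-level analogy of "spin first, then block"; not the JVM's own code.
final class SpinThenBlock {
    private static final int MAX_SPINS = 100; // arbitrary limit chosen for this sketch
    private final ReentrantLock lock = new ReentrantLock();
    private int counter = 0;

    private void acquire() {
        for (int i = 0; i < MAX_SPINS; i++) {
            if (lock.tryLock()) {
                return;          // got the lock while spinning
            }
            Thread.onSpinWait(); // busy-wait hint, available since Java 9
        }
        lock.lock();             // spin limit exceeded: block in the traditional way
    }

    void increment() {
        acquire();
        try {
            counter++;
        } finally {
            lock.unlock();
        }
    }

    /** Increments one shared counter from several threads and returns the final value. */
    static int countWithThreads(int threads, int incrementsPerThread) {
        SpinThenBlock shared = new SpinThenBlock();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    shared.increment();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread worker : workers) {
                worker.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return shared.counter; // safe to read: all workers have been joined
    }
}
```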

So what is adaptive spin?

On the same lock object, if a spin wait has just succeeded in obtaining the lock, the virtual machine assumes that spinning is likely to succeed again and allows the spin wait to last relatively longer. Conversely, if spinning rarely succeeds in acquiring a lock, the spin may be skipped altogether.

Lock elimination

Lock elimination means that, at run time, the virtual machine's just-in-time compiler removes locks from code that requests synchronization but is detected as having no possible contention over shared data.
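A typical candidate is a synchronized object that never leaves the method that created it. In the sketch below, `StringBuffer.append` is a synchronized method, but the buffer is a local variable that no other thread can reach, so a JIT compiler with escape analysis may remove the locking. Whether a given JVM actually does so is not guaranteed; the class and method names are hypothetical.

```java
// Hypothetical example of code whose locks are candidates for elimination.
final class LockEliminationExample {
    static String concat(String a, String b, String c) {
        // sb never escapes this method, so its internal locks can never be contended.
        StringBuffer sb = new StringBuffer();
        sb.append(a); // each append() is synchronized on sb
        sb.append(b);
        sb.append(c);
        return sb.toString();
    }
}
```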

Lock coarsening

If a series of consecutive operations repeatedly locks and unlocks the same object, or the locking even happens inside a loop body, then frequent mutual exclusion synchronization causes unnecessary performance loss even when no threads are competing.

If the virtual machine detects that a series of fragmented operations all lock the same object, it will coarsen the scope of lock synchronization to the outside of the entire operation sequence, so that it only needs to lock once.
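The effect can be pictured by writing both forms by hand. In this sketch, `addFineGrained` locks on every iteration, while `addCoarsened` shows the shape the code would have after the lock scope is widened to cover the whole sequence. The class and method names are hypothetical, and whether a particular JVM applies the transformation to a given loop is an assumption, not a guarantee.

```java
// Hypothetical example contrasting fine-grained and coarsened locking.
final class LockCoarseningExample {
    private final Object lock = new Object();
    private int total = 0;

    // As written by the programmer: lock and unlock once per iteration.
    void addFineGrained(int n) {
        for (int i = 0; i < n; i++) {
            synchronized (lock) {
                total += i;
            }
        }
    }

    // Coarsened by hand: a single lock acquisition around the whole loop.
    void addCoarsened(int n) {
        synchronized (lock) {
            for (int i = 0; i < n; i++) {
                total += i;
            }
        }
    }

    int total() {
        synchronized (lock) {
            return total;
        }
    }
}
```

Both methods compute the same result; the coarsened form simply pays the locking cost once instead of n times.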

Lightweight lock

When there is no multi-thread contention, lightweight locks reduce the performance cost that traditional heavyweight locks incur by using operating system mutexes.

Applicable scenarios: No actual competition, multiple threads use locks alternately; short-term lock competition is allowed.

Biased lock

Biased locks reduce the performance cost of lightweight locks in the case where there is no contention and only one thread ever uses the lock. A lightweight lock requires at least one CAS each time the lock is acquired and released, whereas a biased lock requires only one CAS, during initialization.

Applicable scenarios: There is no actual competition, and only the first thread to apply for the lock will use the lock in the future.

