In recent years, autonomous driving has become increasingly popular due to its potential to reduce driver burden and improve driving safety. focus on. Vision-based three-dimensional occupancy prediction is an emerging perception task suitable for cost-effective and comprehensive investigation of autonomous driving safety. Although many studies have demonstrated the superiority of 3D occupancy prediction tools compared to object-centered perception tasks, there are still reviews dedicated to this rapidly developing field. This paper first introduces the background of vision-based 3D occupancy prediction and discusses the challenges encountered in this task. Next, we comprehensively discuss the current status and development trends of current 3D occupancy prediction methods from three aspects: feature enhancement, deployment friendliness, and labeling efficiency. Finally, current research trends are summarized and some encouraging future prospects are proposed.

Open source link: https://github.com/zya3d/Awesome-3D-Occupancy-Prediction

In summary, the main contributions of this article are as follows:

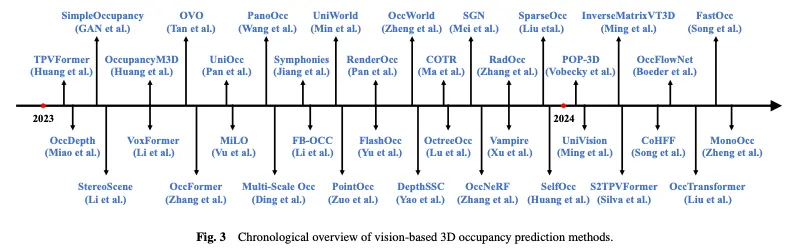

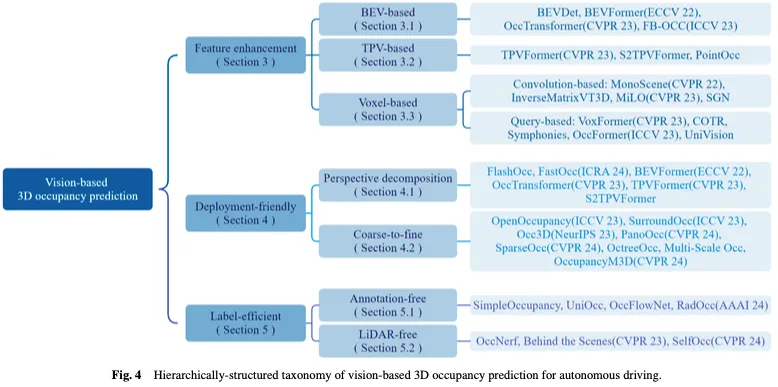

Figure 3 shows a temporal overview of vision-based 3D occupancy prediction methods, and Figure 4 shows the corresponding hierarchical structure taxonomy.

Generating GT labels is a challenge for 3D occupancy prediction. Although many 3D perception datasets, such as nuScenes and Waymo, provide lidar point cloud segmentation labels, these labels are sparse and difficult to supervise dense 3D occupancy prediction tasks. The importance of using dense occupancy as GT label has been demonstrated by Wei et al. Some recent research focuses on generating dense occupancy labels using sparse lidar point cloud segmentation annotations, providing some useful datasets and benchmarks for 3D occupancy prediction tasks.

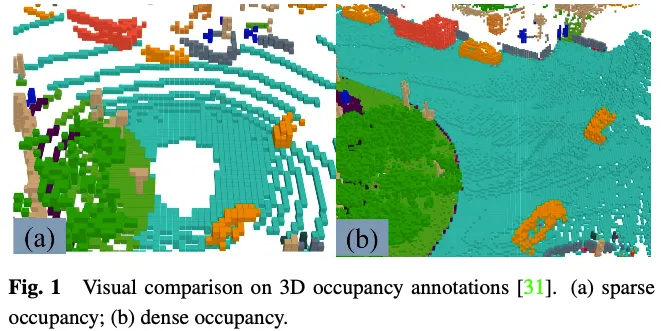

The GT label in the 3D occupancy prediction task indicates whether each element in the 3D space is occupied and the semantic label of the occupied element. Due to the large number of elements in three-dimensional space, it is difficult to label each element manually. A common approach is to voxelize the ground truth of existing 3D point cloud segmentation tasks and then generate GTs for 3D occupancy predictions through voting based on the semantic labels of voxel midpoints. However, the ground truth generated this way is actually simplified. As shown in Figure 1, there are still many occupied elements in places such as roads that are not marked as occupied. Supervisory tools that have models with this simplified terrain reality will result in degraded model performance. Therefore, some work how to automatically or semi-automatically generate high-quality dense 3D occupancy annotations.

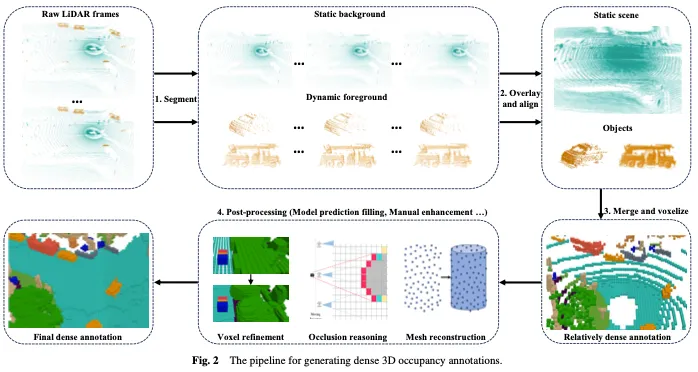

As shown in Figure 2, generating dense three-dimensional occupancy annotations usually includes the following four steps:

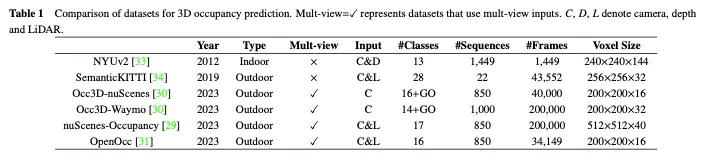

In this section, we introduce some open source, large-scale datasets commonly used for 3D occupancy prediction, which are given in Table 1 comparison between them.

The NUYv2 dataset consists of video sequences from various indoor scenes, captured by Microsoft Kinect's RGB and Depth cameras. It contains 1449 pairs of densely labeled aligned RGB and depth images, and 407024 unlabeled frames from 3 cities. Although mainly intended for indoor use and not suitable for autonomous driving scenarios, some studies have used this dataset for 3D occupancy prediction.

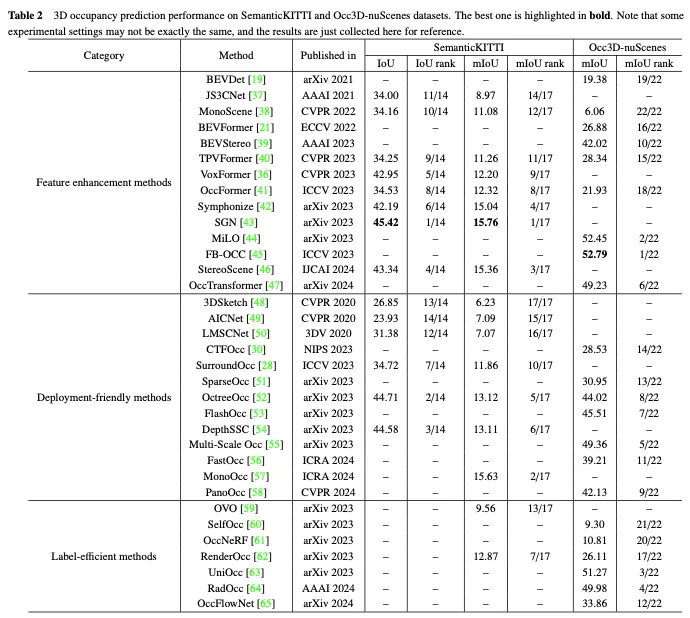

SemanticKITTI is a widely used dataset for 3D occupancy prediction, including 22 sequences and more than 43,000 frames from the KITTI dataset. It creates dense 3D occupancy annotations by overlaying future frames, segmenting voxels and assigning labels via point voting. Additionally, it traces rays to examine for each pose of the car which voxels are visible to the sensor, and ignores invisible voxels during training and evaluation. However, since it is based on the KITTI dataset, it only uses images from the front camera as input, while subsequent datasets usually use multi-view images. As shown in Table 2, we collected the evaluation results of existing methods on the SemanticKITTI dataset.

NuScenes occupancy is a 3D occupancy prediction data set based on NuScenes, a large-scale autonomous driving data set in outdoor environments. It contains 850 sequences, 200,000 frames and 17 semantic categories. The dataset is initially generated using an augmentation and purification (AAP) pipeline to generate coarse 3D occupancy labels, and then manual augmentation is used to refine the labels. Furthermore, it introduces OpenOccupancy, the first benchmark for ambient semantic occupancy awareness, to evaluate advanced 3D occupancy prediction methods.

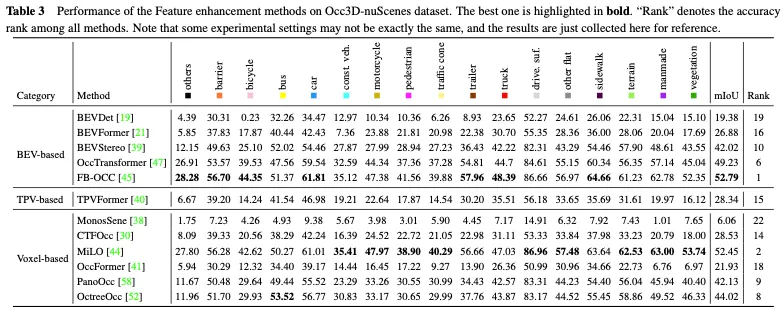

Subsequently, Tian et al. further constructed the Occ3D nuScenes and Occ3D Waymo data sets for 3D occupancy prediction based on the nuScenes and Waymo autonomous driving data sets. They introduce a semi-automatic label generation pipeline that leverages existing labeled 3D perception datasets and identifies voxel types based on their visibility. In addition, they established the Occ3d benchmark for large-scale 3D occupancy prediction to enhance the evaluation and comparison of different methods. As shown in Table 2, we collected the evaluation results of existing methods on the Occ3D nuScenes dataset.

Additionally, similar to Occ3D Nude and Nude Occupancy, OpenOcc is also a dataset built for 3D occupancy prediction based on the Nude dataset. It contains 850 sequences, 34149 frames and 16 classes. Note that this dataset provides additional annotations for eight foreground objects, which aids in downstream tasks such as motion planning.

Although vision-based 3D occupancy prediction has made significant progress in recent years, it still faces limitations from feature representation, practical application, and annotation costs. . For this task, there are three key challenges: (1) Obtaining perfect 3D features from 2D visual input is difficult. The goal of vision-based 3D occupancy prediction is to achieve detailed perception and understanding of 3D scenes from image input only. However, the lack of depth and geometric information inherent in images poses a significant challenge to learning 3D feature representations directly from them. (2) Heavy computational load in three-dimensional space. 3D occupancy prediction usually requires the use of 3D voxel features to represent the environment space, which inevitably involves operations such as 3D convolution for feature extraction, which greatly increases computational and memory overhead and hinders practical deployment. (3) Expensive fine-grained annotations. 3D occupancy prediction involves predicting the occupancy status and semantic category of high-resolution voxels, but achieving this often requires fine-grained semantic annotation of each voxel, which is time-consuming and expensive, creating a bottleneck for this task.

In response to these key challenges, the research work on vision-based three-dimensional occupancy prediction for autonomous driving has gradually formed three main lines: feature enhancement, deployment friendliness, and label efficiency. Feature enhancement methods alleviate the difference between 3D space output and 2D space input by optimizing the feature representation capabilities of the network. The deployment-friendly approach aims to significantly reduce resource consumption while ensuring performance by designing a simple and efficient network architecture. Efficient labeling methods are expected to achieve satisfactory performance even when annotations are insufficient or completely absent. Next, we provide a comprehensive overview of current approaches around these three branches.

The task of vision-based 3D occupancy prediction involves predicting the occupancy status and semantic information of the 3D voxel space from the 2D image space, which is useful for predicting the occupancy status and semantic information of the 3D voxel space from the 2D image space. Obtaining perfect 3D features for visual input poses key challenges. To address this problem, some methods improve occupancy prediction from the feature enhancement perspective, including learning from bird's-eye view (BEV), three-view view (TPV), and three-dimensional voxel representation.

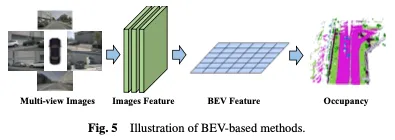

An effective method for learning occupancy is based on bird's-eye view (BEV), which provides features that are insensitive to occlusion and contains certain Depth geometry information. By learning strong BEV representation, robust 3D occupancy scene reconstruction can be achieved. First, a 2D backbone network is used to extract image features from visual input, then BEV features are obtained through viewpoint transformation, and finally 3D occupancy prediction is completed based on BEV feature representation. The BEV-based method is shown in Figure 5.

A straightforward approach is to leverage BEV learning from other tasks, such as using methods such as BEVDet and BEVFormer in 3D object detection. To extend these occupancy learning methods, occupancy heads can be added or replaced during training to obtain the final results. This adaptation allows the integration of occupancy estimation into existing BEV-based frameworks, enabling simultaneous detection and reconstruction of 3D occupancy in a scene. Based on the powerful baseline BEVFormer, OccTransformer adopts data augmentation to increase the diversity of training data to improve model generalization capabilities and leverage the powerful image backbone to extract more informative features from the input data. It also introduces a 3D Unet Head to better capture the spatial information of the scene, and additional loss functions to improve model optimization.

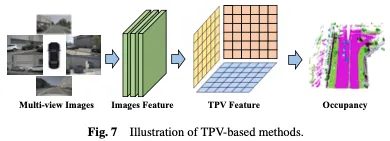

Although BEV-based representations have certain advantages compared to images because they inherently provide top-down projections of 3D space, but they inherently lack the ability to describe the fine-grained 3D structure of a scene using only a single plane. The method based on three perspective views (TPV) utilizes three orthogonal projection planes to model the 3D environment, which further enhances the representation ability of visual features for occupancy prediction. First, image features are extracted from visual input using a 2D backbone network. Subsequently, these image features are promoted to a three-view space, and finally 3D occupancy prediction is achieved based on the feature representation of three projection viewpoints. The BEV-based method is shown in Figure 7.

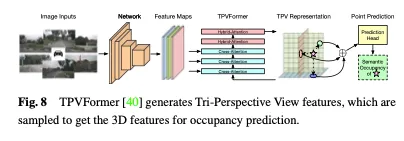

In addition to BEV features, TPVFormer generates features in front and side views in the same way. Each plane models the 3D environment from a different perspective, and their combination provides a comprehensive description of the entire 3D structure. Specifically, to obtain the features of a point in three-dimensional space, we first project it onto each of the three planes and use bilinear interpolation to obtain the features of each projected point. Then, we summarize the three projection features into synthetic features of 3D points. Therefore, TPV representation can describe 3D scenes at arbitrary resolutions and generate different features for different points in 3D space. It further proposes a transformer-based encoder (TPVFormer) to efficiently obtain TPV features from 2D images and perform image cross-attention between TPV grid queries and corresponding 2D image features, thus converting 2D information into Upgrade to 3D space. Finally, cross-view hybrid attention between TPV features enables interactions between the three planes. The overall architecture of TPVFormer is shown in Figure 8.

In addition to converting 3D space into a projected perspective (such as BEV or TPV), there are also Methods that operate directly on 3D voxel representations. A key advantage of these methods is the ability to learn directly from the original 3D space, minimizing information loss. By leveraging raw three-dimensional voxel data, these methods can effectively capture and utilize complete spatial information, resulting in a more accurate and comprehensive understanding of occupancy. First, a 2D backbone network is used to extract image features, and then, a specially designed convolution-based mechanism is used to bridge 2D and 3D representations, or a query-based approach is used to directly obtain the 3D representation. Finally, a 3D occupancy head is used to complete the final prediction based on the learned 3D representation. The voxel-based method is shown in Figure 9.

Convolution-based methods

One approach is to utilize specially designed convolutional architectures to bridge the gap from 2D to 3D and learn 3D occupancy representations. A prominent example of this approach is the adoption of the U-Net architecture as a carrier of feature bridging. The U-Net architecture adopts an encoder-decoder structure with skip connections between upsampling and downsampling paths, retaining low-level and high-level feature information to mitigate information loss. Through convolutional layers of different depths, the U-Net structure can extract features of different scales, helping the model capture local details and global context information in the image, thereby enhancing the model's understanding of complex scenes and performing effective occupancy prediction.

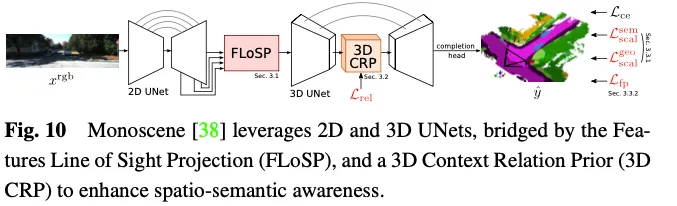

Monoscene utilizes U-net for vision-based 3D occupancy prediction. It introduces a mechanism called two-dimensional feature line-of-sight projection (FLoSP), which uses feature perspective projection to project two-dimensional features onto three-dimensional space, and calculates the three-dimensional feature space on two-dimensional features based on the imaging principle and camera parameters. The coordinates of each point to sample features in the three-dimensional feature space. This method promotes 2D features into a unified 3D feature map and serves as a key component connecting 2D and 3D U-net. Monoscene also proposes a 3D Contextual Relation Prior (3D CRP) layer inserted at the 3D UNet bottleneck, which learns an n-way voxel-to-voxel semantic scene relationship graph. This provides the network with a global receptive field and improves spatial semantic awareness due to the relationship discovery mechanism. The overall architecture of Monoscene is shown in Figure 10.

Query-based methods

Another way of learning from 3D space involves generating a set of Query to capture a representation of the scene. In this approach, query-based techniques are used to generate query suggestions, which are then used to learn comprehensive representations of 3D scenes. Subsequently, cross-attention and self-attention mechanisms on images are applied to refine and enhance the learned representations. This approach not only enhances scene understanding but also enables accurate reconstruction and occupancy prediction in 3D space. Furthermore, the query-based approach provides greater flexibility to adjust and optimize based on different data sources and query strategies, enabling better capture of local and global contextual information to facilitate 3D occupancy prediction representation.

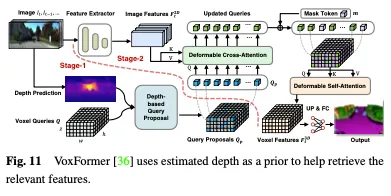

Depth can be used as a valuable prior for selecting occupied queries. In Voxformer, the estimated depth is used as a prior for predicting occupancy and selecting relevant queries. Only occupied queries are used to gather information from images using deformable attention. The updated query proposals and masked tokens are then combined to reconstruct voxel features. Voxformer extracts 2D features from RGB images and then utilizes a sparse set of 3D voxel queries to index these 2D features, using the camera projection matrix to link the 3D positions to the image stream. Specifically, voxel queries are learnable parameters of 3D mesh shapes designed to query features from images into 3D volumes using attention mechanisms. The entire framework is a two-stage cascade consisting of class-agnostic proposals and class-specific segmentation. Stage 1 generates class-agnostic query suggestions, while stage 2 adopts a MAE-like architecture to propagate information to all voxels. Finally, the voxel features are upsampled for semantic segmentation. The overall architecture of VoxFormer is shown in Figure 11.

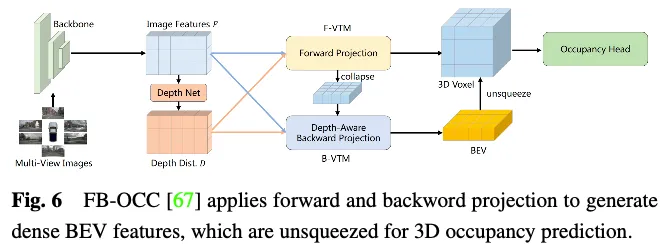

The performance comparison of feature enhancement methods on the Occ3D nuScenes dataset is shown in Table 3. The results show that methods that deal directly with voxel representations often achieve strong performance because they do not suffer significant information loss during computation. Furthermore, although BEV-based methods have only one projected viewpoint for feature representation, they can still achieve comparable performance due to the rich information contained in the bird's-eye view and their insensitivity to occlusion and scale changes. Furthermore, by reconstructing 3D information from multiple complementary views, three-perspective view (TPV) based methods are able to mitigate potential geometric ambiguities and capture a more comprehensive scene context, thereby enabling effective 3D occupancy prediction. Notably, FB-OCC utilizes both forward and backward view conversion modules, enabling them to enhance each other to obtain higher-quality pure electric vehicle representation and achieve excellent performance. This shows that BEV-based methods also have great potential in improving 3D occupancy prediction through effective feature enhancement.

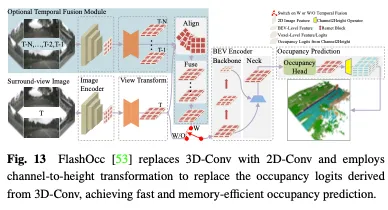

Learning occupancy representation directly from 3D space is extremely challenging due to its wide scope and complex data nature. The high dimensionality and intensive computation associated with 3D voxel representation make the learning process very resource demanding, which is not conducive to practical deployment applications. Therefore, methods for designing deployment-friendly 3D representations aim to reduce computational costs and improve learning efficiency. This section presents methods to address computational challenges in 3D scene occupancy estimation, focusing on developing accurate and efficient methods rather than directly processing the entire 3D space. The techniques discussed include perspective decomposition and coarse-to-fine refinement, which have been demonstrated in recent work to improve the computational efficiency of 3D occupancy predictions.

Computational complexity can be effectively reduced by separating viewpoint information from 3D scene features or projecting it into a unified representation space , making the model more robust and generalizable. The core idea of this method is to decouple the representation of the three-dimensional scene from the viewpoint information, thereby reducing the number of variables that need to be considered in the feature learning process and reducing the computational complexity. Decoupling viewpoint information enables the model to generalize better and adapt to different viewpoint transformations without having to relearn the entire model.

To address the computational burden of learning from the entire 3D space, a common approach is to use Bird’s Eye View (BEV) and Three View View (TPV) representations. By decomposing the 3D space into these individual view representations, the computational complexity is significantly reduced while still capturing essential information for occupancy prediction. The key idea is to first learn from the BEV and TPV perspectives and then recover the complete 3D occupancy information by combining the insights obtained from these different views. This perspective decomposition strategy allows for more efficient and effective occupancy estimation compared to learning directly from the entire 3D space.

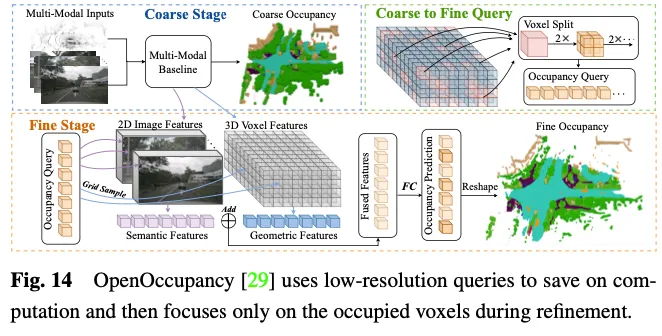

Learning high-resolution fine-grained global voxel features directly from large-scale 3D space is Time consuming and challenging. Therefore, some methods have begun to explore the coarse-to-fine feature learning paradigm. Specifically, the network initially learns a coarse representation from an image and then refines and recovers a fine-grained representation of the entire scene. This two-step process helps achieve more accurate and efficient predictions of scene occupancy.

OpenOccupancy adopts a two-step approach to learn occupancy representation in 3D space. As shown in Figure 14.

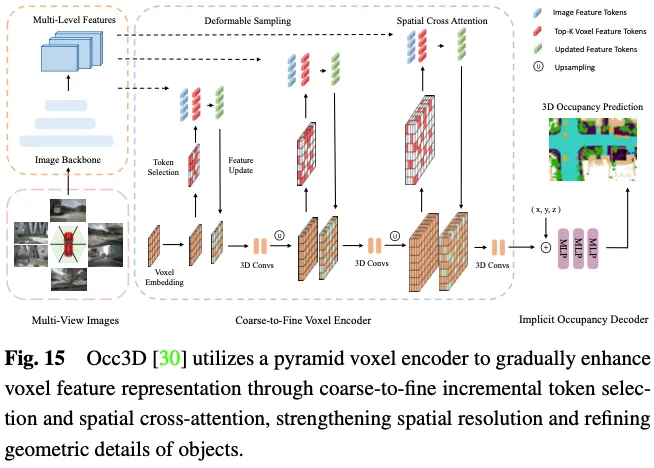

Predicting 3D occupancy requires a detailed geometric representation, and utilizing all 3D voxel markers to interact with ROIs in multi-view images will incur significant computational and memory costs. As shown in Figure 15, Occ3D proposes an incremental token selection strategy to selectively select foreground and uncertain voxel tokens during the cross-attention calculation process, thereby achieving adaptation without sacrificing accuracy. Efficient computing. Specifically, at the beginning of each pyramid layer, each voxel label is input into a binary classifier to predict whether the voxel is empty, supervised by binary ground-truth occupancy maps to train the classifier. PanoOcc proposes to seamlessly integrate object detection and semantic segmentation within a joint learning framework to promote a more comprehensive understanding of 3D environments. The method utilizes voxel queries to aggregate spatiotemporal information from multi-frame and multi-view images, merging feature learning and scene representation into a unified occupancy representation. In addition, it explores the sparsity of 3D space by introducing an occupancy sparsity module, which gradually sparses the occupancy during the upsampling process from coarse to fine, significantly improving storage efficiency.

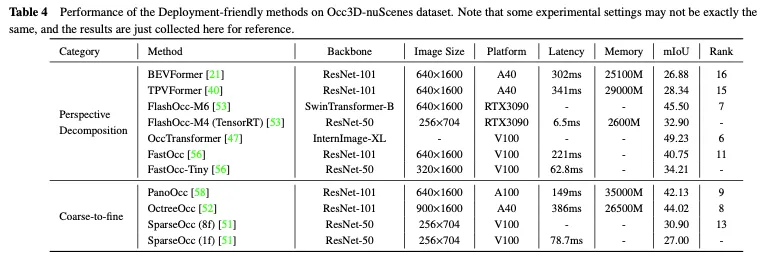

The performance comparison of deployment-friendly methods on the Occ3D nuScenes dataset is shown in Table 4. Since the results were collected from different papers with differences in backbone, image size and computing platform, only some preliminary conclusions can be drawn. Generally, under similar experimental settings, coarse-to-fine methods outperform perspective decomposition methods in terms of performance due to less information loss, while perspective decomposition usually exhibits better real-time performance and lower memory usage. Additionally, models with heavier backbones and processing larger images can achieve better accuracy but also impair real-time performance. Although lightweight versions of methods such as FlashOcc and FastOcc are close to the requirements for practical deployment, their accuracy needs to be further improved. For deployment-friendly methods, both the perspective decomposition strategy and the coarse-to-fine strategy strive to continuously reduce the computational load while maintaining the accuracy of 3D occupancy prediction.

Among the existing methods for creating accurate occupancy labels, there are two basic steps. The first is to collect lidar point clouds corresponding to multi-view images and annotate them for semantic segmentation. The other is to use the tracking information of dynamic objects to fuse multi-frame point clouds through complex algorithms. Both steps are quite expensive, which limits the ability of the occupancy network to exploit the large number of multi-view images in autonomous driving scenarios. In recent years, neural radiation fields (Nerf) have been widely used in two-dimensional image rendering. There are several methods that plot predicted 3D occupancy into 2D maps in a Nerf-like manner and train the occupancy network without the involvement of fine-grained annotations or lidar point clouds, which significantly reduces the cost of data annotation.

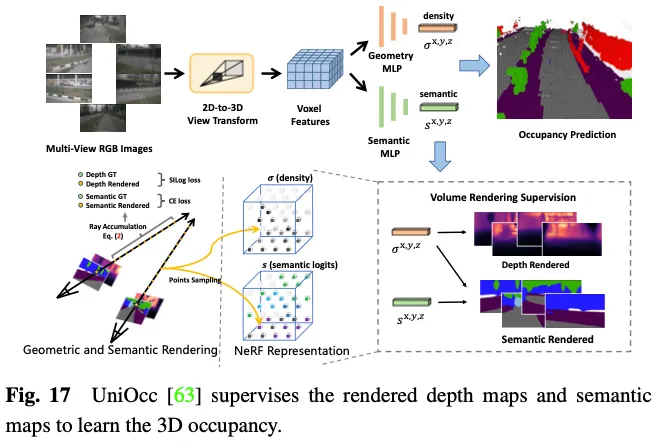

SimpleOccupancy first generates explicit 3D voxel features of the scene from image features via view transformations, then renders them in a Nerf-style manner is a 2D depth map. The 2D depth map is supervised by a sparse depth map generated from the lidar point cloud. Depth maps are also used to synthesize surround images for self-supervision. UniOcc uses two separate MLPs to convert 3D voxel logits into density of voxels and semantic logits of voxels. Afterwards, UniOCC follows general volume rendering to obtain multi-view depth maps and semantic maps, as shown in Figure 17. These 2D maps are supervised by labels generated from segmented LiDAR point clouds. RenderOcc builds NeRF-like 3D volumetric representations from multi-view images and generates 2D renderings using advanced volumetric rendering techniques that can provide direct 3D supervision using only 2D semantic and depth labels. With this 2D rendering supervision, the model learns multi-view consistency by analyzing intersections of rays from various camera frustums to gain a deeper understanding of geometric relationships in 3D space. Furthermore, it introduces the concept of auxiliary rays to utilize rays from adjacent frames to enhance the multi-view consistency constraint of the current frame, and develops a dynamic sampling training strategy to filter misaligned rays. To address the imbalance problem between dynamic and static categories, OccFlowNet further introduces occupancy flow to predict the scene flow for each dynamic voxel based on 3D bounding boxes. Using voxel streaming, dynamic voxels can be moved to the correct location in the time frame, eliminating the need for dynamic object filtering during rendering. During training, correctly predicted voxels and voxels within bounding boxes are transformed using flows to align with the target location in the time frame, followed by grid alignment using distance-based weighted interpolation.

The above approach eliminates the need for explicit 3D occupancy annotations, greatly reducing the burden of manual annotation. However, they still rely on lidar point clouds to provide depth or semantic labels to supervise the rendered maps, which cannot yet achieve a fully self-supervised framework for 3D occupancy prediction.

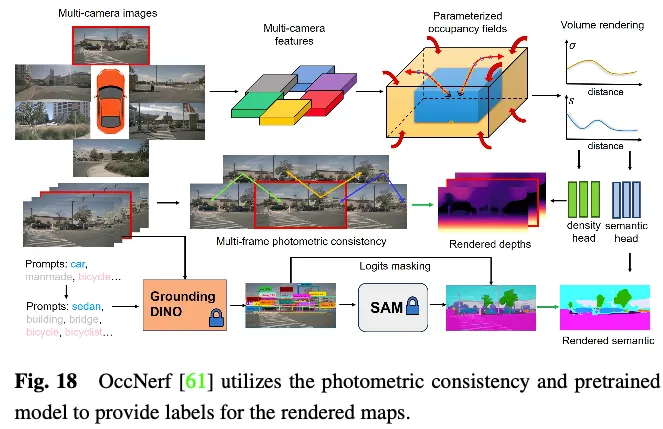

OccNerf does not utilize lidar point clouds to provide depth and semantic labels. Instead, as shown in Figure 18, it uses a parameterized occupancy field to handle boundless outdoor scenes, reorganizes the sampling strategy, and uses volume rendering to convert the occupancy field into a multi-camera depth map, which is finally supervised by multi-frame photometric consistency . Furthermore, the method leverages a pre-trained open vocabulary semantic segmentation model to generate 2D semantic labels, supervising the model to deliver semantic information to occupied fields. Behind the scenes a single view image sequence is used to reconstruct the driving scene. It treats the frustum features of the input image as a density field and renders a composite of the other views. The entire model is trained with a specially designed image reconstruction loss. SelfOcc predicts signed distance field values of BEV or TPV features to render 2D depth maps. Additionally, original color and semantic maps are also rendered and supervised by labels generated from multi-view image sequences.

#These methods sidestep the need for depth or semantic labels from lidar point clouds. Instead, they leverage image data or pre-trained models to obtain these labels, enabling a truly self-supervised framework for 3D occupancy prediction. Although these methods can achieve training patterns that are most consistent with practical application experience, further exploration is needed to obtain satisfactory performance.

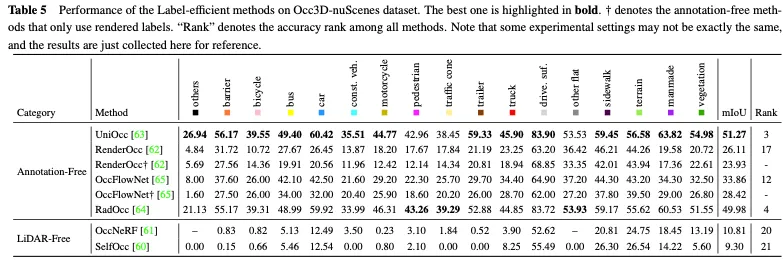

Table 5 shows the performance comparison of label-efficient methods on the Occ3D nuScenes dataset. Most annotation-free methods use 2D rendering supervision as a complement to explicit 3D occupancy supervision and obtain certain performance improvements. Among them, UniOcc and RadOcc even obtained excellent rankings of 3 and 4 respectively among all methods, which fully proves that the annotation-free mechanism can promote the extraction of additional valuable information. When employing only 2D rendering supervision, they can still achieve comparable accuracy, illustrating the feasibility of saving the cost of explicit 3D occupancy annotation. The lidar-free approach establishes a comprehensive self-supervised framework for 3D occupancy prediction, further eliminating the need for tags and lidar data. However, since the point cloud itself lacks precise depth and geometric information, its performance is greatly limited.

Motivated by the above methods, we summarize current trends and propose several important research directions that have the potential to benefit from data , methods and task perspectives significantly advance the field of vision-based 3D occupancy prediction for autonomous driving.

Obtaining sufficient real driving data is crucial to improving the overall capabilities of the autonomous driving perception system. Data generation is a promising approach as it does not incur any acquisition costs and provides the flexibility to manipulate data diversity as needed. Although some methods utilize cues such as text to control the content of generated driving data, they cannot guarantee the accuracy of spatial information. In contrast, 3D Occupancy provides a fine-grained and actionable representation of the scene, facilitating controllable data generation and spatial information display compared to point clouds, multi-view images, and BEV layouts. WoVoGen proposes volume-aware diffusion that can map 3D occupancy to realistic multi-view images. After modifications are made to the 3D occupancy, such as adding a tree or changing a car, the diffusion model will synthesize the corresponding new driving scene. The modified three-dimensional occupancy records three-dimensional position information, ensuring the authenticity of the synthetic data.

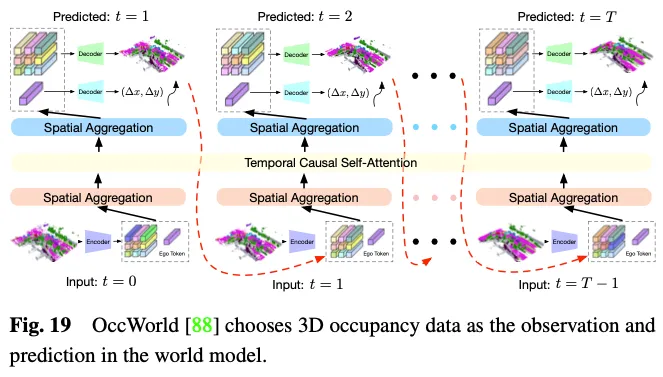

The world model of autonomous driving is becoming more and more prominent. It provides a simple and elegant framework that enhances the model to understand the entire scene based on environmental input observations and directly output appropriate The ability of dynamic scene evolution data. Leveraging 3D occupancy as an environmental observation in a world model has clear advantages given its ability to expertly represent entire driving scene data in detail. As shown in Figure 19, OccWorld selects 3D occupancy as the input of the world model and uses a GPT-like module to predict what future 3D occupancy data should look like. UniWorld leverages off-the-shelf BEV-based 3D occ-pancy models, but also builds a world model by processing past multi-view images to predict future 3D occupancy data. However, regardless of the mechanism, there is inevitably a domain gap between generated data and real data. To solve this problem, one feasible approach is to combine 3D occupancy prediction with the emerging 3D artificial intelligence generated content (3D AIGC) method to generate more realistic scene data, while another approach is to combine domain adaptation methods Combined to reduce field gaps.

When it comes to 3D occupancy prediction methods, there are ongoing challenges that require further attention within the categories we outlined previously: feature enhancement methods, Deployment-friendly methods and label-efficient methods. Feature enhancement methods need to be developed in the direction of significantly improving performance while maintaining controllable consumption of computing resources. A deployment-friendly approach should be kept in mind to reduce memory usage and latency while ensuring performance degradation is minimized. Label-efficient methods should be developed in the direction of reducing the need for expensive annotations while achieving satisfactory performance. The ultimate goal may be to achieve a unified framework that combines feature enhancements, deployment friendliness, and labeling efficiency to meet the expectations of real-world autonomous driving applications.

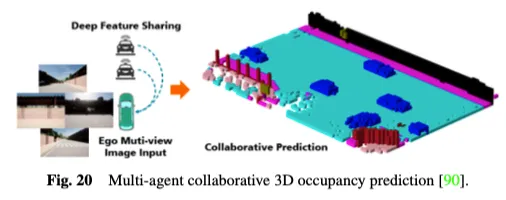

In addition, existing single-agent autonomous driving perception systems are inherently unable to solve key issues, such as sensitivity to occlusion, insufficient long-range sensing capabilities, and limited field of view, which make it challenging to achieve comprehensive environmental awareness. In order to overcome the bottleneck of single-agent, multi-agent collaborative sensing methods open up a new dimension, allowing vehicles to share complementary information with other traffic elements to obtain an overall perception of the surrounding environment. As shown in Figure 20, the multi-agent collaborative 3D occupancy prediction method utilizes the power of collaborative sensing and learning for 3D occupancy prediction. By sharing features among connected automated vehicles, it can gain a deeper understanding of the 3D road environment. CoHFF is the first vision-based collaborative semantic occupancy prediction framework, which improves local 3D semantic occupancy prediction through a hybrid fusion of semantic and occupancy task features, as well as compressed orthogonal attention features shared between vehicles, significantly improving performance. It is better than the bicycle system. However, this method often requires communicating with multiple agents simultaneously, facing a contradiction between accuracy and bandwidth. Therefore, it is an interesting research direction to determine which agents require coordination the most, and to identify the areas where collaboration is most valuable to achieve the best balance between accuracy and speed.

In current 3D occupancy benchmarks, some categories have clear semantics, such as "car", "pedestrian" and "truck". In contrast, the semantics of other categories such as “artificial” and “vegetation” tend to be vague and general. These categories contain broad undefined semantics and should be subdivided into more fine-grained categories to provide detailed descriptions of driving scenarios. Furthermore, for unknown categories that have never been seen before, they are often viewed as a general barrier to flexible expansion of new category perception based on human cues. For this problem, open vocabulary tasks show strong performance in 2D image perception and can be extended to improve 3D occupancy prediction tasks. OVO proposes a framework that supports open vocabulary 3D occupancy prediction. It utilizes frozen 2D segmenters and text encoders to obtain semantic references for open vocabularies. Then, three different levels of alignment are employed to extract the 3D occupancy model, enabling it to perform open word predictions. POP-3D designed a self-supervised framework that combines the three modalities with the help of powerful pre-trained visual language models. It facilitates open-lexicon tasks such as zero-shot occupancy segmentation and text-based 3D retrieval.

Perceiving dynamic changes in the surrounding environment is crucial for the safe and reliable execution of downstream tasks in autonomous driving. Although 3D occupancy predictions can provide dense occupancy representations of large-scale scenes based on current observations, they are mostly limited to representing the current 3D space and do not consider the future states of surrounding objects along the timeline. Recently, several methods have been proposed to further consider temporal information and introduce 4D occupancy prediction tasks, which are more practical in real autonomous driving scenarios. Cam4Occ establishes a new benchmark for 4D occupancy prediction using the widely used nuScenes dataset for the first time. The benchmark includes different metrics to evaluate occupancy predictions for General Movable Objects (GMO) and General Static Objects (GSO) respectively. Furthermore, it provides several baseline models to illustrate the construction of a 4D occupancy prediction framework. Although the open vocabulary 3D occupancy prediction task and the 4D occupancy prediction task aim to enhance the perception capabilities of autonomous driving in open dynamic environments from different perspectives, they are still considered as independent tasks for optimization. A modular task-based paradigm where multiple modules have inconsistent optimization goals can lead to information loss and accumulated errors. Combining open-set dynamic occupancy prediction with end-to-end autonomous driving tasks and directly mapping raw sensor data to control signals is a promising research direction.

The above is the detailed content of Take a look at the past and present of Occ and autonomous driving! The first review comprehensively summarizes the three major themes of feature enhancement/mass production deployment/efficient annotation.. For more information, please follow other related articles on the PHP Chinese website!