Deploying the Llama 3 open-source large model locally with Docker in three minutes

Overview

LLaMA-3 (Large Language Model Meta AI 3) is a large-scale, open-source generative AI model developed by Meta. Its model architecture is largely unchanged from the previous generation, LLaMA-2.

LLaMA-3 comes in several sizes (small, medium and large) to suit different application requirements and computing resources: the small model has 8B parameters, the medium model 70B, and the large model 400B. The large model is still in training; its goal is multimodal and multilingual capability, with results expected to be comparable to GPT-4/GPT-4V.
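Since the three sizes differ mainly in the resources they need, a back-of-the-envelope calculation helps: weight memory is roughly the parameter count times the bits per parameter, divided by 8 bytes. The sketch below assumes that weights dominate memory use (it ignores the KV cache, activations and runtime overhead) and compares 16-bit weights with 4-bit quantized weights, a precision commonly used for local inference. The helper name `weight_memory_gib` is hypothetical.

```python
# Back-of-the-envelope estimate of the memory needed to hold model weights.
# Assumption: weights dominate; KV cache, activations and runtime overhead are ignored.

BYTES_PER_GIB = 1024 ** 3


def weight_memory_gib(num_params: float, bits_per_param: float) -> float:
    """Approximate size of the weights in GiB: params * bits / 8 bytes."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / BYTES_PER_GIB


# Parameter counts come from the text above; the precisions are illustrative assumptions.
MODEL_SIZES = {"8B": 8e9, "70B": 70e9, "400B": 400e9}
PRECISIONS = {"16-bit": 16, "4-bit": 4}

if __name__ == "__main__":
    for label, params in MODEL_SIZES.items():
        cells = ", ".join(
            f"{name}: {weight_memory_gib(params, bits):.1f} GiB"
            for name, bits in PRECISIONS.items()
        )
        print(f"{label} -> {cells}")
```

For the 8B model this gives about 14.9 GiB at 16-bit and about 3.7 GiB at 4-bit, which suggests why a quantized 8B model is the usual choice for a local machine, while the 70B and 400B models need far more memory.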

Installing Ollama

Ollama is an open-source service tool for large language models (LLMs) that lets users run and deploy LLMs on their local machines. It is designed as a framework that simplifies deploying and managing large language models, and it can itself run in a Docker container, which makes setup quick and easy. With a few simple commands, users can run open-source LLMs such as Llama 3 locally.

Official website address: https://ollama.com/download

Ollama supports multiple platforms, including macOS and Linux, and provides Docker images to simplify installation. Users can import and customize additional models by writing a Modelfile, which plays a role similar to a Dockerfile. Ollama also provides a REST API for running and managing models, and a command-line tool for interacting with them.
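As a minimal sketch of the REST API, the Python code below sends a single non-streaming prompt to Ollama's `/api/generate` endpoint using only the standard library. It assumes Ollama is listening on its default address, `http://127.0.0.1:11434`; the function names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama listens on its default local address.
OLLAMA_URL = "http://127.0.0.1:11434"


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for the /api/generate endpoint."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the generated text from the "response" field."""
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the server running and the model already pulled, `generate("llama3:8b", "Why is the sky blue?")` returns the model's answer as a string. Setting `"stream": False` keeps the example simple: the server replies with one JSON object instead of a stream of partial chunks.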

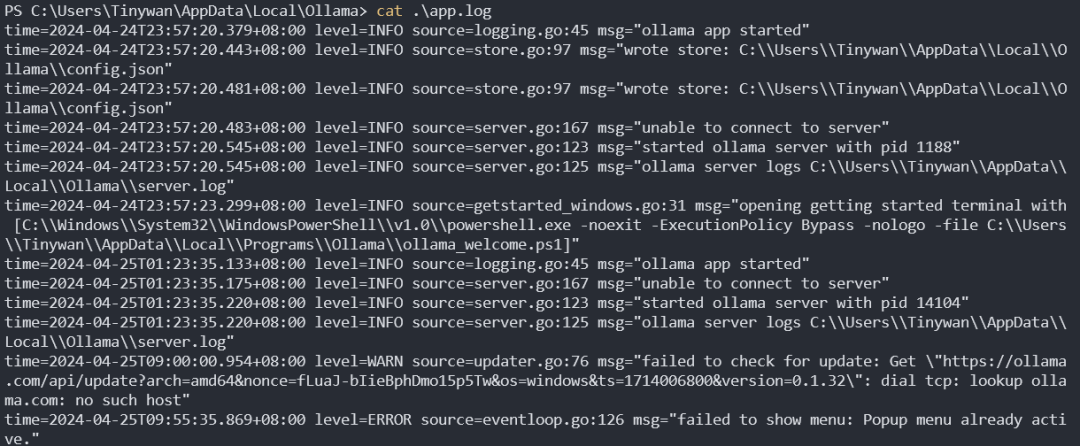

Ollama service startup log
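The startup log shows that the service is up. To run the same check from a script, a minimal sketch is to request the server root, which Ollama normally answers with the plain-text message "Ollama is running". The address `http://127.0.0.1:11434` (Ollama's default port) and the function name `ollama_is_running` are assumptions for illustration.

```python
import urllib.request

# Assumption: Ollama listens on its default local address.
DEFAULT_OLLAMA_URL = "http://127.0.0.1:11434"


def ollama_is_running(base_url: str = DEFAULT_OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if GET <base_url>/ answers with HTTP 200, False otherwise."""
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # urllib.error.URLError, connection refusals and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    print("Ollama is up" if ollama_is_running() else "Ollama is not reachable")
```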

Model management

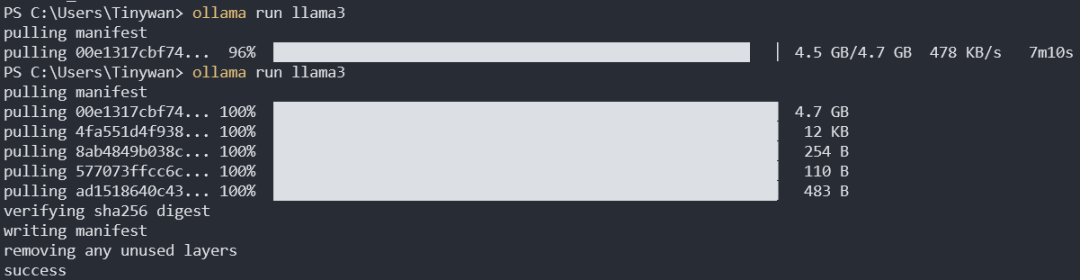

Download model

```shell
ollama pull llama3:8b
```

The default version downloaded is llama3:8b. In `llama3:8b`, the part before the colon is the model name and the part after the colon is the tag. You can view all tags of llama3 here:
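The name:tag rule can be written down as a tiny parser. This is an illustrative sketch, not Ollama's own code; the function name `split_model_ref` and the fallback tag `latest` (the Docker-style default) are assumptions.

```python
def split_model_ref(ref: str, default_tag: str = "latest") -> tuple[str, str]:
    """Split a model reference such as "llama3:8b" into (name, tag).

    Assumption: a missing tag means "latest", following the Docker-style convention.
    """
    # Only a colon after the last "/" starts the tag, so namespaced names stay intact.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        return ref[:colon], ref[colon + 1:]
    return ref, default_tag
```

For example, `split_model_ref("llama3:8b")` returns `("llama3", "8b")`, and `split_model_ref("llama3")` returns `("llama3", "latest")`.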

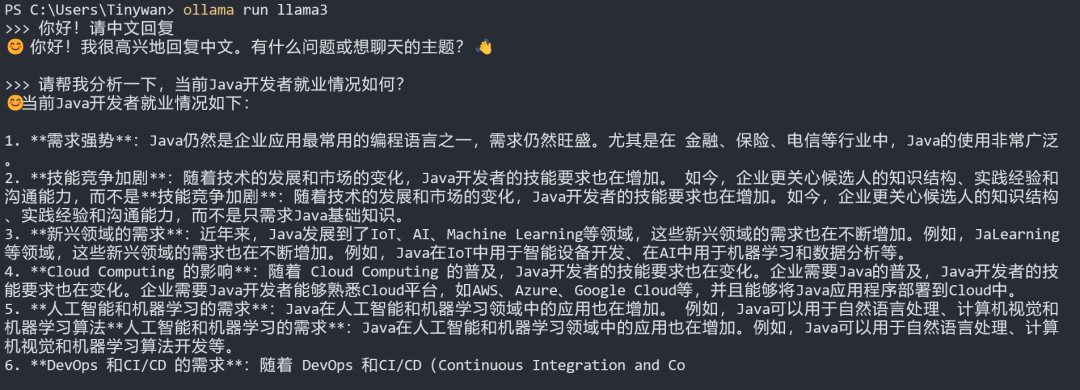

Model Test

Note: If you want the model to reply in Chinese, please enter: Hello! Please reply in Chinese
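Instead of repeating the request in every prompt, a system message can ask for Chinese replies. The sketch below builds a non-streaming request for Ollama's `/api/chat` endpoint. The default address `http://127.0.0.1:11434`, the function names and the wording of the system prompt are assumptions, and the model is not guaranteed to follow the instruction every time.

```python
import json
import urllib.request
from typing import Optional

OLLAMA_URL = "http://127.0.0.1:11434"  # assumption: default local Ollama address


def build_chat_request(model: str, user_message: str,
                       system_prompt: Optional[str] = None,
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for /api/chat with an optional system message."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_message})
    body = json.dumps(
        {"model": model, "messages": messages, "stream": False}, ensure_ascii=False
    ).encode("utf-8")
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, user_message: str, system_prompt: Optional[str] = None,
         timeout: float = 120.0) -> str:
    """Send the chat request and return the assistant's reply text."""
    req = build_chat_request(model, user_message, system_prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["message"]["content"]
```

With Ollama running, `chat("llama3:8b", "Hello!", system_prompt="Always reply in Chinese.")` sends both messages in one request.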

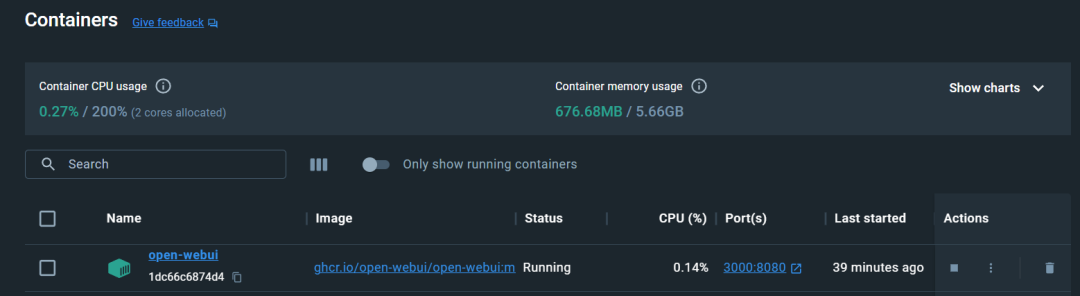

Configure Open-WebUI

Run on CPU

```shell
docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main
```
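To make the flags of the `docker run` command above easier to read and adjust (for example, if port 3000 is already taken on your machine), here is a sketch that rebuilds the same command as an argument list, with one comment per flag. The function name and its parameters are hypothetical; the defaults reproduce the original command exactly.

```python
import shlex


def open_webui_run_args(host_port: int = 3000, volume: str = "open-webui",
                        name: str = "open-webui",
                        image: str = "ghcr.io/open-webui/open-webui:main") -> list[str]:
    """Rebuild the CPU-only Open-WebUI `docker run` command as an argument list."""
    return [
        "docker", "run",
        "-d",                                  # run the container in the background
        "-p", f"{host_port}:8080",             # host port -> port 8080 inside the container
        # make host.docker.internal resolve to the host, so the container can reach Ollama there
        "--add-host=host.docker.internal:host-gateway",
        "-v", f"{volume}:/app/backend/data",   # named volume that keeps Open-WebUI data
        "--name", name,                        # container name used by later docker commands
        "--restart", "always",                 # restart policy: bring the container back up
        image,
    ]


if __name__ == "__main__":
    # Print the shell-quoted command; passing the list to subprocess.run(...) would execute it.
    print(shlex.join(open_webui_run_args()))
```

Building an argument list rather than a single string avoids shell quoting problems when values such as the volume name are changed.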

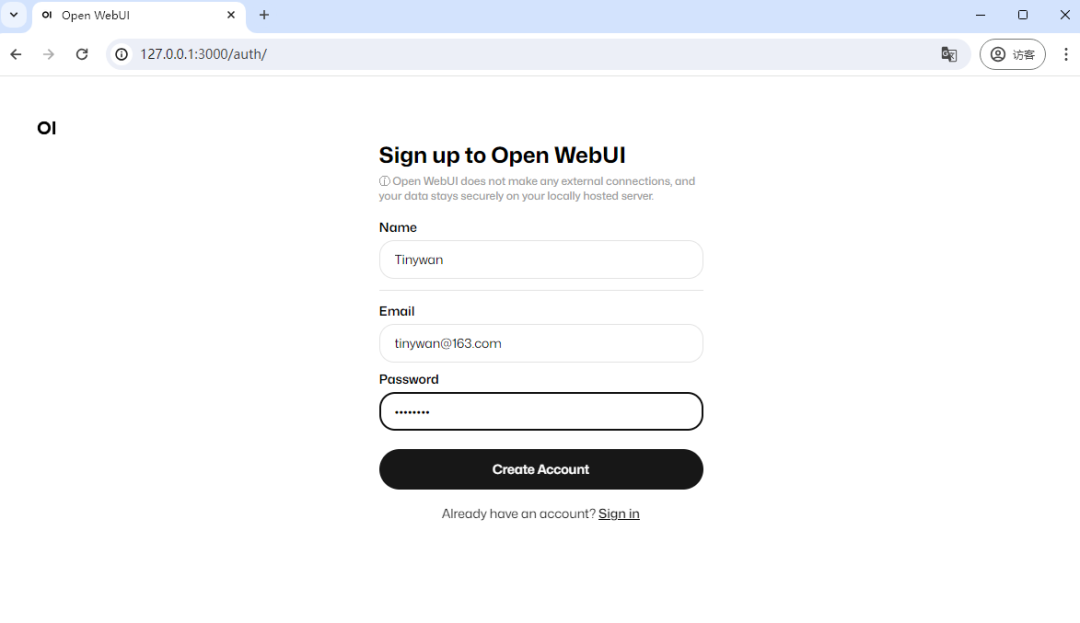

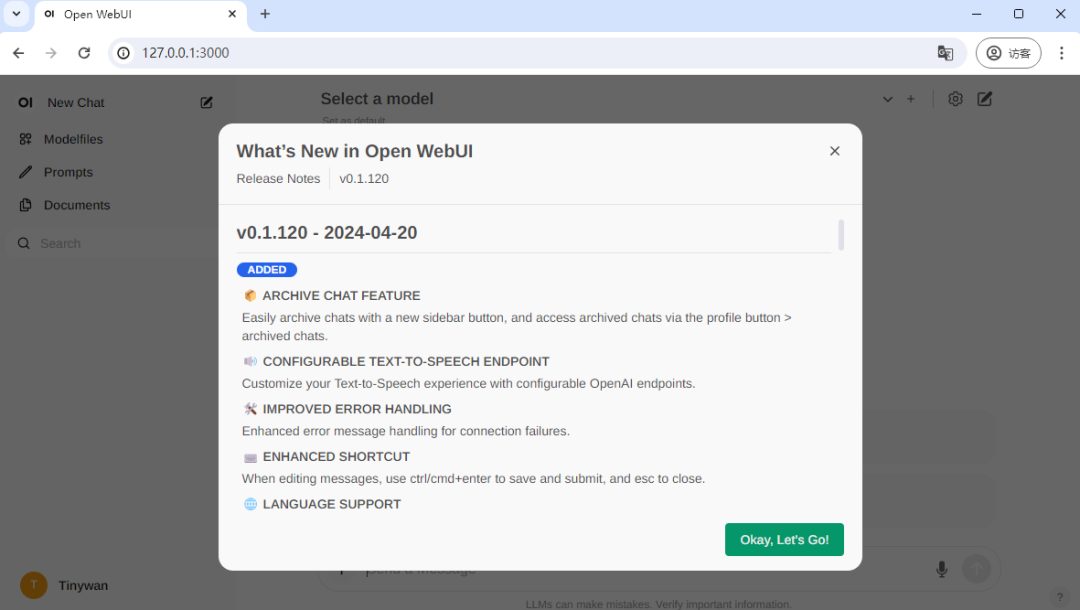

Access

Open http://127.0.0.1:3000 in a browser (the `-p 3000:8080` option maps host port 3000 to port 8080 inside the container).
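The container can take a little while to start after `docker run` returns. A minimal sketch, assuming the port mapping from the command above and a hypothetical helper named `wait_for_http`, polls the page until it responds, so you know when to open it in a browser.

```python
import time
import urllib.request


def wait_for_http(url: str, timeout_s: float = 60.0, interval_s: float = 2.0) -> bool:
    """Poll url until it answers with HTTP 200; give up after timeout_s seconds."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            pass  # not ready yet: connection refused, reset or timed out
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)
```

For this setup, `wait_for_http("http://127.0.0.1:3000")` returns `True` once Open-WebUI is serving pages, or `False` after about a minute.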

The first visit requires registration, so register an account. Once registration is complete, you are logged in.

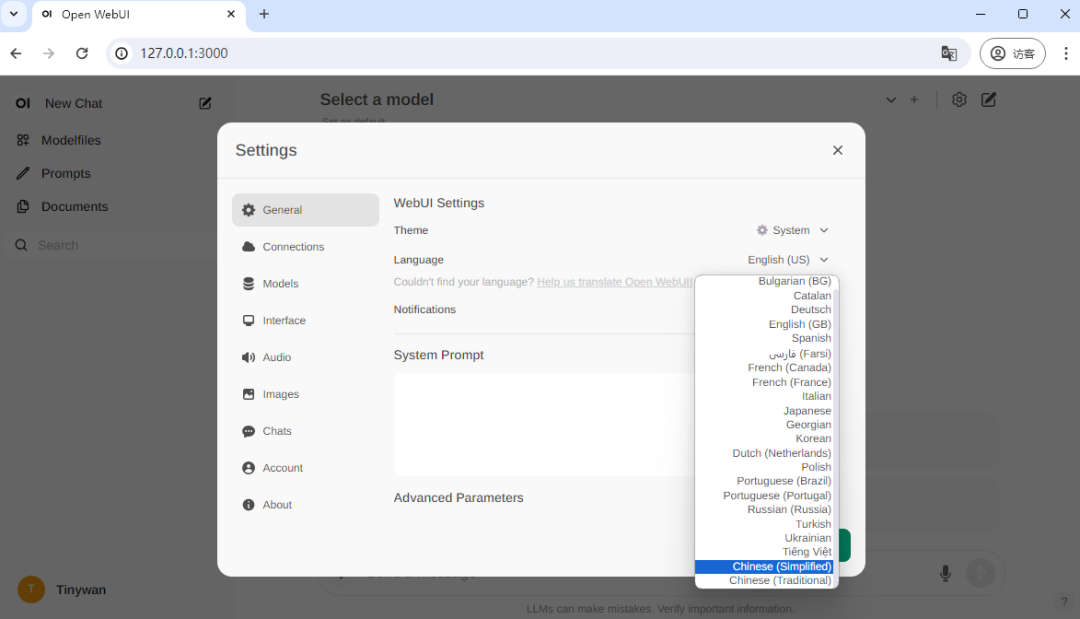

Switch the interface language to Chinese

Download the llama3:8b model

llama3:8b

Download completed
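To confirm the download outside the web interface, you can run `ollama list`, or query the server's `/api/tags` endpoint, which returns the locally available models. The sketch below assumes Open-WebUI is connected to an Ollama server at the default address `http://127.0.0.1:11434`, so models pulled through the web UI appear there; the function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # assumption: default local Ollama address


def extract_model_names(tags_response: dict) -> list[str]:
    """Pull the model names (e.g. "llama3:8b") out of an /api/tags JSON response."""
    return [model["name"] for model in tags_response.get("models", [])]


def local_model_names(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask the Ollama server which models are available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return extract_model_names(json.loads(resp.read().decode("utf-8")))
```

With the server running, `"llama3:8b" in local_model_names()` should be `True` once the download has finished.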

Use

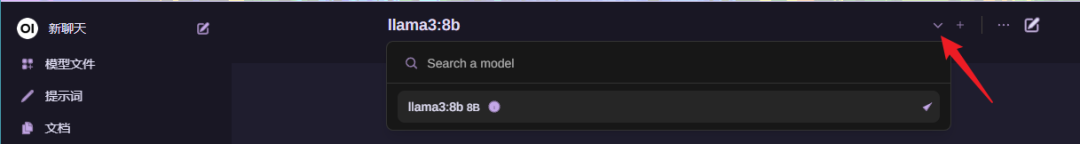

Select model

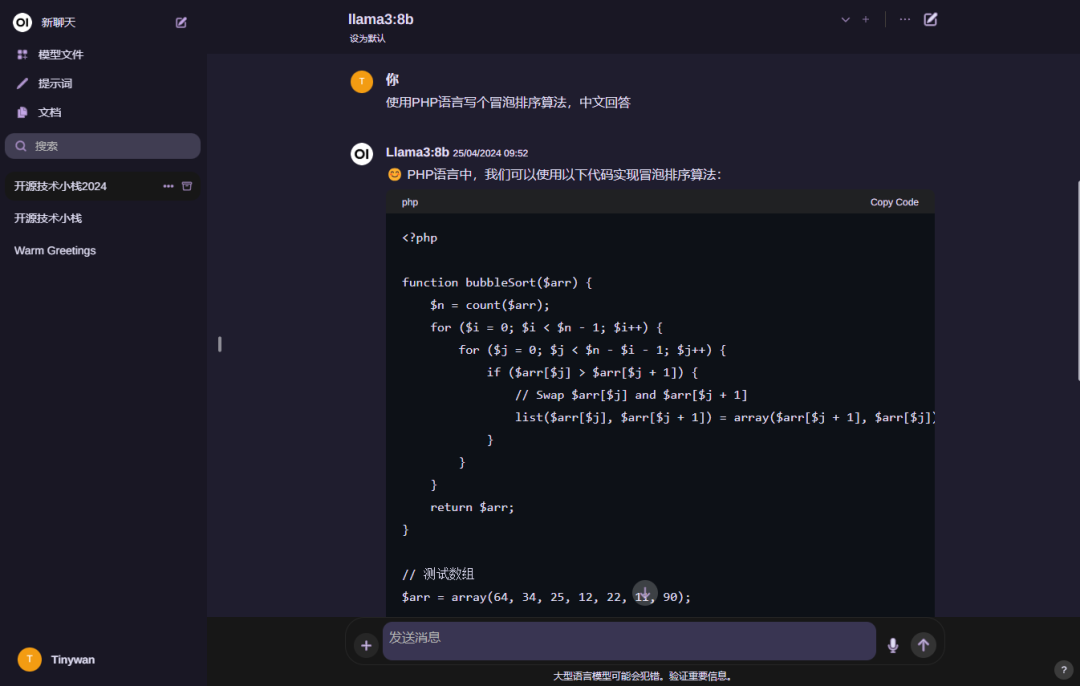

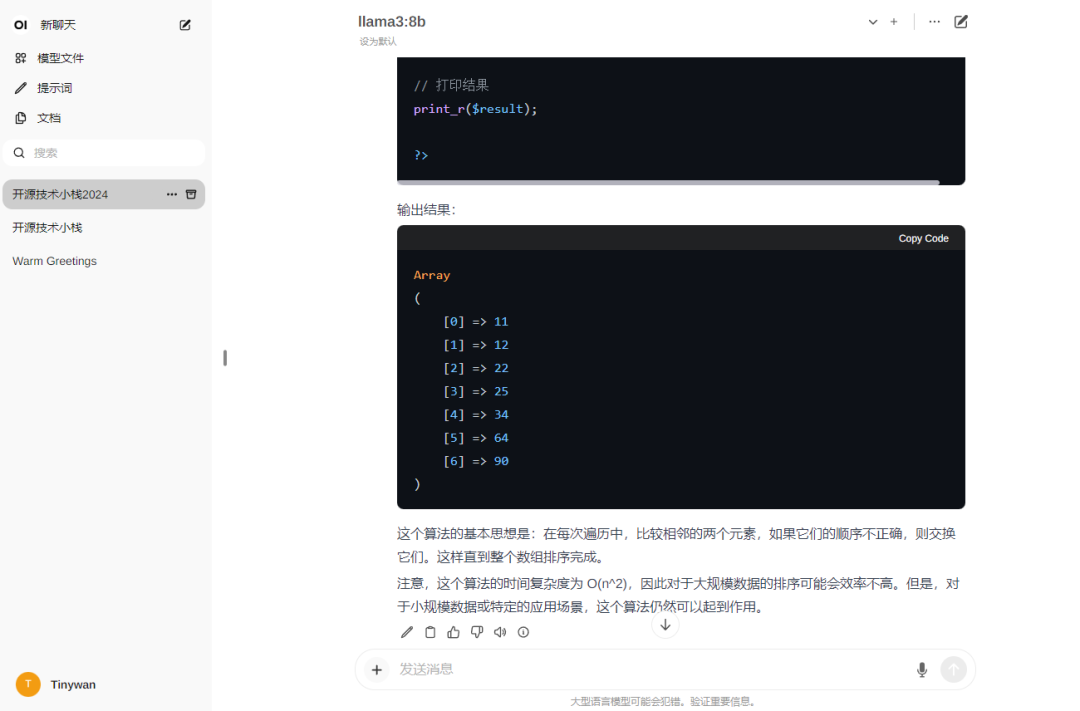

Use model

Note: If you want the model to reply in Chinese, please enter: Hello! Please reply in Chinese

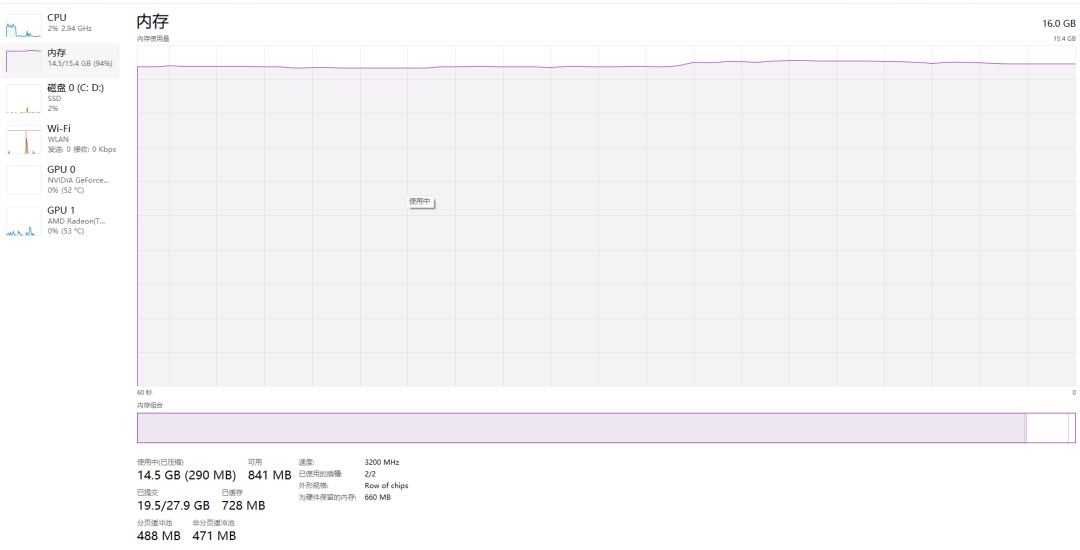

Memory

The above is the detailed content of Docker completes local deployment of LLama3 open source large model in three minutes. For more information, please follow other related articles on the PHP Chinese website!
