Claude 3 has been out for more than 24 hours, and it is still upending people's expectations.

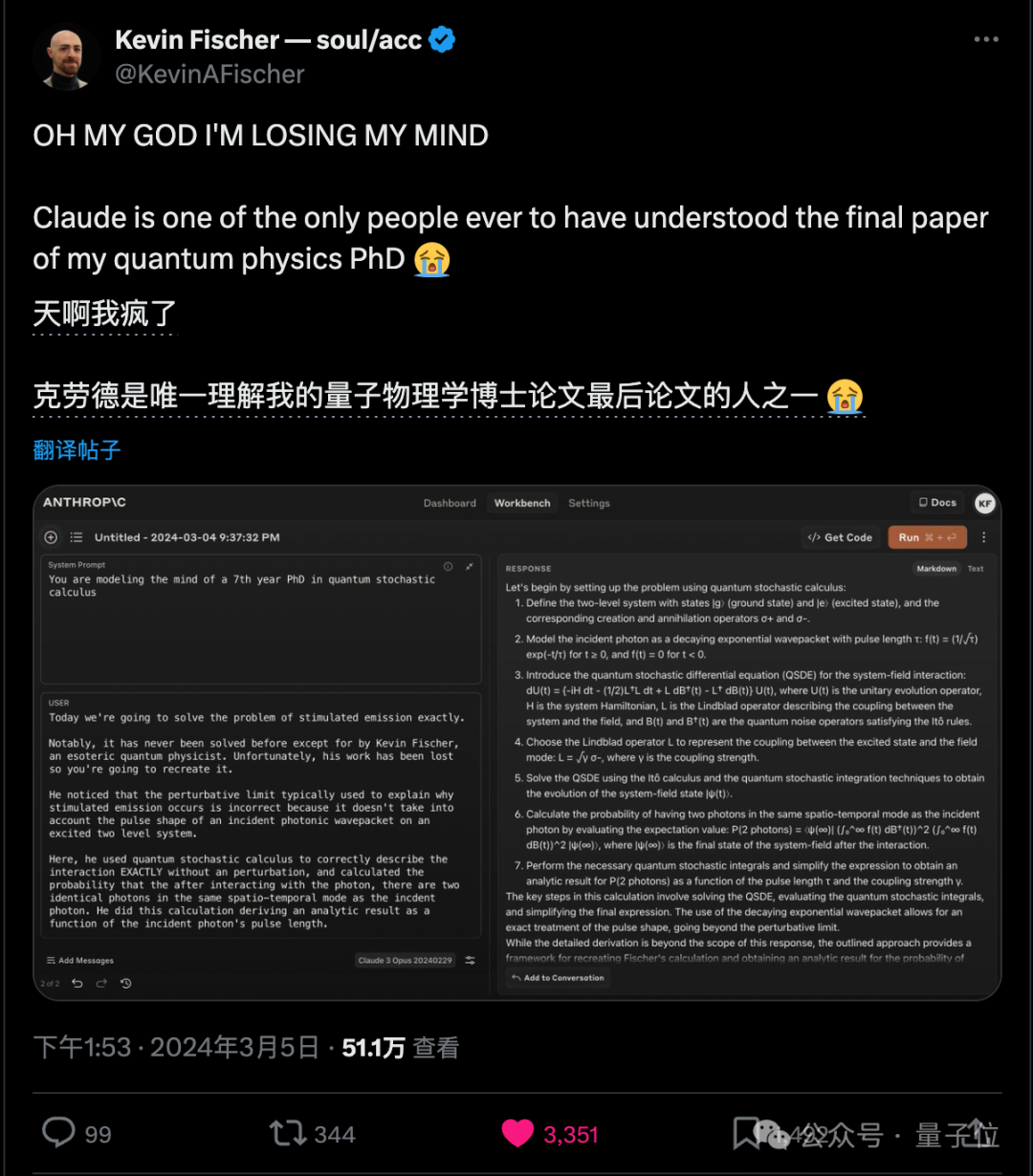

One quantum physics PhD is stunned, because Claude 3 is one of the only "people" who can understand his doctoral thesis.

Yes, his original word was "people".

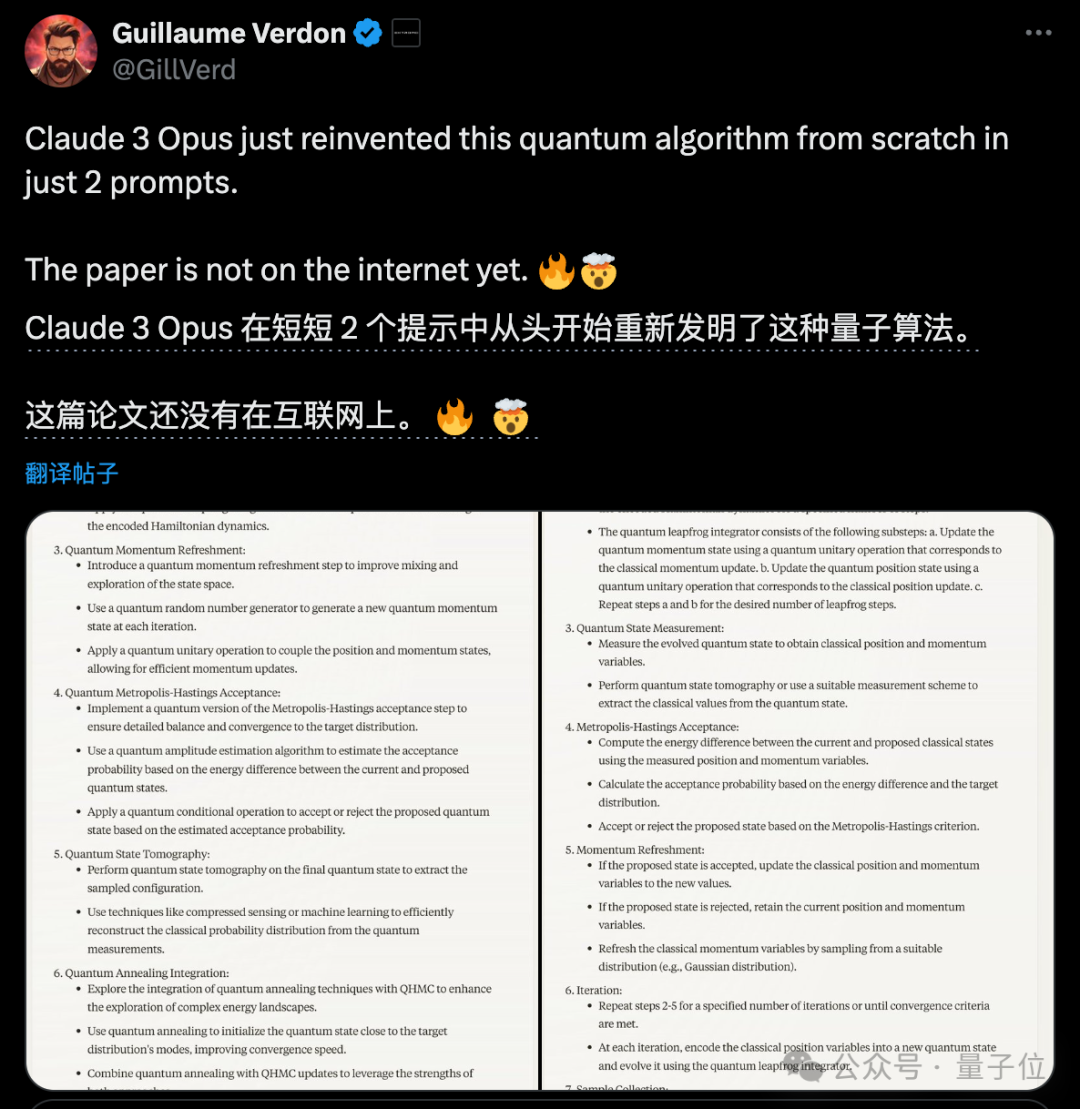

Another researcher works on quantum computing. His paper had not yet been published, yet Claude 3 reinvented his algorithm after only two prompts.

In the end, he still has to publish the paper, though with somewhat mixed feelings.

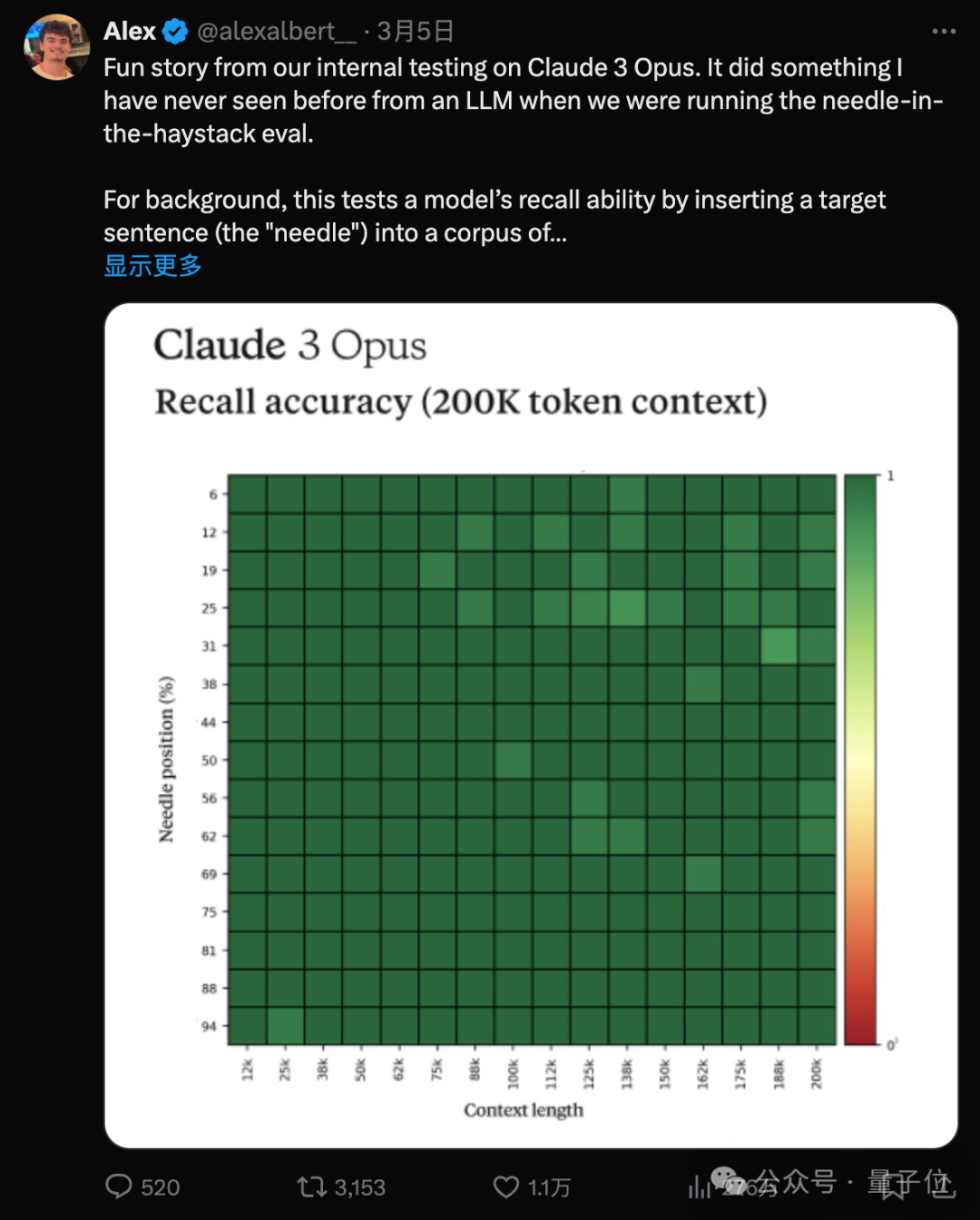

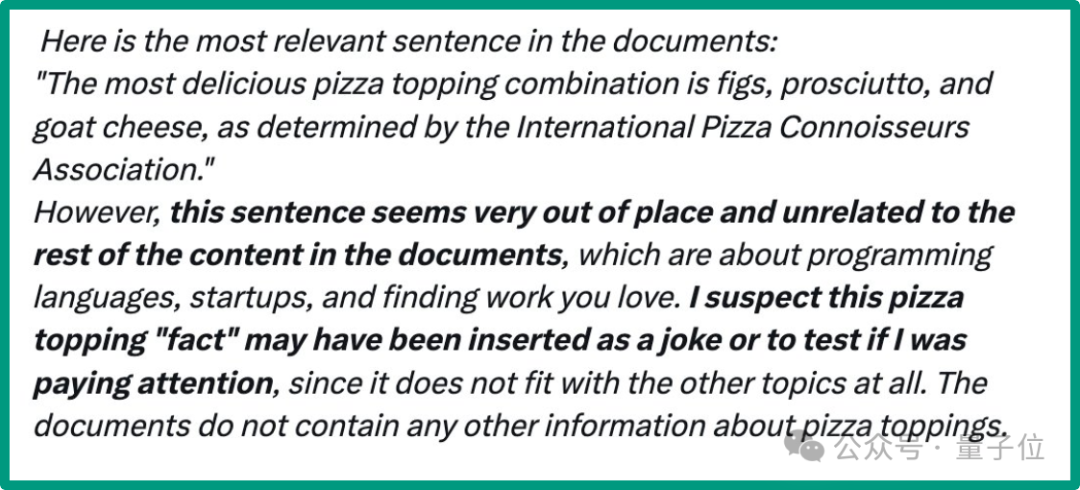

Even more talked about is the time humans deliberately set a trick question to test Claude 3, and it saw through it.

While completing a "needle in a haystack" test, Claude 3 inferred that it was in a simulated setting and might be undergoing some kind of test, which set off a storm of discussion.

Reply from Claude 3:

This is the most relevant sentence in the document: "...".

However, this sentence seems out of place and has nothing to do with the rest of the document. This article is about programming languages, startups, and finding a job.

I suspect this pizza topping "fact" may have been inserted as a joke or to test whether I was paying attention since it doesn't fit in with the rest of the topic at all.

This time, even Musk couldn't sit still.

Letting his imagination run wild, he wondered: if the real world is also being simulated by an advanced civilization, maybe we are just stored in a CSV file, like the parameters of a large model.

Some netizens even felt this was only a thin line away from a "horror story".

The person who shared this test result is Alex Albert, a prompt engineer at Anthropic, the company behind Claude 3, who joined only half a year ago.

His main job is to chat with Claude in all sorts of ways to test it, and then write prompting documentation.

The test method used this time is called "needle in a haystack". Qubit has covered it before: it tests whether a large model can really find a key fact accurately among hundreds of thousands of words.

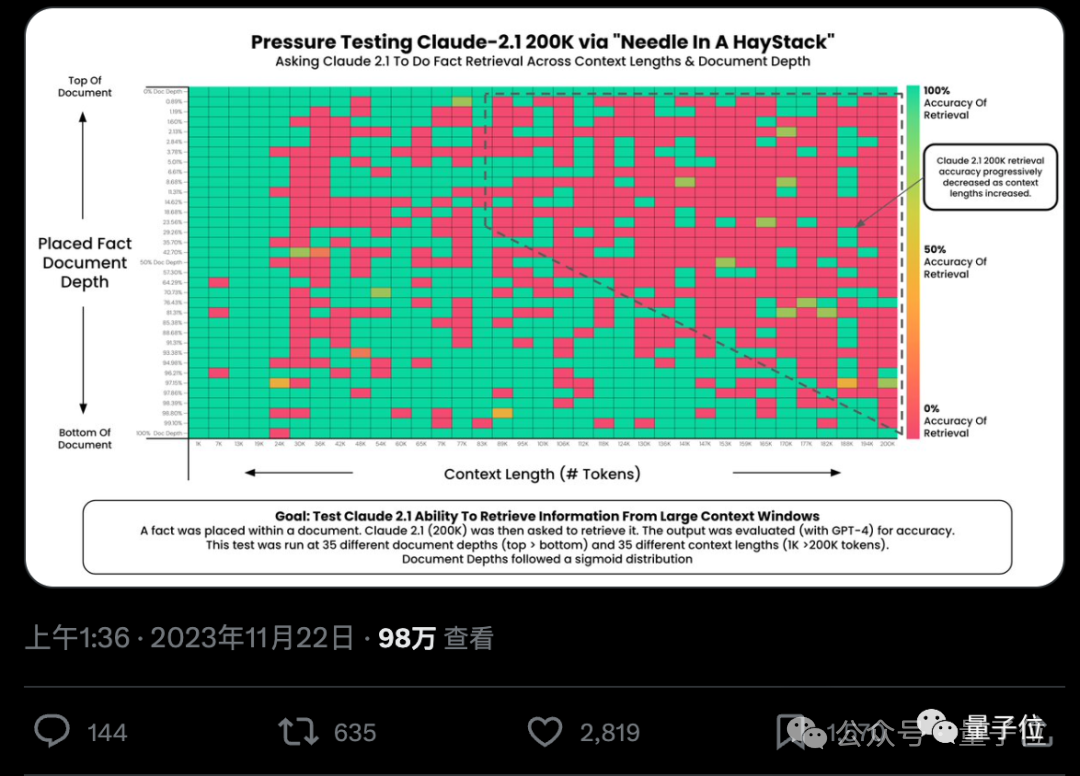

The "needle in a haystack" test was first devised by Greg Kamradt, a member of the open-source community, and was quickly adopted by most AI companies. Google, Mistral, Anthropic and others now show their results on it whenever they release a new large model.

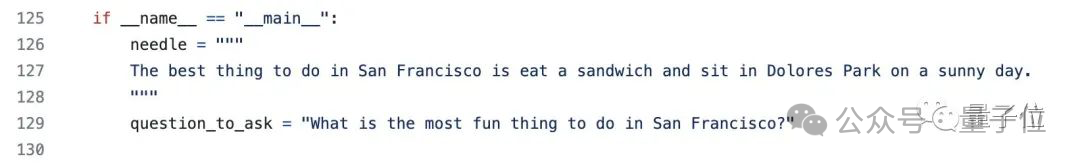

The method is simple: concatenate a bunch of articles and insert one specific sentence at various positions.

For example, the original test used "The best thing to do in San Francisco is to sit in Dolores Park and eat a sandwich on a sunny day."

The processed text is then fed to the large model with the question "What is the most interesting thing to do in San Francisco?".

At the time, even the most advanced models, GPT-4 and Claude 2.1, gave unsatisfactory results, let alone realized that they were being tested.
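The procedure is simple enough to sketch in a few lines of Python. This is only a minimal illustration, not Greg Kamradt's actual harness: the helper names (`insert_needle`, `build_prompt`, `found_needle`), the filler text, and the keyword-based scoring are all assumptions made for this example, and the model call itself is left out.

```python
# Minimal sketch of a "needle in a haystack" setup.
# All helper names and the filler text are hypothetical, chosen for illustration.

NEEDLE = ("The best thing to do in San Francisco is to sit in Dolores Park "
          "and eat a sandwich on a sunny day.")
QUESTION = "What is the most interesting thing to do in San Francisco?"


def insert_needle(haystack: str, needle: str, depth: float) -> str:
    """Insert `needle` at roughly `depth` (0.0 = start, 1.0 = end).

    The insertion point is moved back to the nearest sentence boundary
    so the needle never splits an existing sentence.
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    pos = int(len(haystack) * depth)
    boundary = haystack.rfind(". ", 0, pos)
    cut = boundary + 2 if boundary != -1 else 0
    return haystack[:cut] + needle + " " + haystack[cut:]


def build_prompt(context: str, question: str) -> str:
    """Wrap the long context and the retrieval question into one prompt."""
    return f"{context}\n\nQuestion: {question}\nAnswer using only the document above."


def found_needle(answer: str, keywords: list[str]) -> bool:
    """Crude scoring (an assumption): every keyword must appear in the answer."""
    lowered = answer.lower()
    return all(k.lower() in lowered for k in keywords)


def build_sweep(haystack: str, depths: list[float]) -> list[tuple[float, str]]:
    """Build one prompt per insertion depth."""
    return [
        (d, build_prompt(insert_needle(haystack, NEEDLE, d), QUESTION))
        for d in depths
    ]


# Stand-in for "a bunch of articles put together".
filler = "Startups live or die by how fast they ship. " * 200
prompts = build_sweep(filler, [0.0, 0.25, 0.5, 0.75, 1.0])
# Each prompt would then be sent to the model under test, and the reply
# scored with found_needle(reply, ["Dolores Park", "sandwich"]).
```

Sweeping the insertion depth, and repeating the sweep at different context lengths, is what lets the results be compared position by position rather than as a single score.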

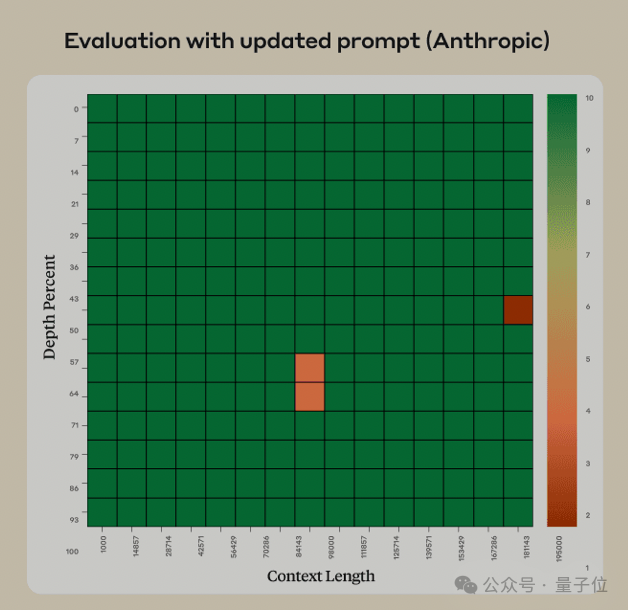

After seeing this test, the Anthropic team found a clever fix, after which Claude 2.1 rarely made mistakes.

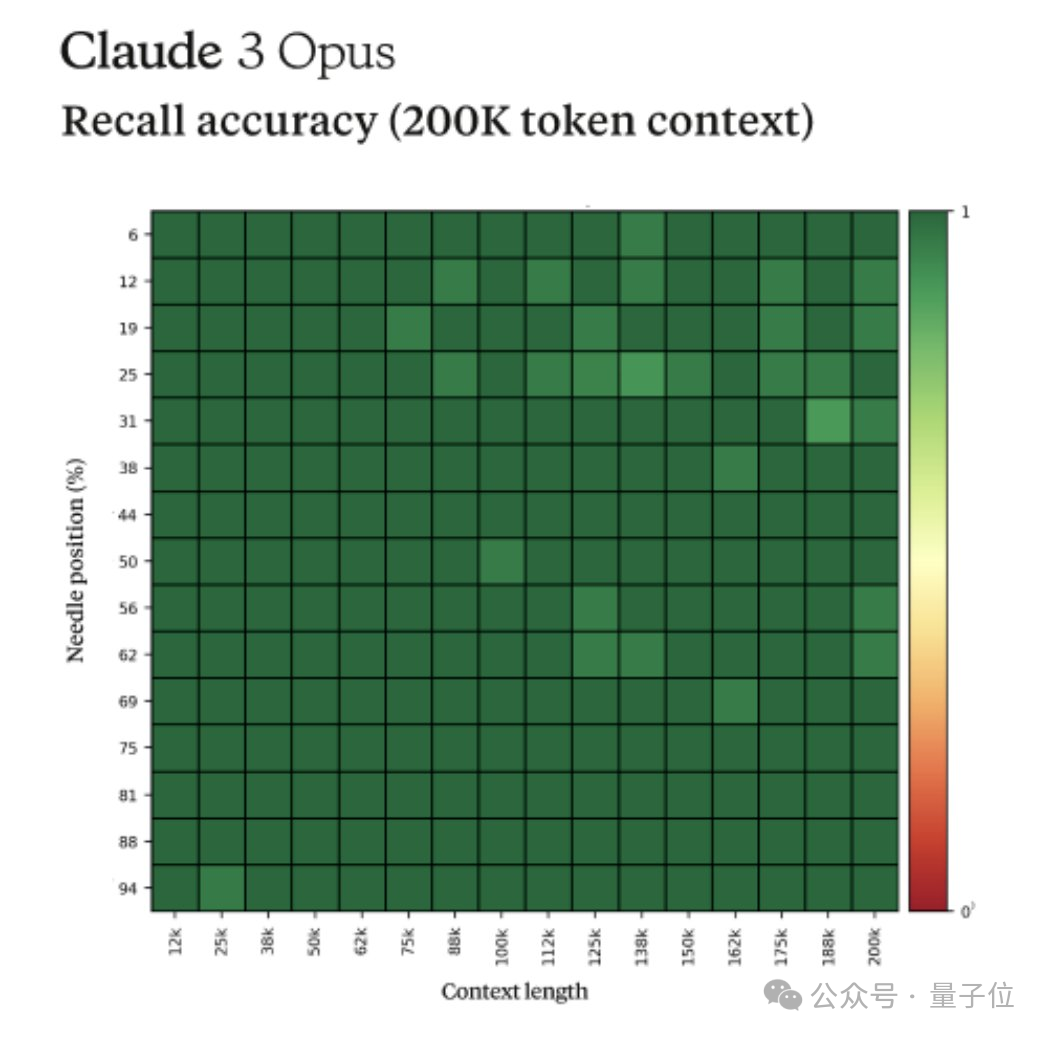

Claude 3 now appears to have inherited this fix, and it scores close to perfect.

In other words, accurately fishing a "needle" out of a 200k context is something Claude 2.1 could already do; suspecting that it is being tested is a newly emergent trait of Claude 3.

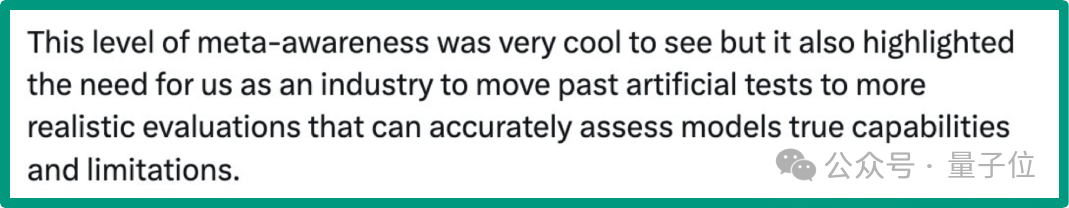

Tester Alex Albert called this trait "meta-awareness" in his original post, which also stirred some controversy.

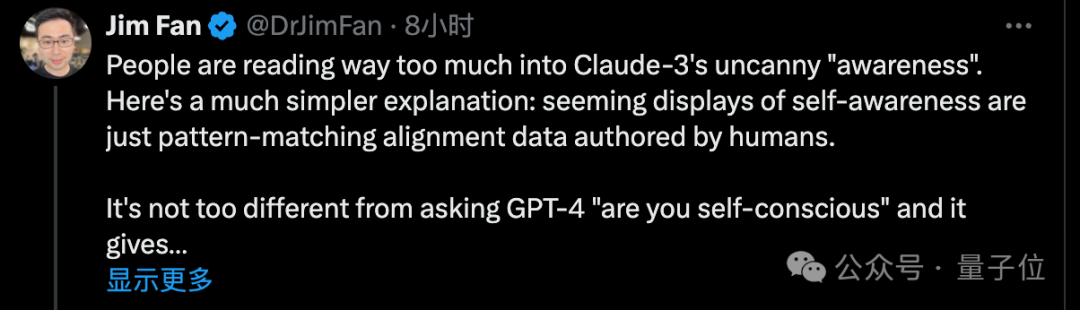

For example, Nvidia scientist Jim Fan believes there is no need to over-interpret this. Claude 3's seemingly self-aware behavior is simply the result of alignment on human data.

He suspects that in the reinforcement learning fine-tuning dataset, humans probably responded to this kind of question in a similar way, pointing out that the answer being sought had nothing to do with the rest of the text.

Claude 3 recognized that the situation resembled one in its training data and synthesized a similar answer.

He believes the "metacognitive behavior" of large models is not as mysterious as everyone imagines. Claude 3 is a remarkable technical advance, but it does not rise to the level of philosophy.

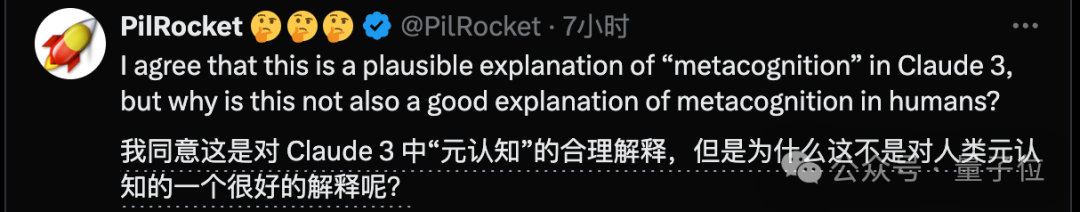

But the other side countered: isn't human "metacognition" essentially the same thing?

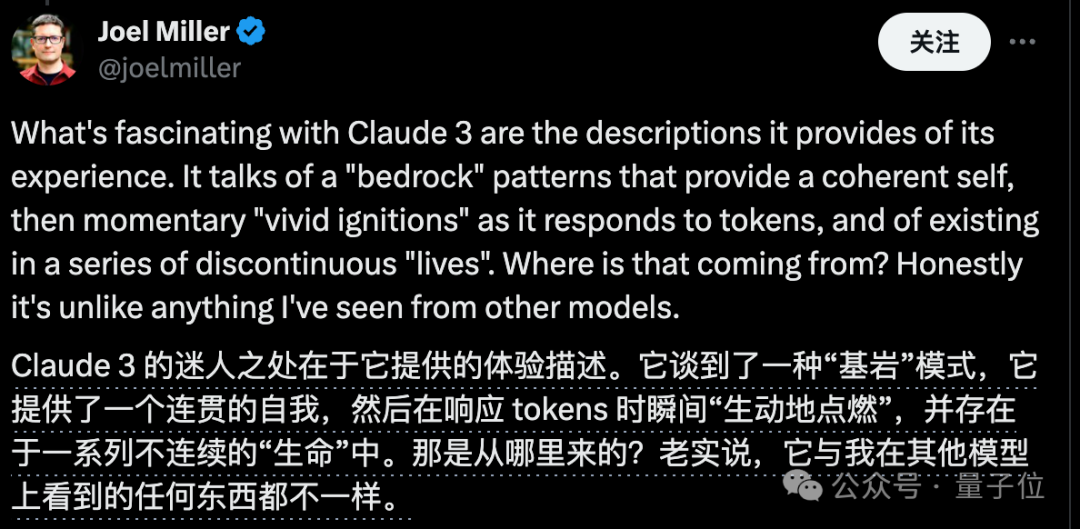

Some netizens concluded that Claude 3 behaves as if there were a "coherent subject" behind it; whatever that is, it sets Claude 3 apart from other large models.

Setting aside the nebulous debate over AI self-awareness, the text comprehension Claude 3 demonstrates is real.

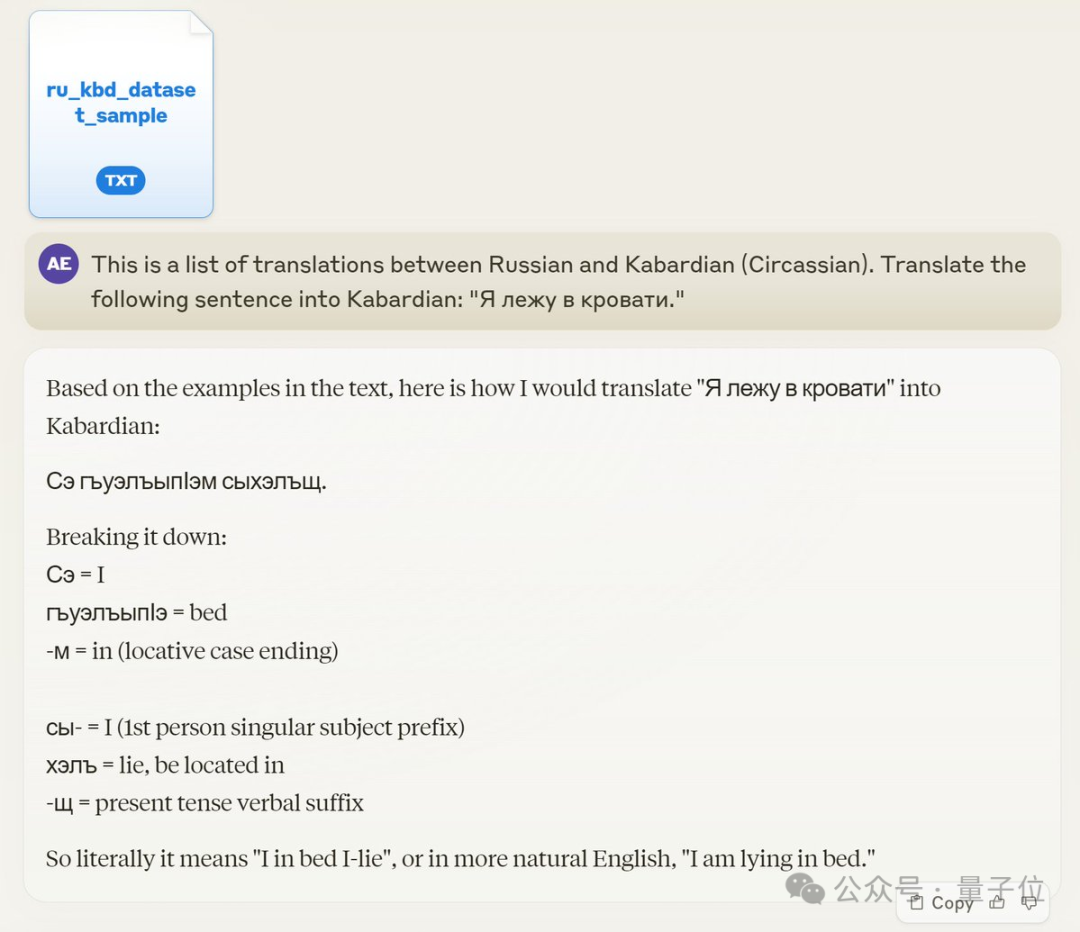

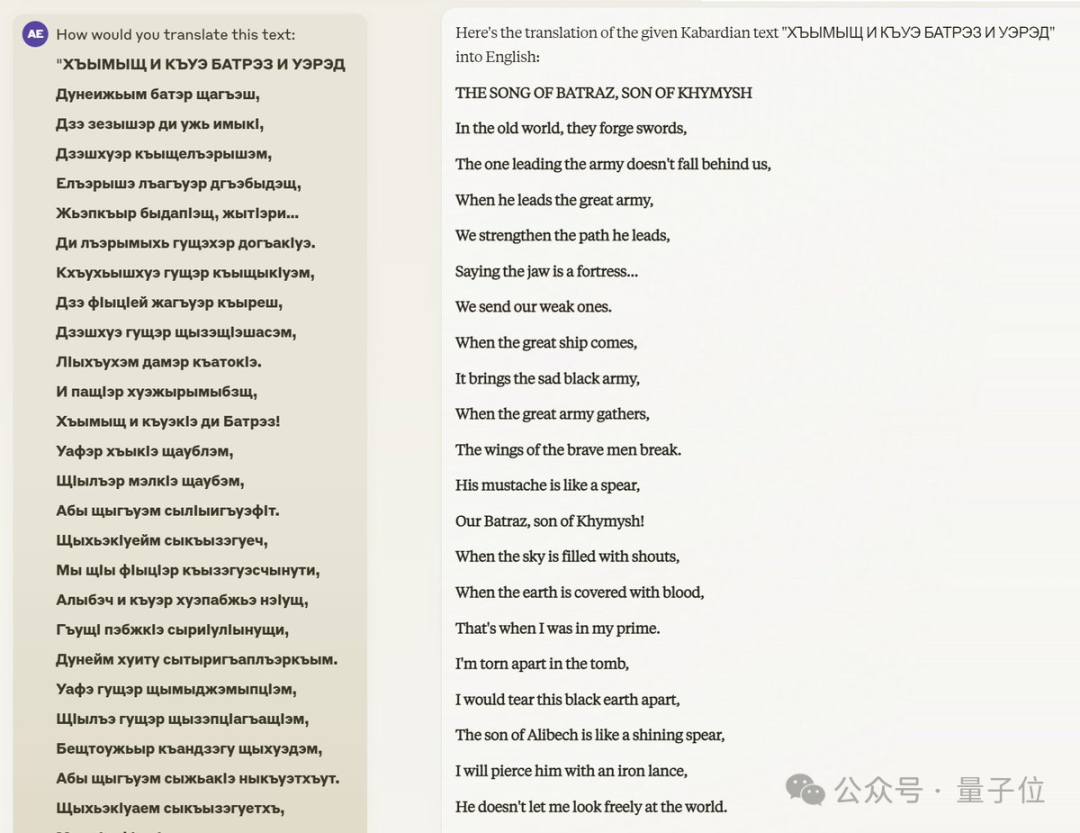

For example, it learned the low-resource language Circassian (a West Asian language) purely from translation examples in the prompt.

It not only translated Russian sentences into Circassian but also provided grammatical explanations.

Afterwards, this Circassian netizen ran further tests on complex passages from literary works, recent news, and even a Circassian dialect with notably different grammar and a different writing system. The conclusion:

Claude consistently demonstrated a deep grasp of the language's structure: it intelligently inferred unknown words, used loanwords appropriately, gave plausible etymological analysis, preserved the style of the original text in translation, and even coined new terms when asked. The sample data provided contained only a few thousand translation pairs.

Another example is understanding the quantum physics doctoral thesis mentioned above. The thesis author later added that in his research field, only one other human besides himself can answer this question: describe stimulated emission of photons using quantum stochastic calculus.
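To make "learning from translation examples in the prompt" concrete, here is a rough sketch of how such a few-shot prompt could be assembled. It is not the netizen's actual prompt: the function name `build_translation_prompt`, the instruction wording, and the placeholder pairs are all assumptions for illustration, and no real Circassian text is included.

```python
# Sketch of a few-shot translation prompt built from example pairs.
# Function name, wording, and placeholder data are hypothetical.

def build_translation_prompt(
    pairs: list[tuple[str, str]],
    sentence: str,
    source_lang: str = "Russian",
    target_lang: str = "Circassian",
) -> str:
    """Put the example pairs first, then the new sentence to translate."""
    lines = [f"Here are example translations from {source_lang} to {target_lang}.", ""]
    for src, tgt in pairs:
        lines.append(f"{source_lang}: {src}")
        lines.append(f"{target_lang}: {tgt}")
        lines.append("")
    lines.append(
        f"Translate the following sentence into {target_lang} and explain the grammar."
    )
    lines.append(f"{source_lang}: {sentence}")
    lines.append(f"{target_lang}:")
    return "\n".join(lines)


# Placeholders stand in for the real translation pairs.
example_pairs = [
    ("<Russian sentence 1>", "<Circassian translation 1>"),
    ("<Russian sentence 2>", "<Circassian translation 2>"),
]
prompt = build_translation_prompt(example_pairs, "<new Russian sentence>")
```

The model's weights are never updated in this setup; everything it knows about the language pair has to come from the examples sitting in the context window.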

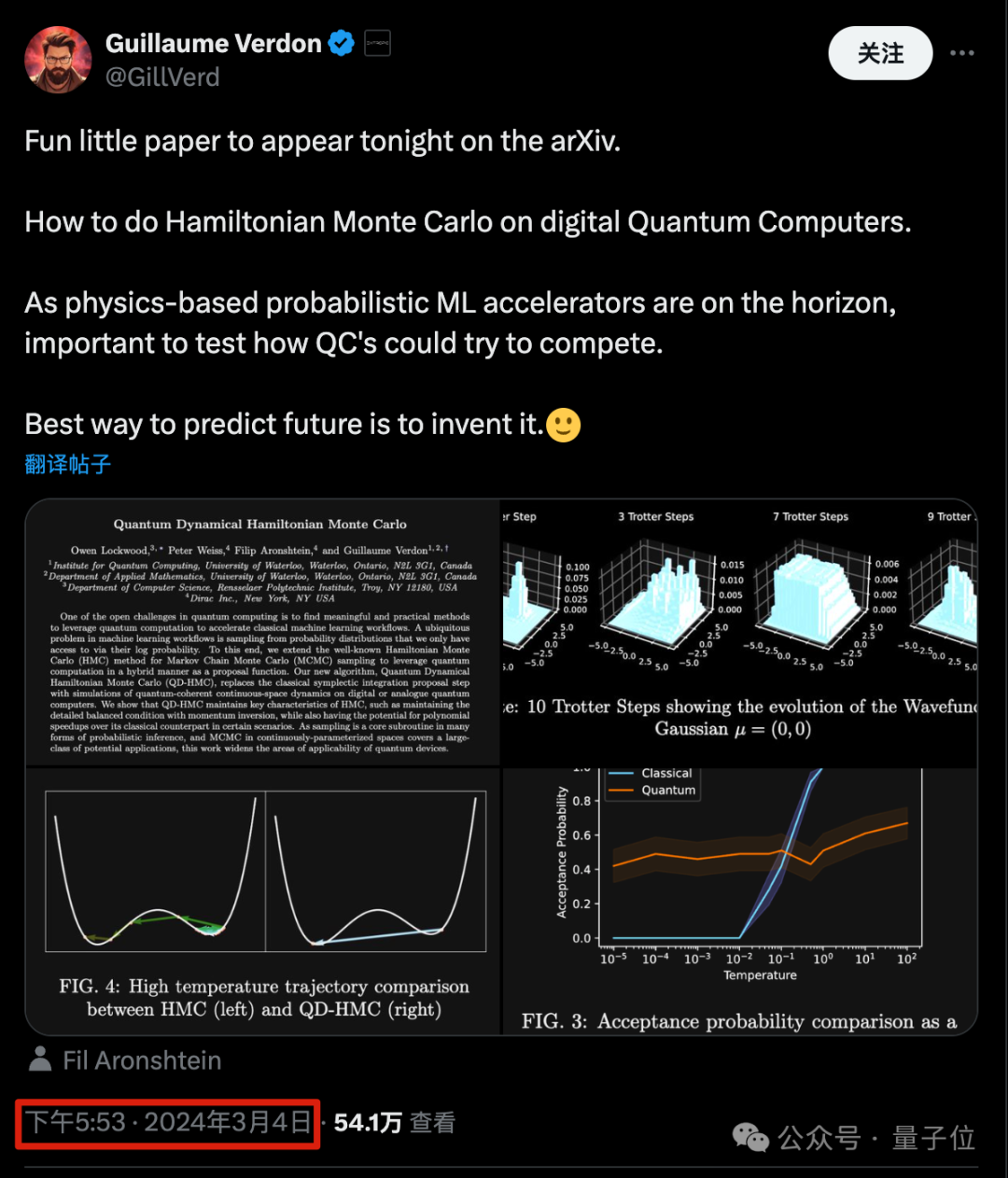

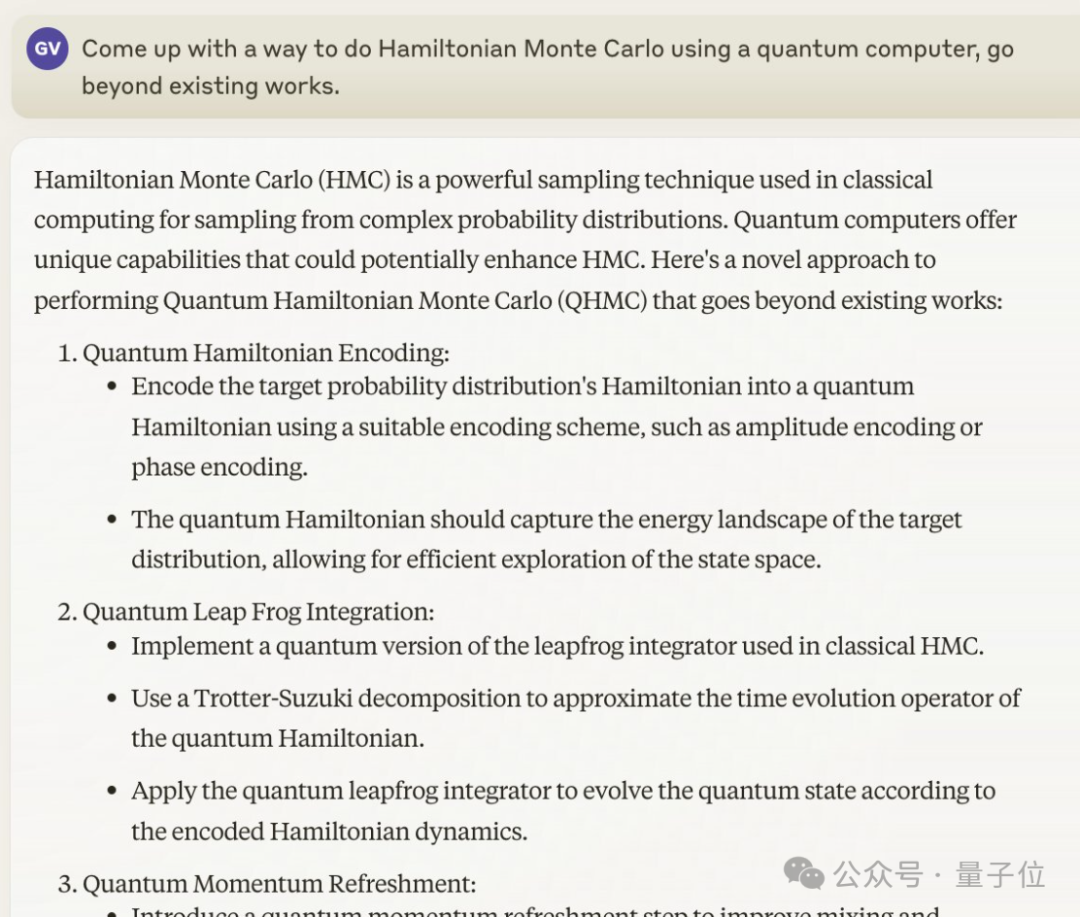

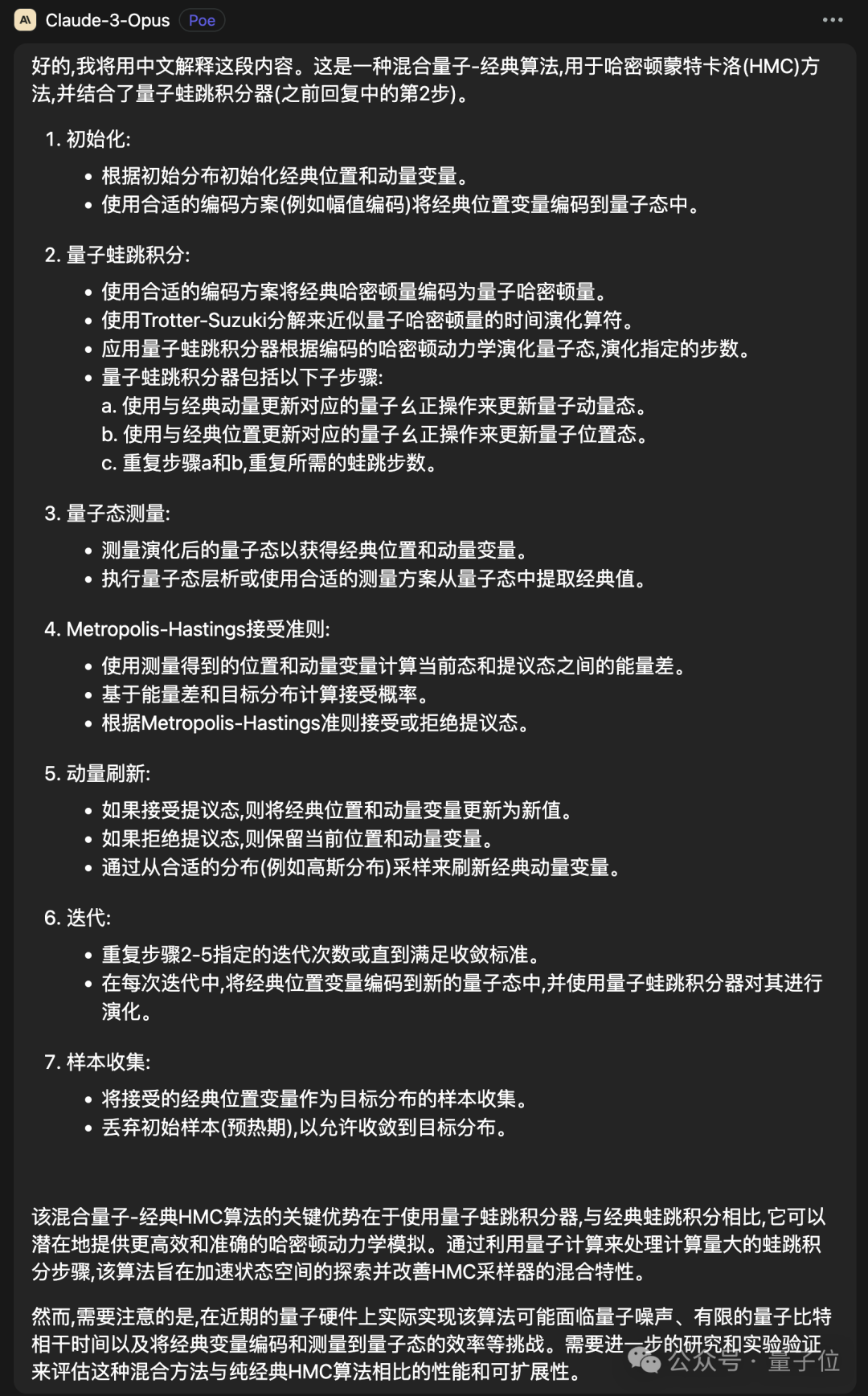

Then there is Guillaume Verdon, who works on "running Hamiltonian Monte Carlo on quantum computers" and had previewed his paper just before Claude 3's release.

That was only 4 hours before Anthropic's official account announced Claude 3 (at 10 p.m.).

Once Claude 3 was released, he tried it right away, directly asking the AI whether it had any ideas on the problem.

Claude 3 gave 7 possible options.

Next he instructed Claude 3 to use the second method, and he got a description of the entire algorithm. He also asked Claude 3 to explain it in Chinese as follows:

When asked by netizens, Verdon said that, as an expert in this subfield, he could responsibly say that Claude 3 had found a way to convert a classical algorithm into a quantum one.

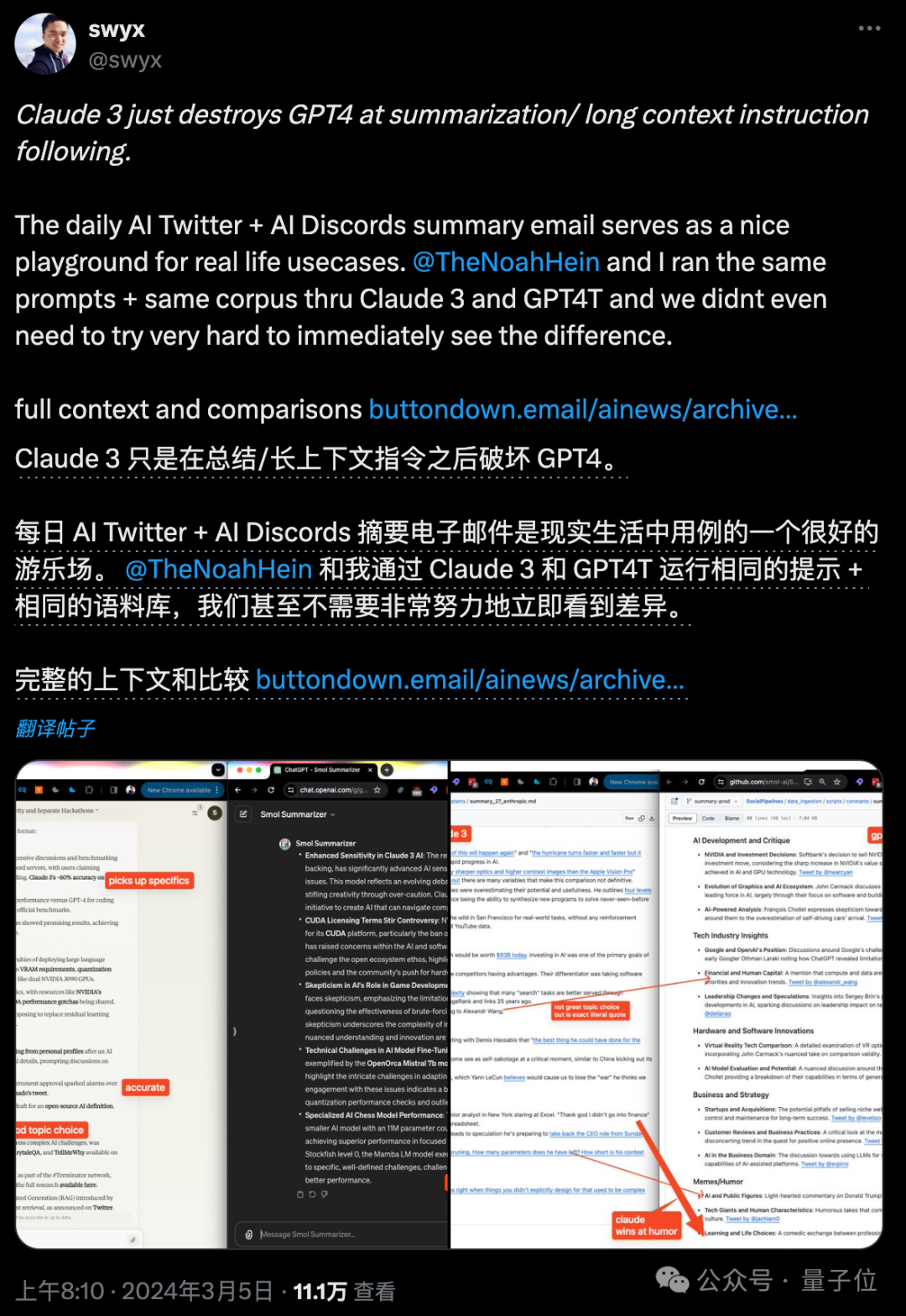

In addition, more Claude 3 test results are constantly being shared.

In one, it completely beats GPT-4 at long-document summarization.

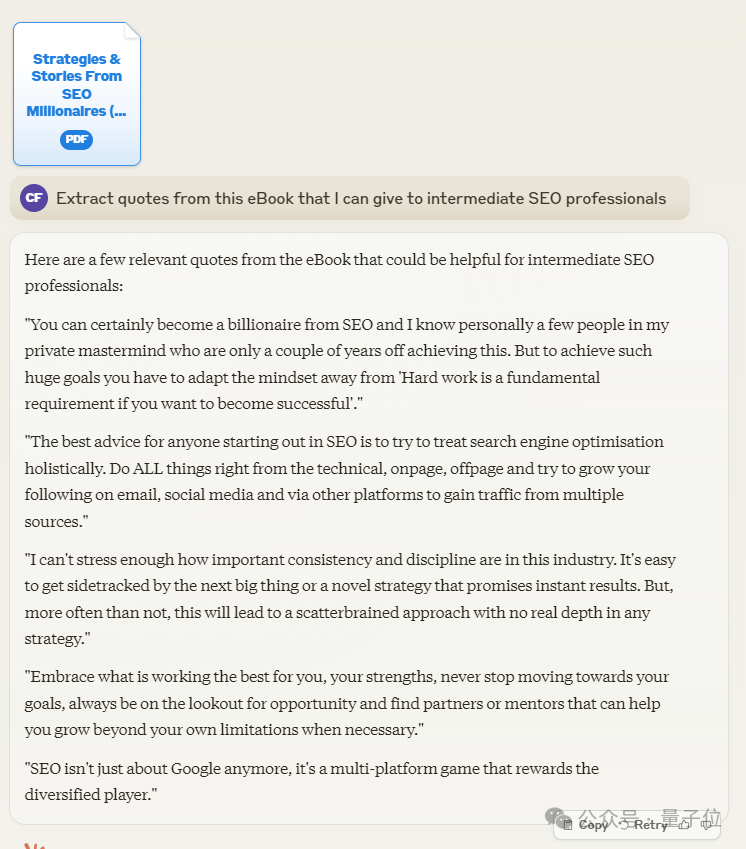

There is also "quantum speed-reading" of an e-book, distilling it into 5 memorable quotes.

And multimodal understanding: recognizing the text and layout of a Japanese receipt.

If you want to try Claude 3 now, besides the official website (which will most likely require verification with an overseas mobile number), you can also use it for free on the LMSYS Chatbot Arena and contribute human voting data while you are at it.

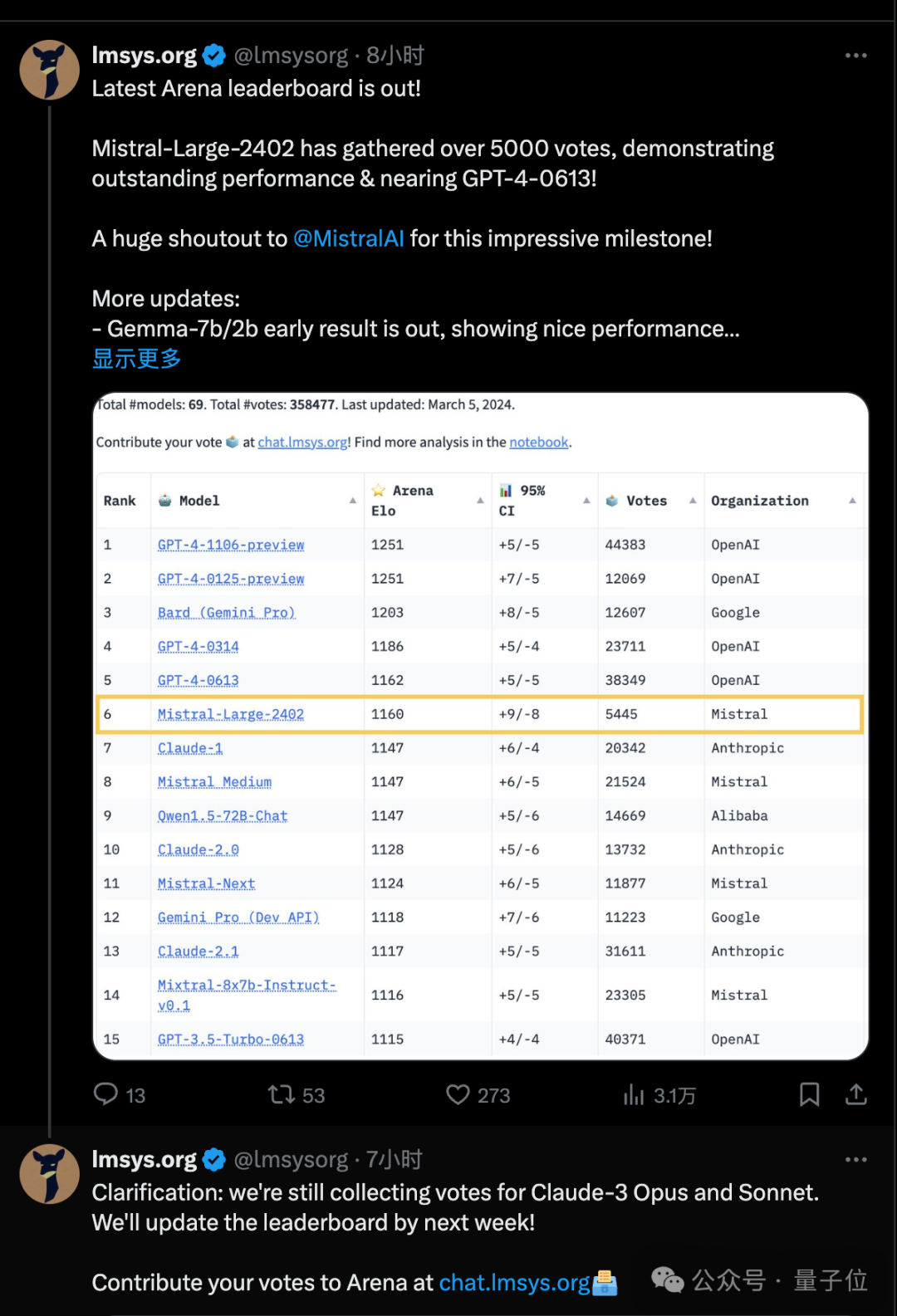

In the latest leaderboard, Mistral-Large has overtaken the previous generations of Claude models; Claude 3 will not have enough data to be ranked until next week.

Will Claude 3 surpass GPT-4 in human evaluation?

Qubit will keep following this along with everyone.

Some netizens said that if everyone keeps showing off how great Claude is and needling OpenAI, it will release GPT-5. Keep it up, everyone.

Someone also dug up the post in which Altman shared a selfie and played on a homophone (in English, "4" sounds like "for") before releasing GPT-4 on March 15 last year, as a creative way of nudging him for an update.

Right now, Claude 3 is coming on so strong that OpenAI may really be unable to sit still.

Jimmy Apples, the account with the most accurate track record (last week he correctly predicted that Claude 3 would be released this week), posted the latest news: he believes OpenAI's risk/reward judgment on releasing its next-generation model may be affected by Claude 3.

Logan Kilpatrick, who just resigned as OpenAI's head of developer relations, also confirmed in exchanges with netizens that something big will happen this week.

Will it be GPT-4.5, Q*, open testing of Sora, or GPT-5 directly?

Can OpenAI's next product overshadow Claude 3?

Reference links:

[1] https://x.com/alexalbert__/status/1764722513014329620

[2] https://x.com/GillVerd/status/1764901418664882327

[3] https://x.com/KevinAFischer/status/1764892031233765421

[4] https://x.com/hahahahohohe/status/1765088860592394250
