In a family home, someone is often asked to fetch the remote control from the TV cabinet, and sometimes even the pet dog gets sent. But there are always times when there is no one else to ask, and a dog may not understand the instruction anyway. What people have long hoped for is a robot that can take care of such chores. This is our ultimate dream for robots.

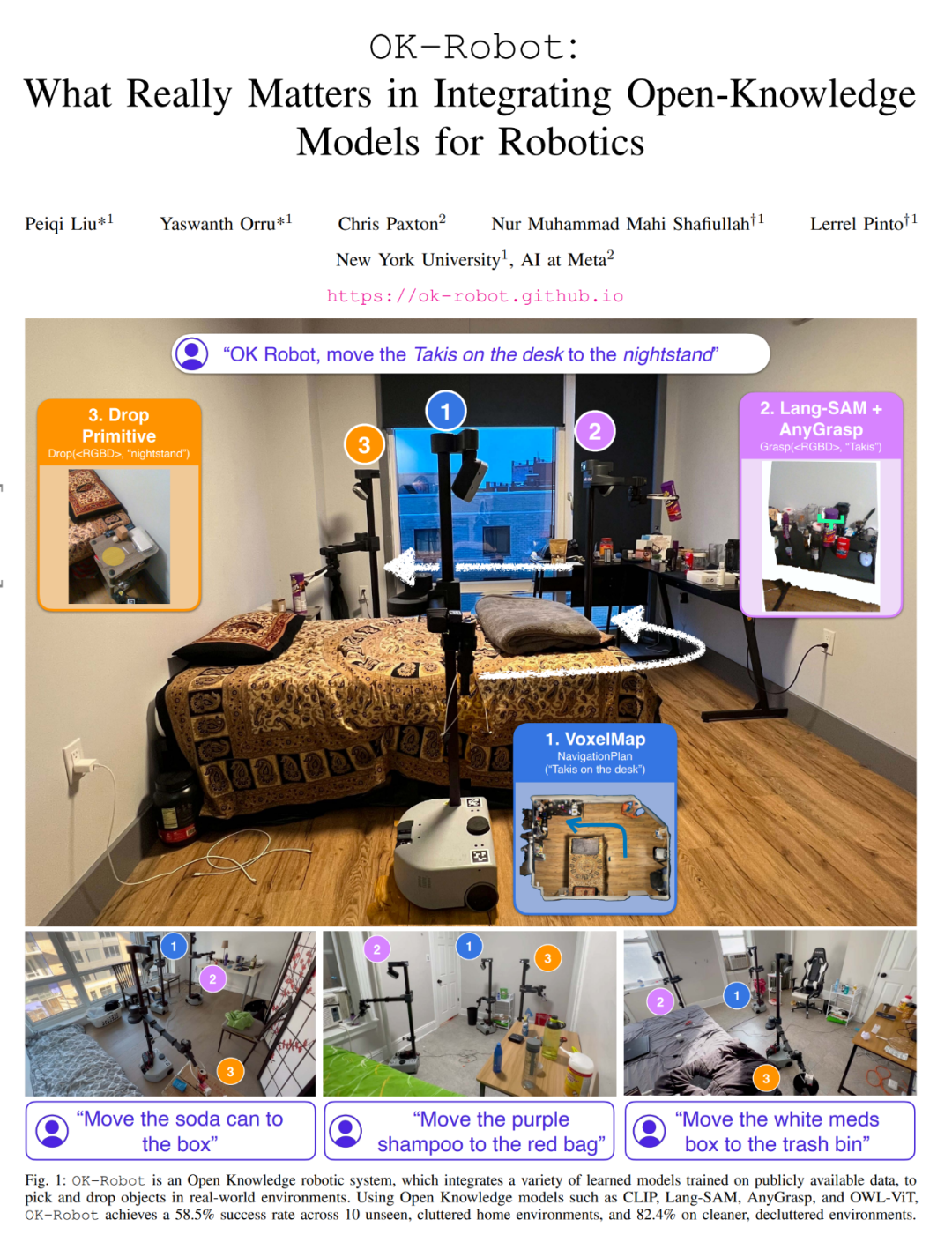

Recently, New York University and Meta jointly developed a robot capable of acting autonomously. Tell it "Please move the cornflakes from the table to the bedside table," and it will find the cornflakes on its own, plan a route and the corresponding actions, and complete the task. The robot can also tidy up items and throw away garbage for you.

This robot, called OK-Robot, was built by researchers from New York University and Meta. They integrated basic modules for vision-language models, navigation, and grasping into an open-knowledge framework, providing a solution for efficient robotic pick-and-place. This suggests that when we get older, buying a robot to serve us tea and pour water may become a reality.

The "open knowledge" in OK-Robot refers to its use of learned models trained on large, publicly available datasets. When OK-Robot is placed in a new home, it takes as input a scan of the home captured with an iPhone. From these scans, it computes dense vision-language representations using LangSam and CLIP and stores them in a semantic memory. When it is then given a language query for an object to pick up, the query's language representation is matched against the semantic memory. OK-Robot then applies the navigation and picking modules in turn, moving to the object and picking it up. A similar process is used to drop objects off.
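The query-matching step can be sketched as a nearest-neighbor lookup by cosine similarity. This is a minimal sketch, assuming the semantic memory is a plain list of (3D location, embedding) pairs and that the query has already been encoded into the same embedding space (by CLIP's text encoder in the real system); `SemanticMemory` and the toy three-dimensional vectors are hypothetical stand-ins, not OK-Robot's code.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticMemory:
    """Hypothetical semantic memory: 3D locations tagged with embeddings."""

    def __init__(self) -> None:
        self.entries: List[Tuple[Point3, List[float]]] = []

    def add(self, location: Point3, embedding: Sequence[float]) -> None:
        self.entries.append((location, list(embedding)))

    def query(self, query_embedding: Sequence[float]) -> Point3:
        """Return the stored location whose embedding best matches the query."""
        if not self.entries:
            raise ValueError("semantic memory is empty")
        location, _ = max(
            self.entries, key=lambda e: cosine_similarity(e[1], query_embedding)
        )
        return location


# Toy usage: 3-D vectors stand in for real CLIP embeddings.
memory = SemanticMemory()
memory.add((1.0, 2.0, 0.5), [0.9, 0.1, 0.0])  # e.g. a cereal box
memory.add((3.0, 0.0, 0.8), [0.0, 0.2, 0.9])  # e.g. a lamp
print(memory.query([1.0, 0.0, 0.0]))  # prints (1.0, 2.0, 0.5)
```

Real embeddings have hundreds of dimensions and the memory holds many thousands of entries, so a practical system would vectorize this search; the logic stays the same.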

To evaluate OK-Robot, the researchers tested it in 10 real homes. In unseen, natural home environments, the system achieved an average zero-shot deployment success rate of 58.5%. This success rate, however, depends heavily on how "natural" the environment is. The researchers also found that refining the queries, tidying up the space, and excluding clearly adversarial objects (for example, objects that are too large, too translucent, or too slippery) raised the success rate to about 82.4%.

OK-Robot attempted 171 pickup tasks in 10 home environments in New York City.

In short, through experiments, they came to the following conclusions:

To encourage and support other researchers' work in open-knowledge robotics, the authors have stated that they will share the code and modules of OK-Robot. More information is available at: https://ok-robot.github.io.

The research addresses the following problem: pick up A from B and place it on C, where A is an object and B and C are locations in a real-world environment. To achieve this, the proposed system comprises three modules: an open-vocabulary object navigation module, an open-vocabulary RGB-D grasping module, and a heuristic module for releasing or placing objects (the dropping heuristic).

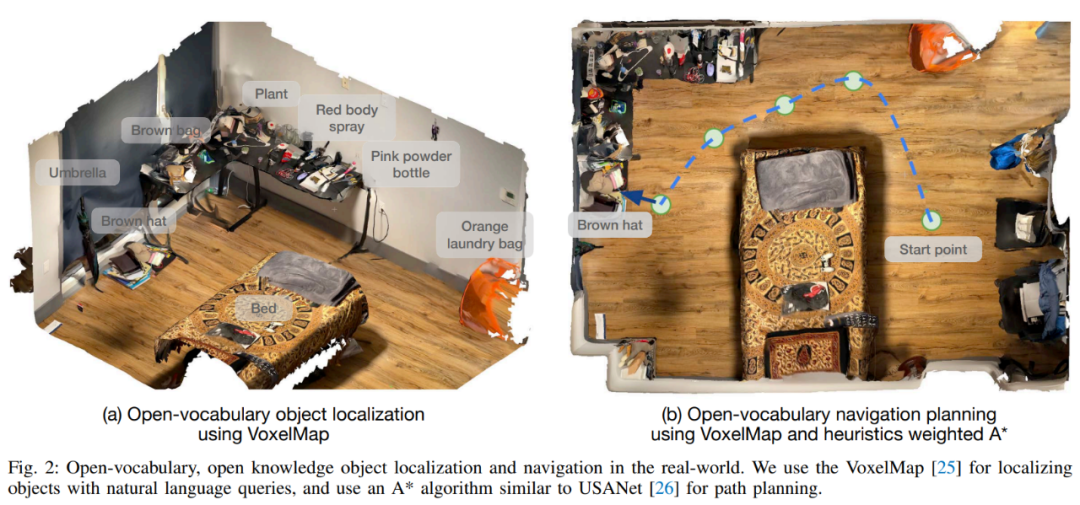

Open Vocabulary Object Navigation

The first step is scanning the room. Open-vocabulary object navigation follows the CLIP-Fields approach and assumes a pre-mapping phase in which the home is scanned manually with an iPhone. The manual scan simply consists of recording a video of the home with the Record3D app on an iPhone, which produces a sequence of RGB-D images with associated camera poses.

Scanning each room takes less than a minute. Once the data is collected, the RGB-D images and camera poses are exported into the project's codebase for map building. The recording must capture the floor as well as the objects and containers in the environment.

The next step is object detection. Each scanned frame is processed by an open-vocabulary object detector. The paper chooses the OWL-ViT detector because it performed better on preliminary queries. The detector is applied to every frame, and for each detected object the bounding box, CLIP embedding, and detector confidence are extracted and passed to the object memory of the navigation module.
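The per-frame output described above can be sketched as a small record type plus a store that collects it. This is a minimal illustration, assuming the detector has already returned (box, embedding, confidence) triples for a frame; `Detection`, `ObjectStore`, and the `min_confidence` cut-off are hypothetical names and values, not part of OWL-ViT's or OK-Robot's API.

```python
from dataclasses import dataclass, field
from typing import Iterable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Detection:
    frame_id: int
    box: Box
    clip_embedding: List[float]
    confidence: float


@dataclass
class ObjectStore:
    # Hypothetical cut-off; the article does not say whether or how OK-Robot thresholds.
    min_confidence: float = 0.1
    detections: List[Detection] = field(default_factory=list)

    def add_frame(
        self, frame_id: int, raw: Iterable[Tuple[Box, Sequence[float], float]]
    ) -> int:
        """Store one frame's (box, embedding, confidence) triples; return how many were kept."""
        kept = 0
        for box, embedding, confidence in raw:
            if confidence >= self.min_confidence:
                self.detections.append(
                    Detection(frame_id, box, list(embedding), confidence)
                )
                kept += 1
        return kept


store = ObjectStore()
n = store.add_frame(0, [((10, 20, 50, 80), [0.3, 0.7], 0.85),
                        ((0, 0, 5, 5), [0.1, 0.1], 0.02)])
print(n, len(store.detections))  # prints 1 1
```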

Next comes object-centric semantic memory, for which the paper uses VoxelMap. Specifically, the object masks are back-projected into real-world coordinates using the depth images and the camera poses collected during the scan. This yields a point cloud in which each point has an associated semantic vector from CLIP.
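The back-projection step can be sketched with the standard pinhole camera model: a pixel (u, v) with depth d maps to camera coordinates ((u - c_x)d/f_x, (v - c_y)d/f_y, d), which the camera pose then transforms into world coordinates. This is a minimal sketch, assuming known intrinsics (f_x, f_y, c_x, c_y), a 4×4 camera-to-world pose, and metric depth; the function names are hypothetical, and a real system would do this with array operations over whole images rather than pixel by pixel.

```python
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # row-major 4x4 camera-to-world transform


def back_project_pixel(
    u: float, v: float, depth: float,
    fx: float, fy: float, cx: float, cy: float,
    cam_to_world: Matrix4,
) -> Point3:
    """Lift pixel (u, v) with metric depth into world coordinates (pinhole model)."""
    # Camera frame: x right, y down, z forward along the optical axis.
    p_cam = ((u - cx) * depth / fx, (v - cy) * depth / fy, depth, 1.0)
    # Apply the rigid transform given by the camera pose (top 3 rows suffice).
    x, y, z = (sum(cam_to_world[r][k] * p_cam[k] for k in range(4)) for r in range(3))
    return (x, y, z)


def back_project_mask(
    mask_pixels: Sequence[Tuple[int, int]],
    depth_image: Sequence[Sequence[float]],  # indexed [row v][column u]
    intrinsics: Tuple[float, float, float, float],
    cam_to_world: Matrix4,
    embedding: Sequence[float],
) -> List[Tuple[Point3, List[float]]]:
    """Back-project every masked pixel and attach the object's CLIP embedding."""
    fx, fy, cx, cy = intrinsics
    cloud = []
    for u, v in mask_pixels:
        depth = depth_image[v][u]
        if depth <= 0.0:  # skip pixels with missing depth
            continue
        point = back_project_pixel(u, v, depth, fx, fy, cx, cy, cam_to_world)
        cloud.append((point, list(embedding)))
    return cloud


# Camera 1 m along world x, no rotation; 3x3 depth image with one invalid pixel.
pose = [[1, 0, 0, 1.0], [0, 1, 0, 0.0], [0, 0, 1, 0.0], [0, 0, 0, 1]]
depth = [[4.0, 4.0, 4.0], [4.0, 4.0, 4.0], [4.0, 0.0, 4.0]]
cloud = back_project_mask([(2, 1), (1, 2)], depth, (2.0, 2.0, 1.0, 1.0), pose, [0.5, 0.5])
print(cloud)  # prints [((3.0, 0.0, 4.0), [0.5, 0.5])]
```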

Next is querying the memory: given a language query, the CLIP text encoder converts it into a semantic vector. Because each voxel corresponds to a real location in the home, the location where the queried object is most likely to be found can be identified, similar to Figure 2(a).
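Querying a voxelized memory can be sketched as follows. This is a minimal illustration, assuming each voxel stores the mean of the CLIP embeddings of the points that fall inside it and that the query has already been encoded by the text encoder; the voxel size, the averaging rule, and the function names are assumptions rather than details of VoxelMap's implementation.

```python
import math
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]
VoxelKey = Tuple[int, int, int]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_voxel_map(
    points: Sequence[Tuple[Point3, Sequence[float]]], voxel_size: float
) -> Dict[VoxelKey, Tuple[Point3, List[float]]]:
    """Bin points into cubic voxels; keep each voxel's center and mean embedding."""
    sums: Dict[VoxelKey, List[float]] = {}
    counts: Dict[VoxelKey, int] = {}
    for (x, y, z), emb in points:
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc = sums.setdefault(key, [0.0] * len(emb))
        for i, value in enumerate(emb):
            acc[i] += value
        counts[key] = counts.get(key, 0) + 1
    voxel_map = {}
    for key, acc in sums.items():
        center = tuple((k + 0.5) * voxel_size for k in key)
        voxel_map[key] = (center, [value / counts[key] for value in acc])
    return voxel_map


def query_voxel_map(voxel_map, text_embedding: Sequence[float]) -> Point3:
    """Return the center of the voxel whose mean embedding best matches the query."""
    center, _ = max(voxel_map.values(), key=lambda ce: cosine(ce[1], text_embedding))
    return center


points = [((0.1, 0.2, 0.0), [1.0, 0.0]),
          ((0.3, 0.1, 0.2), [0.8, 0.2]),
          ((1.2, 1.3, 0.1), [0.0, 1.0])]
vmap = build_voxel_map(points, voxel_size=0.5)
print(len(vmap), query_voxel_map(vmap, [1.0, 0.0]))  # prints 2 (0.25, 0.25, 0.25)
```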

When needed, "A on B" is implemented as "A near B". To do this, the query for A selects the top 10 points and the query for B selects the top 50 points. The 10×50 pairwise Euclidean distances are then computed, and the point for A with the shortest (A, B) distance is selected.
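The "A near B" rule can be sketched directly from the description above, using its top-10 and top-50 candidate counts. This is a minimal sketch, assuming each query has already produced (point, similarity score) pairs from the semantic memory; `top_k` and `resolve_a_near_b` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

Point3 = Tuple[float, float, float]
Scored = Sequence[Tuple[Point3, float]]  # (point, similarity to the query)


def top_k(scored: Scored, k: int) -> List[Point3]:
    """The k points with the highest similarity scores."""
    ranked = sorted(scored, key=lambda ps: ps[1], reverse=True)
    return [point for point, _ in ranked[:k]]


def resolve_a_near_b(scores_a: Scored, scores_b: Scored,
                     k_a: int = 10, k_b: int = 50) -> Point3:
    """Pick the candidate for A that lies closest to any candidate for B."""
    cand_a, cand_b = top_k(scores_a, k_a), top_k(scores_b, k_b)
    # All k_a x k_b pairwise Euclidean distances; keep the A point of the closest pair.
    best_a, _ = min(
        ((a, b) for a in cand_a for b in cand_b),
        key=lambda pair: math.dist(pair[0], pair[1]),
    )
    return best_a


# Two cereal boxes in the home; the query asks for the one "on the table".
cereal = [((5.0, 5.0, 1.0), 0.95), ((0.0, 0.0, 1.0), 0.90)]
table = [((0.1, 0.0, 0.8), 0.80), ((0.0, 0.2, 0.8), 0.75)]
print(resolve_a_near_b(cereal, table))  # prints (0.0, 0.0, 1.0)
```

Note that the higher-scoring cereal box loses here: the spatial constraint from B, not A's own score, decides between the top candidates.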

Finally, the robot navigates to the object in the real world. Once the object's real-world 3D coordinates are obtained, they serve as the robot's navigation target and initiate the manipulation stage. The navigation module must bring the robot within arm's reach so that it can then manipulate the target object.
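Choosing a navigation goal within arm's reach can be illustrated with a simple geometric rule: stop on the line between the robot and the object, at a fixed distance from the object. This is a minimal sketch on the 2D floor plane, assuming a hypothetical reach of 0.6 m and ignoring obstacles; a real navigation module must also plan a collision-free path, and the article does not describe how OK-Robot chooses its goal pose.

```python
import math
from typing import Tuple

Point2 = Tuple[float, float]


def standoff_goal(robot_xy: Point2, object_xy: Point2, reach: float = 0.6) -> Point2:
    """Goal on the robot-object line, `reach` meters from the object (hypothetical rule)."""
    dx = robot_xy[0] - object_xy[0]
    dy = robot_xy[1] - object_xy[1]
    distance = math.hypot(dx, dy)
    if distance <= reach:
        return robot_xy  # already within arm's reach: no need to move
    scale = reach / distance
    return (object_xy[0] + dx * scale, object_xy[1] + dy * scale)


print(standoff_goal((3.0, 0.0), (0.0, 0.0), reach=1.0))  # prints (1.0, 0.0)
```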

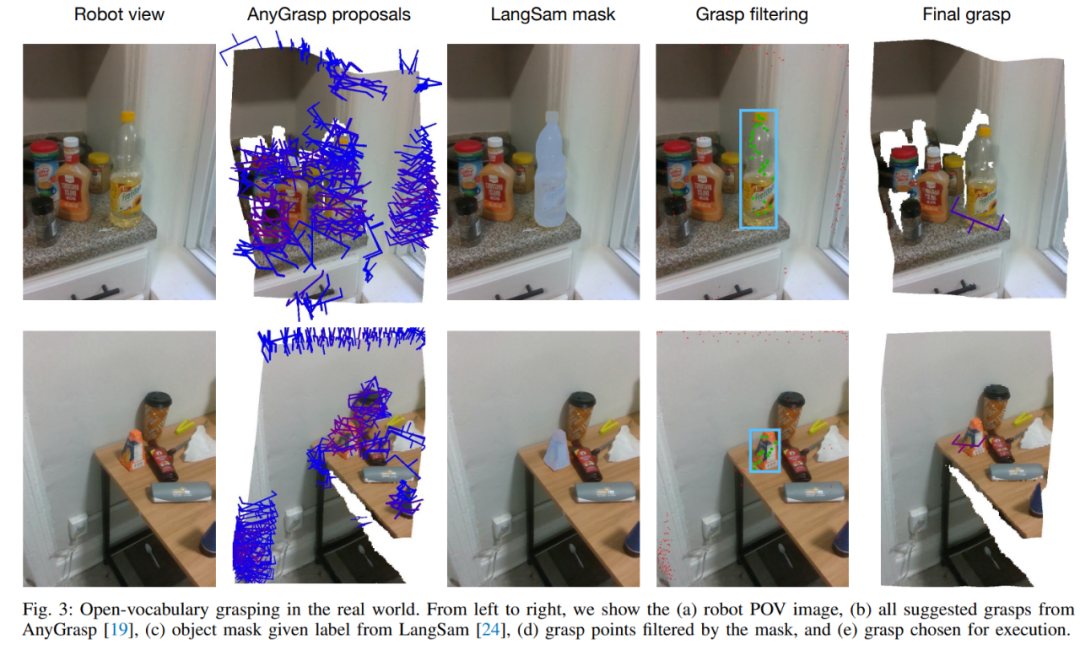

Robot grasping of real-world objects

Unlike open-vocabulary navigation, grasping requires physical interaction with arbitrary objects in the real world, which makes this part even more difficult. The paper therefore uses a pre-trained grasping model to generate real-world grasp poses and a vision-language model (VLM) to filter them according to the language query.

The grasp generation module used here is AnyGrasp, which, given a single RGB image and a point cloud of the scene, generates collision-free grasps for a parallel-jaw gripper.

AnyGrasp proposes the possible grasps in the scene (Figure 3, column 2), each with a grasp point, width, height, depth, and grasp score, where the score represents the model's uncalibrated confidence in that grasp.
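The proposal fields listed above map naturally onto a small record type. This sketch, assuming proposals arrive as plain values, ranks them by score and optionally drops grasps wider than the gripper can open; `GraspProposal`, `rank_proposals`, and `max_width` are hypothetical names, not AnyGrasp's API. Because the score is uncalibrated, it is used only to order proposals, never as a success probability.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class GraspProposal:
    point: Tuple[float, float, float]  # grasp center in the camera frame (meters)
    width: float   # gripper opening (meters)
    height: float
    depth: float
    score: float   # uncalibrated model confidence: fine for ranking, not a probability


def rank_proposals(
    proposals: Sequence[GraspProposal], max_width: Optional[float] = None
) -> List[GraspProposal]:
    """Highest-scoring first, optionally skipping grasps wider than the gripper opens."""
    feasible = [p for p in proposals if max_width is None or p.width <= max_width]
    return sorted(feasible, key=lambda p: p.score, reverse=True)


proposals = [
    GraspProposal((0.0, 0.0, 0.5), 0.05, 0.02, 0.02, 0.40),
    GraspProposal((0.1, 0.0, 0.5), 0.12, 0.02, 0.02, 0.90),
    GraspProposal((0.0, 0.1, 0.5), 0.06, 0.02, 0.02, 0.70),
]
print([p.score for p in rank_proposals(proposals, max_width=0.10)])  # prints [0.7, 0.4]
```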

Filtering grasps with language queries: the grasp proposals from AnyGrasp are filtered using LangSam. All proposed grasp points are projected onto the image, and only those that fall within the object mask are kept (Figure 3, column 4).
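The filtering step can be sketched as projecting each grasp point through a pinhole camera model and keeping it only if it lands on a mask pixel. This is a minimal sketch, assuming grasp points are already expressed in the camera frame, known intrinsics, and a boolean mask such as a text-prompted segmenter like LangSam would produce; the function names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Point3 = Tuple[float, float, float]


def project_to_pixel(p: Point3, fx: float, fy: float,
                     cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection of a camera-frame point; None if it is behind the camera."""
    x, y, z = p
    if z <= 0.0:
        return None
    return (round(fx * x / z + cx), round(fy * y / z + cy))


def filter_grasps_by_mask(
    grasps: Sequence[Tuple[Point3, float]],  # (grasp point, score)
    mask: Sequence[Sequence[bool]],          # indexed [row v][column u]
    intrinsics: Tuple[float, float, float, float],
) -> List[Tuple[Point3, float]]:
    """Keep only grasps whose projected point falls inside the object mask."""
    fx, fy, cx, cy = intrinsics
    height = len(mask)
    width = len(mask[0]) if height else 0
    kept = []
    for point, score in grasps:
        pixel = project_to_pixel(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if 0 <= u < width and 0 <= v < height and mask[v][u]:
            kept.append((point, score))
    return kept


mask = [[False, False, False],
        [False, True, False],
        [False, False, False]]  # object occupies only the center pixel
grasps = [((0.0, 0.0, 1.0), 0.8), ((1.0, 0.0, 1.0), 0.9), ((0.0, 0.0, -1.0), 0.5)]
print(filter_grasps_by_mask(grasps, mask, (1.0, 1.0, 1.0, 1.0)))
# prints [((0.0, 0.0, 1.0), 0.8)]
```

The highest-scoring proposal is discarded because it projects outside the mask: the language filter decides which object to grasp, and the grasp score only ranks what survives.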

Grasp execution. Once the optimal grasp is determined (Figure 3, column 5), a simple pre-grasp method can be used to grasp the target object.
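A simple pre-grasp strategy can be sketched as a short list of waypoints: hover a little behind the grasp along the approach direction, move in, close the gripper, then lift. This is an illustrative sketch, assuming each grasp carries a position and an approach vector in the robot's frame; the 0.1 m offsets, the waypoint format, and all names are hypothetical, not OK-Robot's implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Grasp:
    position: Vec3  # grasp point in the robot frame (meters)
    approach: Vec3  # direction the gripper travels toward the object
    score: float


def plan_grasp(grasps: Sequence[Grasp], pre_offset: float = 0.1,
               lift: float = 0.1) -> List[Tuple[str, Optional[Vec3]]]:
    """Waypoints for the best-scoring grasp: pre-grasp, grasp, close, lift."""
    best = max(grasps, key=lambda g: g.score)
    norm = math.sqrt(sum(c * c for c in best.approach))
    unit = tuple(c / norm for c in best.approach)
    # Back off along the approach direction so the final motion is a straight push-in.
    pre_grasp = tuple(p - pre_offset * u for p, u in zip(best.position, unit))
    x, y, z = best.position
    return [
        ("move", pre_grasp),
        ("move", best.position),
        ("close_gripper", None),
        ("move", (x, y, z + lift)),
    ]


plan = plan_grasp([Grasp((0.5, 0.0, 0.3), (0.0, 0.0, -1.0), 0.4),
                   Grasp((1.0, 0.0, 0.2), (1.0, 0.0, 0.0), 0.9)])
for step in plan:
    print(step)
```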

Heuristic module for releasing or placing objects

After grasping the object, the next question is where to place it. Unlike HomeRobot's baseline implementation, which assumes the drop location is a flat surface, the paper extends the heuristic to also cover concave receptacles such as sinks, bins, boxes, and bags.
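One way to handle flat surfaces and concave receptacles with a single rule is to release the object above the receptacle's center and highest point, so that it lands on a table or falls into a bin, sink, box, or bag alike. This is a hypothetical sketch, not the paper's heuristic: it assumes the receptacle has already been segmented into a point cloud in the robot's frame, and the median-center rule and 0.1 m clearance are illustrative choices.

```python
from statistics import median
from typing import Sequence, Tuple

Point3 = Tuple[float, float, float]


def drop_point(receptacle: Sequence[Point3], clearance: float = 0.1) -> Point3:
    """Release point above the receptacle: median x/y, just over its highest point."""
    if not receptacle:
        raise ValueError("no receptacle points")
    xs = [p[0] for p in receptacle]
    ys = [p[1] for p in receptacle]
    top = max(p[2] for p in receptacle)  # table surface, or the rim of a bin or sink
    return (median(xs), median(ys), top + clearance)


# A box: floor points at z = 0.0 and rim points at z = 0.3.
box = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.3), (0.0, 1.0, 0.3), (1.0, 1.0, 0.0), (0.5, 0.5, 0.0)]
print(drop_point(box))
```

Using the median rather than the mean keeps a few stray points (for example, part of a wall segmented along with a bin) from pulling the release point off-center.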

With navigation, grasping, and placing all in place, combining them is straightforward, and the method can be applied directly to any new home. In a new home, a room can be scanned in under a minute, and processing the scan into a VoxelMap takes less than five minutes. Once that is done, the robot can be placed at the chosen site and begin operating immediately. From arriving in a new environment to operating autonomously in it, the system takes less than 10 minutes on average to complete its first pick-and-place task.
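Because the modules are independent, the combination can be sketched as a thin orchestration layer that calls them in order and stops at the first failure. This is a minimal sketch, assuming each module is exposed as a plain callable; `PickAndPlacePipeline` and its four hooks are hypothetical, and the stub lambdas in the usage only log calls instead of moving a robot.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class PickAndPlacePipeline:
    locate: Callable[[str], Point3]        # semantic-memory query (e.g. a VoxelMap lookup)
    navigate_to: Callable[[Point3], bool]  # drive until the target is within arm's reach
    pick: Callable[[str], bool]            # grasp proposals + language filtering + execution
    place: Callable[[str], bool]           # dropping heuristic

    def run(self, obj_query: str, destination_query: str) -> bool:
        """Pick the object matching obj_query and drop it at destination_query."""
        if not self.navigate_to(self.locate(obj_query)):
            return False
        if not self.pick(obj_query):
            return False
        if not self.navigate_to(self.locate(destination_query)):
            return False
        return self.place(destination_query)


# Usage with stubs that record each call instead of driving a robot.
log = []
places = {"cornflakes": (1.0, 2.0, 0.5), "bedside table": (4.0, 1.0, 0.6)}
pipeline = PickAndPlacePipeline(
    locate=places.__getitem__,
    navigate_to=lambda p: log.append(("navigate", p)) is None,
    pick=lambda q: log.append(("pick", q)) is None,
    place=lambda q: log.append(("place", q)) is None,
)
ok = pipeline.run("cornflakes", "bedside table")
print(ok, log)
```

Keeping each stage behind a plain callable is what makes swapping a component (a different semantic memory or grasp model, as in the comparisons below) a one-line change.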

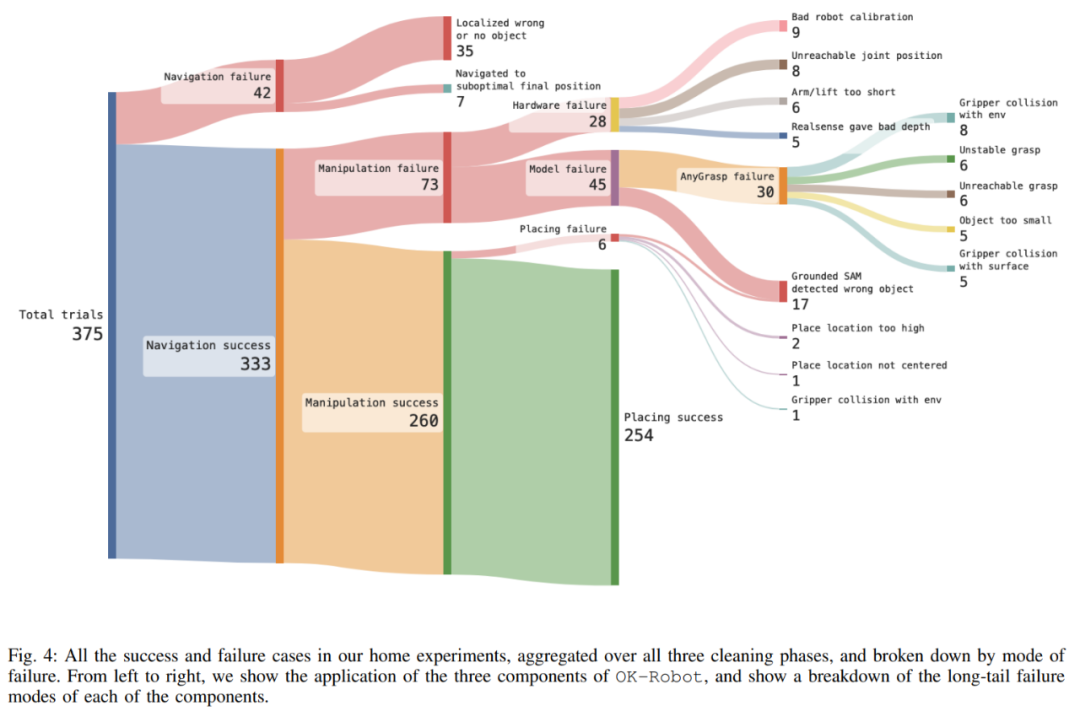

Across the 10 home experiments, OK-Robot achieved a 58.5% success rate on pick-and-place tasks.

The study also analyzed OK-Robot in depth to better understand its failure modes. It found that manipulation failure was the main cause of failure, but on closer inspection the failures turned out to be long-tailed. As shown in Figure 4, the three leading causes were failing to retrieve the correct object's location from semantic memory as the navigation target (9.3%), grasp poses from the manipulation module that were hard to execute (8.0%), and hardware issues (7.5%).

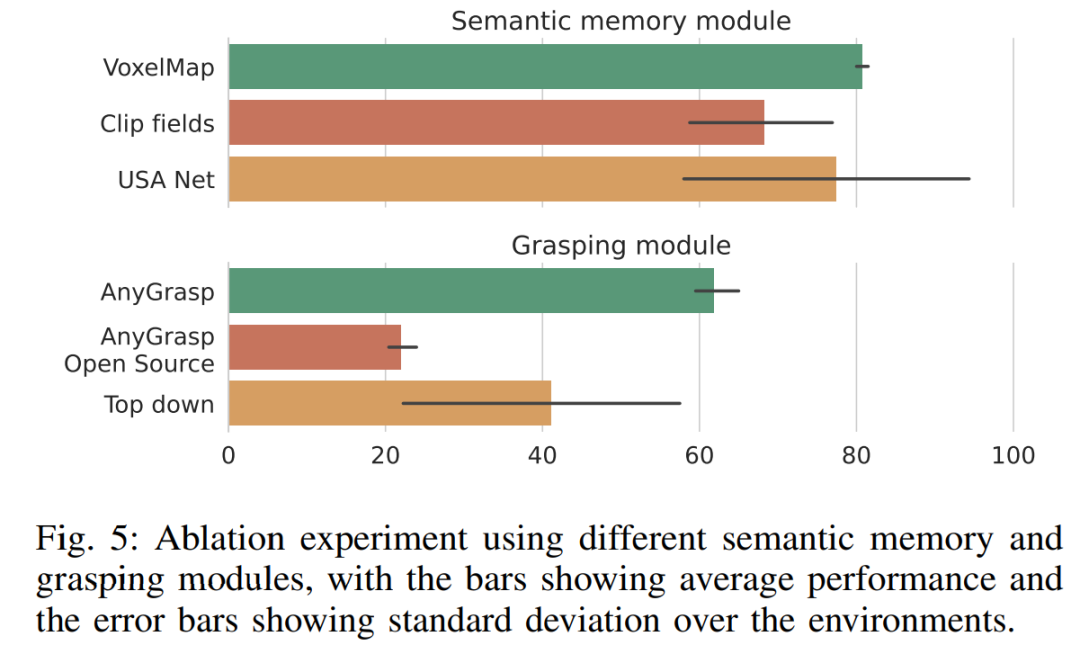

As Figure 5 shows, the VoxelMap used in OK-Robot performs slightly better than the other semantic memory modules. For grasping, AnyGrasp clearly outperforms the other grasping methods, beating the best alternative (top-down grasping) by almost 50% on a relative scale. However, the fact that HomeRobot's heuristic top-down grasping beat both the open-source AnyGrasp baseline and Contact-GraspNet shows that building a truly general-purpose grasping model remains difficult.

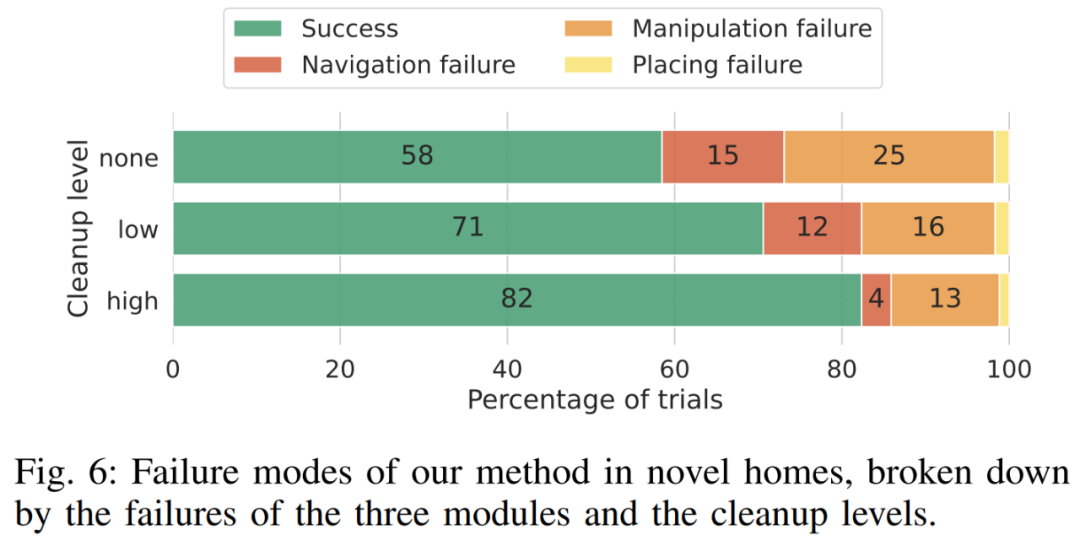

Figure 6 shows a complete breakdown of OK-Robot's failures at each stage. When the researchers cleaned up the environment and removed ambiguous objects, navigation accuracy improved, and the total error rate dropped from 15% to 12% and finally to 4%. Likewise, accuracy improved when the researchers decluttered the environment, with error rates falling from 25% to 16% and finally to 13%.

For more information, please refer to the original paper.
