Paper: Lift-Attend-Splat: Bird's-eye-view camera-lidar fusion using transformers

Paper link: https://arxiv.org/pdf/2312.14919.pdf

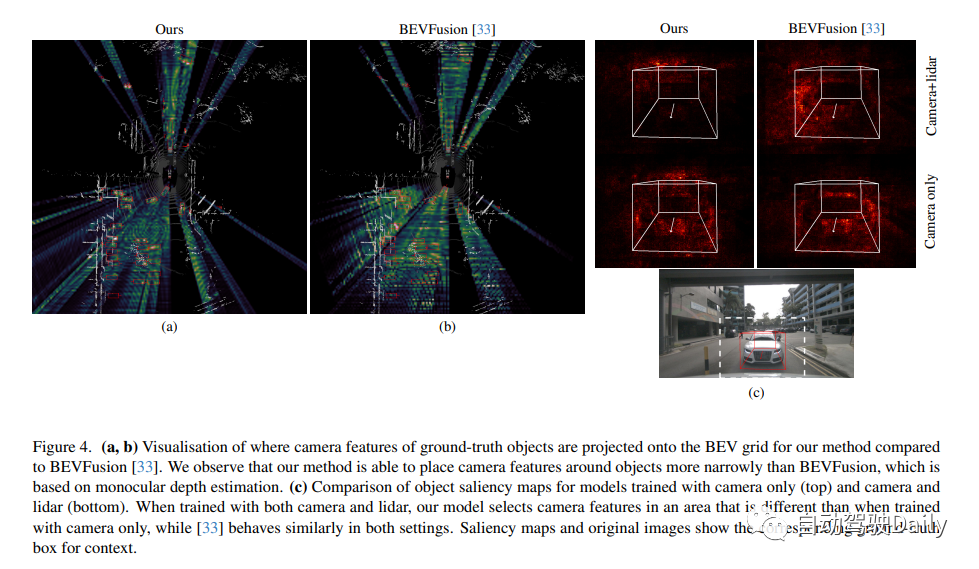

For safety-critical applications such as autonomous driving, combining complementary sensor modalities is crucial. Recent camera-lidar fusion methods for autonomous driving rely on monocular depth estimation to improve perception, which is a much harder task than using the depth information that lidar provides directly. This study finds that these methods do not exploit depth as expected, and shows that naively improving depth estimation does not improve object detection performance. Surprisingly, removing depth estimation entirely does not degrade object detection performance either, which suggests that reliance on monocular depth may be an unnecessary architectural bottleneck in camera-lidar fusion. The study proposes a new fusion method that bypasses monocular depth estimation completely and instead uses a simple attention mechanism to select and fuse camera and lidar features in a BEV grid. The results show that the proposed model adjusts its use of camera features according to the availability of lidar features, and that it achieves better 3D detection performance on the nuScenes dataset than baseline models based on monocular depth estimation.

This study introduces a new camera-lidar fusion method called "Lift-Attend-Splat". The method avoids monocular depth estimation and instead uses a simple transformer to select and fuse camera and lidar features in BEV. Experiments show that, compared with methods based on monocular depth estimation, it makes better use of the cameras and improves object detection performance. The contributions of this study are as follows:

First, camera-lidar fusion methods based on the Lift-Splat paradigm do not utilize depth as expected. In particular, we show that they perform equally well or better if monocular depth prediction is removed completely.

Second, we propose a camera-lidar fusion method that completely bypasses monocular depth estimation and instead uses a simple transformer to fuse camera and lidar features in bird's-eye view. Because of the large number of camera and lidar features and the quadratic cost of attention, a transformer architecture cannot be applied naively to the camera-lidar fusion problem. When projecting camera features into BEV, however, the geometry of the problem can be used to greatly restrict the scope of attention, since camera features should only contribute to positions along their corresponding rays. We apply this idea to camera-lidar fusion and introduce a simple fusion method that uses cross-attention between columns in the camera plane and polar rays in the lidar BEV grid. Instead of predicting monocular depth, cross-attention learns which camera features are most salient given the context provided by the lidar features along their rays.
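The column-to-ray attention described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: it assumes a single attention head, lidar ray features acting as queries and camera column features acting as keys and values, and all names (`column_ray_cross_attention`, `attention_pairs`, the weight matrices) are hypothetical. The second function simply counts query-key pairs to show why restricting attention to matching column-ray pairs helps against the quadratic cost.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    shifted = x - x.max(axis=axis, keepdims=True)
    e = np.exp(shifted)
    return e / e.sum(axis=axis, keepdims=True)

def column_ray_cross_attention(ray_feats, col_feats, w_q, w_k, w_v):
    """Single-head cross-attention for one (camera column, lidar ray) pair.

    ray_feats: (R, C) lidar BEV features at R range bins along the ray (queries, assumed).
    col_feats: (H, C) camera features at the H image rows of the matching column.
    Returns the (R, D) camera information assigned to each range bin
    and the (R, H) attention weights.
    """
    q = ray_feats @ w_q                        # (R, D)
    k = col_feats @ w_k                        # (H, D)
    v = col_feats @ w_v                        # (H, D)
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (R, H)
    weights = softmax(scores, axis=-1)         # each range bin attends over image rows
    return weights @ v, weights

def attention_pairs(num_columns, ray_bins, image_rows):
    """Count query-key pairs for global attention vs. per-column attention."""
    global_pairs = (num_columns * ray_bins) * (num_columns * image_rows)
    restricted_pairs = num_columns * ray_bins * image_rows
    return global_pairs, restricted_pairs

# Toy sizes, chosen only for illustration.
rng = np.random.default_rng(0)
R, H, C, D = 6, 4, 8, 8
ray_feats = rng.normal(size=(R, C))
col_feats = rng.normal(size=(H, C))
w_q, w_k, w_v = (rng.normal(size=(C, D)) for _ in range(3))

fused, weights = column_ray_cross_attention(ray_feats, col_feats, w_q, w_k, w_v)
print(fused.shape, weights.shape)
print(attention_pairs(num_columns=100, ray_bins=50, image_rows=32))
```

In this toy count, restricting each ray to its own column reduces the number of query-key pairs by a factor equal to the number of columns.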

The overall architecture of our model is similar to that of methods based on the Lift-Splat paradigm, except for the way camera features are projected into BEV. As shown in the figure below, it consists of camera and lidar backbones that independently generate features for each modality, a projection and fusion module that embeds the camera features into BEV and fuses them with the lidar features, and a detection head. For object detection, the final output of the model is the set of attributes of the objects in the scene, including position, dimensions, orientation, velocity and class, represented as 3D bounding boxes.
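To make the detection output described above concrete, here is a small sketch of one way such a 3D bounding box could be represented. The class name `Box3D`, its field names and the yaw-only orientation are assumptions for illustration, not the data structure used in the paper or in nuScenes tooling.

```python
import math
from dataclasses import dataclass

@dataclass
class Box3D:
    """Hypothetical container for one detected object."""
    center: tuple    # (x, y, z) position in metres
    size: tuple      # (length, width, height) in metres
    yaw: float       # heading around the vertical axis, in radians
    velocity: tuple  # (vx, vy) in metres per second
    label: str       # predicted class name
    score: float     # detection confidence

    def bev_corners(self):
        """Return the four corners of the box footprint in the BEV plane."""
        cx, cy, _ = self.center
        length, width, _ = self.size
        c, s = math.cos(self.yaw), math.sin(self.yaw)
        offsets = [
            (length / 2, width / 2),
            (length / 2, -width / 2),
            (-length / 2, -width / 2),
            (-length / 2, width / 2),
        ]
        # Rotate each offset by yaw, then translate to the box centre.
        return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in offsets]

car = Box3D(center=(10.0, 5.0, 1.0), size=(4.0, 2.0, 1.5), yaw=0.0,
            velocity=(3.0, 0.0), label="car", score=0.9)
print(car.bev_corners())
```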

Figure: the Lift-Attend-Splat camera-lidar fusion architecture. (Left) Overall architecture: features from the camera and lidar backbones are fused together before being passed to the detection head. (Inset) The geometry of our 3D projection: the "lift" step embeds the lidar BEV features into the projected horizon by using bilinear sampling to lift the lidar features along the z-direction. The "splat" step corresponds to the inverse transformation: it uses bilinear sampling to project the features from the projected horizon back onto the BEV grid, again along the z-direction. (Right) Details of the projection module.
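The bilinear sampling used by the lift step in the caption above can be sketched as follows. This is a simplified illustration under stated assumptions: coordinates are in grid-index units, points outside the grid are clamped to the border, and the function names `bilinear_sample` and `lift_along_ray` are hypothetical. The splat step would apply the same kind of interpolation in the opposite direction, from ray-aligned features back to BEV cells.

```python
import numpy as np

def bilinear_sample(grid, xs, ys):
    """Bilinearly interpolate a (H, W, C) grid at fractional index coordinates.

    xs, ys: arrays of shape (N,) in grid-index units.
    Points outside the grid are clamped to the border (an assumption of this sketch).
    Returns an (N, C) array of sampled features.
    """
    h, w = grid.shape[:2]
    xs = np.clip(xs, 0, w - 1)
    ys = np.clip(ys, 0, h - 1)
    x0 = np.floor(xs).astype(int)
    y0 = np.floor(ys).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (xs - x0)[:, None]
    wy = (ys - y0)[:, None]
    top = grid[y0, x0] * (1 - wx) + grid[y0, x1] * wx
    bottom = grid[y1, x0] * (1 - wx) + grid[y1, x1] * wx
    return top * (1 - wy) + bottom * wy

def lift_along_ray(bev, origin_xy, azimuth, ranges):
    """Sample BEV features at points along one polar ray leaving the sensor."""
    xs = origin_xy[0] + ranges * np.cos(azimuth)
    ys = origin_xy[1] + ranges * np.sin(azimuth)
    return bilinear_sample(bev, xs, ys)

# Toy BEV grid whose single feature is x + 10 * y, so interpolation is easy to check.
yy, xx = np.meshgrid(np.arange(5), np.arange(5), indexing="ij")
bev = (xx + 10 * yy).astype(float)[..., None]      # shape (5, 5, 1)

ray = lift_along_ray(bev, origin_xy=(1.0, 1.0), azimuth=0.0,
                     ranges=np.array([0.0, 0.5, 1.5]))
print(ray[:, 0])
```

Because the toy feature is linear in x and y, the interpolated values along the ray match the underlying function exactly, which makes the sketch easy to sanity-check.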
