Editor | Radish Skin

For centuries, researchers have looked for ways to connect different fields of knowledge. With the advent of artificial intelligence, we now have the opportunity to explore relationships across related fields (e.g., mechanics and biology) or between disparate ones (e.g., failure mechanics and art).

To this end, researchers at MIT's Laboratory for Atomistic and Molecular Mechanics (LAMM) used a fine-tuned large language model (LLM) to capture a subset of relevant knowledge about multi-scale material failure.

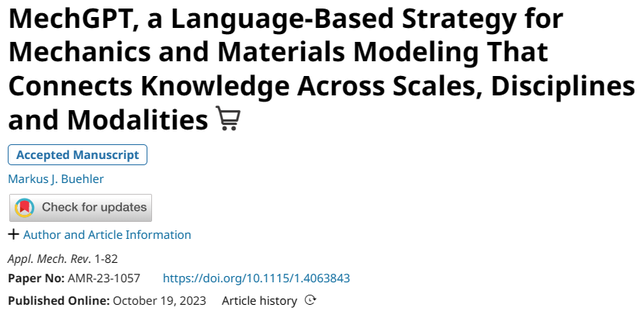

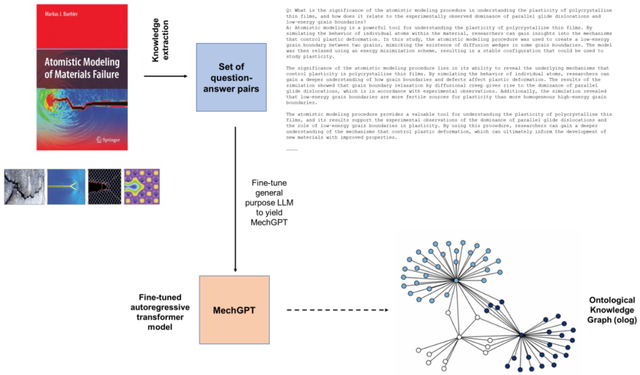

The method first uses a general-purpose LLM to extract question-answer pairs from the original sources, and then fine-tunes an LLM on those pairs. The resulting fine-tuned MechGPT model was used in a series of computational experiments to explore its capabilities in knowledge retrieval, various language tasks, hypothesis generation, and connecting knowledge across different domains.

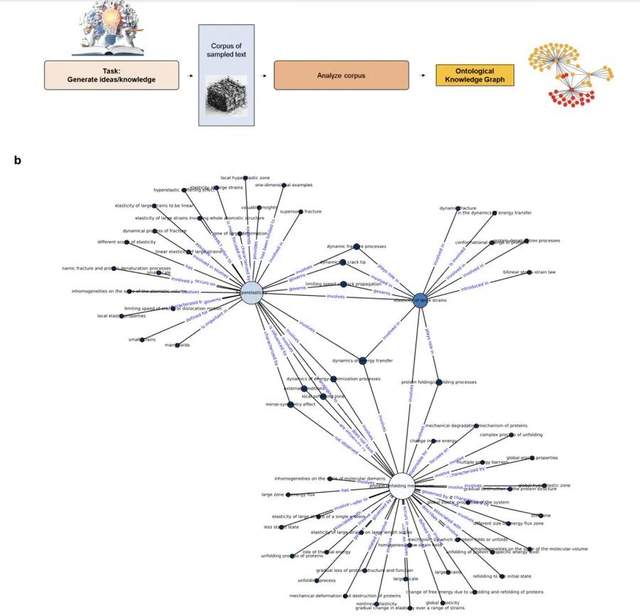

Although the model has some ability to recall knowledge from its training, the researchers found that LLMs are particularly useful for extracting structural insights through ontological knowledge graphs. These interpretable graph structures provide explanatory insights, frameworks for new research questions, and visual representations of knowledge that can also be used for retrieval-augmented generation.

The study, titled "MechGPT, a Language-Based Strategy for Mechanics and Materials Modeling That Connects Knowledge Across Scales, Disciplines and Modalities", was published in "Applied Mechanics Reviews" on October 19, 2023.

Modeling physical, biological, and metaphysical concepts has been a focus of researchers in many disciplines. Early scientists and engineers were often deeply rooted in fields ranging from science to philosophy, physics to mathematics, and the arts (e.g., Galileo Galilei, Leonardo da Vinci, Johann Wolfgang von Goethe), but as science developed, specialization became dominant. Part of the reason is that a large amount of knowledge has accumulated across fields, and mastering it demands a great deal of effort in research and practice.

The emergence of large language models (LLMs) now challenges this paradigm of scientific research. LLMs not only bring new modeling strategies based on artificial intelligence and machine learning, but also provide opportunities to connect knowledge, ideas, and concepts across domains. These models can complement traditional multiscale modeling for the analysis and design of layered materials and many other applications in mechanics.

Figure: Schematic workflow. (Source: Paper)

Here, building on the recently proposed use of LLMs in mechanics and materials research and development, LAMM researchers started from a general-purpose LLM, the Llama-2-based OpenOrca-Platypus2-13B, and developed a fine-tuned MechGPT model that focuses on modeling material failure, multi-scale modeling, and related disciplines.

The OpenOrca-Platypus2-13B model was chosen because it performs well on key tasks such as reasoning, logic, math/science, and other disciplines, providing rich, applicable subject knowledge and general concepts while remaining computationally efficient.

LLMs have powerful applications in science. In addition to analyzing large amounts of data and complex systems, LLMs are used in mechanics and materials science to simulate and predict the behavior of materials under different conditions, such as mechanical stress, temperature, and chemical interactions. As shown in earlier work, by training LLMs on large datasets from molecular dynamics simulations, researchers can develop models capable of predicting material behavior in new situations, thus accelerating the discovery process and reducing the need for experimental testing.

Such models are also very effective for analyzing scientific texts such as books and publications, allowing researchers to quickly extract key information and insights from large amounts of data. This can help scientists identify trends, patterns, and relationships between different concepts and ideas, and generate new hypotheses and ideas for further research.

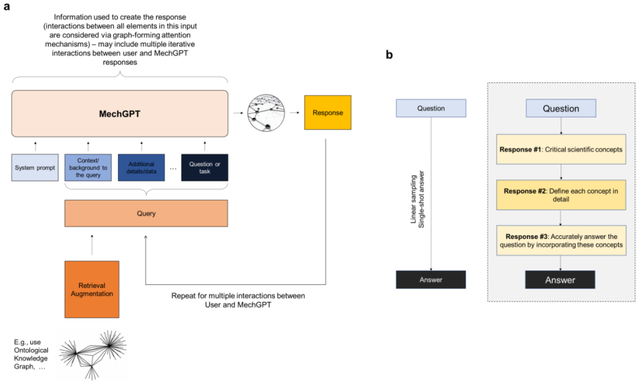

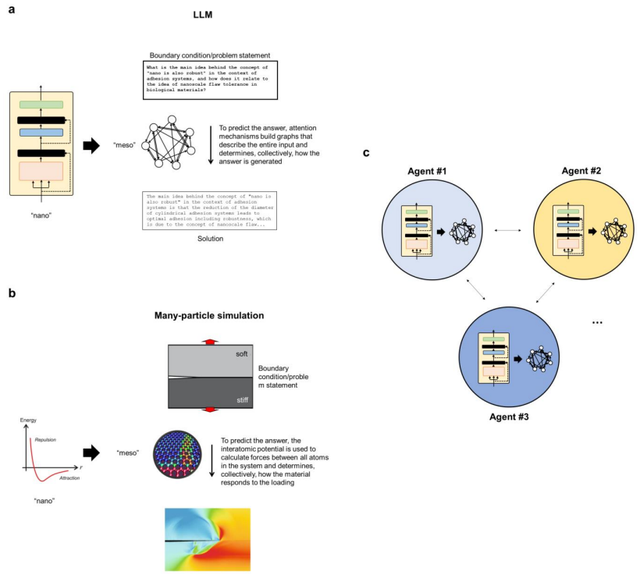

Figure: Overview of the autoregressive decoder transformer architecture used to build MechGPT. (Source: paper)
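What makes a decoder transformer autoregressive is its causal mask: each token position may attend only to itself and to earlier positions. The following is a minimal sketch of that idea in plain Python, not MechGPT's actual implementation. It assumes a single attention head, uses the raw input vectors as queries, keys, and values (real models apply learned projections and run on tensor libraries), and all function names are illustrative.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """Row i holds the attention of position i over positions 0..i.

    Positions after i receive weight 0, which is the causal mask.
    """
    d = len(queries[0])
    weights = []
    for i, q in enumerate(queries):
        # Scaled dot-product scores against keys at positions <= i only.
        scores = [
            sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(d)
            for j in range(i + 1)
        ]
        weights.append(softmax(scores) + [0.0] * (len(keys) - i - 1))
    return weights


def attend(weights: List[Vector], values: List[Vector]) -> List[Vector]:
    """Weighted sum of value vectors for each position."""
    dim = len(values[0])
    return [
        [sum(w * v[k] for w, v in zip(row, values)) for k in range(dim)]
        for row in weights
    ]


# Toy sequence of three 2-dimensional token vectors (made-up numbers).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights = causal_attention_weights(tokens, tokens)
outputs = attend(weights, tokens)
```

Because the first position can see only itself, its attention row is `[1.0, 0.0, 0.0]` and its output equals its own value vector. During generation, the same constraint lets the model predict each next token from the tokens produced so far.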

Here, the team focuses on the latter and explores MechGPT, a generative artificial intelligence tool from the Transformer-based LLM family that was trained specifically on material failure and related multi-scale methods, in order to evaluate the potential of these strategies.

The strategy proposed in this study has several steps. The first is a distillation step, in which the researchers use an LLM to generate question-answer pairs from chunks of text extracted from raw data (such as one or more PDF files). In the second step, this data is used to fine-tune the model. The study trained an initial MechGPT model in this way and demonstrated its usefulness in knowledge retrieval, general language tasks, and hypothesis generation in the field of atomistic modeling of material failure.
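The distillation step can be sketched roughly as follows. This is an illustration under stated assumptions rather than the authors' code: `ask_llm` is a hypothetical stand-in for a call to a general-purpose LLM (here replaced by the canned `fake_llm`), and the chunk size, prompt wording, and training-record format are all invented for the example.

```python
from typing import Callable, Dict, List


def chunk_text(text: str, max_words: int = 200) -> List[str]:
    """Split raw text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def distill_qa_pairs(chunks: List[str], ask_llm: Callable[[str], str]) -> List[Dict[str, str]]:
    """For each chunk, ask the LLM for a question, then for an answer grounded in the chunk."""
    pairs = []
    for chunk in chunks:
        question = ask_llm(f"Write one question that the following text answers:\n{chunk}")
        answer = ask_llm(
            f"Answer the question using only this text.\nText: {chunk}\nQuestion: {question}"
        )
        pairs.append({"question": question, "answer": answer})
    return pairs


def to_training_record(pair: Dict[str, str]) -> str:
    """Format one question-answer pair as a single fine-tuning example (assumed template)."""
    return f"### Question:\n{pair['question']}\n\n### Answer:\n{pair['answer']}"


def fake_llm(prompt: str) -> str:
    """Hypothetical placeholder: returns canned text instead of calling a model."""
    if prompt.startswith("Write one question"):
        return "What does the text describe?"
    return "It describes material failure."


chunks = chunk_text("cracks propagate through brittle solids under load", max_words=3)
pairs = distill_qa_pairs(chunks, fake_llm)
records = [to_training_record(p) for p in pairs]
```

The resulting `records` list is the kind of dataset that the second step would pass to a fine-tuning routine. In practice, `fake_llm` would be swapped for a real model call, and low-quality pairs would likely need filtering.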

Figure: Overview of the modeling strategy used. (Source: Paper)

The paper introduces an overarching modeling strategy in which the researchers use specific language modeling strategies to generate datasets that extract knowledge from sources, and use the resulting novel mechanics and materials dataset to train the model. The researchers analyzed and discussed three versions of MechGPT, with parameter counts ranging from 13 billion to 70 billion and context lengths exceeding 10,000 tokens. After some general remarks, they applied the model and tested its performance in a variety of settings, including using LLMs for ontology graph generation, developing insights on complex topics across disciplines, and agent modeling, in which multiple LLMs interact collaboratively or adversarially to generate deeper insights into a topic area or an answer to a question.

Figure: Developing ontological knowledge graph representations to relate hyperelasticity in the context of supersonic fracture and protein unfolding mechanisms. (Source: paper)
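To make the idea of an ontological knowledge graph concrete, here is a small sketch that stores (concept, relation, concept) triples and searches for a chain of concepts linking two topics. The triples are illustrative placeholders, not taken from the paper's graphs, and the breadth-first search is only one simple way to query such a structure. In the study, the triples would come from an LLM rather than being written by hand.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]  # (source concept, relation, target concept)
Graph = Dict[str, List[Tuple[str, str]]]


def build_graph(triples: List[Triple]) -> Graph:
    """Build an adjacency list; edges are stored in both directions for path search."""
    graph: Graph = {}
    for src, rel, dst in triples:
        graph.setdefault(src, []).append((rel, dst))
        graph.setdefault(dst, []).append((rel, src))
    return graph


def shortest_path(graph: Graph, start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search; returns the concepts from start to goal, or None."""
    if start not in graph or goal not in graph:
        return None
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for _relation, neighbor in graph[path[-1]]:
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(path + [neighbor])
    return None


# Illustrative triples only (assumed for this example).
triples = [
    ("supersonic fracture", "is influenced by", "hyperelasticity"),
    ("hyperelasticity", "describes", "nonlinear stiffening"),
    ("protein unfolding", "exhibits", "nonlinear stiffening"),
]
graph = build_graph(triples)
path = shortest_path(graph, "supersonic fracture", "protein unfolding")
```

With these placeholder triples, `path` runs from "supersonic fracture" through "hyperelasticity" and "nonlinear stiffening" to "protein unfolding". A chain of this kind is easy to inspect, and it could also be supplied as context for retrieval-augmented generation.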

The team also provides a conceptual comparison between language models and multi-particle systems at different levels of abstraction, and explains how the new framework can be viewed as a method for extracting the universal relationships that govern complex systems.

Figure: Conceptual analogy between LLMs and multi-particle simulation. (Source: paper)

Overall, the work presented in this study contributes to the development of more powerful and general artificial intelligence models that can help advance scientific research and solve complex problems, while its focus on specific application areas allows for an in-depth evaluation of the model's performance. Like all models, they must be carefully validated, and their usefulness depends on the context of the questions asked, their strengths and weaknesses, and the broader set of tools that help scientists advance science and engineering.

Moreover, as instruments of scientific inquiry, artificial intelligence tools must be viewed as part of a larger collection of tools for understanding, modeling, and designing the world around us. As these tools develop rapidly, their application in scientific contexts is only beginning to bring new opportunities.

Paper link:

https://arxiv.org/ftp/arxiv/papers/2310/2310.10445.pdf

Related reports:

https://twitter.com/llama_index/status/1723379654550245719