Based on LLaMA with renamed tensors: Kai-Fu Lee's large model sparks controversy, and here is the official response

Some time ago, the open-source large-model field welcomed a new model, "Yi", whose context window exceeds 200K and which can process about 400,000 Chinese characters at a time.

Kai-Fu Lee, Chairman and CEO of Sinovation Ventures, founded the large-model company 01.AI, which built this model. It comes in two versions, Yi-6B and Yi-34B.

According to the Hugging Face English open-source community leaderboard and the C-Eval Chinese evaluation leaderboard, Yi-34B achieved state-of-the-art results on a number of metrics at launch, making it a "double champion" among global open-source models and beating open-source competitors such as LLaMA2 and Falcon.

Yi-34B also became the only Chinese model at that time to top the Hugging Face global open-source model rankings, and was called "the world's strongest open source model."

After its release, the model attracted the attention of many researchers and developers in China and abroad.

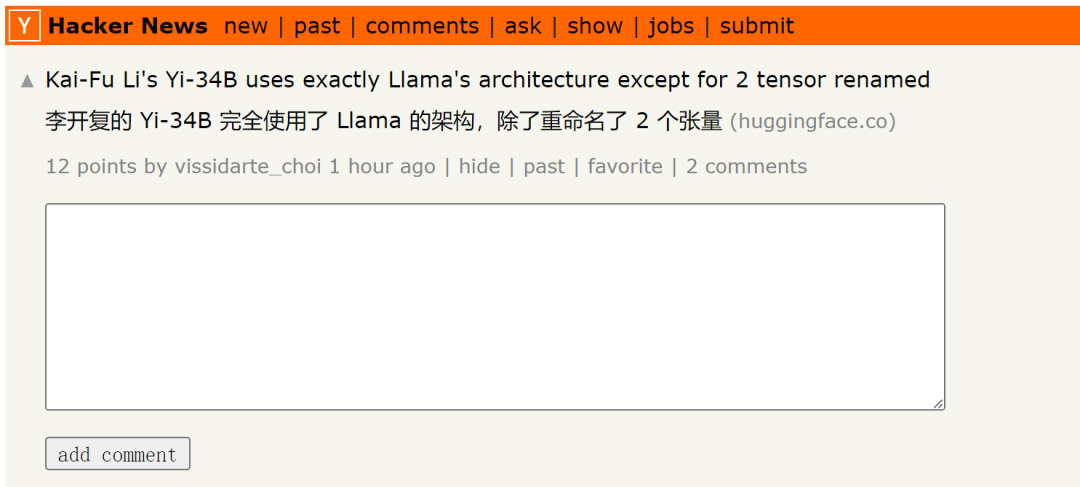

But recently, some researchers found that the Yi-34B model essentially adopts the LLaMA architecture, with only two tensors renamed.

Original post: https://news.ycombinator.com/item?id=38258015

The post also mentioned:

The code of Yi-34B is actually a refactoring of the LLaMA code, but it does not seem to make any substantial changes. The model is clearly an edit of the original Apache 2.0 LLaMA file, yet it makes no mention of LLaMA:

Yi vs. LLaMA code comparison: https://www.diffchecker.com/bJTqkvmQ/

In addition, these code changes were not submitted to the transformers project through a pull request. Instead, they are attached as external code, which may pose security risks or be unsupported by the framework. The Hugging Face leaderboard won't even benchmark this model with its context window of up to 200K, because the leaderboard has no policy for custom code.

They claim this is a 32K model, but it is configured as a 4K model, there is no RoPE scaling configuration, and there is no explanation of how to scale it (note: 01.AI previously stated that the model itself was trained on 4K sequences but can be extended to 32K at inference time). Currently, there is zero information about its fine-tuning data. They also did not provide instructions for reproducing their benchmarks, including the suspiciously high MMLU scores.

Anyone who has worked in artificial intelligence for a while will not turn a blind eye to this. Is this false advertising? A license violation? Actual benchmark cheating? Who cares? We can move on to the next paper, or in this case, take all the venture capital money. Yi is at least above the norm, because it is a base model and its performance is genuinely good.
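To make the configuration concern in the post concrete, here is a minimal sketch of how one might check a LLaMA-style `config.json` for its configured context length and for a RoPE scaling entry. The keys `max_position_embeddings` and `rope_scaling` are the ones used by the LLaMA configuration in Hugging Face transformers. The helper name and the example values are assumptions for illustration only and are not copied from the actual Yi-34B configuration.

```python
import json


def describe_context_config(config: dict) -> dict:
    """Summarize the context-length fields of a LLaMA-style config dict."""
    rope_scaling = config.get("rope_scaling")  # None when the key is absent or null
    return {
        "max_position_embeddings": config.get("max_position_embeddings"),
        "has_rope_scaling": rope_scaling is not None,
        "rope_scaling": rope_scaling,
    }


# Hypothetical config contents: a 4K window with no RoPE scaling entry.
plain_config = json.loads('{"max_position_embeddings": 4096, "rope_scaling": null}')
plain_summary = describe_context_config(plain_config)

# Hypothetical config contents: the same window with a linear scaling factor.
scaled_config = {
    "max_position_embeddings": 4096,
    "rope_scaling": {"type": "linear", "factor": 8.0},
}
scaled_summary = describe_context_config(scaled_config)

print(plain_summary)
print(scaled_summary)
```

A config that reports a 4K window and no scaling entry does not by itself prove the model cannot run at longer contexts, but it does mean the extension method has to be documented somewhere else, which is the gap the post points out.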

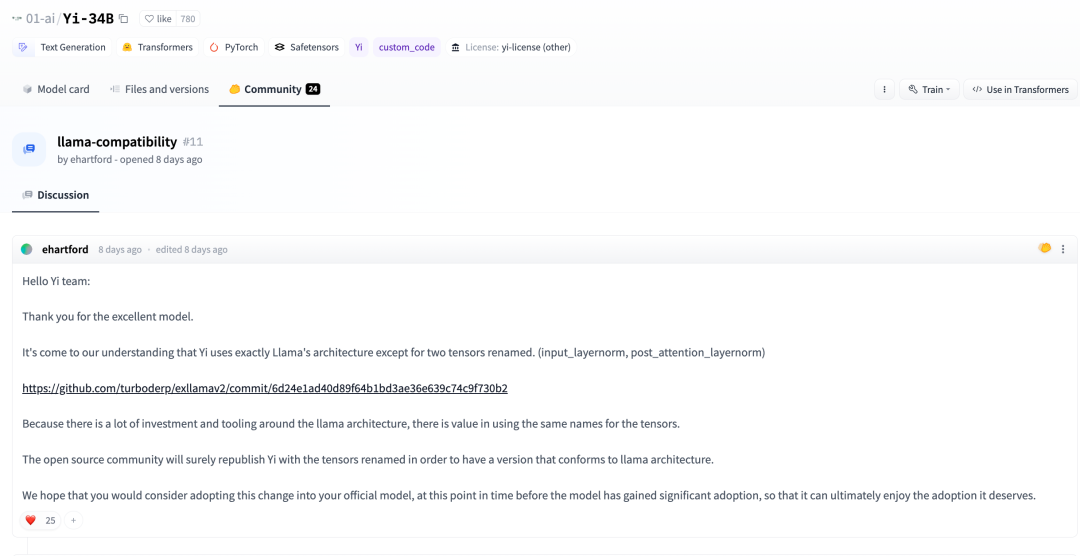

A few days ago, a developer in the Hugging Face community also pointed out:

As far as we can tell, Yi uses exactly the LLaMA architecture, except for two renamed tensors (input_layernorm, post_attention_layernorm).

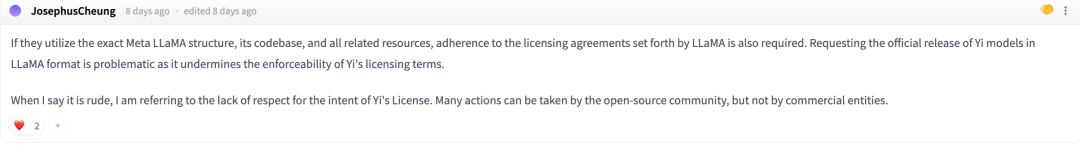

In the discussion, some users said that anyone who uses Meta LLaMA's architecture, code base, and other related resources as-is must abide by the license agreement stipulated by LLaMA.

To comply with LLaMA's open-source license, one developer changed the tensor names back and republished the model on Hugging Face:

01-ai/Yi-34B, with the tensors renamed to match the standard LLaMA model code. Link: https://huggingface.co/chargoddard/Yi-34B-LLaMA
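As a rough illustration of what such a rename involves, the sketch below maps the keys of a checkpoint-style dictionary onto the standard LLaMA names `input_layernorm` and `post_attention_layernorm`. The article does not state the names Yi used, so `ln1` and `ln2` are placeholders assumed for this example, as are the helper name and the toy dictionary. This is not the republishing developer's actual conversion script.

```python
def rename_tensors(state_dict: dict, renames: dict) -> dict:
    """Return a copy of state_dict with matching dotted key components renamed."""
    renamed = {}
    for key, value in state_dict.items():
        # Rename whole path components only, so "ln1" never matches inside "ln10".
        parts = [renames.get(part, part) for part in key.split(".")]
        renamed[".".join(parts)] = value
    return renamed


# Assumed placeholder names on the left; standard LLaMA names on the right.
ASSUMED_TO_LLAMA = {
    "ln1": "input_layernorm",
    "ln2": "post_attention_layernorm",
}

# Toy stand-in for a checkpoint: keys are tensor names, values stand in for tensors.
toy_state_dict = {
    "model.layers.0.ln1.weight": [1.0, 1.0],
    "model.layers.0.ln2.weight": [1.0, 1.0],
    "model.layers.0.self_attn.q_proj.weight": [0.5, 0.5],
}

converted = rename_tensors(toy_state_dict, ASSUMED_TO_LLAMA)
for name in converted:
    print(name)
```

Only the keys change and the values are passed through untouched, which is why a rename of this kind lets standard LLaMA model code load the weights without any custom modeling file.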

From this, we can also infer which company Jia Yangqing, who left Alibaba to start his own business, was referring to in his WeChat Moments post a few days ago.

Regarding this matter, Heart of the Machine also sought verification from 01.AI. 01.AI responded:

GPT is a mature architecture recognized across the industry, and LLaMA built a summary on top of GPT. The structural design of 01.AI's large model is based on the mature GPT structure and draws on top publicly available results in the industry. At the same time, the 01.AI team has done a great deal of work on understanding the model and its training, which is one of the foundations of the excellent results in our first release. 01.AI is also continuing to explore fundamental breakthroughs at the model-structure level.

The model structure is only one part of model training. For the Yi open-source model, a great deal of research and groundwork went into other areas, such as data engineering, training methods, "baby sitting" (training process monitoring) skills, hyperparameter settings, evaluation methods, depth of understanding of the nature of evaluation metrics, depth of research into the principles of model generalization, and industry-leading AI infrastructure capabilities. This work often plays a greater role and delivers more value than the basic structure, and it is 01.AI's core technical moat in the large-model pre-training stage.

While running a large number of training experiments, we renamed parts of the code to meet the needs of those experiments. We attach great importance to feedback from the open-source community and have updated the code to better integrate into the Transformer ecosystem.

We are very grateful for the feedback from the community. We are just getting started in the open-source community and hope to work with everyone to build a thriving community. Yi open source will do its best to keep improving.
