DoNews reported on November 14 that NVIDIA unveiled its next generation of AI supercomputer chips on November 13, Beijing time. These chips will play an important role in deep learning and in large language models (LLMs) such as OpenAI's GPT-4.

The new chips are a significant step up from the previous generation. They will be widely used in data centers and supercomputers for complex tasks such as weather and climate prediction, drug discovery, and quantum computing.

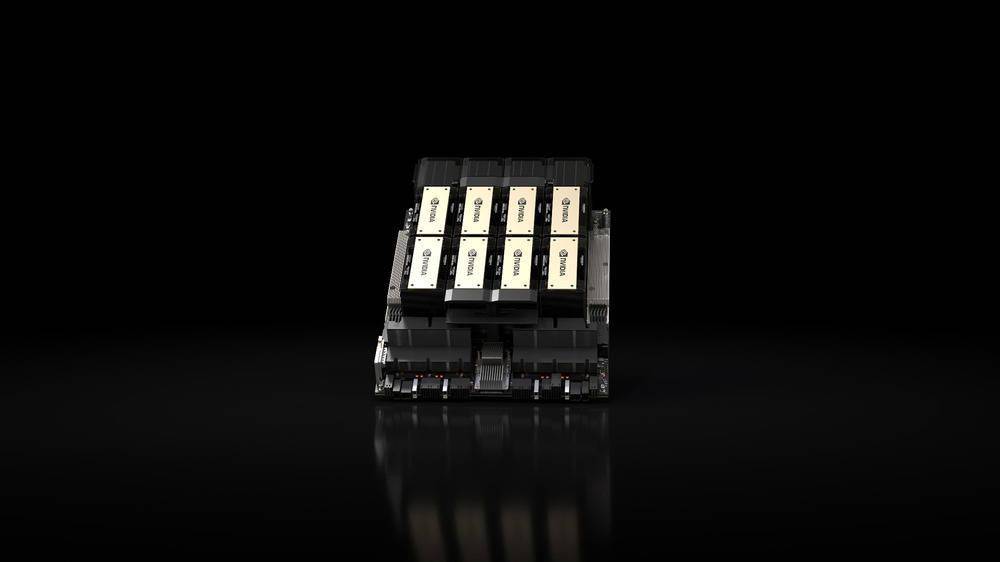

The key product is the HGX H200, built on the H200 GPU based on NVIDIA's "Hopper" architecture. The H200 is the successor to the H100 and the company's first chip to use HBM3e memory. HBM3e is faster and offers more capacity than earlier memory, which makes it well suited to large language models.

NVIDIA said: "With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100."

For AI workloads, NVIDIA claims that the HGX H200 runs inference on Llama 2, a 70-billion-parameter LLM, twice as fast as the H100. The HGX H200 will be offered in 4-way and 8-way configurations and is compatible with both the hardware and software of existing H100 systems.

It can be used in every type of data center (on-premises, cloud, hybrid cloud and edge), will be deployed by Amazon Web Services, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure, among others, and will begin rolling out in the second quarter of 2024.

Another key product announced is the GH200 Grace Hopper "superchip", which combines the H200 GPU with the Arm-based NVIDIA Grace CPU through the company's NVLink-C2C interconnect. According to NVIDIA, it is designed for supercomputers and allows "scientists and researchers to solve the world's most challenging problems by accelerating complex AI and HPC applications running terabytes of data."

The GH200 will be used in "more than 40 AI supercomputers at research centers, system manufacturers and cloud providers around the world," including Dell, Eviden, Hewlett Packard Enterprise (HPE), Lenovo, QCT and Supermicro.

Notably, HPE's Cray EX2500 supercomputer will use quad GH200 configurations and can scale to tens of thousands of Grace Hopper superchip nodes.

The above is the detailed content of "Nvidia releases AI chip H200: performance soars by 90%, Llama 2 inference speed doubles". For more information, please follow other related articles on the PHP Chinese website!