On October 9, Beijing Moonshot AI Technology Co., Ltd. (Moonshot AI) announced a breakthrough in long-text processing and launched Kimi Chat, the first intelligent assistant product to support input of 200,000 Chinese characters. According to the company, this is the longest context input length supported by any commercially available large model service in the global market, and it marks Moonshot AI's world-leading position in this important technology.

Volcano Engine works closely with Moonshot AI and is its exclusive provider of highly stable, cost-effective AI training and inference acceleration solutions. The two parties conduct joint technology research and development to promote the application of large language models in vertical fields and general scenarios. In addition, Kimi Chat will soon join Volcano Ark, Volcano Engine's large model service platform. The two parties will continue to provide enterprises and consumers with richer AI applications within the large model ecosystem.

Compared with the large model services currently on the market that are trained primarily on English, Kimi Chat has strong multilingual capabilities. In Chinese in particular it has a significant advantage: in actual use it supports a context of about 200,000 Chinese characters, which is 2.5 times that of Anthropic's Claude-100k (measured at about 80,000 characters) and 8 times that of OpenAI's GPT-4-32k (measured at about 25,000 characters). In addition, through an innovative network structure and engineering optimization, Kimi Chat achieves a lossless long-range attention mechanism at the scale of hundreds of billions of parameters, without relying on "shortcut" solutions such as sliding windows, downsampling, or small models, which can significantly degrade performance.
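The multiples quoted above follow directly from the measured capacities. As a minimal sketch that uses only the figures given in this article (the dictionary and function names are illustrative, not part of any product API), the arithmetic can be checked as follows:

```python
# Measured context capacities in Chinese characters, as quoted in the article.
# The names below are illustrative only and are not part of any vendor API.
measured_capacity = {
    "Kimi Chat": 200_000,
    "Claude-100k": 80_000,
    "GPT-4-32k": 25_000,
}


def capacity_ratio(model: str, baseline: str) -> float:
    """Return how many times larger `model`'s measured capacity is than `baseline`'s."""
    return measured_capacity[model] / measured_capacity[baseline]


for baseline in ("Claude-100k", "GPT-4-32k"):
    ratio = capacity_ratio("Kimi Chat", baseline)
    print(f"Kimi Chat vs {baseline}: {ratio:.1f}x")
```

Running this prints 2.5x for Claude-100k and 8.0x for GPT-4-32k, matching the figures stated in the text.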

In a previous interview, Moonshot AI founder Yang Zhilin said that lossless compression of massive data, whether text, voice, or video, can achieve a high degree of intelligence. The upper limit of a large model's capability (that is, its lossless compression ratio) is determined jointly by its single-step capability and the number of steps it executes. The former is related to the number of parameters, and the latter corresponds to the context length.

Tackling the challenges of deploying large language models and advancing industry applications

Moonshot AI believes that increasing the context length opens new opportunities for large model applications, moving them from the LLM era into the Long LLM (LLLM) era and enabling precise adaptation to individual industries. In exploring effective methods for long-text scenarios, large model applications must keep finding new ways to address model hallucination and improve the controllability of generated content, while also seeking new paths for developing personalized large model capabilities. The development of large language models must also overcome multiple hurdles, including growing demand for computing resources, unstable training tasks, high project costs, and security and trust concerns, in order to improve training efficiency.

To solve these problems, Moonshot AI has joined hands with Volcano Engine to innovate in AI technology and pursue AGI practice on veMLP, the Volcano Engine machine learning platform. Making full use of the GPU resource pool and building on large-scale pre-trained models, Moonshot AI achieved routine, stable training at the scale of thousands of GPUs. Within six months it trained Kimi Chat, a large language model with hundreds of billions of parameters. The model unlocks complex scenarios such as professional writing, understanding and analysis of ultra-long texts, personalized dialogue with ultra-long memory, and knowledge Q&A over large volumes of documents, and it has been successfully adopted by many well-known companies.

Moonshot AI co-founder Zhou Xinyu said: "Moonshot AI focuses on exploring the boundaries of general artificial intelligence and is committed to finding the optimal way to convert computing power into intelligence. Volcano Engine has leading infrastructure capabilities and computing power reserves in China. In the future, the two parties will cooperate further on AI computing infrastructure and the expansion of application scenarios, jointly promote the development of artificial intelligence technology, and provide users with a stable, efficient, and intelligent service experience."

More stable and faster large model training with the Volcano Engine machine learning platform

Volcano Engine provides highly stable and cost-effective AI training and inference acceleration solutions for building and training large models. Its machine learning platform veMLP has been refined over a long period by massive user-facing businesses such as Douyin, resulting in solutions and best practices that include full-stack AI development engineering optimization, task fault self-healing, and experiment observability. The platform provides efficient, stable, secure, and trustworthy one-stop AI algorithm development and iteration services that make large model training faster, more stable, and more cost-effective. Using the ultra-large-scale AI training and inference acceleration solution provided by Volcano Engine, the Moonshot AI team can carry out continuous training iteration, fine-tuning, and inference of large language models quickly, stably, and at low cost.

1. Scaled scheduling of IaaS computing power and storage resources

Build a high-performance computing cluster that supports large model training at the 10,000-GPU scale, with a microsecond-latency network and elastic computing that saves 70% of computing costs. Use the vePFS and TOS hot/cold tiered acceleration solution to meet the high-throughput demands of training data while reducing overall storage costs by 65%. For the file system read/write patterns of large models, the two parties jointly developed a dedicated file caching system that greatly improves GPU utilization.

2. Ensuring the stability of the PaaS computing cluster

Optimize the stability of the ultra-large training cluster by providing hardware fault self-healing and self-service diagnosis capabilities, so that user tasks can quickly retry and resume training and training can run stably for a month or longer. Through communication affinity optimization for multi-machine training tasks, reduce cross-switch communication in RingAllReduce.

3. High experiment observability

Manage experiments across multiple training tasks, compare training results through visualization, and decide which model iteration to launch. Use complete monitoring and logs to help the business tune 3D parallelism parameters and to assist in locating training faults.
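The communication affinity point under item 2 can be illustrated with a toy model. In a ring all-reduce, each node exchanges data only with its neighbors in the ring, so every ring link that spans two switches carries traffic over switch uplinks at every step. The sketch below is only an illustration under assumed names and topology (`cross_switch_links`, `affinity_order`, and the eight-node, two-switch layout are all hypothetical); it does not describe how veMLP actually schedules tasks.

```python
from typing import Dict, List


def cross_switch_links(ring: List[str], switch_of: Dict[str, str]) -> int:
    """Count ring links whose two endpoints sit under different switches."""
    n = len(ring)
    return sum(
        switch_of[ring[i]] != switch_of[ring[(i + 1) % n]]
        for i in range(n)
    )


def affinity_order(nodes: List[str], switch_of: Dict[str, str]) -> List[str]:
    """Order nodes so that nodes under the same switch are adjacent in the ring."""
    return sorted(nodes, key=lambda node: (switch_of[node], node))


# Hypothetical 8-node job whose nodes alternate between two switches.
switch_of = {f"node{i}": ("sw0" if i % 2 == 0 else "sw1") for i in range(8)}
naive_ring = [f"node{i}" for i in range(8)]
tuned_ring = affinity_order(naive_ring, switch_of)

print("naive ring cross-switch links:", cross_switch_links(naive_ring, switch_of))
print("tuned ring cross-switch links:", cross_switch_links(tuned_ring, switch_of))
```

In this toy layout the naive ring crosses switches on all 8 links, while the affinity-ordered ring crosses on only 2, which is the minimum possible for a ring that spans two switches.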

Secure mutual-trust solution for large model services

Combine trusted privacy computing with LLM applications to provide security sandbox functions and improve developer permission control. Volcano Engine also worked with Moonshot AI to design a workflow suited to the habits of large model development, one that enforces tiered access to data and protects data security without sacrificing work efficiency.

Wu Di, head of intelligent algorithms at Volcano Engine, said: "Volcano Engine has always taken a cooperative approach that focuses on technology, empowers partners, and creates shared value. Moonshot AI has the most advanced large model R&D team in China, with a deep understanding of AI technology and extensive application experience. The cooperation between the two parties will provide enterprises and consumers with richer AI applications in the field of multi-model ecosystem services."

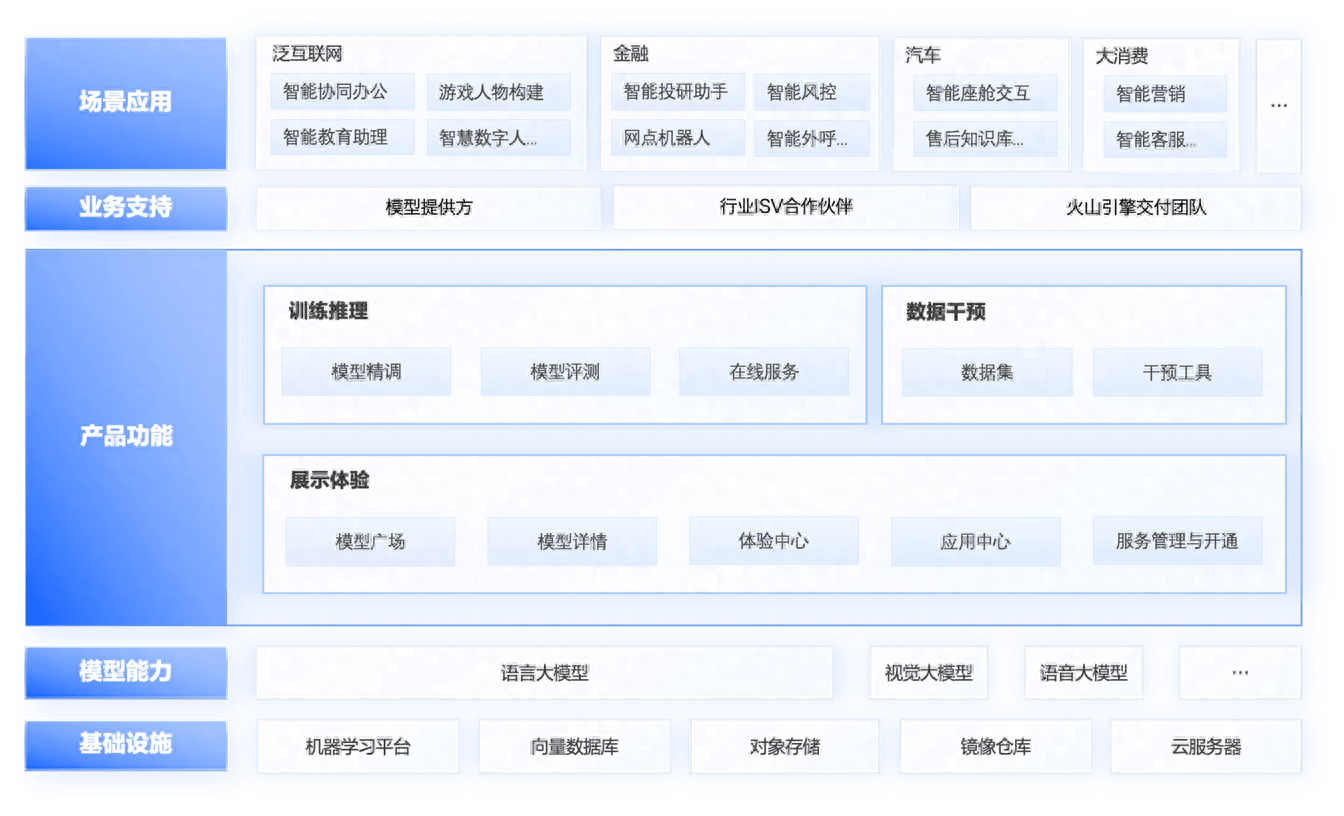

Panorama of Volcano Ark functions

At present, Volcano Ark hosts large models from many AI technology companies and research institutes, including Zhipu AI, MiniMax, and ByteDance Skylark. Moonshot AI's large model service Kimi Chat is also coming to Volcano Ark. Volcano Engine will work with outstanding domestic large model service providers to offer a full range of functions and services, including model training, inference, evaluation, and fine-tuning, to help every industry accelerate its adoption of AI. All companies are welcome to try large models on Volcano Ark, and Volcano Ark looks forward to growing together with them.
