Nginx is a high-performance HTTP and reverse proxy server known for its small memory footprint and strong concurrency. It was built with performance as its primary design goal and holds up well under high load. Reports indicate it can support up to 50,000 concurrent connections.

Forward proxy: the client (for example, a browser) is configured to use a proxy server and accesses the Internet through that proxy.
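For comparison with the reverse-proxy setup later in this article, here is a minimal sketch of nginx acting as a plain-HTTP forward proxy that a browser could be pointed at. The port 8888 and the DNS resolver 8.8.8.8 are assumptions chosen for illustration. Stock nginx does not support the CONNECT method, so this sketch does not handle HTTPS sites.

```nginx
# Hypothetical forward-proxy server block (place it inside the http block).
server {
    listen 8888;          # assumed port; set the browser's HTTP proxy to server-ip:8888
    resolver 8.8.8.8;     # assumed DNS server; required because the target host is a variable

    location / {
        # Forward the request to whichever host the browser asked for.
        proxy_pass http://$http_host$request_uri;
    }
}
```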

Reverse proxy: the client sends its request to the reverse proxy server, which selects a target server, fetches the data from it, and returns the data to the client. To the outside world, the reverse proxy and the target servers look like a single server: only the proxy server's address is exposed.

If there are more requests than a single server can handle, we add servers and distribute the requests among them. Spreading requests that would otherwise be concentrated on one server across multiple servers is called load balancing.

To speed up processing, dynamic pages and static pages can be handled by different servers, which also reduces the load on any single server. This is called dynamic/static separation.
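A minimal sketch of dynamic/static separation in a single nginx server block, where nginx itself plays the static server. It assumes static files live in a hypothetical /data/static directory and that dynamic requests go to an application server at 127.0.0.1:8080 (the Tomcat address used later in this article); the extension list and the 7-day cache time are arbitrary choices.

```nginx
server {
    listen 80;

    # Static resources (matched by file extension) are served directly from disk.
    # /data/static is a hypothetical directory used for illustration.
    location ~* \.(html|css|js|png|jpg|gif)$ {
        root    /data/static;
        expires 7d;    # let browsers cache static files for 7 days
    }

    # Everything else (dynamic pages) is forwarded to the application server.
    location / {
        proxy_pass http://127.0.0.1:8080;
    }
}
```

Keeping both rules in one server block means clients see a single address while static and dynamic traffic are handled separately behind it.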

Nginx depends on several packages (pcre, openssl and zlib), which must be installed before nginx itself.

Download the pcre source package:

```bash
wget http://downloads.sourceforge.net/project/pcre/pcre/8.37/pcre-8.37.tar.gz
```

Extract the archive:

```bash
tar -xvf pcre-8.37.tar.gz
```

Enter the extracted directory and run the configure script:

```bash
cd pcre-8.37
./configure
```

Compile and install:

```bash
make && make install
```

Check the installed pcre version:

```bash
pcre-config --version
```

Install the remaining dependencies (zlib, openssl and the build tools) with yum:

```bash
yum -y install make zlib zlib-devel gcc-c++ libtool openssl openssl-devel
```

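1. Download nginx from the official site: https://nginx.org/download/
2. Copy the archive to the server.
3. Extract it with `tar -xvf nginx-1.12.2.tar.gz`.
4. Enter the extracted directory and run `./configure` to check the build environment.
5. Compile and install with `make && make install`.

After a successful installation, a new directory /usr/local/nginx appears, and the nginx startup program is in its sbin folder.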

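Start nginx from the /usr/local/nginx/sbin directory:

```bash
./nginx
```

Then open the server's IP address in a browser (nginx listens on port 80 by default). If the "Welcome to nginx!" page appears, nginx has been installed successfully.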

Run the following commands from the /usr/local/nginx/sbin directory:

```bash
./nginx -v          # show the nginx version
./nginx             # start nginx
./nginx -s stop     # stop nginx
./nginx -s reload   # reload the configuration
```

The nginx configuration file is nginx.conf, located in /usr/local/nginx/conf. With most of the comments removed, it looks like this:

```nginx
#user  nobody;
worker_processes  1;

#pid        logs/nginx.pid;

events {
    worker_connections  1024;
}

http {
    include       mime.types;
    default_type  application/octet-stream;

    sendfile        on;
    #tcp_nopush     on;

    #keepalive_timeout  0;
    keepalive_timeout  65;

    #gzip  on;

    server {
        listen       80;
        server_name  localhost;

        location / {
            root   html;
            index  index.html index.htm;
        }
    }
}
```

The nginx configuration file consists of three parts.

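1. Global block

Everything from the start of the file up to the events block. It mainly holds directives that affect how the nginx server as a whole runs.

```nginx
worker_processes  1;
```

This sets the number of worker processes and is the key setting for how much concurrent work nginx can do. A larger value generally increases concurrency, but the gain is bounded by hardware and software limits; one worker per CPU core is the usual starting point.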
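Instead of hard-coding the count, the global block can let nginx pick one worker per available CPU core. The `auto` value is supported by the 1.12.2 version installed above; whether it beats a fixed number depends on what else runs on the machine.

```nginx
# Global block: start one worker process per available CPU core.
worker_processes  auto;
```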

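2. events block

Directives in the events block mainly affect the network connections between the nginx server and its users.

```nginx
worker_connections  1024;
```

This sets the maximum number of simultaneous connections that each worker process can open.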
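Because the limit applies per worker, a rough upper bound on simultaneous connections is worker_processes × worker_connections. The comments below work through that arithmetic with the default values; the "half as many clients when proxying" figure is an approximation, since every proxied client also needs a connection to the backend.

```nginx
# Worked estimate with the default values:
#   worker_processes   1
#   worker_connections 1024
#   total connections  = 1 * 1024 = 1024
#   serving static files  -> roughly 1024 simultaneous clients
#   acting as a reverse proxy -> roughly 1024 / 2 = 512 clients
#     (each client uses one client-side and one upstream connection)
events {
    worker_connections  1024;
}
```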

3. http block

This is the most frequently configured part of nginx. The http block itself contains the http global block and one or more server blocks.
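A sketch of how these blocks nest, annotated by comments. The first server block is the one from the default file; the second, listening on port 7001 and proxying to 127.0.0.1:8080, is a hypothetical addition showing that one http block can hold several server blocks (virtual hosts).

```nginx
http {
    # http global block: settings shared by every server below
    include       mime.types;
    default_type  application/octet-stream;
    sendfile      on;
    keepalive_timeout  65;

    # server block 1: static site on port 80
    server {
        listen       80;
        server_name  localhost;

        # location block: how requests under / are handled
        location / {
            root   html;
            index  index.html index.htm;
        }
    }

    # server block 2 (hypothetical): another virtual host on a different port
    server {
        listen       7001;
        server_name  localhost;

        location / {
            proxy_pass http://127.0.0.1:8080;
        }
    }
}
```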

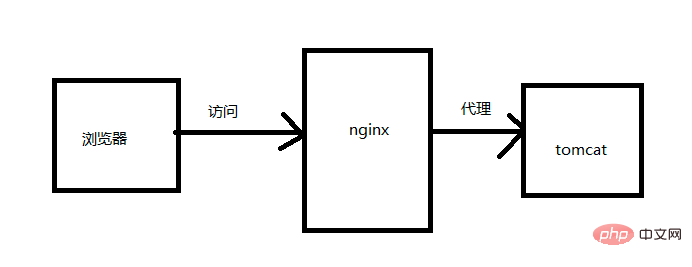

What we want to achieve: the local browser accesses the nginx server, and nginx reverse-proxies the request to a Tomcat server, so a request sent to nginx is served directly by Tomcat. Installing Tomcat is not covered here; in this example Tomcat and nginx are installed on the same server.

Since our nginx server has no domain name, for this demo we bind the server's IP to a domain name in the local hosts file, located in C:\Windows\System32\drivers\etc. Add this line to the hosts file:

```text
47.104.xxx.xxx www.javatrip.com
```

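The IP on the left is the server's address; the domain name on the right is an arbitrary name I chose to bind to it. Once this is in place, try accessing Tomcat through the domain name. If Tomcat's default page loads, the binding works.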

The default server block in nginx.conf looks like this:

```nginx
server {
    listen       80;
    server_name  localhost;

    location / {
        root   html;
        index  index.html index.htm;
    }
}
```

We modify this default configuration as follows:

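```nginx
server {
    listen       80;
    server_name  47.104.xxx.xxx;

    location / {
        # root and index are not used when the request is handled by proxy_pass
        root   html;
        proxy_pass http://127.0.0.1:8080;
        index  index.html index.htm;
    }
}
```

This configuration means that every request to 47.104.xxx.xxx:80 is forwarded to port 8080 on the same machine (127.0.0.1:8080), where Tomcat is listening.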

Now visit www.javatrip.com in the browser: the request is forwarded straight to Tomcat, and a simple reverse proxy is complete.
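By default nginx sends the backend the Host value from proxy_pass (here 127.0.0.1:8080), and Tomcat sees nginx's own address as the client. A common refinement, sketched below, forwards the original host name and client IP with proxy_set_header. X-Real-IP and X-Forwarded-For are conventional header names, not something Tomcat reads automatically; the backend has to be configured to use them.

```nginx
server {
    listen       80;
    server_name  47.104.xxx.xxx;

    location / {
        proxy_pass http://127.0.0.1:8080;

        # Send the host name the browser used (e.g. www.javatrip.com) instead of 127.0.0.1:8080.
        proxy_set_header Host $host;
        # Pass the real client address; the backend must be configured to read these headers.
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    }
}
```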

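Next, extract a second Tomcat and set its port to 8081. Under the webapps directory, create a dev directory in the Tomcat on port 8080 and a prod directory in the Tomcat on port 8081, then put a file (for example a.html) in each.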

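The goal is to forward requests for www.javatrip.com:7001/dev to tomcat8080 and requests for www.javatrip.com:7001/prod to tomcat8081, with nginx now listening on port 7001. Open the nginx configuration file and add a new server block: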

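```nginx
server {
    listen       7001;
    server_name  47.104.xxx.xxx;

    # URIs containing /dev/ go to the Tomcat on port 8080
    location ~ /dev/ {
        proxy_pass http://127.0.0.1:8080;
    }

    # URIs containing /prod/ go to the Tomcat on port 8081
    location ~ /prod/ {
        proxy_pass http://127.0.0.1:8081;
    }
}
```

Then test it by visiting www.javatrip.com:7001/dev/a.html and www.javatrip.com:7001/prod/a.html: the first is served by tomcat8080 and the second by tomcat8081.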

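The forwarding rules above use the `~` modifier. The matching symbols mean:

- `=` exact match: if the request matches, the search stops and this location handles the request immediately.
- `~` case-sensitive match (regular expressions allowed).
- `!~` case-sensitive non-match (an operator for `if` conditions, not a `location` modifier).
- `~*` case-insensitive match (regular expressions allowed).
- `!~*` case-insensitive non-match (also an `if` operator, not a `location` modifier).
- `^~` prefix match: if the path matches this prefix, nginx does not test the regular expressions.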
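To see how the modifiers interact, consider the hypothetical server block below (paths and responses are illustrative only). nginx first finds the longest matching prefix location; an `=` match ends the search at once, and a `^~` prefix skips the regex checks. Otherwise the regex locations are tried in the order written, the first match wins, and if none matches the remembered longest prefix is used.

```nginx
server {
    listen       7001;
    default_type text/plain;    # so the return text displays as plain text

    # Exact match: only the URI "/" itself; the search stops immediately.
    location = / {
        return 200 "exact match\n";
    }

    # Prefix match with ^~: when this is the longest matching prefix,
    # regular-expression locations are not checked.
    location ^~ /static/ {
        return 200 "^~ prefix match\n";
    }

    # Case-sensitive regex: matches /dev/a.html but not /DEV/a.html.
    location ~ /dev/ {
        return 200 "case-sensitive regex\n";
    }

    # Case-insensitive regex: matches both /prod/a.html and /PROD/a.html.
    location ~* /prod/ {
        return 200 "case-insensitive regex\n";
    }

    # Plain prefix match: the fallback when nothing above applies.
    location / {
        return 200 "plain prefix match\n";
    }
}
```

With this block, /static/dev/a.html is answered by the `^~` location even though it also contains /dev/, while /DEV/a.html matches no regex and falls through to `location /`.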

Load balancing means distributing load (work tasks, access requests) across multiple operating units (servers, components) for execution. It is a key way to achieve high performance, avoid a single point of failure (high availability), and scale out (horizontal scaling).

What we want now is for requests to www.javatrip.com/prod/a.html to be distributed across both Tomcats. First, create a prod folder on tomcat8080 and put an a.html file in it, so that tomcat8080 and tomcat8081 both have a prod folder containing a.html.

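First, configure the list of the two Tomcat servers in the http block:

```nginx
upstream myserver {
    server 127.0.0.1:8080;
    server 127.0.0.1:8081;
}
```

Then configure the rule in the port-80 server block, replacing its earlier proxy_pass target: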

```nginx
server {
    listen       80;
    server_name  47.104.xxx.xxx;

    location / {
        root   html;
        # Forward to the upstream group defined above
        proxy_pass http://myserver;
        index  index.html index.htm;
    }
}
```

Visit www.javatrip.com/prod/a.html and refresh several times: some requests are served by tomcat8080 and others by tomcat8081.
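nginx decides that a backend is down through passive checks on real requests, and the thresholds can be set per server. The sketch below uses the standard upstream parameters max_fails, fail_timeout and backup with arbitrary values; the third server on port 8082 is hypothetical. Note that backup cannot be combined with ip_hash.

```nginx
upstream myserver {
    # After 3 failed attempts within 30s, treat the server as unavailable for 30s.
    server 127.0.0.1:8080 max_fails=3 fail_timeout=30s;
    server 127.0.0.1:8081 max_fails=3 fail_timeout=30s;
    # Hypothetical third Tomcat, used only when both servers above are unavailable.
    server 127.0.0.1:8082 backup;
}
```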

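Round robin (default): requests are assigned to the servers one by one in turn; if a server goes down, it is automatically taken out of rotation.

```nginx
upstream myserver {
    server 127.0.0.1:8080;
    server 127.0.0.1:8081;
}
```

weight: defaults to 1; the higher a server's weight, the more requests it receives.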

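```nginx
upstream myserver {
    # 8081 receives about twice as many requests as 8080 (2 of every 3)
    server 127.0.0.1:8080 weight=1;
    server 127.0.0.1:8081 weight=2;
}
```

ip_hash: each request is assigned according to a hash of the client's IP address, so each visitor always reaches the same backend server. This can solve the session-sharing problem.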

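```nginx
upstream myserver {
    ip_hash;
    server 127.0.0.1:8080;
    server 127.0.0.1:8081;
}
```

fair (third-party): requests are assigned according to backend response time; servers that respond faster receive more requests. This strategy is not part of standard nginx and requires a third-party module to be compiled in.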

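```nginx
upstream myserver {
    server 127.0.0.1:8080;
    server 127.0.0.1:8081;
    fair;    # only works if the third-party fair module is compiled in
}
```

Since dynamic/static separation is not used much in actual development, I won't cover it further. As an introduction to nginx, this article is essentially complete. One last question to think about: how do you make nginx highly available?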
