Huawei launches two new commercial storage products for large AI models, supporting up to 12 million IOPS

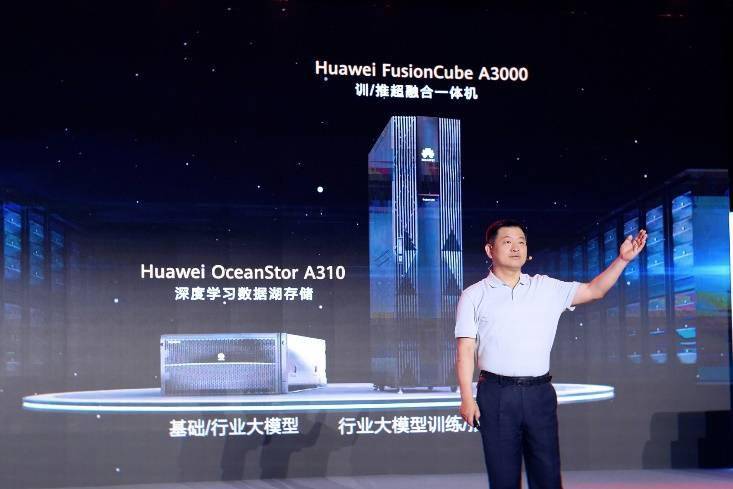

IT House, July 14: Huawei recently released two new commercial AI storage products, the “OceanStor A310 Deep Learning Data Lake Storage” and the “FusionCube A3000 Training/Inference Hyper-Converged Appliance”. According to Huawei, “these two products can provide new momentum for foundation model training, industry model training, and model training and inference in segmented scenarios.”

▲ Image source: Huawei

IT House's summary of the two products follows:

The OceanStor A310 deep learning data lake storage mainly targets data lake scenarios for foundation and industry large models. It manages massive data across the full AI pipeline, from data collection and preprocessing to model training and inference.

According to Huawei, a single 5U OceanStor A310 enclosure supports up to 400 GB/s of bandwidth and up to 12 million IOPS, figures the company describes as the highest in the industry. It can scale linearly to 4,096 nodes and supports lossless multi-protocol interoperability. The Global File System (GFS) provides intelligent cross-region data weaving, which simplifies data collection. Near-memory computing enables near-data preprocessing, which reduces data movement and improves preprocessing efficiency by 30%.

The FusionCube A3000 training/inference hyper-converged appliance mainly targets training and inference scenarios for industry large models. For applications of models at the ten-billion-parameter scale, it integrates OceanStor A300 high-performance storage nodes, training/inference nodes, switching equipment, AI platform software, and management and operations software. This gives large model partners a turnkey deployment experience: the appliance is delivered as a one-stop, "out-of-the-box" system, and deployment can be completed within 2 hours.

According to Huawei, the appliance supports two flexible business models: a one-stop solution based on Huawei's Ascend, and a one-stop solution from third-party partners with open computing, networking, and AI platform software. The appliance's training/inference nodes and storage nodes can be scaled out independently to match models of different sizes. In addition, the FusionCube A3000 uses high-performance containers so that multiple model training and inference tasks can share GPUs, raising resource utilization from 40% to more than 70%.

Zhou Yuefeng, President of Huawei's Data Storage Product Line, pointed out that in the era of large models, data storage is key infrastructure supporting large AI models. He said Huawei Data Storage will provide diversified solutions and products for this era, and will work with partners to overcome the challenges involved and promote AI empowerment across industries.

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

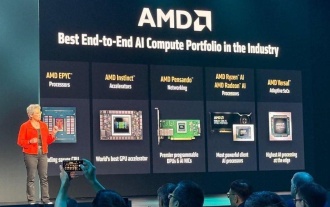

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

7 Key Highlights from Geoffrey Hinton on Superintelligent AI - Analytics Vidhya

Jun 21, 2025 am 10:54 AM

7 Key Highlights from Geoffrey Hinton on Superintelligent AI - Analytics Vidhya

Jun 21, 2025 am 10:54 AM

If the Godfather of AI tells you to “train to be a plumber,” you know it’s worth listening to—at least that’s what caught my attention. In a recent discussion, Geoffrey Hinton talked about the potential future shaped by superintelligent AI, and if yo