How to use Scrapy to build an efficient crawler system

As the Internet has grown, so has the demand for information, yet collecting and processing that information by hand has become harder and harder. Web crawlers were developed to address this problem, and they are now widely used in web search engines, data mining, social networks, finance and investment, e-commerce, and other fields.

Scrapy is a web crawling framework written in Python that helps us build such a crawler system quickly. This article introduces how to use Scrapy to build an efficient crawler system.

1. Introduction to Scrapy

Scrapy is a Python-based web crawler framework with efficient processing and strong extensibility. It provides a powerful data extraction mechanism, processes requests asynchronously, and has a flexible middleware and extension system. Features such as proxies, custom user agents, and measures against anti-crawler checks can be configured through its settings file and middleware. Scrapy also provides debugging and logging tools that help us locate crawler problems more easily.

2. Scrapy installation and environment configuration

- Installing Scrapy

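Scrapy is installed with pip, so Python must be installed first. Current Scrapy releases run only on Python 3 (Python 2.7 support ended with Scrapy 2.0), so use a recent Python 3 version; the Scrapy installation guide lists the exact minimum. Installation method: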

pip install scrapy

- Environment configuration

After installing Scrapy, we need to perform relevant environment configuration, mainly including:

(1) Set request headers

In Scrapy's settings file (settings.py), we can set default request headers. Sending browser-like headers makes our requests look like ordinary browser traffic, which reduces the chance of being rejected by the website's anti-crawler mechanism. The code is as follows:

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/webp,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.110 Safari/537.36',
}

(2) Set downloader middleware

Scrapy ships with many downloader middlewares, such as HttpProxyMiddleware, RetryMiddleware, and UserAgentMiddleware. These middlewares help us handle various download and network problems. We can enable, disable, and reorder downloader middlewares in the settings file, and set their parameters as needed. The code example is as follows:

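DOWNLOADER_MIDDLEWARES = {
    # Built-in middlewares now live under scrapy.downloadermiddlewares
    # (the older scrapy.contrib paths are no longer available).
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 110,
    # None disables the built-in user-agent middleware
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
    # Custom middleware defined in our own project
    'myproject.spiders.middlewares.RotateUserAgentMiddleware': 400,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': 90,
}

3. Scrapy crawler development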

- Create a Scrapy project

Before using Scrapy, we need to create a Scrapy project. Using the command line, enter the following command:

scrapy startproject myproject

This will create a Scrapy project named myproject.

- Writing crawler programs

Scrapy has a clean architecture built around five core components: the engine, the scheduler, the downloader, spiders (the crawler code we write), and item pipelines. To develop a Scrapy crawler, you need to write the following parts:

(1) Crawler module

In Scrapy, the spider is the most important part. The scrapy startproject command already creates a spiders package inside the project (myproject/myproject/spiders); write a spider file in it, such as myspider.py. The sample code is as follows:

import scrapy


class MySpider(scrapy.Spider):
    name = 'myspider'
    allowed_domains = ['www.example.com']
    start_urls = ['http://www.example.com']

    def parse(self, response):
        # Main spider logic: extract data from the response and yield items.
        # Illustrative placeholder: yield the page title.
        yield {'title': response.css('title::text').get()}

In the code, we define a Spider class, where the name attribute is the spider's name, the allowed_domains attribute lists the domains that may be crawled, and the start_urls attribute lists the URLs where crawling starts. Commonly used spider classes in Scrapy include CrawlSpider, XMLFeedSpider, and SitemapSpider.
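One detail of allowed_domains is easy to miss: Scrapy's offsite filtering keeps a request when its host equals a listed domain or is a subdomain of one. The sketch below is a simplified standard-library re-implementation of that rule for illustration only. The function name is_allowed is hypothetical and this is not Scrapy's own code. It shows that listing 'www.example.com' does not cover the bare 'example.com'.

```python
from urllib.parse import urlparse


def is_allowed(url, allowed_domains):
    """Return True if the URL's host is a listed domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(
        host == domain or host.endswith("." + domain)
        for domain in allowed_domains
    )


allowed = ["www.example.com"]
print(is_allowed("http://www.example.com/page", allowed))        # True
print(is_allowed("http://example.com/page", allowed))            # False
print(is_allowed("http://example.com/page", ["example.com"]))    # True
```

Listing the registered domain ('example.com') is therefore usually the safer choice when the whole site should be crawled.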

(2) Data extraction module

The data extraction module is responsible for extracting data from the HTML page returned by the crawler. Scrapy provides two methods for extracting data: XPath and CSS selectors.

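XPath: Scrapy's selectors are built on the parsel library, which uses lxml to evaluate XPath expressions. The usage method is as follows:

selector.xpath('xpath-expression').extract()

CSS selector: CSS expressions are translated into XPath by the cssselect library and then evaluated in the same way. The usage method is as follows:

selector.css('css-expression').extract()

In recent Scrapy versions, getall() is the preferred name for extract(), and get() returns only the first match.

(3) Pipeline module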

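In Scrapy, the pipeline module processes the items extracted by the spider, for example by cleaning, validating, or storing them. The project template already contains a pipelines.py file (myproject/myproject/pipelines.py); write the pipeline code there, and enable the class through the ITEM_PIPELINES setting in settings.py so that Scrapy calls it: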

class MyProjectPipeline:
    def process_item(self, item, spider):
        # Process the item data here, then pass it on
        return item

- Run the crawler program

Use the following command to start the crawler:

scrapy crawl myspider
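While the crawl runs, every item the spider yields is passed to the process_item method of each enabled pipeline. As a minimal sketch of what such a method might do, the pipeline below trims whitespace from string fields. It assumes the spider yields plain dict items; the class name and the 'title' and 'views' fields are hypothetical.

```python
class StripWhitespacePipeline:
    """Hypothetical pipeline: trims surrounding whitespace from string fields."""

    def process_item(self, item, spider):
        # Assumes dict-like items; non-string values are left untouched.
        for key, value in list(item.items()):
            if isinstance(value, str):
                item[key] = value.strip()
        return item


# Stand-alone check outside Scrapy; the spider argument is unused, so None is passed.
raw_item = {"title": "  Example Domain \n", "views": 3}
cleaned = StripWhitespacePipeline().process_item(raw_item, None)
print(cleaned)  # {'title': 'Example Domain', 'views': 3}
```

In a project, an entry such as 'myproject.pipelines.StripWhitespacePipeline': 300 in ITEM_PIPELINES would switch it on; the number only determines the order among pipelines.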

4. Scrapy crawler scheduling and optimization

- Set download delay

To avoid sending too many requests to the target website in a short time, we should set a download delay. The DOWNLOAD_DELAY setting (in seconds) can be set in Scrapy's settings file:

DOWNLOAD_DELAY = 2
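The delay directly limits throughput for a single site, which is worth estimating before choosing a value. A back-of-the-envelope sketch, assuming requests to one domain are spaced by DOWNLOAD_DELAY on average and ignoring response time (the function name is hypothetical):

```python
def min_crawl_seconds(num_pages, download_delay):
    """Rough lower bound on crawl time for one site with a fixed average delay."""
    return num_pages * download_delay


seconds = min_crawl_seconds(10_000, 2)
print(f"{seconds / 3600:.1f} hours")  # 5.6 hours
```

With a 2-second delay, 10,000 pages from one site take at least about five and a half hours, so the delay is a trade-off between politeness and crawl duration.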

- Set request timeout

Sometimes the target website responds very slowly or not at all. To keep such requests from stalling the crawl, we should set a request timeout. The DOWNLOAD_TIMEOUT setting (in seconds; Scrapy's default is 180) can be set in Scrapy's settings file. The value below is deliberately strict, and slow sites may need a larger one:

DOWNLOAD_TIMEOUT = 3
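The timeout works together with Scrapy's retry settings, because a request that times out is normally retried by RetryMiddleware. A sketch of the related settings as they might appear in settings.py; the values are illustrative assumptions, not recommendations from this article:

```python
# settings.py (illustrative values)
RETRY_ENABLED = True                     # default; RetryMiddleware re-queues failed requests
RETRY_TIMES = 2                          # maximum retries per request after the first attempt
RETRY_HTTP_CODES = [500, 502, 503, 504]  # hypothetical subset of status codes to retry
```

A short timeout combined with several retries multiplies the load on a struggling site, so the two are best tuned together.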

- Set global and per-domain concurrency

Scrapy is asynchronous rather than thread-based, so concurrency is controlled by limiting the number of requests in flight. CONCURRENT_REQUESTS is the maximum number of requests the downloader performs at the same time across all websites, while CONCURRENT_REQUESTS_PER_DOMAIN is the maximum number of requests made to any single domain at the same time. Both can be set in the Scrapy settings file:

CONCURRENT_REQUESTS = 100
CONCURRENT_REQUESTS_PER_DOMAIN = 16
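Fixed values for delay and concurrency can be hard to tune by hand. Scrapy also ships an AutoThrottle extension that adjusts the delay based on observed response latency. A sketch of enabling it in settings.py, with illustrative values that are assumptions rather than part of the original setup:

```python
# settings.py (illustrative values)
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 2            # initial download delay in seconds
AUTOTHROTTLE_MAX_DELAY = 30             # upper bound for the delay under high latency
AUTOTHROTTLE_TARGET_CONCURRENCY = 4.0   # average parallel requests aimed at each remote server
```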

- Comply with Robots protocol

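The target website may publish a robots.txt file (the Robots Exclusion Protocol), which states which paths crawlers may access. We should comply with it: Scrapy does this automatically when ROBOTSTXT_OBEY = True is set in the settings file, and the crawler code should otherwise be adjusted according to the robots.txt file of the target website.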
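To check by hand what a robots.txt file permits, Python's standard urllib.robotparser module can be used. The sketch below parses a hypothetical robots.txt given inline instead of downloading one, and the crawler name "mybot" is made up.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, supplied inline instead of being downloaded.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("mybot", "http://www.example.com/index.html"))    # True
print(parser.can_fetch("mybot", "http://www.example.com/private/data"))  # False
```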

- Anti-crawler mechanism

Some websites use anti-crawler techniques to block crawlers, such as forced login, IP blocking, CAPTCHAs, and JavaScript rendering. To work around these limits, we need techniques such as proxies, distributed crawlers, and automatic CAPTCHA recognition.
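One simple measure against blocking based on the user agent is to vary the User-Agent header. The DOWNLOADER_MIDDLEWARES example earlier refers to a RotateUserAgentMiddleware whose code is not shown in this article. A downloader middleware is an ordinary class with a process_request(request, spider) method, so a minimal sketch of such a rotation could look like the following. The user-agent strings are placeholders, and the stand-in request class exists only so the snippet runs outside Scrapy.

```python
import random


class RotateUserAgentMiddleware:
    """Hypothetical sketch: pick a random User-Agent for every outgoing request."""

    # Placeholder values; a real list would hold current browser strings.
    USER_AGENTS = [
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
        "Mozilla/5.0 (X11; Linux x86_64) ExampleBrowser/2.0",
    ]

    def process_request(self, request, spider):
        request.headers["User-Agent"] = random.choice(self.USER_AGENTS)
        return None  # None tells Scrapy to continue processing the request


class FakeRequest:
    """Stand-in for scrapy.Request, used only to try the class without Scrapy."""

    def __init__(self):
        self.headers = {}


request = FakeRequest()
RotateUserAgentMiddleware().process_request(request, spider=None)
print(request.headers["User-Agent"] in RotateUserAgentMiddleware.USER_AGENTS)  # True
```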

In short, building an efficient crawler system with Scrapy takes some technical background and accumulated experience. During development, we need to pay attention to the efficiency of network requests, the accuracy of data extraction, and the reliability of data storage. Only through continuous optimization and improvement can our crawler system achieve higher efficiency and quality.

The above is the detailed content of How to use Scrapy to build an efficient crawler system. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

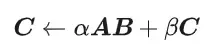

CUDA's universal matrix multiplication: from entry to proficiency!

Mar 25, 2024 pm 12:30 PM

CUDA's universal matrix multiplication: from entry to proficiency!

Mar 25, 2024 pm 12:30 PM

General Matrix Multiplication (GEMM) is a vital part of many applications and algorithms, and is also one of the important indicators for evaluating computer hardware performance. In-depth research and optimization of the implementation of GEMM can help us better understand high-performance computing and the relationship between software and hardware systems. In computer science, effective optimization of GEMM can increase computing speed and save resources, which is crucial to improving the overall performance of a computer system. An in-depth understanding of the working principle and optimization method of GEMM will help us better utilize the potential of modern computing hardware and provide more efficient solutions for various complex computing tasks. By optimizing the performance of GEMM

Huawei's Qiankun ADS3.0 intelligent driving system will be launched in August and will be launched on Xiangjie S9 for the first time

Jul 30, 2024 pm 02:17 PM

Huawei's Qiankun ADS3.0 intelligent driving system will be launched in August and will be launched on Xiangjie S9 for the first time

Jul 30, 2024 pm 02:17 PM

On July 29, at the roll-off ceremony of AITO Wenjie's 400,000th new car, Yu Chengdong, Huawei's Managing Director, Chairman of Terminal BG, and Chairman of Smart Car Solutions BU, attended and delivered a speech and announced that Wenjie series models will be launched this year In August, Huawei Qiankun ADS 3.0 version was launched, and it is planned to successively push upgrades from August to September. The Xiangjie S9, which will be released on August 6, will debut Huawei’s ADS3.0 intelligent driving system. With the assistance of lidar, Huawei Qiankun ADS3.0 version will greatly improve its intelligent driving capabilities, have end-to-end integrated capabilities, and adopt a new end-to-end architecture of GOD (general obstacle identification)/PDP (predictive decision-making and control) , providing the NCA function of smart driving from parking space to parking space, and upgrading CAS3.0

Which version of Apple 16 system is the best?

Mar 08, 2024 pm 05:16 PM

Which version of Apple 16 system is the best?

Mar 08, 2024 pm 05:16 PM

The best version of the Apple 16 system is iOS16.1.4. The best version of the iOS16 system may vary from person to person. The additions and improvements in daily use experience have also been praised by many users. Which version of the Apple 16 system is the best? Answer: iOS16.1.4 The best version of the iOS 16 system may vary from person to person. According to public information, iOS16, launched in 2022, is considered a very stable and performant version, and users are quite satisfied with its overall experience. In addition, the addition of new features and improvements in daily use experience in iOS16 have also been well received by many users. Especially in terms of updated battery life, signal performance and heating control, user feedback has been relatively positive. However, considering iPhone14

Always new! Huawei Mate60 series upgrades to HarmonyOS 4.2: AI cloud enhancement, Xiaoyi Dialect is so easy to use

Jun 02, 2024 pm 02:58 PM

Always new! Huawei Mate60 series upgrades to HarmonyOS 4.2: AI cloud enhancement, Xiaoyi Dialect is so easy to use

Jun 02, 2024 pm 02:58 PM

On April 11, Huawei officially announced the HarmonyOS 4.2 100-machine upgrade plan for the first time. This time, more than 180 devices will participate in the upgrade, covering mobile phones, tablets, watches, headphones, smart screens and other devices. In the past month, with the steady progress of the HarmonyOS4.2 100-machine upgrade plan, many popular models including Huawei Pocket2, Huawei MateX5 series, nova12 series, Huawei Pura series, etc. have also started to upgrade and adapt, which means that there will be More Huawei model users can enjoy the common and often new experience brought by HarmonyOS. Judging from user feedback, the experience of Huawei Mate60 series models has improved in all aspects after upgrading HarmonyOS4.2. Especially Huawei M

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Huawei will launch the Xuanji sensing system in the field of smart wearables, which can assess the user's emotional state based on heart rate

Aug 29, 2024 pm 03:30 PM

Recently, Huawei announced that it will launch a new smart wearable product equipped with Xuanji sensing system in September, which is expected to be Huawei's latest smart watch. This new product will integrate advanced emotional health monitoring functions. The Xuanji Perception System provides users with a comprehensive health assessment with its six characteristics - accuracy, comprehensiveness, speed, flexibility, openness and scalability. The system uses a super-sensing module and optimizes the multi-channel optical path architecture technology, which greatly improves the monitoring accuracy of basic indicators such as heart rate, blood oxygen and respiration rate. In addition, the Xuanji Sensing System has also expanded the research on emotional states based on heart rate data. It is not limited to physiological indicators, but can also evaluate the user's emotional state and stress level. It supports the monitoring of more than 60 sports health indicators, covering cardiovascular, respiratory, neurological, endocrine,

What are the computer operating systems?

Jan 12, 2024 pm 03:12 PM

What are the computer operating systems?

Jan 12, 2024 pm 03:12 PM

A computer operating system is a system used to manage computer hardware and software programs. It is also an operating system program developed based on all software systems. Different operating systems have different users. So what are the computer systems? Below, the editor will share with you what computer operating systems are. The so-called operating system is to manage computer hardware and software programs. All software is developed based on operating system programs. In fact, there are many types of operating systems, including those for industrial use, commercial use, and personal use, covering a wide range of applications. Below, the editor will explain to you what computer operating systems are. What computer operating systems are Windows systems? The Windows system is an operating system developed by Microsoft Corporation of the United States. than the most

Detailed explanation of how to modify system date in Oracle database

Mar 09, 2024 am 10:21 AM

Detailed explanation of how to modify system date in Oracle database

Mar 09, 2024 am 10:21 AM

Detailed explanation of the method of modifying the system date in the Oracle database. In the Oracle database, the method of modifying the system date mainly involves modifying the NLS_DATE_FORMAT parameter and using the SYSDATE function. This article will introduce these two methods and their specific code examples in detail to help readers better understand and master the operation of modifying the system date in the Oracle database. 1. Modify NLS_DATE_FORMAT parameter method NLS_DATE_FORMAT is Oracle data

Differences and similarities of cmd commands in Linux and Windows systems

Mar 15, 2024 am 08:12 AM

Differences and similarities of cmd commands in Linux and Windows systems

Mar 15, 2024 am 08:12 AM

Linux and Windows are two common operating systems, representing the open source Linux system and the commercial Windows system respectively. In both operating systems, there is a command line interface for users to interact with the operating system. In Linux systems, users use the Shell command line, while in Windows systems, users use the cmd command line. The Shell command line in Linux system is a very powerful tool that can complete almost all system management tasks.