The 2023 Beijing Zhiyuan Conference, organized by the Zhiyuan Research Institute, opened in Beijing on June 9. Now in its fifth consecutive year, the conference is an annual international event for high-level professional exchange on artificial intelligence.

The conference ran for two days, and its core topic was the opportunities and challenges facing the development of artificial intelligence. More than 200 leading AI experts took part, including OpenAI co-founder and CEO Sam Altman and Turing Award winners Geoffrey Hinton and Yann LeCun. All of them shared their views on the development and challenges of AI.

"Will artificial neural networks soon be smarter than real neural networks?" This was the main question Hinton addressed in his speech.

In traditional computing, the key property of computers is that they follow instructions exactly. Because of this, users can run the same program or neural network on different hardware. In other words, the weights of a neural network do not depend on any particular piece of hardware.

"The reason they follow instructions is because they are designed to allow us to look at a problem, determine the steps required to solve the problem, and then tell the computer to perform those steps," Hinton said.

To train large language models at lower cost, he proposed "mortal computation", which abandons the basic principle of separating hardware from software in traditional computing and performs computation efficiently by exploiting the analog properties of the hardware.

However, there are two main problems with this approach.

First, "the learning process must exploit specific analog properties of the hardware part it runs on, and we don't know exactly what those properties are," Hinton said.

Second, the computation is mortal. "Because knowledge is inextricably tied to hardware details, when a specific hardware device fails, all knowledge gained is lost," Hinton explained.

To solve these problems, he and his collaborators tried many methods, among them "distillation", which proved to be very effective.
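The article does not describe how distillation is carried out, so the following is only a minimal sketch of the commonly used idea: a "student" model is trained to match the softened output distribution of a "teacher" model, so that knowledge can move between models without copying weights. The function names `softmax` and `distillation_loss`, the `temperature` parameter, and the example logits are all illustrative assumptions, not details from Hinton's talk.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    largest = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - largest) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """Cross-entropy between the teacher's and the student's softened outputs.

    The loss is smallest when the student reproduces the teacher's distribution.
    """
    teacher_probs = softmax(teacher_logits, temperature)
    student_probs = softmax(student_logits, temperature)
    return -sum(p * math.log(q) for p, q in zip(teacher_probs, student_probs))


# Hypothetical logits for a three-class problem (assumed values, for illustration only).
teacher = [2.0, 1.0, 0.1]
matching_student = [2.0, 1.0, 0.1]
mismatched_student = [0.1, 1.0, 2.0]

print(distillation_loss(teacher, matching_student))
print(distillation_loss(teacher, mismatched_student))
```

In this sketch the student that agrees with the teacher receives the lower loss, and minimizing that loss is what pushes a student toward the teacher's behavior during training.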

He also pointed out that the way a community of intelligent agents shares knowledge affects many aspects of computation. Today's large language models appear able to learn massive amounts of knowledge, but because they acquire it mainly by learning from documents and cannot learn directly from the real world, their way of learning is very inefficient.

Unsupervised learning, for example by modeling video, would allow them to learn efficiently. According to Hinton, once digital agents can do this, they will have greater learning capabilities than humans and will learn very quickly.

If this trend continues, intelligent agents will soon become smarter than humans. That will also bring many challenges, such as the struggle for control between agents and humans, as well as ethical and safety issues.

"Imagine that in the next ten years, artificial general intelligence (AGI) systems will exceed the level of expertise that humans had in the early 1990s," Altman said.

In his speech and Q&A session with Zhang Hongjiang, Chairman of Zhiyuan Research Institute, he discussed the importance and strategies of promoting AGI safety.

"We must take responsibility for the problems that reckless development and deployment may cause," Altman said. He pointed to two directions: establishing inclusive international norms and standards, and advancing the construction of AGI safety systems through international cooperation.

For now, how to train large language models so that they can become truly safe and helpful human assistants is the primary problem that needs to be solved.

In this regard, Altman proposed several solutions.

First, invest in scalable oversight, for example by training a model that can assist humans in supervising other AI systems.

Second, continue to advance machine learning techniques to further improve the interpretability of models.

The ultimate goal is to enable AI systems to better optimize themselves. "As future models become smarter and more powerful, we will find better learning techniques that reduce risks while realizing the extraordinary benefits of AI," Altman said.

Yann LeCun shares Altman's view on how to deal with the current risks posed by AI: while these risks exist, they can be mitigated or controlled through careful engineering design.

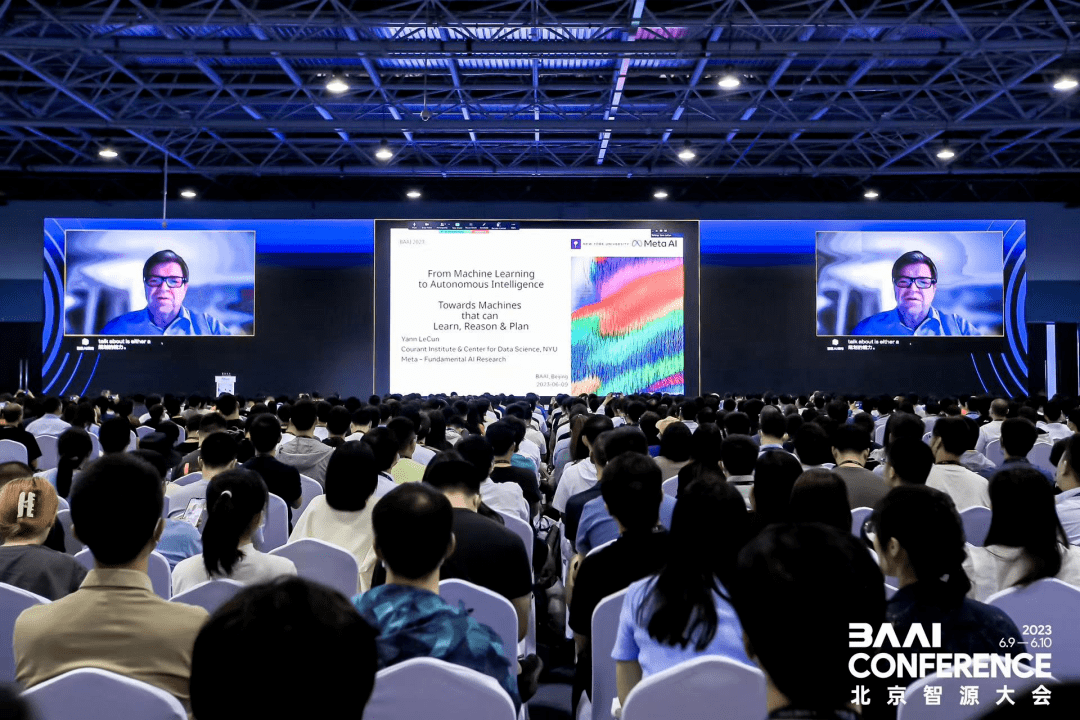

However, as a long-standing critic of large models such as GPT, Yann LeCun clearly laid out both the advantages and the shortcomings of AI systems based on self-supervised learning in his speech, titled "Towards Machines That Can Learn, Reason, and Plan".

Although these systems have proven extremely powerful in natural language processing and generation, they cannot reason and plan as humans and animals do, which inevitably leads to problems such as factual errors, logical errors, and toxic content.

On this basis, Yann LeCun believes that AI will face three major challenges in the next few years: learning representations and predictive models of the world; learning to reason; and learning to decompose complex tasks into simple ones and plan them hierarchically.

He also put forward the idea that the world model is the core of the road to AGI.

In his view, a world model is a system that can imagine what will happen in the future and can make its own predictions at minimal cost.

"The system operates by using previous views of the world, possibly stored in memory, to process the current state of the world. You then use the world model to predict how the world will proceed and what will happen," Yann LeCun said.

So how can such a world model be implemented? "We have a layered system that extracts more and more abstract representations of the world state through a chain of encoders, and models the world using predictors at different levels," he said.

Simply put, complex tasks are completed by decomposing them and planning them down to the millisecond level. There must also be a system in place that controls the cost and the optimization criteria.

As the above shows, although the three experts hold different views on AI, they all agree that the development of AI has become the prevailing trend. As Altman said, we cannot stop the development of AI.

On this basis, the main task for the next stage is to find a better way to develop AI, contain the risks and harms it may bring, and ultimately reach the goal of AGI.
