In the current wave of science and technology, the concept of the metaverse is attracting worldwide attention like a dazzling new star, thanks to its seemingly infinite imaginative possibilities. Its huge development potential has drawn wave after wave of companies to rush into the Metaverse.

Among these companies, Apple is the heavyweight player with the highest expectations. On June 6, after seven years of preparation, Apple finally unveiled its head-mounted display, Vision Pro, at WWDC 2023.

In a sense, how far Apple can go with XR devices indicates how far the current technological frontier reaches. Judging from the current situation, however, although Apple's product performs well in many respects, it still falls well short of people's expectations. Many of its design choices and specifications reveal current technical bottlenecks and the compromises Apple has had to make. More importantly, Vision Pro is priced at US$3,499 (approximately RMB 24,860). At this price, the product is destined to be a niche "toy" rather than something that finds its way into ordinary households.

Apple’s stock price

So how far are we from the ideal metaverse, and what technical problems and challenges remain to be solved? To answer these questions, Dataman interviewed Cocos CEO Lin Shun, DataMesh founder and CEO Li Jie, Youli Technology CEO Zhang Xuebing, Yuntian Bestseller CTO Liang Feng, AsiaInfo Technology R&D Center Deputy General Manager Chen Guo, Mo Universe Chief Product Officer Lin Yu and many other industry experts about the development trends of the Metaverse's key technologies. Below, drawing on Apple's latest XR headset, we explore the latest trends and remaining challenges in several key technology areas: near-eye display, computational rendering, 5G private networks, perceptual interaction, and content production.

Near-eye display is the first door to the world of the Metaverse. Judging from the product Apple released this time, Vision Pro is equipped with 12 cameras and Micro OLED screens with a total of 23 million pixels, so each eye gets more pixels than a 4K TV. This enables viewing on a 100-inch screen and supports 3D video viewing, and is considered the top level currently achieved.
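
The claim that each eye gets more pixels than a 4K TV can be checked with simple arithmetic. The sketch below assumes the 23 million pixels are split evenly between the two eyes; the exact per-panel resolution is not given in the text.

```python
# Figure quoted in the text: about 23 million pixels in total.
TOTAL_PIXELS = 23_000_000
EYES = 2  # assumption: the pixels are split evenly between two displays

# A 4K UHD TV panel has 3840 x 2160 pixels.
UHD_4K_PIXELS = 3840 * 2160


def pixels_per_eye(total_pixels: int, eyes: int = EYES) -> float:
    """Pixels available to each eye if the total is split evenly."""
    return total_pixels / eyes


per_eye = pixels_per_eye(TOTAL_PIXELS)
ratio = per_eye / UHD_4K_PIXELS
print(f"per eye: {per_eye:,.0f} px | 4K TV: {UHD_4K_PIXELS:,} px | ratio: {ratio:.2f}x")
```

Under that assumption each eye gets about 11.5 million pixels, roughly 1.39 times the 8,294,400 pixels of a 3840x2160 panel.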

Apple Vision Pro Product Picture

So what are the key technologies behind near-eye display, and where does the industry stand today? Near-eye display technology is the key to hardware breakthroughs for the Metaverse. It consists mainly of two parts: the display panel and the optical system (especially the optical waveguide). Both still face many technical difficulties that must be overcome.

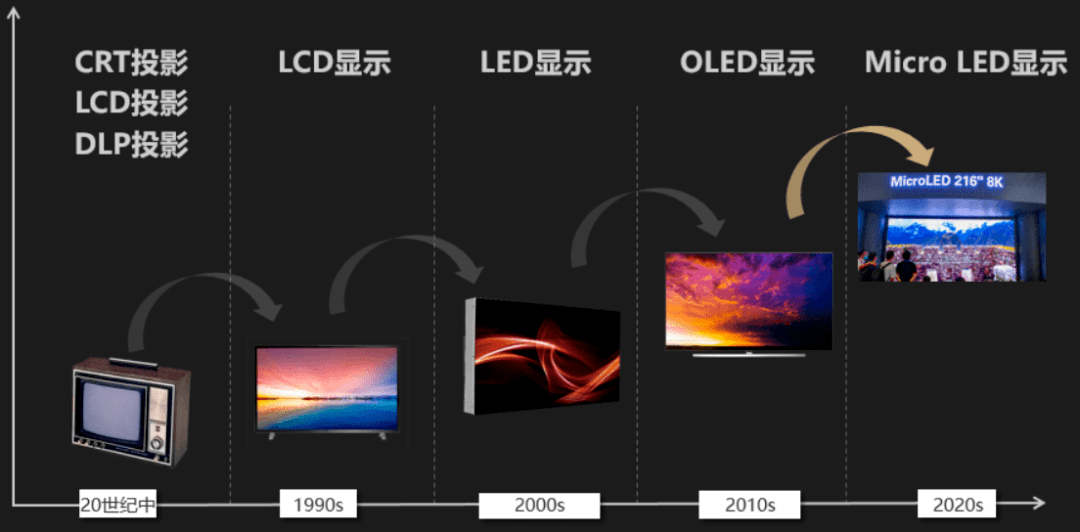

1. The display panel determines resolution, color saturation and refresh rate; MicroLED holds the most promise.

The display panel is an important part of near-eye display technology and directly affects the user's visual experience in the Metaverse. Its main technical indicators are resolution, color saturation and refresh rate; improving them gives users a clearer, richer and smoother visual experience.

Resolution is one of the panel's core indicators and directly determines how much detail of the Metaverse can be shown. At this stage, raising panel resolution faces a technical bottleneck: how to increase resolution while keeping panel size and power consumption in check is an important open problem for display panel technology.

Color saturation is another important indicator, governing how well colors in the Metaverse are reproduced. Although current display technologies achieve good color performance, they still fall short of reproducing real-world colors in the metaverse.

The refresh rate affects the user's dynamic visual experience: a higher refresh rate gives smoother motion. However, raising the refresh rate increases the computing load and power consumption, so increasing the refresh rate while keeping power consumption down is another major technical challenge.
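
The computing pressure of a higher refresh rate can be made concrete with the per-frame time budget: every frame has to be produced within 1/refresh-rate seconds. The minimal sketch below computes that budget; the refresh rates in the loop are illustrative values, not Vision Pro specifications.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available to render one frame at the given refresh rate."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


# Illustrative refresh rates (assumed, not product specifications).
for hz in (60, 90, 120):
    print(f"{hz:>3} Hz -> {frame_budget_ms(hz):5.2f} ms per frame, {hz} frames to render per second")
```

Going from 60 Hz to 120 Hz halves the time available per frame (about 16.67 ms down to about 8.33 ms) while doubling the number of frames rendered each second, which is where the extra compute and power cost comes from.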

In the field of display panels, the current mainstream technologies are liquid crystal display (LCD), organic light-emitting diode (OLED) and micro light-emitting diode (MicroLED). LCD technology is relatively mature and low-cost, but it lags behind the other technologies in color saturation, contrast and refresh rate, while OLED displays still need to overcome lifespan and cost issues.

Display panel development process

In recent years, MicroLED display technology has received widespread industry attention for its excellent performance. It uses micron-scale LEDs as pixels, which offers higher resolution, a wider color gamut and higher refresh rates, along with lower power consumption and longer lifespan. However, MicroLED panels are difficult and costly to manufacture, especially at small pixel sizes and high pixel densities, and achieving large-scale, efficient production remains a key unsolved problem. Vision Pro itself uses Micro OLED screens rather than MicroLED, and given its price of nearly RMB 25,000, those Micro OLED screens are likely a major contributor to the cost.

2. Optical waveguide technology is less mature than display panel technology, and both its technical route and the timing of a breakthrough remain uncertain.

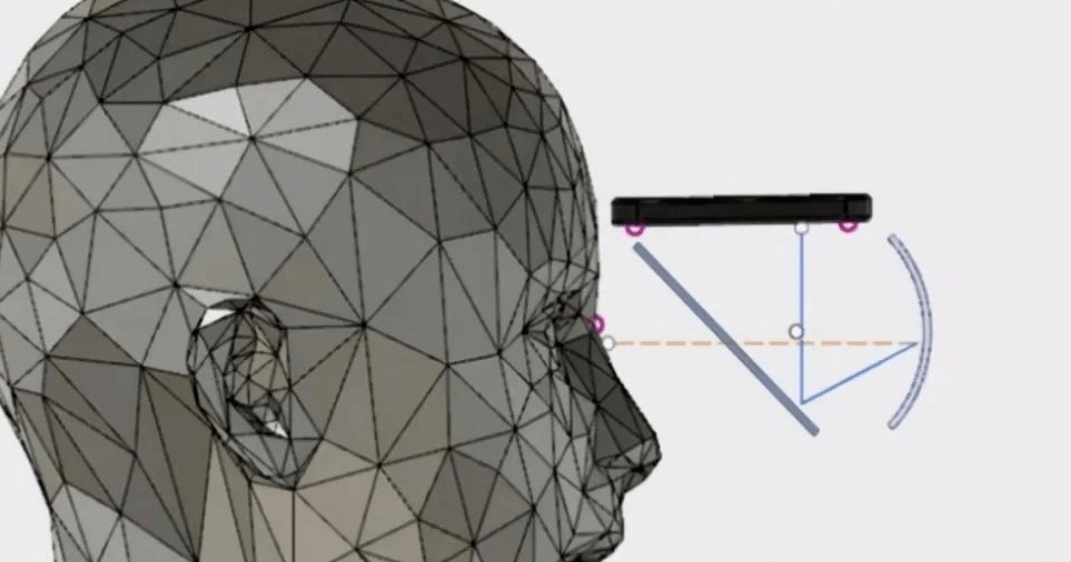

Optical systems, especially optical waveguides, are equally important for near-eye display technology. The core of optical waveguide technology is to guide light to the user's retina, thereby producing virtual images in the user's field of vision. The field of view is an important indicator to measure the quality of optical waveguides, which affects the user's visual range in the metaverse.

In augmented reality (AR) and virtual reality (VR) devices, display panels and optical waveguides are often tightly integrated. The display panel generates images, which are then fed into the optical waveguide. The waveguide's job is to guide these images to the user's eyes, possibly adjusting them to improve the visual experience, for example by widening the field of view or adjusting the image's focus. A simple analogy is a movie theater: the display panel is the projector that produces the image, and the optical waveguide is the projection screen that receives the image and presents it to the audience (here, the user's eyes).

Optical waveguides come in several types, including diffractive, refractive and holographic optical waveguides. Diffractive optical waveguides are currently the most common. They use micro-gratings to diffract incoming light into multiple angles and then rely on total internal reflection to guide the light toward the user's retina. This approach can provide a wider field of view and higher image quality, but it has problems with light efficiency, dispersion and a complex manufacturing process.
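
Total internal reflection only traps light that strikes the waveguide surface at more than the critical angle, given by sin(θc) = n_outside / n_core. A minimal sketch, assuming a waveguide surrounded by air and the illustrative refractive indices below:

```python
import math


def critical_angle_deg(n_core: float, n_outside: float = 1.0) -> float:
    """Critical angle, measured from the surface normal, above which light is totally internally reflected."""
    if n_core <= n_outside:
        raise ValueError("TIR needs the waveguide index to exceed the surrounding index")
    return math.degrees(math.asin(n_outside / n_core))


def is_guided(angle_deg: float, n_core: float, n_outside: float = 1.0) -> bool:
    """True if a ray hitting the glass/air boundary at angle_deg (from the normal) stays trapped."""
    return angle_deg > critical_angle_deg(n_core, n_outside)


# Assumed refractive indices for typical optical glasses.
for n in (1.5, 1.8, 2.0):
    print(f"n = {n}: critical angle = {critical_angle_deg(n):.1f} deg")
```

A higher-index glass lowers the critical angle (about 41.8 degrees at n = 1.5 versus 30 degrees at n = 2.0), so a wider range of ray angles stays trapped, which is one reason high-index glass tends to be associated with larger fields of view.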

In terms of light efficiency, only part of the light in a diffractive optical waveguide is used effectively; most of it is scattered, wasting energy. In terms of dispersion, because the diffraction angle depends on wavelength, light of different colors leaves the grating at different angles, which degrades the color accuracy of the image. This is a serious problem for applications like the Metaverse that pursue a high degree of realism.
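
The dispersion problem follows from the grating equation: the diffraction angle depends on wavelength, so red, green and blue light leave the same grating at different angles. The sketch below evaluates the first diffraction order for light hitting the grating head-on; the glass index (1.8), grating period (400 nm) and the three wavelengths are assumed example values.

```python
import math
from typing import Optional


def diffracted_angle_deg(wavelength_nm: float, period_nm: float, n_glass: float,
                         incidence_deg: float = 0.0, order: int = 1) -> Optional[float]:
    """Angle inside the glass of one diffraction order, from the grating equation
    n_glass * sin(theta_out) = sin(theta_in) + order * wavelength / period
    (light arriving from air). Returns None if that order does not propagate."""
    s = (math.sin(math.radians(incidence_deg)) + order * wavelength_nm / period_nm) / n_glass
    if abs(s) > 1.0:
        return None
    return math.degrees(math.asin(s))


N_GLASS = 1.8      # assumed waveguide refractive index
PERIOD_NM = 400.0  # assumed grating period

angles = {}
for color, wavelength in (("blue", 460), ("green", 530), ("red", 630)):
    angles[color] = diffracted_angle_deg(wavelength, PERIOD_NM, N_GLASS)
    print(f"{color:>5} ({wavelength} nm): {angles[color]:.1f} deg")
```

With these numbers blue, green and red are diffracted to roughly 40, 47 and 61 degrees inside the glass. All three exceed the roughly 33.7 degree critical angle for n = 1.8 and stay guided, but they travel along different paths, which is the root of the color-accuracy problem described above.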

In addition, the manufacturing process is complex because it demands extremely high precision: the micro-gratings of diffractive optical waveguides must be accurate to the nanometer level, which brings great technical difficulty and cost pressure in production. To overcome these problems, researchers are pursuing other optical waveguide technologies, such as refractive and holographic optical waveguides. These guide light in different ways and may offer new solutions to the problems of diffractive optical waveguides. For example, holographic optical waveguides use holograms to record and reproduce the wavefront of light, which can help reduce dispersion and improve optical efficiency.

However, these newer technologies have their own challenges. For example, refractive optical waveguides are more optically efficient than diffractive ones but usually have a smaller field of view, while holographic optical waveguides face the problem of manufacturing high-quality holograms at scale.

Overall, although display panel technology still has room for improvement, its technical roadmap is relatively clear, and further improvements can be expected in the next few years. Optical waveguide technology is less mature, and its technical route and breakthrough timeline remain uncertain. Although the basic principles of optical waveguides are understood, designing and manufacturing efficient, high-quality, low-cost waveguides for practical applications is still a technical challenge.

The Metaverse requires the construction of an immersive virtual environment that is seamlessly connected to the real world, and computational rendering is one of the key technologies to achieve this goal. The task of computational rendering is to convert the three-dimensional model in the virtual world and its materials, lighting and other attributes into the two-dimensional image seen by the end user. This process requires a lot of calculations, including geometric calculations, ray tracing, lighting calculations, material rendering, post-processing, etc.
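
One basic step in turning a 3D model into the 2D image the user sees is perspective projection. The sketch below shows only that geometric step for a simple pinhole camera; the camera parameters are assumed, and lighting, ray tracing, materials and post-processing are left out.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Point3D:
    """A point in camera space: x right, y up, z forward (meters)."""
    x: float
    y: float
    z: float


def project(p: Point3D, focal_px: float, cx: float, cy: float) -> Optional[Tuple[float, float]]:
    """Pinhole perspective projection to pixel coordinates (u right, v down).
    Returns None for points at or behind the camera plane."""
    if p.z <= 0:
        return None
    u = cx + focal_px * p.x / p.z
    v = cy - focal_px * p.y / p.z  # flip y: image rows grow downward
    return (u, v)


# Assumed camera: 4K image (3840 x 2160) with the principal point at its center.
print(project(Point3D(1.0, 0.5, 2.0), focal_px=1000.0, cx=1920.0, cy=1080.0))
```

Dividing by depth is what makes distant objects appear smaller: moving the same point twice as far away halves its offset from the image center.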

In order to improve the computing power of the device, Apple’s Vision Pro adopts a dual-chip design, including a Mac-level M2 chip and a real-time sensor processing chip R1. Among them, the R1 chip is mainly responsible for sensor signal transmission and processing.

Apple can design special chips for its own XR devices, but looking at the entire industry, how much computing power is needed to achieve the desired effect? Next we will discuss this issue in more depth.

1. To better realize the ideal metaverse scene, each XR device must have at least one NVIDIA A100 GPU.

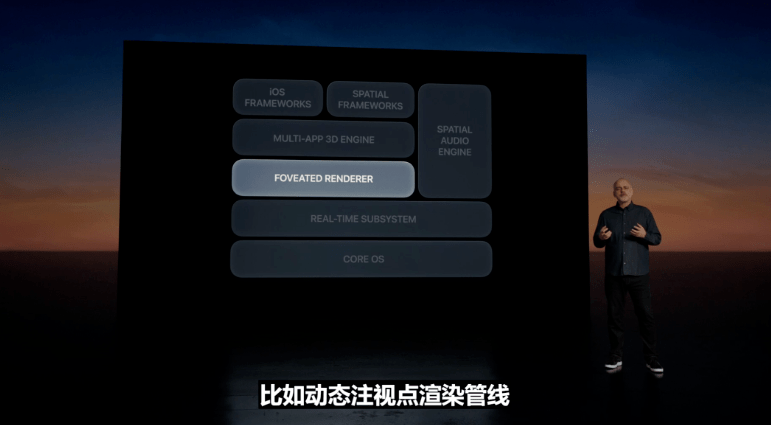

The ideal state is real-time, high-resolution, high-frame-rate rendering with full ray tracing, which requires enormous computing power. The exact requirement depends on many factors, such as rendering complexity, resolution, frame rate and image quality. Some techniques already exist to reduce the amount of computation, the most typical being foveated (gaze-point) rendering. It exploits the characteristics of human vision by rendering at high precision only at the gaze point and its surroundings and at lower precision elsewhere, effectively reducing the computational cost of rendering. The Vision Pro released by Apple this time uses dynamic foveated rendering to deliver maximum image quality to the part of each frame the user's eyes are looking at.
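
A rough cost model shows why foveated rendering saves so much work. The sketch assumes a fixed share of the screen around the gaze point is rendered at full resolution and the remainder at a reduced resolution per axis; the 10% and 0.5 values are illustrative, not Apple's parameters.

```python
def foveated_shading_fraction(full_res_fraction: float, periphery_scale: float) -> float:
    """Shading work relative to rendering everything at full resolution.

    full_res_fraction: share of screen area around the gaze point rendered at full resolution.
    periphery_scale: linear resolution scale for the rest (0.5 = half width and half height,
    i.e. a quarter of the pixels).
    """
    if not 0.0 <= full_res_fraction <= 1.0:
        raise ValueError("full_res_fraction must be in [0, 1]")
    if not 0.0 < periphery_scale <= 1.0:
        raise ValueError("periphery_scale must be in (0, 1]")
    return full_res_fraction + (1.0 - full_res_fraction) * periphery_scale ** 2


# Assumed example: 10% of the area at full resolution, the rest at half resolution per axis.
cost = foveated_shading_fraction(0.10, 0.5)
print(f"shading work: {cost:.1%} of full-resolution rendering")
```

With those numbers the shading work drops to 32.5% of full-resolution rendering. In a dynamic scheme, eye tracking moves the full-resolution region every frame, but the cost model is the same.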

Apple Vision Pro Product Introduction

Overall, the Metaverse’s demand for computational rendering far exceeds the capabilities of existing technology. Especially on mobile devices, it is more difficult to provide computing power due to issues such as power consumption and heat dissipation. The processing of 3D data not only requires a large amount of computing resources, but also requires real-time or near-real-time feedback. This places extremely high demands on computing power and latency. Existing computing equipment, including state-of-the-art GPUs and AI chips, cannot yet fully meet these needs.

In order to more clearly explain the "gap" between the supply of computing power and the demand for computing power in the Metaverse, let's analyze the supply and demand situation of computing power with a typical scenario.

Suppose we want full ray-traced rendering at 4K resolution (3840x2160 pixels) and 60 frames/second, tracing 100 rays per pixel, and that processing one ray takes about 500 floating-point operations (the actual figure may be higher).

Then the required computing power = 3840 pixels x 2160 pixels x 60 frames/second x 100 rays/pixel x 500 floating-point operations/ray = 24,883,200,000,000 floating-point operations/second ≈ 25 TFLOPS.

Note that this is a very rough estimate and the actual requirement may be higher, because ray-traced rendering involves many other kinds of computation besides tracing rays, such as shading, texture sampling and geometric transformation.
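
The estimate above can be reproduced directly. This sketch multiplies the same factors as the text and, like the text, counts only the ray-tracing work:

```python
def ray_tracing_flops(width: int, height: int, fps: int,
                      rays_per_pixel: int, flops_per_ray: int) -> int:
    """Floating-point operations per second for tracing alone (shading, texturing etc. excluded)."""
    return width * height * fps * rays_per_pixel * flops_per_ray


flops = ray_tracing_flops(width=3840, height=2160, fps=60, rays_per_pixel=100, flops_per_ray=500)
print(f"{flops:,} FLOP/s = {flops / 1e12:.2f} TFLOPS")
```

It prints 24,883,200,000,000 FLOP/s, about 24.88 TFLOPS, which the text rounds to 25 TFLOPS.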

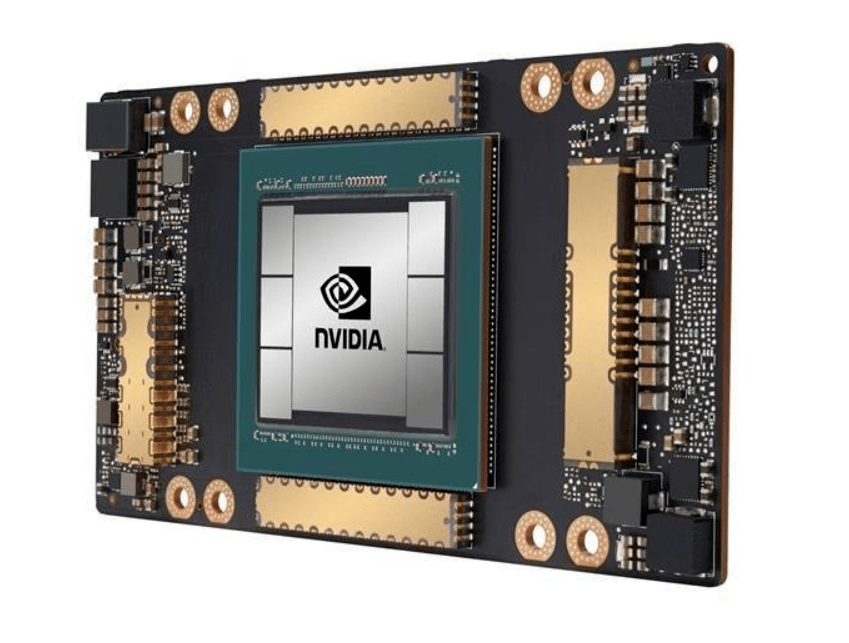

The peak FP32 computing power of NVIDIA's high-end A100 chip is 19.5 TFLOPS, and the NVIDIA A100 40G sells for more than 60,000 yuan on JD.com. The roughly 25 TFLOPS estimated above already exceeds a single A100's peak, so achieving this ideal metaverse rendering would require at least one A100 per device, and in practice more. The chip alone costs over 60,000 yuan, pushing the price of the XR device even higher. Setting aside other technical limitations, that price alone would deter most consumers.
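
Comparing the estimate with the A100's peak shows how many such GPUs the workload would occupy. This sketch uses the figures from the text and assumes, optimistically, that work splits perfectly across GPUs running at peak throughput; real utilization would be lower.

```python
import math

# Figures from the text; perfect scaling across GPUs at peak throughput is assumed.
REQUIRED_TFLOPS = 3840 * 2160 * 60 * 100 * 500 / 1e12  # about 24.88 TFLOPS
A100_PEAK_TFLOPS = 19.5
A100_PRICE_CNY = 60_000  # lower bound: "exceeds 60,000 yuan" on JD.com


def gpus_needed(required_tflops: float, per_gpu_tflops: float) -> int:
    """Smallest whole number of GPUs whose combined peak covers the requirement."""
    return math.ceil(required_tflops / per_gpu_tflops)


n_gpus = gpus_needed(REQUIRED_TFLOPS, A100_PEAK_TFLOPS)
print(f"{REQUIRED_TFLOPS:.2f} TFLOPS / {A100_PEAK_TFLOPS} TFLOPS per A100 "
      f"-> {n_gpus} GPUs, over {n_gpus * A100_PRICE_CNY:,} yuan in GPUs alone")
```

About 24.88 TFLOPS divided by 19.5 TFLOPS is roughly 1.28, so even under these ideal assumptions the workload needs two A100s, or more than 120,000 yuan in GPUs at the quoted price.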

NVIDIA A100 chip

Beyond improving general-purpose GPU performance, dedicated hardware and software optimization for ray tracing appears to be an important way to boost computing performance for Metaverse applications. Because ray tracing is a major computing task in these applications, specialized ray tracing hardware can be designed for it. One example is NVIDIA's RTX series of GPUs, which include dedicated RT cores to accelerate ray tracing. Such hardware performs some computationally intensive operations directly at the hardware level, such as computing where rays intersect objects in the scene, greatly improving efficiency. On the software side, ray tracing optimizations include better ray sorting algorithms and more efficient spatial partitioning structures.
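
The core per-ray operation that such hardware speeds up is the intersection test between a ray and scene geometry. RT cores accelerate bounding-volume traversal and ray-triangle tests; the sketch below uses a ray-sphere test instead, as the simplest correct example of the same idea: solving a quadratic for the distance along the ray.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def ray_sphere_hit(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along the ray to the nearest hit in front of the origin, or None on a miss.

    Solves |origin + t*direction - center|^2 = radius^2 for t, assuming `direction` is unit length.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)            # half of the usual quadratic 'b' term
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None                   # the ray misses the sphere
    root = math.sqrt(disc)
    t = -b - root                     # nearer intersection
    if t < 0:
        t = -b + root                 # origin is inside the sphere: use the far hit
    return t if t >= 0 else None      # both hits behind the origin


print(ray_sphere_hit((0, 0, 0), (0, 0, 1), (0, 0, 5), 1.0))  # hit at t = 4.0
```

A full renderer runs a test like this (or its triangle equivalent) billions of times per second, which is why moving it into dedicated hardware pays off.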

Currently, the calculation and rendering of the Metaverse cannot be done without the GPU, and there are two main sources of GPU computing power, one is the local GPU, and the other is the cloud GPU. Local GPUs are mainly used for scenes that require real-time rendering, such as games and AR/VR devices. The GPU in the cloud can be used for more complex rendering tasks, such as movie special effects, architectural visualization, etc. The results of cloud rendering are transmitted to the user's device through the network.

The ideal computational rendering solution should be a hybrid rendering system that integrates local and cloud resources. This system should be able to intelligently decide whether to perform rendering tasks locally or in the cloud, and how to allocate tasks based on the nature of the task, network conditions, device performance and other factors. In addition, this system should also support various rendering technologies, such as ray tracing, real-time lighting, global illumination, etc., so that the appropriate rendering technology can be selected according to needs.
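
The local-versus-cloud decision described above could start as a simple rule evaluated per frame or per task. The sketch below is hypothetical: the FrameConditions fields, the thresholds and the "local-reduced" fallback are illustrative assumptions, not an existing system's API.

```python
from dataclasses import dataclass


@dataclass
class FrameConditions:
    """Inputs to a hypothetical per-frame placement decision (all names are illustrative)."""
    task_tflops: float        # estimated compute the frame needs
    local_tflops: float       # compute the headset can sustain
    downlink_gbps: float      # measured network bandwidth
    rtt_ms: float             # measured network round-trip time
    stream_gbps: float        # bandwidth the cloud-rendered video stream would need
    latency_budget_ms: float  # how much round-trip delay the experience can tolerate


def choose_renderer(c: FrameConditions) -> str:
    """Return 'local', 'cloud', or 'local-reduced' using a simple rule of thumb."""
    if c.task_tflops <= c.local_tflops:
        return "local"            # the device can handle it: avoid the network entirely
    network_ok = c.downlink_gbps >= c.stream_gbps and c.rtt_ms <= c.latency_budget_ms
    if network_ok:
        return "cloud"            # offload the heavy frame
    return "local-reduced"        # fall back to lower quality on the device


print(choose_renderer(FrameConditions(25.0, 5.0, 3.0, 10.0, 2.0, 20.0)))  # cloud
```

A real system would also weigh factors such as cost, battery state and the kind of rendering technique each task needs; this rule only illustrates the structure of the decision.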

Achieving this requires high-speed data transmission between the local device and the cloud (or edge). So how much network bandwidth does the Metaverse require?

2. 5G is barely enough, but the progress of 5G network construction is not as expected.

Let's take the typical Metaverse computing requirement above as an example (4K resolution, 60 frames/second, full ray tracing with 100 rays traced per pixel) and estimate how much network bandwidth is needed if rendering is done on the cloud (edge) side.

If we simplify the color information of each pixel to 24 bits (8-bit red, 8-bit green, 8-bit blue), we can calculate the required network bandwidth:

3840 pixels * 2160 pixels * 24 bits/pixel * 60 frames/second = 11,943,936,000 bits/second ≈ 11.94 Gbps (uncompressed).

The peak data rate of a 5G network can theoretically reach 20 Gbps, but that peak is achieved only in an ideal laboratory environment. In actual use, users can usually expect speeds between 100 Mbps and 3 Gbps, still some distance from the Metaverse's network requirements. Even a good 5G network is only barely adequate.
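
The bandwidth arithmetic and the comparison with 5G fit in one short script. It computes the uncompressed stream rate from the text's figures and the minimum compression ratio needed to fit each link speed quoted above; it does not model any particular video codec.

```python
def raw_stream_gbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Uncompressed video bit rate in Gbps (decimal, 1 Gbps = 1e9 bit/s)."""
    return width * height * bits_per_pixel * fps / 1e9


def compression_needed(stream_gbps: float, link_gbps: float) -> float:
    """Minimum compression ratio for the stream to fit the link (below 1 means it already fits)."""
    return stream_gbps / link_gbps


need = raw_stream_gbps(3840, 2160, 24, 60)
print(f"uncompressed stream: {need:.2f} Gbps")
# Link speeds quoted in the text: the typical 5G range and the theoretical peak.
for label, link in (("5G typical low", 0.1), ("5G typical high", 3.0), ("5G peak", 20.0)):
    print(f"{label:>15}: {link:>5} Gbps -> needs {compression_needed(need, link):.1f}x compression")
```

Against the 3 Gbps upper end of typical 5G speeds, the raw 11.94 Gbps stream needs about 4x compression; against 100 Mbps it needs about 119x.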

It should be noted that even in China, which has the most 5G base stations in the world, 5G network construction is not progressing very quickly. By some estimates, a good 5G network needs at least 10 million base stations. As of the end of February 2023, China had 2.384 million 5G base stations in total, and it will take several more years to exceed 10 million. In other words, in terms of network infrastructure, we are not yet ready for the Metaverse.

Obviously, there are currently bottlenecks in terms of computing power and network. So, how to break this bottleneck? To this end, Dataman interviewed several industry experts.

On the question "Are GPU cloud computing and edge computing the golden key to relieving the Metaverse's computing power bottleneck?", Zhang Xuebing, CEO of Youli Technology, believes that cloud-edge-device collaboration is not a good way to solve the computing power problem. "By concentrating rendering logic on the server side, cloud rendering only relieves the rendering problems of a small number of terminal devices for a limited period of time. Terminal devices are trending toward ever higher configurations and ever stronger computing power, while stacking up cloud rendering resources is inefficient from both a cost and a concurrency perspective and wastes the computing power of local devices. Rendering with local computing power only needs to break the local rendering bottleneck to solve concurrency at scale, whereas cloud rendering and edge-computing GPUs must solve many problems such as server concurrency, load balancing, video streaming bandwidth and cloud computing bottlenecks. Once rendering algorithms break through the local device's rendering bottleneck, all cloud rendering infrastructure will become a thing of the past."

Zhang Xuebing explained that Youli Technology uses its own domestically developed streaming rendering technology to solve the remote rendering of massive online 3D data with local computing power. Because it renders on the CPU, it does not require high-end graphics cards, which breaks through the high cost and low data concurrency of cloud rendering and solves problems that traditional cloud rendering cannot.

Liang Feng, CTO of Yuntian Bestseller, believes that "in the 5G era, demand for computing power from emerging real-time, fast-response scenarios such as cloud gaming and the Metaverse has grown exponentially. In particular, the accelerating integration of AI and the Metaverse, and the gradual shift of computing power from To B to To C, have opened up huge room for growth. To achieve large-scale commercial applications and give users a low-latency, immersive experience, current computing power is far from sufficient. With its unique and powerful parallel computing capabilities, the GPU has gradually become an important direction for the market to explore as the computing foundation for emerging scenarios. Combining cloud computing with edge computing offers a new path to the large-scale commercialization of emerging applications. The integration of computing and networks, the convergence of cloud and network, and the creation of a computing power network with full coverage are becoming industry trends."

Chen Guo, deputy general manager of AsiaInfo Technology R&D Center, holds the same view. He believes that "to achieve the ideal state of the Metaverse, existing computing resources are far from enough: ultimate visual rendering, real-time virtual-real interaction and accurate intelligent reasoning all place extremely high demands on computing resources. Future 'cloud-edge-end' multi-tier computing collaboration will better meet the Metaverse's computing needs. At the same time, GPUs and related technologies are key to removing the Metaverse's computing power bottleneck. GPU computing power accelerates graphics rendering, provides physical simulation capabilities and helps XR technology deliver the immersive experience the Metaverse requires; AIGC offers an efficient, high-precision, low-cost means of building Metaverse scenes, enabling rapid modeling of 'people, places and goods'; and WebGPU makes high-fidelity, truly 3D interactive applications possible, which will greatly improve browser graphics performance and remove front-end performance bottlenecks for future Metaverse applications."

Perceptual interaction is another major challenge in building the metaverse. To create a truly immersive experience, it is necessary to ensure that users can interact with the metaverse naturally and intuitively, which requires covering multiple sensory dimensions such as sight, hearing, and touch.

Judging from Apple's Vision Pro, the product combines multiple interaction methods: hand, eye and voice interaction together with EyeSight. Notably, Vision Pro does not come with a handheld controller, which sets it apart from previous XR products.

Apple Vision Pro product demonstration picture

Vision Pro is operated by voice, eye tracking and gestures. Users browse app icons by looking at them, tap their fingers together to select, swipe to scroll, or issue voice commands; they can also enter text on a virtual keyboard.
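
The look-then-tap pattern described above can be sketched as a tiny event handler: the gaze point acts as the pointer and the hand gesture acts as the click. This is a hypothetical illustration with made-up Icon geometry and function names; it is not Apple's visionOS API, and the gaze point and pinch flag are assumed to come from the eye- and hand-tracking systems.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Icon:
    """An app icon laid out on a 2D panel (hypothetical units)."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px: float, py: float) -> bool:
        return self.x <= px <= self.x + self.w and self.y <= py <= self.y + self.h


def hovered_icon(icons: List[Icon], gaze: Tuple[float, float]) -> Optional[Icon]:
    """The icon under the gaze point, if any (eye tracking acts as the pointer)."""
    for icon in icons:
        if icon.contains(*gaze):
            return icon
    return None


def handle_frame(icons: List[Icon], gaze: Tuple[float, float], pinched: bool) -> Optional[str]:
    """Select the looked-at icon when the hand gesture fires; otherwise do nothing."""
    icon = hovered_icon(icons, gaze)
    if icon is not None and pinched:
        return icon.name
    return None


icons = [Icon("Photos", 0, 0, 100, 100), Icon("Safari", 120, 0, 100, 100)]
print(handle_frame(icons, gaze=(150, 40), pinched=True))  # Safari
```

Separating "where" (gaze) from "when" (gesture) means the hands never have to reach toward the target, which is one way a controller-free design can stay low-effort.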

Apple Vision Pro product demonstration picture

It should be noted that Vision Pro is still a "futures" product that will not go on sale until early next year. How many of the features shown in the demonstration video actually work, and how well, will only become clear once the product launches and users experience it firsthand.

Regarding perceptual interaction, Apple did not disclose many technical details. Next, we analyze the core technical aspects of perceptual interaction from an industry perspective. Generally speaking, the perceptual interaction methods of XR devices can be divided into visual interaction, auditory interaction, tactile interaction, and gesture interaction.

Visual interaction relies mainly on virtual reality (VR) and augmented reality (AR) technologies. Mature VR and AR devices already let users move through and observe the metaverse from a first-person perspective. However, these devices usually require wearing a headset, which challenges comfort and long-term use. In addition, providing enough visual resolution and field of view for the experience to feel as natural and real as the real world remains an important technical challenge.

Auditory interaction relies on 3D audio technologies, including spatial audio and object-based audio. These create a sound field with depth and direction, letting users tell where a sound comes from and how far away it is. However, current 3D audio still struggles to deliver a completely natural listening experience, especially when simulating complex acoustic environments and the physical behavior of sound.
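
Two of the cues that let listeners locate a sound are the interaural time difference (the sound reaches the nearer ear first) and loudness falling with distance. The sketch below computes both, using Woodworth's spherical-head approximation for the time difference and simple inverse-distance attenuation; the head radius is an assumed average, and real spatial audio engines use head-related transfer functions (HRTFs) that capture far more than these two cues.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C


def itd_seconds(azimuth_rad: float) -> float:
    """Interaural time difference from Woodworth's spherical-head approximation.

    azimuth 0 = straight ahead, +/- pi/2 = fully to one side; used here only for |azimuth| <= pi/2.
    The sign indicates which ear hears the sound first.
    """
    if abs(azimuth_rad) > math.pi / 2:
        raise ValueError("approximation used here only for |azimuth| <= pi/2")
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (azimuth_rad + math.sin(azimuth_rad))


def distance_gain(distance_m: float, ref_m: float = 1.0) -> float:
    """Inverse-distance amplitude attenuation, clamped to 1.0 inside the reference distance."""
    return ref_m / max(distance_m, ref_m)


for deg in (0, 30, 90):
    print(f"azimuth {deg:>2} deg: ITD = {itd_seconds(math.radians(deg)) * 1000:.3f} ms")
print(f"gain at 4 m: {distance_gain(4.0):.2f}")
```

The time difference grows from 0 ms for a source straight ahead to about 0.66 ms for a source directly to one side, a tiny interval the brain nonetheless uses to judge direction.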

Tactile interaction is one of the biggest challenges. Although there are various haptic feedback devices, such as vibrating controllers and haptic suits, their feedback strength and precision still fall short of real-world touch. More advanced technologies, such as electrical stimulation and ultrasonic haptic feedback, are under development but not yet mature.

Compared with the above methods, gesture interaction, as an intuitive and natural way of interaction, is regarded as an ideal means of interaction in the metaverse.

There are two core links in gesture interaction: capture and analysis. Capture refers to collecting movement information of the user's hand through various technologies, while analysis processes this information and identifies specific gestures and movements.

Capture technology is mainly divided into external capture and internal capture. External capture often relies on cameras or sensors to record the position and movement of the hand, as is the case with Microsoft's Kinect and Leap Motion. Internal capture uses wearable devices, which are usually equipped with a series of sensors, such as accelerometers, gyroscopes, magnetometers, etc., which can capture the displacement, rotation and acceleration of the hand in three spatial dimensions.
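
Internal capture typically fuses several sensors because each has weaknesses: gyroscope readings drift when integrated, and accelerometer readings are noisy. A complementary filter is one common, simple fusion technique; the single-axis sketch below illustrates it under assumed parameters (alpha = 0.98, 100 Hz sampling) and does not describe any particular product, many of which use Kalman-style filters instead.

```python
import math


def complementary_filter(angle_deg: float, gyro_rate_dps: float,
                         accel_y: float, accel_z: float,
                         dt: float, alpha: float = 0.98) -> float:
    """One update of a single-axis complementary filter.

    The gyroscope rate is integrated for smooth short-term tracking (it drifts over time);
    the accelerometer's gravity direction gives a noisy but drift-free tilt estimate.
    alpha weights the gyro path; (1 - alpha) slowly pulls the estimate toward the accelerometer.
    """
    gyro_angle = angle_deg + gyro_rate_dps * dt
    accel_angle = math.degrees(math.atan2(accel_y, accel_z))
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


# Simulated hand held still at a 30-degree tilt: gyro reads 0, gravity splits across y and z.
tilt = math.radians(30)
angle = 0.0
for _ in range(500):  # 500 samples at an assumed 100 Hz, i.e. 5 seconds
    angle = complementary_filter(angle, 0.0, math.sin(tilt), math.cos(tilt), dt=0.01)
print(f"estimated tilt after 5 s: {angle:.2f} deg")
```

Starting from a wrong estimate of 0 degrees, the filter converges to the true 30-degree tilt, while fast rotations would be tracked mainly through the gyroscope term.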

Internal capture can be considered a very promising interaction method. Because the sensors are in direct contact with the hand, hand motion can be captured stably and accurately regardless of the user's environment or lighting conditions. Internal capture devices are also usually small and easy to wear, allowing gesture interaction anytime and anywhere. Its biggest technical difficulties are sensor accuracy and the ability to recognize complex gestures. Hand movement is very complex, and capturing it accurately and converting it into virtual movement in real time places high demands on sensor accuracy and processing speed. Currently, internal capture handles basic hand movements well, but more complex gestures, such as tiny finger movements, can be difficult to identify accurately. If high-precision recognition of complex gestures can be achieved, internal capture will become the ideal interaction method for the metaverse.

Analysis technology relies mainly on computer vision and machine learning algorithms. Computer vision processes the images or video from capture devices to extract the key points and contours of the hand, and machine learning algorithms, especially deep learning, analyze these data to recognize specific gestures. Current deep learning approaches require large amounts of annotated training data, but because hands are so complex and varied, collecting and labeling this data is very difficult.
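
Once the hand's key points have been extracted, the analysis step maps them to a gesture label. The sketch below replaces the deep learning classifier described above with a single hand-written rule for a pinch, purely to show the input and output of this step; the keypoint names and the 0.3 threshold are hypothetical.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float, float]


def classify_gesture(keypoints: Dict[str, Point], pinch_ratio: float = 0.3) -> str:
    """Rule-based stand-in for a learned gesture classifier.

    Expects hypothetical keypoint names: 'wrist', 'middle_mcp', 'thumb_tip', 'index_tip'.
    The thumb-index gap is divided by hand size (wrist to middle-finger knuckle) so the rule
    does not depend on hand size (or, for image-space keypoints, on distance from the camera).
    """
    hand_size = math.dist(keypoints["wrist"], keypoints["middle_mcp"])
    if hand_size == 0:
        raise ValueError("degenerate hand: wrist and knuckle coincide")
    gap = math.dist(keypoints["thumb_tip"], keypoints["index_tip"])
    return "pinch" if gap / hand_size < pinch_ratio else "open"


hand = {
    "wrist": (0.0, -0.10, 0.0),
    "middle_mcp": (0.0, 0.0, 0.0),
    "thumb_tip": (0.0, 0.02, 0.0),
    "index_tip": (0.01, 0.02, 0.0),
}
print(classify_gesture(hand))  # pinch
```

A hand-written rule like this breaks down quickly for subtle or unusual gestures, which is exactly why the field has moved to learned classifiers and their heavy data requirements.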

Beyond capture and analysis, another problem must be solved: interaction with virtual objects. How users can grab, move and manipulate objects with gestures in a virtual environment, and how to give them tactile feedback while doing so, are both open issues. One possible approach is to use a virtual hand model that maps the user's gestures onto the movements of a virtual hand, which then manipulates virtual objects. At the same time, more effective tactile feedback technologies, such as electrical stimulation and ultrasound, need further research.
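
The virtual-hand approach can be sketched as a per-frame state update: the tracked hand position and a pinch flag decide whether an object is grabbed, carried or released. Everything here is hypothetical (objects modeled as spheres, a fixed grab margin, no physics or haptics), meant only to show the structure of the interaction.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VirtualObject:
    """A grabbable object approximated by a sphere (hypothetical scene model)."""
    position: Vec3
    radius: float
    held: bool = False


def update_grab(obj: VirtualObject, hand_pos: Vec3, pinching: bool,
                grab_margin: float = 0.02) -> VirtualObject:
    """Advance the virtual hand's interaction with one object by a single frame.

    Grab: pinch while the hand is within the object's radius plus a margin.
    Hold: while the pinch continues, the object follows the hand.
    Release: once the pinch ends, the object stays where it was let go.
    """
    near = math.dist(hand_pos, obj.position) <= obj.radius + grab_margin
    if pinching and (obj.held or near):
        obj.held = True
        obj.position = hand_pos
    else:
        obj.held = False
    return obj


cup = VirtualObject(position=(0.0, 0.0, 0.0), radius=0.05)
update_grab(cup, (0.03, 0.0, 0.0), pinching=True)   # grabbed
update_grab(cup, (0.50, 0.2, 0.0), pinching=True)   # carried along
update_grab(cup, (0.50, 0.2, 0.0), pinching=False)  # released
print(cup)
```

The moment of grabbing is also where haptic feedback would be triggered, which is why the two problems are usually discussed together.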

Clearly, perceptual interaction still faces a series of unsolved technical problems.

Apple is a master at building content ecosystems, and at the Vision Pro launch it also announced progress on content. For example, Complete HeartX creates an interactive 3D heart, and JigSpace lets designers visually review their F1 race car design drafts.

Vision Pro product introduction picture

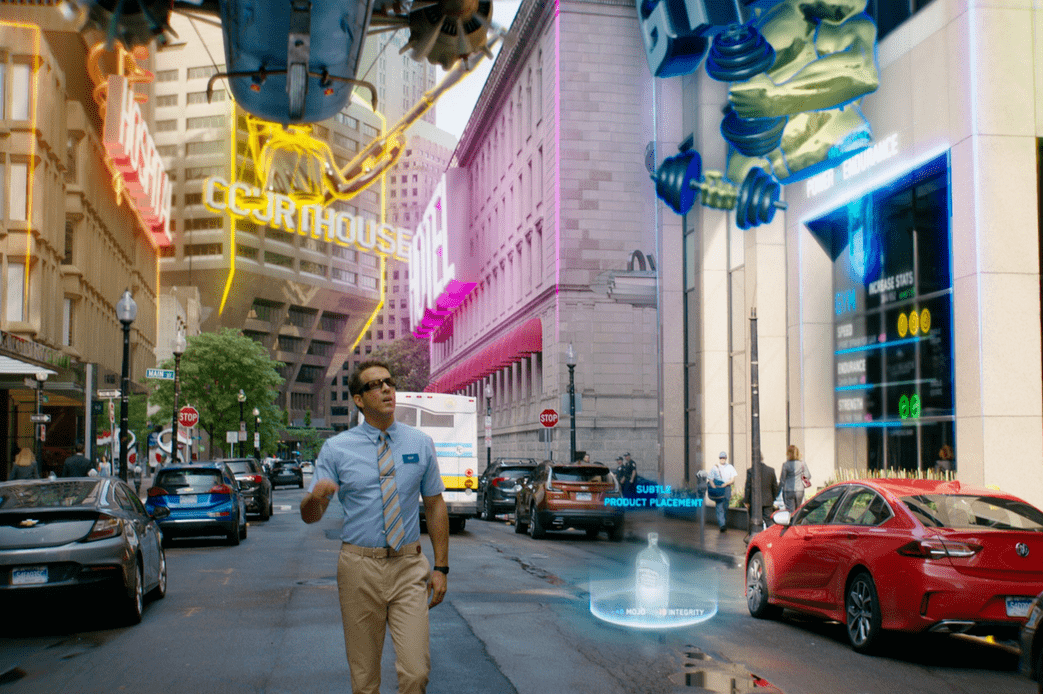

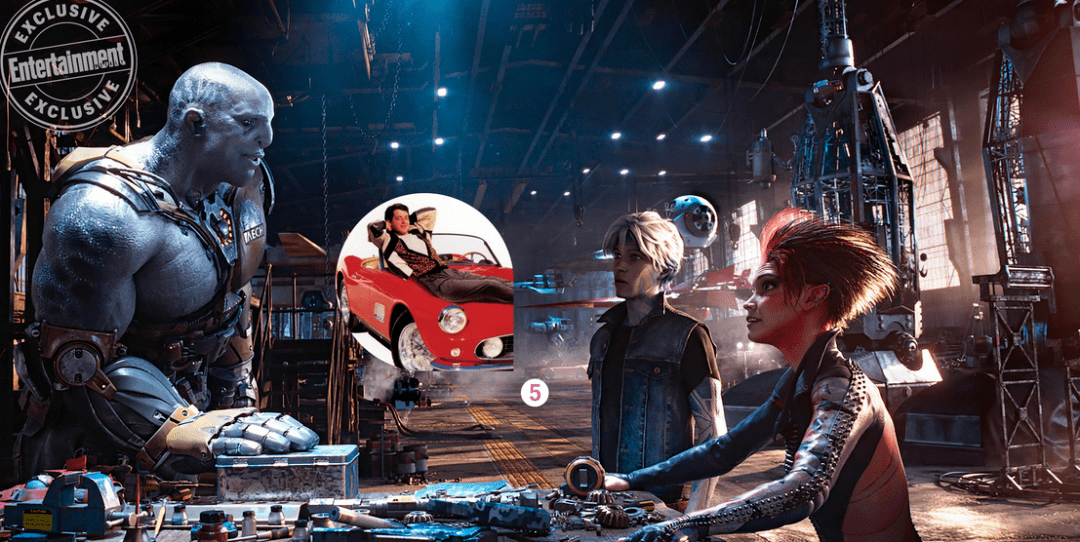

It should be noted that the content production capabilities Apple demonstrated are still far from our ideal metaverse. What we want is a metaverse like the ones in "Ready Player One" or "Free Guy", not just beautiful 3D "exhibits". To achieve this goal, we need to explore the underlying logic of metaverse content production.

In the Metaverse, the environment, users' virtual representations, and non-player characters (NPCs) constitute the core content of the virtual world; together they build its worldview and storylines. The environment provides the physical and cultural context of the virtual world and defines its rules and workings. It is the foundation of the virtual world and constitutes the spatial dimension of the metaverse. A user's virtual representation, or player character, is the user's identity and agent in the virtual world: the tool through which users participate in and experience that world, reflecting their wishes and behaviors.

Ideally, NPCs provide diverse characters and storylines, enriching the content and experience of the virtual world. They drive activity in the virtual world and help users participate in and understand it. The plasticity and creativity of NPCs give the Metaverse's storylines endless possibilities: by interacting with NPCs, users can experience a wide variety of stories and adventures. Users' virtual representations can also gain special "privileges" or abilities through interactions with NPCs, which help them achieve their goals and raise their identity and status in the virtual world. NPCs are therefore not only the story drivers of the metaverse but also an important source of users' success and satisfaction in it.

However, to achieve the above goals, NPCs need to have a high level of intelligence, which is far beyond the reach of NPCs in the current metaverse. Current NPCs mostly rely on preset dialogues and behaviors, which may make them seem rigid and lack realism and personalization when responding to player actions. At the same time, NPCs also have limitations in advancing storylines and conducting ongoing interactions.

In this context, the potential and advantages of using AI technology, especially AIGC and ChatGPT, to optimize Metaverse content production and build intelligent NPCs are particularly prominent.

Cocos CEO Lin Shun believes that combining AIGC with the Metaverse can greatly improve the efficiency and creativity of content generation. AIGC can take on heavy, repetitive content creation work, saving developers time and energy while producing more diverse and rich game content. With AIGC, worlds and scenes can be generated automatically, speeding up Metaverse content creation, making the Metaverse more colorful and serving a wide range of needs. Examples include automatically generating quests and plots to make Metaverse content more vivid and interesting; automatically generating NPC and character designs, helping developers create personalized NPCs and character images that make the metaverse's characters more diverse and distinctive; and automatically generating realistic sound effects and dynamic music, making Metaverse scenes more immersive.

Lin Shun also believes that AI can give NPCs in the metaverse more realistic and intelligent interactions. NPCs with "souls" can form a dynamic world: they can react to the player's actions and decisions, making the game world more realistic and vivid and advancing its development. Using natural language processing and emotion recognition, they can understand players' instructions and emotions and respond accordingly, providing a more personalized and richer gaming experience. AI gives NPCs a more realistic "soul" or personality: through emotion modeling and cognitive models, NPCs can display complex emotional states, personality traits and behavioral patterns, deepening the emotional connection and interaction between players and NPCs.

Lin Yu, chief product officer of Mo Universe, expressed a similar view: "AIGC will greatly improve the production efficiency of the Metaverse across its three core elements: people, objects, and scenes. For example, in digital-human image modeling and design, it improves design efficiency; in building the 'brain' of digital humans, it greatly raises their intelligence; and AIGC for 2D and 3D images and video can improve the efficiency of modeling and designing objects and scenes. AIGC can give NPCs a brain, or 'soul', and greatly raise their intelligence, for example through intelligent question answering between NPCs and users, covering not only text but also voice, as well as multimodal communication involving images and video."

Finally, DataMesh founder and CEO Li Jie noted that the enterprise metaverse still faces a "chicken-and-egg problem": high-quality content attracts users, but without enough users, that high-quality content cannot be produced and maintained. This is a classic network-effect problem.

Li Jie believes that under the TEMS (Training, Experience, Monitoring and Simulation) model of the enterprise metaverse, one possible solution is to use Simulation and Training to provide the initial drive for Metaverse content generation. In the early stage, companies can build specific simulation scenarios to train and educate their own employees, which not only improves employees' skills but also supplies the Metaverse with its first active users and content.

Over time, as employees adapt to and come to rely on this new way of working, the user base of the enterprise metaverse will grow, and these users will generate large amounts of interaction data. This data can be collected and analyzed to further optimize the Metaverse's Experience and Monitoring (monitor and control) functions, forming a virtuous cycle.

Asked about the Metaverse's killer application, Li Jie believes it may be a solution that perfectly integrates all four aspects of TEMS: for example, an application that simulates complex business processes in real time, provides rich and customized training content, offers efficient monitoring and control, and delivers a seamless, immersive user experience. Such an application could not only greatly improve a company's productivity and employee job satisfaction but also drive its continuous innovation and development.

We have now analyzed some of the core technical areas and challenges of XR and the Metaverse. Finally, I hope that companies like Apple can keep breaking through technical bottlenecks and lower prices as soon as possible (a price of more than RMB 20,000 is hardly within reach of ordinary people), so that a colorful metaverse like the one in "Ready Player One" can arrive sooner.

But to be honest, I am not optimistic about the XR headset Apple has just released. Apple is a great company, and its product capabilities in consumer electronics are unmatched. However, the XR device and Metaverse industries still face significant technical bottlenecks. Breaking through them requires the efforts of the entire industry and takes time; no single company can do it alone. When Apple released the iPhone, it was not Apple alone that brought humanity into the mobile Internet era. In fact, humanity already had one foot in that era; Apple simply made the best product and reaped the biggest rewards. Just imagine: if our networks were still stuck at 2G or even 1G, would the iPhone still be useful? Apple is great, but no company, however great, can transcend its times.

From a rational perspective, considerable challenges remain in each of the areas discussed above: near-eye display, computational rendering, perceptual interaction, and content production. Even a company as powerful as Apple has only made the best product possible under current industry conditions, and that product is still far from our ideal of the metaverse. It is foreseeable that when large numbers of users actually try Vision Pro, they will most likely find some good designs and breakthroughs compared with previous XR products, but overall it will still fall short of expectations and leave plenty to complain about. For example, according to reports, the external battery connected to Vision Pro by a cable lasts only 2 hours.

Text: Yi Li Yanyu/Data Monkey
