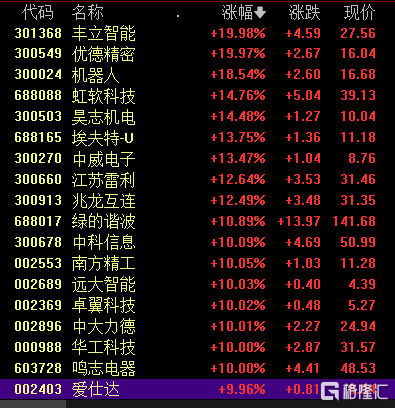

On May 18, robot concept stocks rallied, with a wave of them hitting the daily limit. As of publication, Fengli Intelligent and Youde Precision had hit the 20% daily limit; Robot, Haozhi Electromechanical, Jiangsu Leili, and Green Harmonic had risen by more than 10%; and Southern Seiko, Yuanda Intelligence, Huagong Technology, and others had hit the daily limit.

On the news front, at the ITF World 2023 semiconductor conference, NVIDIA CEO Huang Renxun (Jensen Huang) said that the next wave of artificial intelligence will be embodied intelligence (embodied AI), that is, intelligent systems that can understand, reason about, and interact with the physical world.

In addition, at the Tesla shareholders meeting on May 16, Musk stated that humanoid robots will be Tesla’s main long-term source of value. He devoted considerable time to robots and speculated that demand for the humanoid robot Optimus could reach 10 billion units, possibly on the order of tens of billions, far exceeding the demand for automobiles.

Favored by both Tesla and NVIDIA at the same time, the humanoid robot sector deserves close attention.

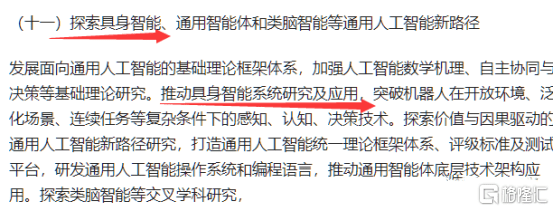

In addition, Beijing has released several measures to promote the development of general artificial intelligence, and they also address embodied intelligence: promote the research and application of embodied intelligence systems, and achieve breakthroughs in robot perception, cognition, and decision-making technologies under complex conditions such as open environments, generalized scenarios, and continuous tasks.

Artificial intelligence adds a new term

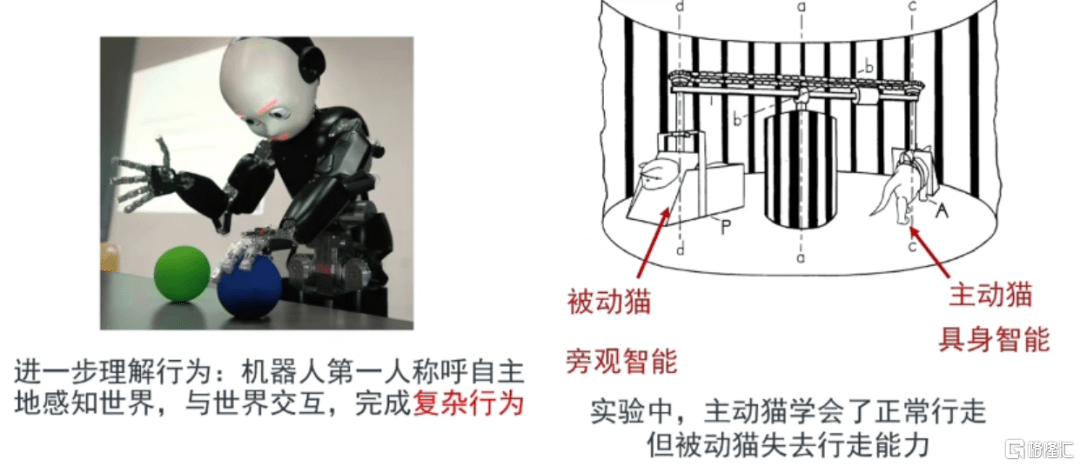

The concept of "embodied intelligence" dates back to 1950, when Turing first proposed it in his paper "Computing Machinery and Intelligence". It refers to a kind of machine intelligence with autonomous decision-making and action capabilities: one that can perceive and understand its environment as humans do, and complete tasks through autonomous learning and adaptive behavior.

More specifically, robots or simulators (the latter referring to virtual environments) that can interact with and perceive their environment as humans do, and that can autonomously plan, decide, act, and execute, are the ultimate form of AI. For now, we call them embodied intelligent robots. Building them draws on a variety of artificial intelligence technologies, such as computer vision, natural language processing, and robotics.

As Li Feifei, a professor of computer science at Stanford University, said, "The meaning of embodiment is not the body itself, but the overall needs and functions of interacting with the environment and doing things in the environment."

Simply put, embodied intelligent robots can understand human language and complete the corresponding tasks. Grand as that ideal is, in reality robots can at best "understand human language", and people still rely heavily on handwritten code to control them.

Dieter Fox, senior director of robotics research at NVIDIA and a professor at the University of Washington, pointed out that a key goal of robotics research is to build robots that are helpful to humans in the real world. But to do this, they must first be exposed to and learn how to interact with humans.

The next wave of AI

Giants are investing in the humanoid robot track. Tesla launched its Optimus prototype last year, and more recently OpenAI's venture fund led the A2 financing round of the Norwegian robot manufacturer 1X Technologies. ChatGPT is also expected to help improve the perception capabilities of humanoid robots and accelerate change in the industry.

The emergence of large models such as GPT has provided new ideas: many researchers have tried to combine multi-modal large language models with robots. By jointly training on images, text, and embodied data, and by introducing multi-modal input, they enhance the model's understanding of real-world objects and help robots handle embodied reasoning tasks.

The AI teams at Google and Microsoft are at the forefront, trying to use large models to give robots a soul.

On March 8, a team from Google and the Technical University of Berlin launched PaLM-E, the largest visual language model to date, with up to 562 billion parameters (GPT-3 has 175 billion). As a multi-modal embodied visual language model (VLM), PaLM-E can not only understand images but also understand and generate language, and it can execute a variety of complex robot instructions without retraining. Google researchers plan to study more real-world applications of PaLM-E, such as home automation or industrial robots, and hope that it will encourage more research on multi-modal reasoning and embodied AI.

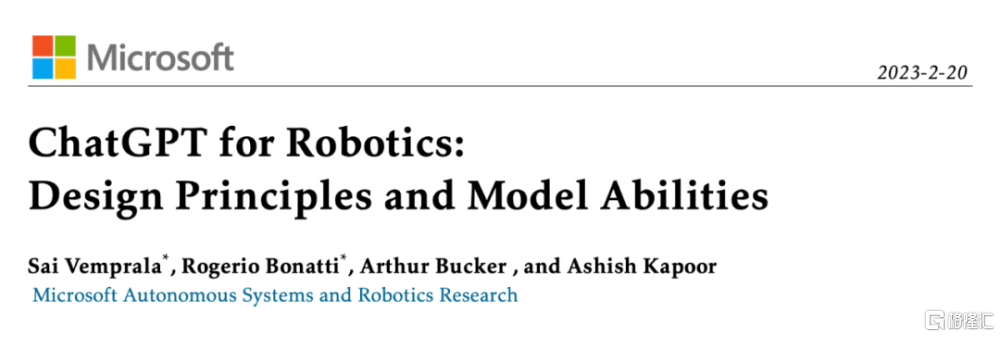

In a recent study, a Microsoft team explored how to extend ChatGPT, developed by OpenAI, into the field of robotics, so that people can use language to intuitively control multiple platforms such as robotic arms, drones, and home assistance robots. The researchers presented multiple examples of ChatGPT solving robotics problems, and also described using ChatGPT for complex robot deployments in manipulation, aerial, and navigation domains.

Google and Microsoft clearly have very similar expectations for embodied AI: people should be able to operate robots without learning complex programming languages or the details of the robot system. Stating a requirement to the robot, by voice or gesture, should be enough for the robot to complete the task, so that operating it feels as natural as an arm directing its fingers.

Therefore, large language models such as ChatGPT play a crucial role in realizing convenient human-computer interaction in embodied intelligence.

If the large models represented by ChatGPT have opened a new era of general AI, then multi-modal, embodied, active, and interactive artificial intelligence must be the only way forward in this era.
