The world is filled with data – images, videos, spreadsheets, audio and text generated by people and computers flood the internet, drowning us in a sea of information.

Traditionally, humans analyze data to make more informed decisions and seek to adjust systems to control changes in data patterns. However, as the amount of incoming information increases, our ability to understand it decreases, leaving us with the following challenge:

How do we use all this data to derive meaning in an automated rather than manual way?

This is where machine learning comes into play.

Machine learning systems make predictions by learning patterns from a set of data called "training data", and these predictions can drive further technological development to improve people's lives.

Machine learning is a concept that allows computers to automatically learn from examples and experience and imitate human decision-making without being explicitly programmed.

Machine learning is a branch of artificial intelligence that uses algorithms and statistical techniques to learn from data and derive patterns and hidden insights.

Now, let’s explore the ins and outs of machine learning in more depth.

There is a very large number of machine learning algorithms, which can be grouped by learning style or by the nature of the problem they solve. However, every machine learning algorithm contains the following key components:

The above is a detailed classification of the four components of machine learning algorithms.

Descriptive: The system collects historical data, organizes it, and then presents it in an easy-to-understand manner.

The main focus is to understand what has already happened in the enterprise rather than to draw inferences or predictions from the findings. Descriptive analytics uses simple mathematical and statistical tools such as arithmetic, averages, and percentages rather than the complex calculations required for predictive and prescriptive analytics.
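As a rough illustration of how little machinery descriptive analytics needs, the sketch below summarizes some historical figures with a total, an average, and percentage shares. The monthly sales numbers and the `describe` helper are hypothetical, chosen only for the example.

```python
from statistics import mean

# Hypothetical monthly sales figures (illustrative data only)
sales = {"Jan": 120, "Feb": 135, "Mar": 150, "Apr": 95}

def describe(data):
    """Summarize historical data with simple arithmetic: total, average, and each month's share."""
    total = sum(data.values())
    return {
        "total": total,
        "average": mean(data.values()),
        # Percentage of the total contributed by each month, rounded to one decimal place
        "share_pct": {month: round(100 * value / total, 1) for month, value in data.items()},
    }

summary = describe(sales)
print(summary)
```

Nothing here predicts anything; it only reorganizes what already happened into an easy-to-read form.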

Predictive: Whereas descriptive analysis mainly summarizes historical data, predictive analysis focuses on predicting and understanding possible future situations.

By analyzing patterns and trends in historical data, we can predict what may happen in the future.
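One of the simplest forms of this idea is fitting a trend line to past values and extending it forward. The sketch below assumes the data follows a straight-line trend over time and fits it by ordinary least squares; real predictive models are usually far richer, and `fit_trend`/`forecast` are hypothetical helper names.

```python
def fit_trend(ys):
    """Fit y = a + b * t by ordinary least squares, where t = 0, 1, 2, ... is the time index."""
    n = len(ys)
    ts = range(n)
    t_mean = sum(ts) / n
    y_mean = sum(ys) / n
    # Slope: covariance of (t, y) divided by the variance of t
    b = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys)) / sum((t - t_mean) ** 2 for t in ts)
    a = y_mean - b * t_mean
    return a, b

def forecast(ys, steps_ahead=1):
    """Extend the fitted trend line `steps_ahead` periods past the last observation."""
    a, b = fit_trend(ys)
    return a + b * (len(ys) - 1 + steps_ahead)

history = [100, 110, 120, 130]  # hypothetical past observations
print(forecast(history))
```

For this perfectly linear history the forecast for the next period is 140.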

Prescriptive: Descriptive analysis tells us what happened in the past, and predictive analytics tells us what is likely to happen in the future by learning from the past, while prescriptive analysis tells us how to act. Once we have insight into what might happen, what should we do?

This is where prescriptive analytics comes in. It helps the system use past knowledge to recommend multiple actions a person can take. Prescriptive analytics can also simulate scenarios and chart a path to the desired outcome.
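A toy version of "simulate scenarios, then recommend actions" might look like the sketch below. It assumes a predictive model has already produced a demand curve (here a hypothetical linear function, `predicted_demand`), simulates revenue for several candidate prices, and returns them ranked so that a person can choose among several recommendations.

```python
def predicted_demand(price):
    # Hypothetical demand model standing in for the output of a predictive model
    return max(0, 1000 - 20 * price)

def recommend_price(candidates):
    """Simulate each candidate price and rank the scenarios by expected revenue (best first)."""
    scenarios = [(price, price * predicted_demand(price)) for price in candidates]
    return sorted(scenarios, key=lambda scenario: scenario[1], reverse=True)

ranked = recommend_price([10, 20, 25, 30, 40])
best_price, best_revenue = ranked[0]
print(ranked)
```

With this assumed demand curve the top recommendation is a price of 25, yielding revenue of 12500; the rest of the ranked list gives fallback options.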

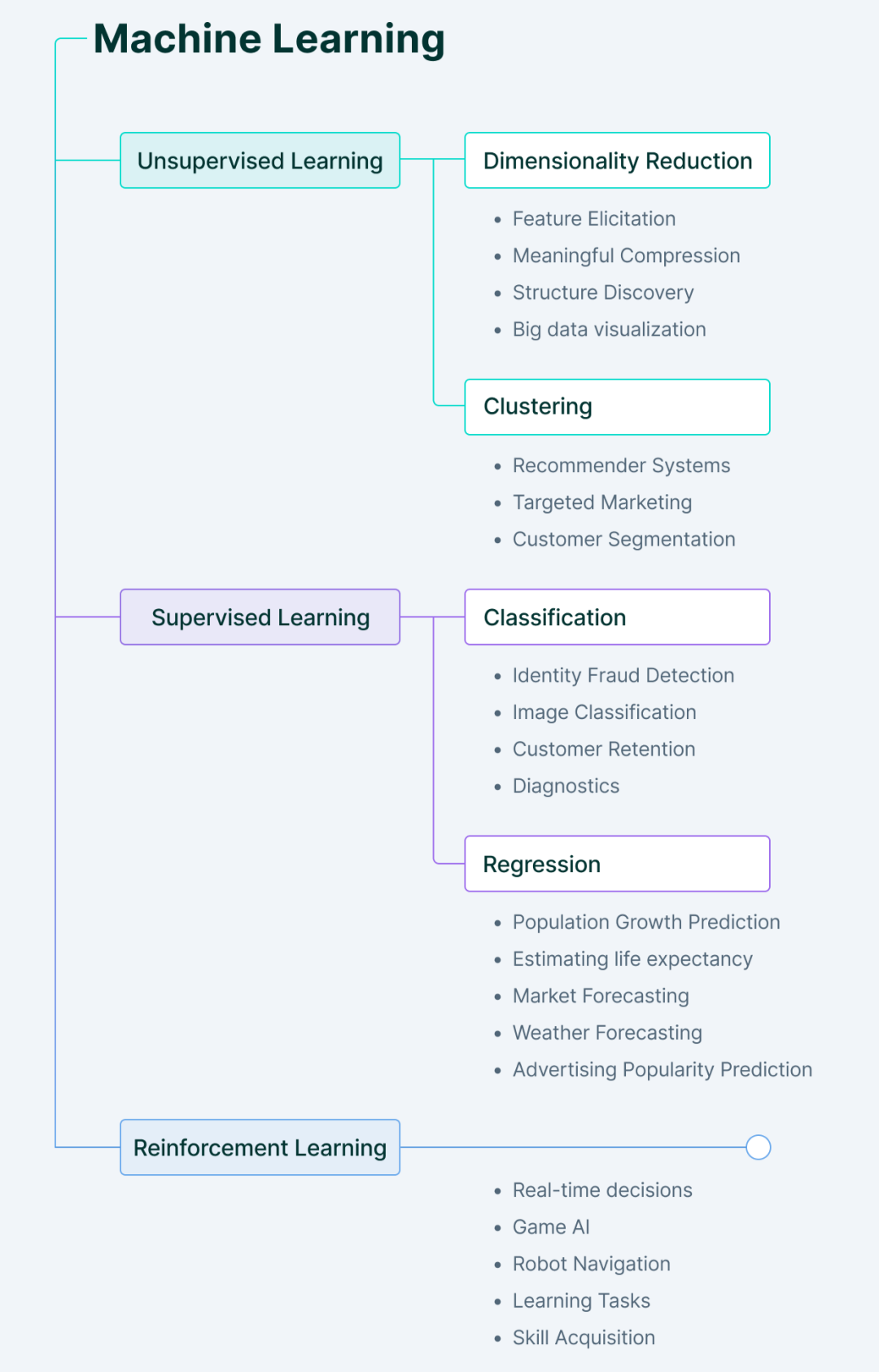

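The learning process of an ML algorithm can be divided into three main parts: making predictions, measuring how far those predictions are from the ground truth, and optimizing the model.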

Machine learning models are designed to learn patterns from data and apply this knowledge to make predictions. The question is: How does the model make predictions?

The process is fairly simple: find patterns in the input data (labeled or unlabeled) and apply them to derive a result.

Machine learning models are designed to compare the predictions they make to the ground truth. The goal is to understand whether the model is learning in the right direction. This comparison determines the accuracy of the model and suggests how we can improve its training.

The ultimate goal of the model is to improve predictions, which means reducing the difference between known results and corresponding model estimates.
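The article does not name a specific error function, but one common choice for numeric predictions is the mean squared error (MSE): the average of the squared differences between the known results and the model's estimates. A minimal sketch:

```python
def mean_squared_error(y_true, y_pred):
    """Average squared difference between ground-truth values and model estimates."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

ground_truth = [3.0, 5.0, 7.0]
estimates = [2.5, 5.0, 8.0]  # hypothetical model outputs
print(mean_squared_error(ground_truth, estimates))
```

A value of 0 means every estimate matches the ground truth; squaring penalizes large misses more heavily than small ones.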

The model adapts to the training data samples by continuously updating its weights. The algorithm works in a loop, evaluating the results, updating the weights, and repeating until the model's accuracy stops improving.
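The evaluate-update loop can be sketched with gradient descent, a widely used optimization method (the article does not say which optimizer it has in mind). The sketch assumes a linear model `y ≈ w * x + b` with two weights, uses mean squared error as the error measure, and stops once the error effectively stops decreasing. The learning rate and tolerance values are illustrative choices.

```python
def train_linear(xs, ys, lr=0.05, max_epochs=5000, tol=1e-10):
    """Fit y ≈ w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    prev_loss = float("inf")
    for _ in range(max_epochs):
        # Evaluate: compare current predictions with the known results
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        # Stop once the loss has effectively stopped improving
        if prev_loss - loss < tol:
            break
        prev_loss = loss
        # Optimize: move each weight against the gradient of the loss
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]  # generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))
```

On this noise-free data the learned weights approach w = 2 and b = 1. If the learning rate is too large the loop can diverge, which is why it is usually tuned.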

There are four main types of machine learning.

In supervised learning, as the name suggests, the machine learns under guidance.

This is done by feeding the computer a set of labeled data so that the machine understands what the input is and what the output should be. Here, humans act as guides, providing the model with labeled training data (input-output pairs) from which the machine learns patterns.

Once the machine has learned the relationship between input and output from previous datasets, it can predict the output values for new data.
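To make "learn from input-output pairs, then predict new data" concrete, here is a minimal k-nearest-neighbors classifier, one of the simplest supervised methods (chosen here for brevity, not named in the article). The labeled points and class names are made up for illustration.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify `query` by majority vote among the k closest labeled training examples."""
    # `train` is a list of ((feature1, feature2), label) input-output pairs
    by_distance = sorted(train, key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical labeled training data
train = [((1.0, 1.0), "small"), ((1.5, 2.0), "small"), ((2.0, 1.5), "small"),
         ((8.0, 8.0), "large"), ((9.0, 8.5), "large"), ((8.5, 9.5), "large")]

print(knn_predict(train, (1.2, 1.4)))
print(knn_predict(train, (8.8, 9.0)))
```

The labels supplied by a human are what make this supervised: the model never has to discover the categories on its own.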

Where can we use supervised learning?

The answer is: when we know what to look for in the input data and what we want as the output.

The main types of supervised learning problems include regression and classification problems.

Unsupervised learning takes the opposite approach to supervised learning.

It uses unlabeled data: the machine must make sense of the data on its own, find hidden patterns, and make predictions accordingly.

Here, machines provide us with new discoveries after independently deriving hidden patterns from data, without humans having to specify what to look for.

The main types of unsupervised learning problems include clustering and association rule analysis.
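Clustering can be illustrated with k-means, a classic algorithm that alternates between assigning each point to its nearest center and moving each center to the mean of its points. The sketch below uses a deterministic farthest-point initialization so the result is reproducible; the data points are hypothetical and carry no labels.

```python
import math

def kmeans(points, k, iterations=20):
    """Group unlabeled points into k clusters by alternating assignment and centroid updates."""
    # Farthest-point initialization: start from the first point, then repeatedly
    # add the point farthest from all centroids chosen so far
    centroids = [points[0]]
    while len(centroids) < k:
        centroids.append(max(points, key=lambda p: min(math.dist(p, c) for c in centroids)))
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster (keep it if the cluster is empty)
        centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters

points = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.5)]
centers, groups = kmeans(points, k=2)
print(centers)
```

The algorithm finds the two groups without being told what they are; it is still up to a person to interpret what each cluster means.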

Reinforcement learning involves an agent that learns to behave in its environment by performing actions.

Based on the results of these actions, the agent receives feedback and adjusts its future course: for every good action, the agent gets positive feedback (a reward), and for every bad action, it gets negative feedback or a punishment.

Reinforcement learning requires no labeled data, so the agent can only learn from its own experience.
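A small example of learning purely from rewards is tabular Q-learning, one standard reinforcement learning algorithm. The environment below is a hypothetical five-cell corridor: the agent starts in cell 0, can step left or right, and receives a reward of +1 only when it reaches cell 4. The hyperparameters (learning rate, discount, exploration rate) are illustrative.

```python
import random

N_STATES = 5          # cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # index 0 = move left, index 1 = move right

def step(state, action_index):
    """Hypothetical corridor environment: +1 reward for reaching the goal, 0 otherwise."""
    next_state = min(max(state + ACTIONS[action_index], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=1000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # estimated value of each (state, action)
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: sometimes explore at random (also used to break ties), otherwise exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, a)
            # Feedback from the environment nudges the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q

q = q_learning()
policy = [0 if q[s][0] > q[s][1] else 1 for s in range(N_STATES - 1)]
print(policy)  # 1 means "move right"
```

No example is ever labeled "correct"; the agent discovers that moving right pays off only through the rewards it collects.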

Semi-supervised learning sits between supervised and unsupervised learning.

It combines the strengths of both: it uses a small labeled dataset to guide classification and performs unsupervised feature extraction on a larger unlabeled dataset.

The main advantage of using semi-supervised learning is its ability to solve problems when there is not enough labeled data to train the model, or when the data simply cannot be labeled because humans don't know what to look for in it.
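A simple, widely used semi-supervised technique is self-training (pseudo-labeling), shown below instead of the feature-extraction approach described above. A nearest-centroid classifier is fitted on two labeled points, used to label a larger unlabeled pool, and then refitted on everything. The one-dimensional data and labels are hypothetical.

```python
def centroids(labeled):
    """Mean feature value per class, computed from (x, label) pairs."""
    sums, counts = {}, {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}

def predict(cents, x):
    """Assign x to the class whose centroid is closest."""
    return min(cents, key=lambda y: abs(x - cents[y]))

def self_train(labeled, unlabeled, rounds=3):
    """Self-training: pseudo-label the unlabeled pool with the current model, then refit on everything."""
    data = list(labeled)
    for _ in range(rounds):
        cents = centroids(data)
        pseudo = [(x, predict(cents, x)) for x in unlabeled]
        data = list(labeled) + pseudo
    return centroids(data)

labeled = [(1.0, "a"), (9.0, "b")]             # only two labeled examples
unlabeled = [2.0, 3.0, 4.0, 6.0, 7.0, 8.0, 8.5]
print(self_train(labeled, unlabeled))
```

The class centers shift from 1.0 and 9.0 (labeled data alone) to 2.5 and 7.7, reflecting where the unlabeled data actually lies. Self-training can also reinforce its own early mistakes, so it works best when the classes are reasonably well separated.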

Machine learning is at the core of almost every technology company these days, including businesses like Google and YouTube's search engine.

Below, we have summarized some examples of real-life applications of machine learning that you may be familiar with:

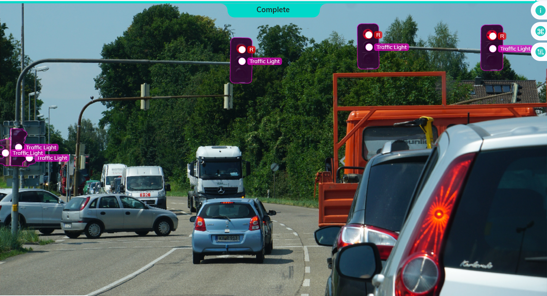

Vehicles encounter a wide variety of situations on the road.

For self-driving cars to perform better than humans, they need to learn and adapt to changing road conditions and the behavior of other vehicles.

Self-driving cars collect data about their surroundings from sensors and cameras, then interpret it and react accordingly. They use supervised learning to identify surrounding objects and unsupervised learning to identify patterns in the behavior of other vehicles, and finally act with the help of reinforcement learning algorithms.

Image analysis is used to extract different information from images.

It has applications in areas such as checking for manufacturing defects, analyzing car traffic in smart cities, or visual search engines like Google Lens.

The main idea is to use deep learning techniques to extract features from images and then apply these features to object detection.
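The core operation behind convolutional neural networks, the deep learning models usually used for image features, is sliding a small kernel over the image. The sketch below applies a hand-picked vertical-edge kernel to a tiny hypothetical grayscale image; in a real network the kernel values are learned from data, and the later object-detection stage is not shown.

```python
def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation a convolutional layer applies."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            # Weighted sum of the image patch under the kernel
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Tiny grayscale "image": dark left half, bright right half
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
# Hand-picked vertical-edge kernel (a learned network would discover its own)
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
features = convolve2d(image, kernel)
print(features)
```

The resulting feature map is large only where the dark and bright regions meet, which is the kind of low-level feature later layers combine into object detectors.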

It’s very common these days for companies to use AI chatbots to provide customer support and sales. AI chatbots help businesses handle high volumes of customer queries by providing 24/7 support, thereby reducing support costs, generating additional revenue, and keeping customers happy.

AI chatbots use natural language processing (NLP) to process text, extract query keywords, and respond accordingly.
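The "extract keywords, then respond" step can be caricatured with simple keyword matching. This is deliberately a toy: the intents, keyword sets, and replies below are hypothetical, and production chatbots rely on trained NLP models rather than hand-written word lists.

```python
import re

# Hypothetical intents: each maps a set of keywords to a canned reply
INTENTS = {
    "refund": ({"refund", "money", "return"}, "I can help you start a refund request."),
    "shipping": ({"shipping", "delivery", "track", "package"}, "You can track your package from the Orders page."),
}
FALLBACK = "Let me connect you with a human agent."

def extract_keywords(text):
    """Lowercase the query and split it into word tokens."""
    return set(re.findall(r"[a-z']+", text.lower()))

def respond(text):
    words = extract_keywords(text)
    # Pick the intent whose keyword set overlaps the query the most
    keywords, reply = max(INTENTS.values(), key=lambda intent: len(intent[0] & words))
    return reply if keywords & words else FALLBACK

print(respond("Where is my package? I want to track delivery"))
print(respond("Hello there"))
```

Anything the bot cannot match falls back to a human agent, a common pattern in real support systems as well.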

The fact is this: medical imaging data is both the richest source of information and one of the most complex.

Manually analyzing thousands of medical images is a tedious task that takes up pathologists' valuable time, which could be spent more efficiently.

But it's not just about saving time: artifacts or small features such as nodules may not be visible to the naked eye, leading to delayed diagnoses and incorrect predictions. This is why deep learning techniques involving neural networks, which can extract features from images, hold so much potential.

As the e-commerce sector expands, we can observe an increase in the number of online transactions and a diversification of available payment methods. Unfortunately, some people take advantage of this situation. Fraudsters in today's world are highly skilled and can adopt new technologies very quickly.

That’s why we need a system that can analyze data patterns, make accurate predictions, and respond to online cybersecurity threats, such as fake login attempts or phishing attacks.

For example, fraud prevention systems can discover whether a purchase is legitimate based on where you made purchases in the past or how long you were online. Likewise, they can detect if someone is trying to impersonate you online or over the phone.
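A very simplified version of such a check compares a new purchase against the user's history. The sketch below assumes two features, the purchase amount and the city it was made from, and flags a purchase if its amount is an outlier by z-score or the city has never been seen before. The threshold and data are hypothetical, and real systems combine many more signals with learned models.

```python
from statistics import mean, stdev

def is_suspicious(history, purchase, z_threshold=3.0):
    """Flag a purchase whose amount is unusually large for this user or whose location is new.

    `history` is a list of past (amount, city) pairs; it needs at least two purchases
    with differing amounts so that the standard deviation is non-zero.
    """
    amounts = [amount for amount, _ in history]
    known_cities = {city for _, city in history}
    amount, city = purchase
    # How many standard deviations above the user's typical spend this purchase is
    z = (amount - mean(amounts)) / stdev(amounts)
    return z > z_threshold or city not in known_cities

history = [(20, "Berlin"), (35, "Berlin"), (25, "Munich"), (30, "Berlin"), (40, "Munich")]
print(is_suspicious(history, (45, "Berlin")))
print(is_suspicious(history, (500, "Berlin")))
print(is_suspicious(history, (30, "Lagos")))
```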

Recommendation algorithms are based on the study of historical data and depend on several factors, including user preferences and interests.

Companies such as JD.com or Douyin use recommendation systems to curate and display relevant content or products to users/buyers.
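One classic way to turn historical ratings into recommendations is item-based collaborative filtering: items that were rated similarly by the same users are treated as similar. The sketch below measures similarity with cosine similarity and scores each item a user has not rated yet. The users, items, and ratings are hypothetical, and this is not a description of how any particular company's system works.

```python
import math

# Hypothetical user-item ratings (0 = not rated)
ratings = {
    "alice": {"phone": 5, "case": 4, "charger": 0, "novel": 0},
    "bob":   {"phone": 4, "case": 5, "charger": 4, "novel": 0},
    "carol": {"phone": 1, "case": 0, "charger": 1, "novel": 5},
}

def item_vector(item):
    """Ratings of one item across all users, in a fixed user order."""
    return [ratings[user][item] for user in sorted(ratings)]

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def recommend(user, top_n=1):
    """Score unrated items by their similarity to the items the user rated, weighted by those ratings."""
    rated = {item: r for item, r in ratings[user].items() if r > 0}
    unrated = [item for item, r in ratings[user].items() if r == 0]
    scores = {
        item: sum(r * cosine(item_vector(item), item_vector(other)) for other, r in rated.items())
        for item in unrated
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("alice", top_n=2))
```

Alice rated the phone and case highly, and users who liked those also liked the charger, so the charger ranks above the novel.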

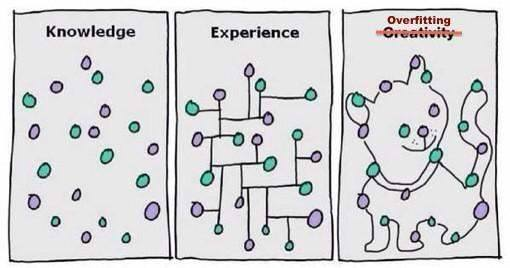

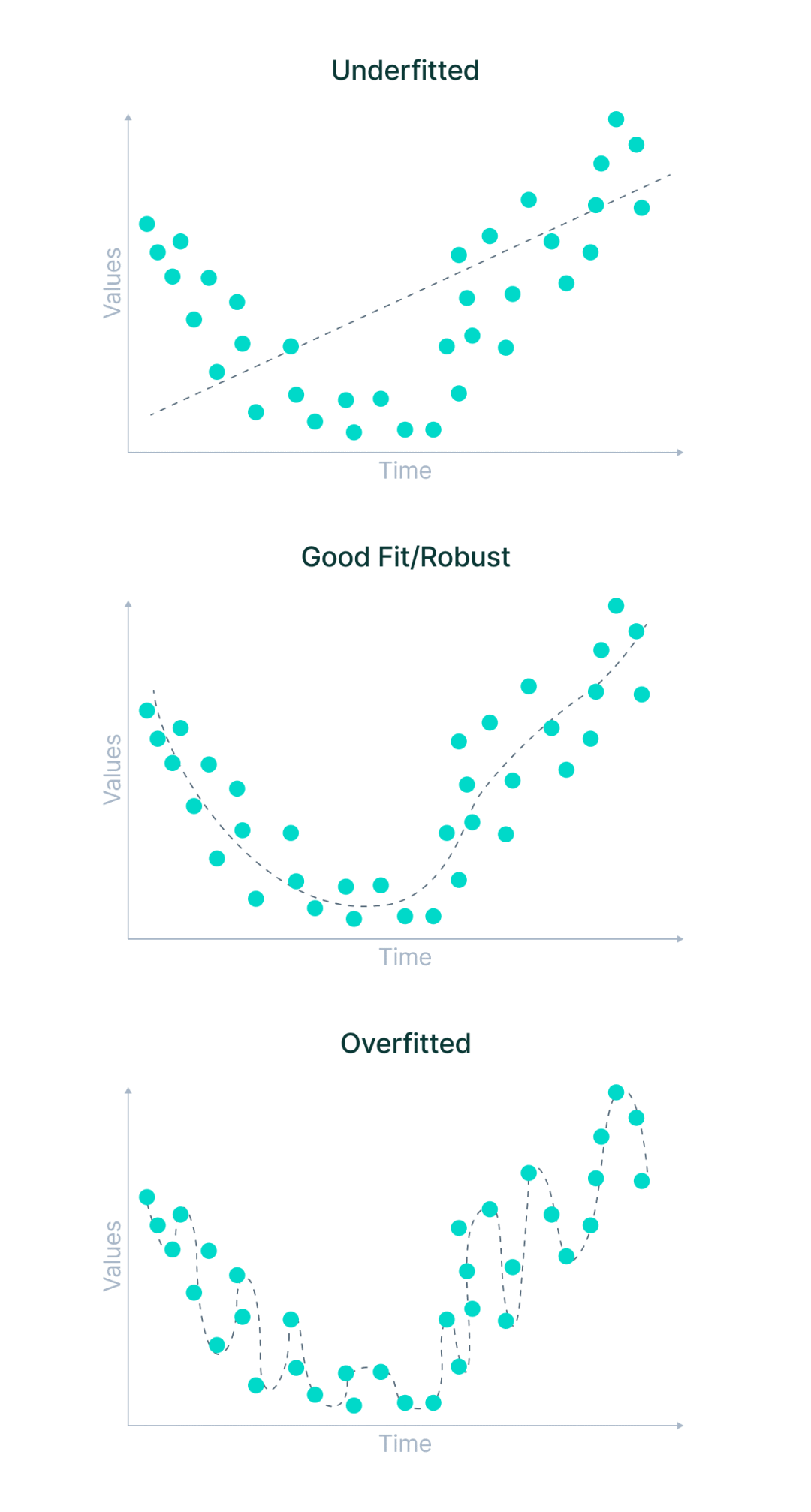

In most cases, poor performance of a machine learning algorithm is due to underfitting or overfitting.

Let’s break down these terms in the context of training a machine learning model.

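An underfitting model has too little flexibility: it is too simple to capture the underlying pattern, so it performs poorly even on the training data. An overfitting model has the opposite problem: it fits the training examples so closely, noise included, that it cannot predict new data points well. In other words, it focuses too much on the examples given and fails to see the bigger picture.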
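The contrast shows up clearly when training error and test error are compared. In the sketch below, the hypothetical data follow `y = x**2` with a little noise on the training set. A constant model stands in for underfitting, a lookup that memorizes the nearest training point stands in for overfitting, and a least-squares fit of `y = a * x**2` has about the right flexibility.

```python
def mse(model, xs, ys):
    """Mean squared error of `model` on the points (xs, ys)."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Hypothetical data: y = x**2, with small alternating noise on the training set only
train_x = [0, 1, 2, 3, 4, 5]
train_y = [x * x + (0.5 if i % 2 == 0 else -0.5) for i, x in enumerate(train_x)]
test_x = [0.5, 1.5, 2.5, 3.5, 4.5]
test_y = [x * x for x in test_x]

mean_y = sum(train_y) / len(train_y)

def underfit(x):
    # Too simple: always predicts the average, ignoring x entirely
    return mean_y

def overfit(x):
    # Too flexible: memorizes the training set, noise included
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    return train_y[nearest]

# About the right flexibility: y = a * x**2, with `a` fitted by least squares
a = sum(x * x * y for x, y in zip(train_x, train_y)) / sum(x ** 4 for x in train_x)

def good(x):
    return a * x * x

for name, model in [("underfit", underfit), ("overfit", overfit), ("good", good)]:
    print(name, round(mse(model, train_x, train_y), 3), round(mse(model, test_x, test_y), 3))
```

The memorizing model reaches zero training error yet does clearly worse than the quadratic fit on the test points, while the constant model does badly on both.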

What are the causes of underfitting and overfitting?

General causes include training data that is not clean (it contains a lot of noise or garbage values) and a dataset that is too small. However, there are also some more specific reasons.

Let’s take a look at those.

Underfitting may occur because:

Overfitting may occur when:

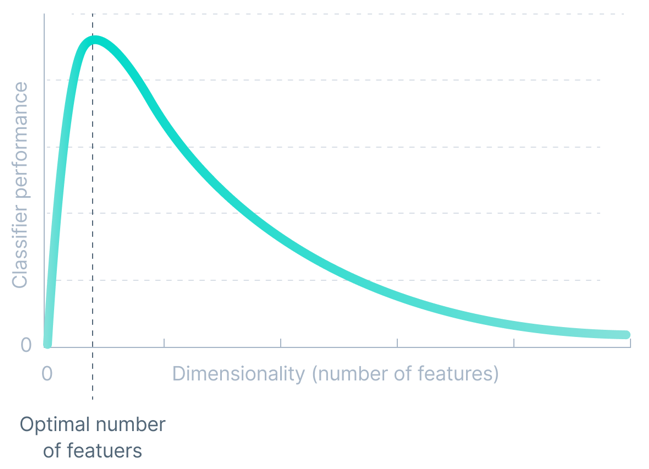

Adding dimensions to a dataset can improve a machine learning model's accuracy, but only up to a certain threshold.

The dimensionality of a dataset refers to the number of attributes (features) it contains. Continuing to add dimensions beyond that threshold introduces non-essential attributes that confuse the model, reducing its accuracy.

We call these difficulties associated with training machine learning models the "curse of dimensionality."
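One well-known symptom of the curse of dimensionality is that distances stop being informative: as dimensions are added, the nearest and farthest points from a query end up almost equally far away, which hurts distance-based methods. The sketch below measures this on random points in a unit cube; the point count and seed are arbitrary choices.

```python
import math
import random

def distance_contrast(dim, n_points=200, seed=0):
    """Ratio of nearest to farthest distance from a random query to random points in [0, 1]^dim."""
    rng = random.Random(seed)
    points = [[rng.random() for _ in range(dim)] for _ in range(n_points)]
    query = [rng.random() for _ in range(dim)]
    dists = [math.dist(query, p) for p in points]
    return min(dists) / max(dists)

for dim in (2, 10, 100, 1000):
    print(dim, round(distance_contrast(dim), 3))
```

In two dimensions the ratio is close to 0 (some point is much nearer than the rest); in 1000 dimensions it approaches 1, so "nearest" carries little meaning.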

Machine learning algorithms are sensitive to low-quality training data.

Data quality may suffer from noise caused by incorrect entries or missing values. Even relatively small errors in the training data can lead to large-scale errors in the system output.

When an algorithm performs poorly, it is usually due to data quality issues such as insufficient, skewed, or noisy data, or too few features to describe the data.

Therefore, before training a machine learning model, data cleaning is often required to obtain high-quality data.
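A minimal cleaning pass might drop rows with missing values, remove exact duplicates, and filter out implausible entries. The records, the `(age, income)` layout, and the age bounds below are hypothetical; dropping rows is only one strategy, and filling in (imputing) missing values is a common alternative.

```python
import math

# Hypothetical raw records: (age, income); None or NaN marks a missing value
raw = [
    (34, 52000.0), (None, 48000.0), (29, 61000.0), (29, 61000.0),
    (41, float("nan")), (-5, 39000.0), (52, 75000.0),
]

def clean(records, min_age=0, max_age=120):
    """Drop rows with missing values, implausible ages, and exact duplicates."""
    seen, cleaned = set(), []
    for age, income in records:
        if age is None or income is None or (isinstance(income, float) and math.isnan(income)):
            continue  # missing value
        if not (min_age <= age <= max_age):
            continue  # implausible entry, likely a data-entry error
        if (age, income) in seen:
            continue  # verbatim duplicate
        seen.add((age, income))
        cleaned.append((age, income))
    return cleaned

print(clean(raw))
```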
