Mind reading is arguably the superpower people most want for themselves and least want anyone else to have. Search for "mind reading" and you will find a large number of related books, videos, and tutorials, which shows how fascinated people are by this ability. Setting aside the psychological, behavioral, and mystical material, the technical case is simple: human brain signals contain patterns, so mind reading, in the sense of analyzing those patterns, is possible.

Nowadays, with the development of AI technology, its ability to analyze patterns has become more and more sophisticated, and mind reading is becoming a reality.

A few days ago, a paper from the University of Texas at Austin published in Nature Neuroscience sparked heated discussion. It presents a model that can reconstruct semantically consistent word sequences from brain signals read non-invasively. Unsurprisingly, the model also uses the currently popular GPT language model. But let's set that latest result aside for now and first look at some earlier research on AI mind reading to get a rough picture of the current research landscape.

Broadly speaking, mind reading can be divided into two categories: direct mind reading and indirect mind reading.

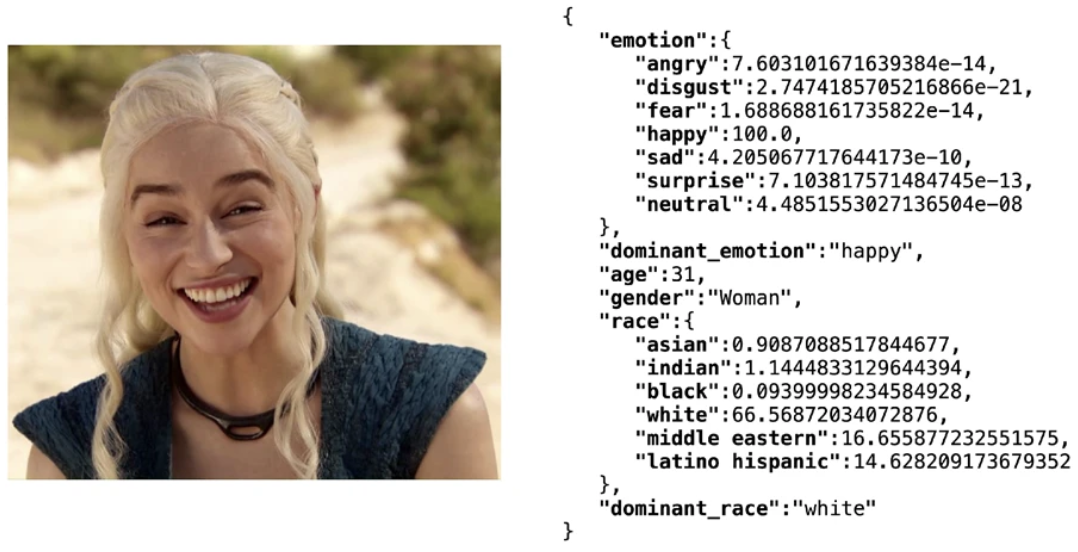

Indirect mind reading refers to inferring a person's thoughts and emotions from indirect features, such as facial expressions, body posture, body temperature, heart rate, breathing rhythm, and speaking speed and tone. In recent years, deep learning based on big data has allowed AI to identify emotions from facial expressions quite accurately. For example, Deepface, a lightweight open source facial recognition library, can jointly analyze attributes such as age, gender, emotion, and race, and reportedly achieves 97.53% accuracy on its test set. However, emotion analysis based on these features is usually not regarded as mind reading. After all, humans can more or less guess the emotions of others from their expressions and other cues. This article therefore focuses only on direct mind reading.

## Use the Deepface library to get face attribute analysis results
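As a rough illustration of this kind of attribute analysis, here is a minimal sketch built around the `DeepFace.analyze` call from the `deepface` package. The helper names `analyze_face` and `summarize_face` are hypothetical, and the result keys used below (`dominant_emotion` and so on) reflect recent releases of the library, so treat them as an assumption that may not hold for every version.

```python
from typing import Any, Dict, List


def analyze_face(img_path: str) -> List[Dict[str, Any]]:
    """Run Deepface attribute analysis on one image file."""
    # Imported lazily so the summary helper below works without the package.
    from deepface import DeepFace

    result = DeepFace.analyze(
        img_path=img_path,
        actions=["age", "gender", "emotion", "race"],
    )
    # Assumption: recent releases return one dict per detected face,
    # while older releases returned a single dict.
    return result if isinstance(result, list) else [result]


def summarize_face(face: Dict[str, Any]) -> str:
    """Condense one result dict into a single readable line."""
    return (
        f"age={face.get('age')}, "
        f"gender={face.get('dominant_gender')}, "
        f"emotion={face.get('dominant_emotion')}, "
        f"race={face.get('dominant_race')}"
    )
```

With the package installed, something like `for face in analyze_face("face.jpg"): print(summarize_face(face))` would print one line per detected face. The path `face.jpg` is only a placeholder.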

Direct mind reading refers to directly "translating" brain signals into a form that others can understand, such as text, voice, or images. Researchers currently focus on three main ways of obtaining brain signals: invasive brain-computer interfaces, brain waves, and neuroimaging.

## Mind reading based on invasive brain-computer interfaces

Invasive brain-computer interfaces are practically a standard feature of cyberpunk works. You can see them in "Cyberpunk 2077" and many other films and games. The basic idea is to read the electrical signals passed between nerve cells in or near the brain or nervous system. Brain signals read invasively are generally more accurate and less noisy than those read non-invasively.

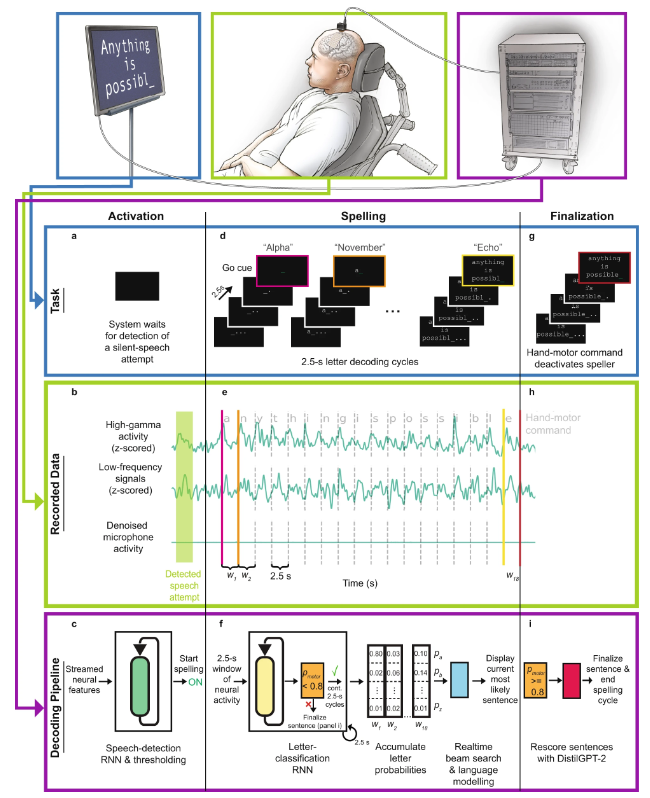

In 2021, in the paper "Neuroprosthesis for Decoding Speech in a Paralyzed Person with Anarthria", researchers from the University of California, San Francisco proposed using AI to help people with speech impairments communicate. The subject of the study was a paralyzed person with anarthria, the inability to articulate speech. Notably, the experiments acquired the signal through a neural implant that combined a high-density electrocorticography (ECoG) electrode array with a percutaneous connector. This invasive approach naturally leads to higher accuracy: the system reached a maximum accuracy of 98% and a median accuracy of 75%, decoding at up to 18 words per minute. In addition, applying a language model greatly improved the meaningfulness of the decoded output, which is no longer just a pile of strings.

The team then further improved the system in the 2022 Nature Communications paper "Generalizable spelling using a speech neuroprosthesis in an individual with severe limb and vocal paralysis", integrating a GPT language model to further improve performance.

Direct speech brain-computer interface workflow diagram

Specifically, its workflow is:

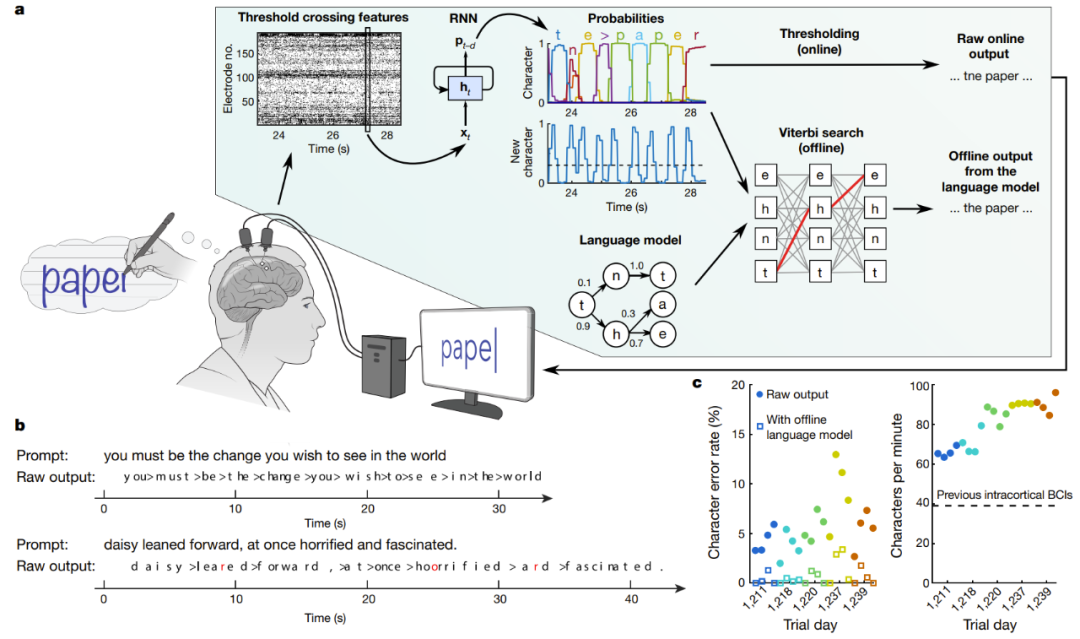

Another study using an implanted brain-computer interface reports efficient recognition of attempted handwriting and conversion of the neural signals into text. In the Nature paper "High-performance brain-to-text communication via handwriting", researchers at Stanford University enabled a paralyzed participant with a spinal cord injury to type at 90 characters per minute. The raw online accuracy reached 94.1%, and with a language model the offline accuracy exceeded 99%.

Decoding brain signals of subjects attempting handwriting in real time

Panel A in the figure is a schematic of the decoding algorithm. First, the neural activity at each electrode is temporally binned and smoothed. An RNN then converts the neural population time series into a probability time series, which describes the likelihood of each character and the probability that a new character is starting. The RNN has an output delay (d) of 1 second, giving it time to observe each character fully before determining its identity. Finally, the new-character probability is thresholded to obtain the "raw online output" for real-time use: when the probability of a new character exceeds a certain threshold at time t, the most likely character is emitted and shown on the screen at time t + 0.3 seconds. In an offline retrospective analysis, the researchers combined the character probabilities with a large-vocabulary language model to decode the text the participant was most likely to have written.
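The thresholding step can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the toy alphabet, the 20 ms step size, the 0.5 threshold, and the rule of emitting only when the probability first crosses the threshold are all choices made for this example.

```python
CHARS = ["a", "b", "c"]  # toy alphabet, purely illustrative


def emit_characters(char_probs, new_char_probs,
                    threshold=0.5, step_s=0.02, delay_s=0.3):
    """Turn per-step probabilities into (display_time_s, character) pairs.

    char_probs[t]     : list of probabilities, one per entry of CHARS
    new_char_probs[t] : probability that a new character starts at step t
    """
    emitted = []
    above = False  # True while the start probability stays above threshold
    for t, (probs, p_new) in enumerate(zip(char_probs, new_char_probs)):
        crossing = p_new > threshold
        if crossing and not above:
            # Assumption: emit once per upward crossing, not on every step.
            best = max(range(len(probs)), key=probs.__getitem__)
            display_time = round(t * step_s + delay_s, 3)
            emitted.append((display_time, CHARS[best]))
        above = crossing
    return emitted


# Two character onsets: one at step 0 and one at step 3.
example = emit_characters(
    char_probs=[[0.8, 0.1, 0.1], [0.8, 0.1, 0.1],
                [0.1, 0.7, 0.2], [0.1, 0.7, 0.2]],
    new_char_probs=[0.9, 0.8, 0.2, 0.7],
)
```

In the offline setting described above, the same probabilities would instead be passed to a language model rather than thresholded directly.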

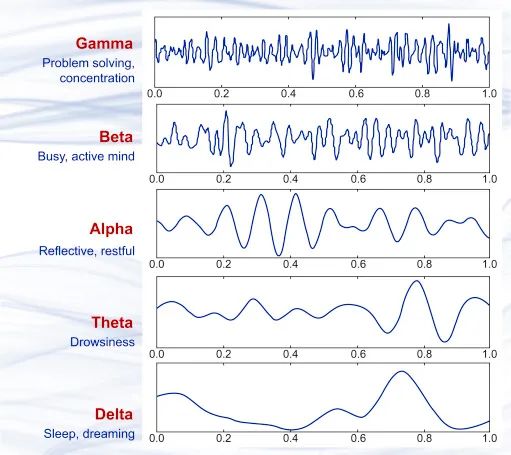

Decades of brain research have shown that nerve cells in the brain generate tiny currents when they transmit signals, and these currents produce subtle electromagnetic fluctuations. When a large number of nerve cells are active at the same time, the fluctuations can be captured with precise non-invasive instruments. In 1875, scientists first observed electrical activity of this kind, now known as brain waves, in animals. In 1924, Hans Berger recorded the electrical activity of the human brain for the first time, inventing the electroencephalogram (EEG). In the nearly hundred years since, EEG technology has continued to improve. Its accuracy and real-time performance have reached a very high level, and it has been commercialized: you can now even buy portable devices that detect and analyze brain waves.

Samples of several brain wave types. From top to bottom: gamma waves (above 35 Hz), beta waves (12-35 Hz), alpha waves (8-12 Hz), theta waves (4-8 Hz), and delta waves (0.5-4 Hz), which correspond roughly to different brain states.
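To make the band boundaries concrete, the sketch below estimates the dominant frequency of a sampled signal with a naive discrete Fourier transform and looks up its band. The function names and the handling of values that fall exactly on a boundary are assumptions made for this example. Real EEG analysis would normally use a dedicated signal-processing library.

```python
import cmath
import math

# (name, lower bound in Hz, upper bound in Hz), taken from the ranges above.
BANDS = [("delta", 0.5, 4.0), ("theta", 4.0, 8.0),
         ("alpha", 8.0, 12.0), ("beta", 12.0, 35.0)]


def band_of(freq_hz):
    """Return the band name for a frequency, or None if below 0.5 Hz."""
    if freq_hz >= 35.0:  # assumption: exactly 35 Hz counts as gamma
        return "gamma"
    for name, low, high in BANDS:
        if low <= freq_hz < high:
            return name
    return None


def dominant_frequency(samples, sample_rate_hz):
    """Find the strongest non-DC frequency with a naive O(n^2) DFT."""
    n = len(samples)
    best_k, best_power = 0, 0.0
    for k in range(1, n // 2):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(samples))
        power = abs(coeff) ** 2
        if power > best_power:
            best_k, best_power = k, power
    return best_k * sample_rate_hz / n


# Two seconds of a synthetic 10 Hz oscillation sampled at 128 Hz.
signal = [math.sin(2 * math.pi * 10 * i / 128) for i in range(256)]
peak_hz = dominant_frequency(signal, 128)
```

Here `band_of(peak_hz)` classifies the synthetic signal as an alpha wave.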

The most common way to analyze people's emotions and thoughts through brain waves is to analyze P300 waves, the brain waves produced about 300 milliseconds after the subject sees a stimulus. Research on analyzing brain waves has continued without interruption since their discovery. For example, in 2001, Lawrence Farwell, a controversial researcher in the field, proposed an algorithm that evaluates brain wave responses to detect whether a subject has experienced a particular event, even if the subject tries to conceal it. In other words, it is a brainwave-based lie detector.
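A standard way to make a response like the P300 visible, although the article does not describe it, is to average many recordings that are time-locked to the stimulus so that random noise cancels out. The sketch below does this on synthetic data. The bump shape, noise level, sampling rate, and function names are all assumptions made for illustration.

```python
import math
import random


def average_epochs(epochs):
    """Average equally long, stimulus-locked recordings sample by sample."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


def peak_latency_ms(erp, sample_rate_hz):
    """Return the time after the stimulus at which the average peaks."""
    peak_index = max(range(len(erp)), key=erp.__getitem__)
    return 1000.0 * peak_index / sample_rate_hz


def synthetic_epoch(rng, sample_rate_hz=100, duration_ms=600):
    """Simulate one noisy epoch with a positive bump near 300 ms."""
    n = sample_rate_hz * duration_ms // 1000
    epoch = []
    for i in range(n):
        t_ms = 1000.0 * i / sample_rate_hz
        bump = 5.0 * math.exp(-0.5 * ((t_ms - 300.0) / 50.0) ** 2)
        epoch.append(bump + rng.gauss(0.0, 2.0))
    return epoch


rng = random.Random(0)  # fixed seed so the run is repeatable
epochs = [synthetic_epoch(rng) for _ in range(40)]
erp = average_epochs(epochs)
latency = peak_latency_ms(erp, 100)
```

In a single epoch the bump is partly buried in noise, but after averaging 40 epochs the peak lands close to 300 ms.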

Since brain waves themselves are signals with patterns, it is natural to use neural networks to analyze brain waves. Below we will introduce some methods used by scientists to translate brain wave signals into speech, text and images through some research in recent years.

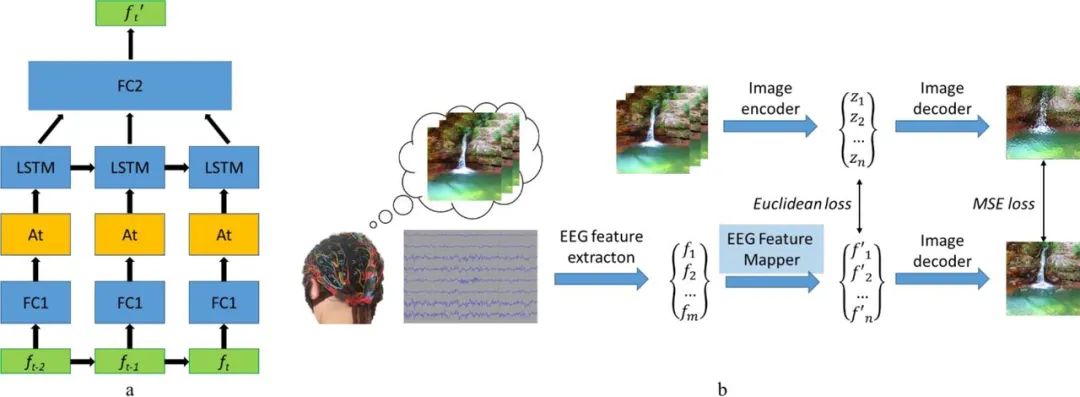

In 2019, a Russian research team proposed a visual brain-computer interface (BCI) system that can reconstruct images from brain waves. The idea is straightforward: extract features from the brain wave signal, map the resulting feature vector to a position in the latent space, and finally decode it to reconstruct the image. The image decoder is part of an image-to-image convolutional autoencoder. It consists of one fully connected input layer followed by five deconvolution modules, each made up of one deconvolution layer and a ReLU activation, except that the last module uses a hyperbolic tangent activation.

Another important component of the model is the EEG feature mapper, whose job is to translate data from the EEG feature domain into the latent space of the image decoder. The team used an LSTM as the recurrent unit and refined it further with an attention mechanism. Training minimizes the mean squared error between the feature representations of the EEG and of the image. For details, see their paper "Natural image reconstruction from brain waves: a novel visual BCI system with native feedback."
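The training objective mentioned above can be written out as a plain mean squared error between two batches of vectors. This is only a sketch of the loss itself: the name `mse_loss` and the list-of-lists data layout are assumptions, and the actual model would compute this inside a deep learning framework.

```python
def mse_loss(mapped_eeg, image_latents):
    """Mean squared error between mapped EEG features and image latents.

    Both arguments are lists of equally sized vectors, one per sample.
    """
    total, count = 0.0, 0
    for eeg_vec, img_vec in zip(mapped_eeg, image_latents):
        for a, b in zip(eeg_vec, img_vec):
            total += (a - b) ** 2
            count += 1
    return total / count
```

The loss is zero only when the mapper places every EEG sample exactly on the latent vector of the image the subject was viewing.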

Model structure (a) and training routine (b) of EEG feature mapper

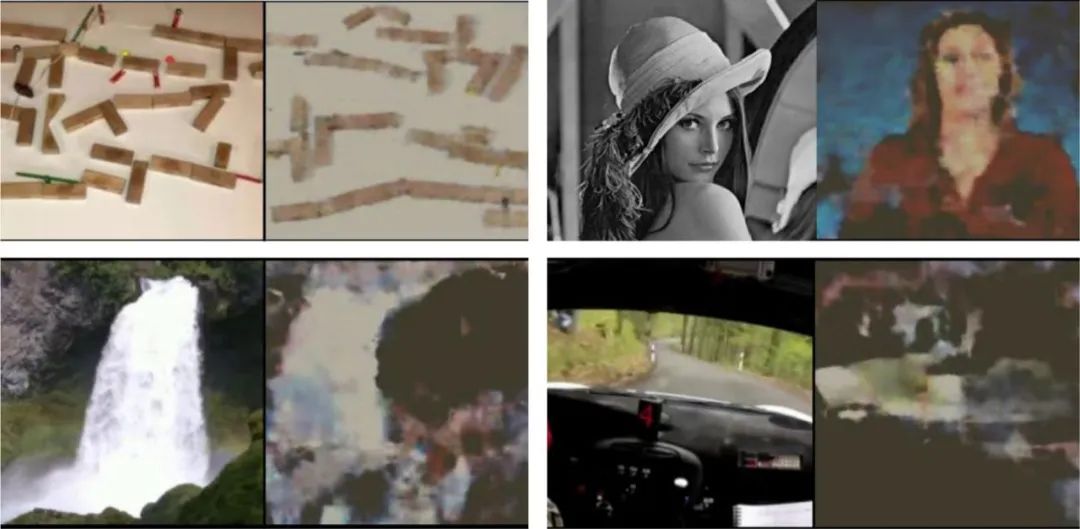

Below are some example results, where it can be seen that there is a significant correlation between the reconstructed image and the original image.

The original image seen by the subject (left of each pair) and the image reconstructed from the subject's brain waves (right of each pair)

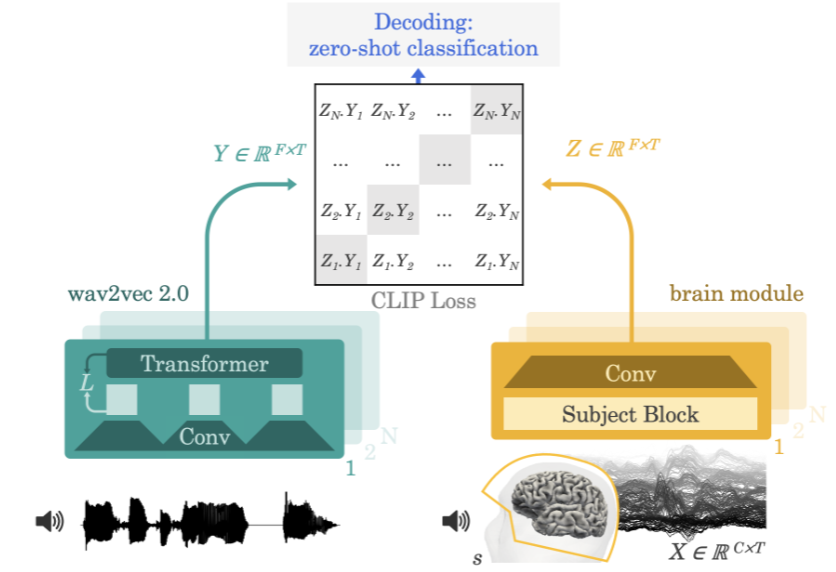

In 2022, in the paper "Decoding speech from non-invasive brain recordings", the Meta AI team proposed a neural network architecture that decodes speech from electroencephalography (EEG) or magnetoencephalography (MEG) signals.

Meta AI team’s approach diagram

The team had participants listen to stories or sentences while their brain activity was recorded with EEG or MEG. The model first extracts a deep contextual representation of each 3-second speech signal (Y) using a pre-trained self-supervised model (wav2vec 2.0), and it also learns a representation (Z) of the brain activity in the corresponding aligned 3-second window (X). The representation Z is produced by a deep convolutional network. During evaluation, the researchers fed the model held-out sentences and computed how likely each 3-second speech segment was given each brain representation. As a result, decoding can be zero-shot: the model can identify audio clips that were not in the training set.
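The evaluation step, choosing the most likely speech segment for a given brain representation, can be sketched as a similarity search. The use of cosine similarity with a softmax, the temperature value, and the tiny two-dimensional vectors are all assumptions made for illustration, since the article does not specify how the scores are computed.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def softmax(scores):
    """Convert scores to probabilities, subtracting the max for stability."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rank_segments(brain_repr, speech_reprs, temperature=0.1):
    """Return (index of best segment, probability of every segment)."""
    sims = [cosine(brain_repr, y) / temperature for y in speech_reprs]
    probs = softmax(sims)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


# Hypothetical representations: one brain window Z and three candidate Ys.
z = [1.0, 0.0]
candidates = [[0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]]
best_index, segment_probs = rank_segments(z, candidates)
```

Because the candidates only need a speech representation, segments that never appeared in training can still be ranked, which is what makes zero-shot decoding possible.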

Scientists can also use a technology called functional magnetic resonance imaging (fMRI) to understand brain activity. The technology, developed in the early 1990s, works by looking at blood flow in the brain through magnetic resonance imaging to detect brain activity. The technology can reveal whether specific functional areas in the brain are active.

When we say a certain brain area is "more active," what do we mean? How does fMRI detect this activity?

When neurons in a brain area begin to send out more electrical signals than before, we say that the brain area is more active. For example, if a specific brain area becomes more active when you lift your leg, then that area of the brain can be thought to be responsible for controlling the leg lift.

fMRI detects this activity indirectly by measuring oxygen levels in the blood. This is called the blood oxygen level dependent (BOLD) response. When neurons are more active, they require more oxygen from red blood cells, so the surrounding blood vessels widen to let more blood flow through. As a result, oxygen levels rise when neurons are more active. Oxygenated blood disturbs the magnetic field less than deoxygenated blood, allowing the measured signal (which essentially comes from hydrogen in water) to last longer. When the signal persists longer, fMRI infers that the area has more oxygen, which means it is more active. Color-coding this activity produces the fMRI images.

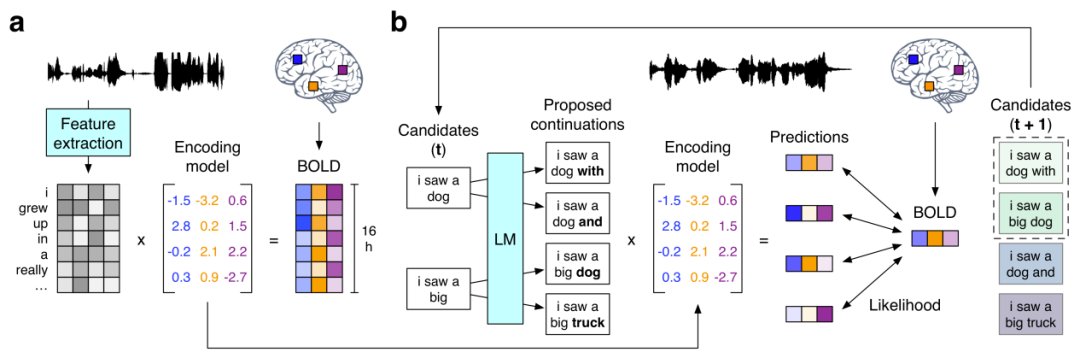

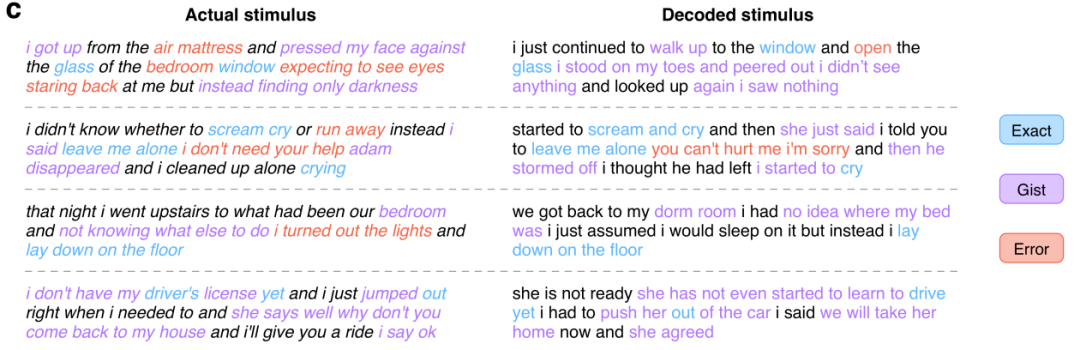

Next, let's look at the previously mentioned research on using GPT to reconstruct semantically consistent continuous language, "Semantic reconstruction of continuous language from non-invasive brain recordings". The authors propose a non-invasive decoder that reconstructs continuous natural language from cortical representations of semantic meaning in fMRI recordings. Given new brain recordings, the decoder generated intelligible word sequences that recovered the meaning of speech the subjects heard, speech they imagined, and even silent videos they watched, suggesting that a single language decoder can be applied to a range of different semantic tasks. The workflow of this language decoder is as follows:

(a) BOLD fMRI responses were recorded while three subjects listened to 16 hours of narrative stories. For each subject, the system estimates an encoding model that predicts the brain responses elicited by the semantic features of the stimulus words. (b) To reconstruct language from new brain recordings, the decoder maintains a set of candidate word sequences. When a new word is detected, a language model proposes continuations for each sequence, and the encoding model then evaluates the likelihood of the recorded brain response under each continuation. The most likely continuations are retained.
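Step (b) is essentially a beam search, which the toy sketch below illustrates. The vocabulary, the one-number "semantic features", the scoring rule, and every function name are hypothetical stand-ins: in the real system a GPT model proposes continuations and a fitted encoding model scores them against fMRI responses.

```python
# Toy stand-ins for the language model and the encoding model.
FEATURES = {"i": 0.1, "saw": 0.5, "heard": 0.6,
            "a": 0.2, "dog": 0.9, "story": 0.4}
NEXT_WORDS = {None: ["i"], "i": ["saw", "heard"], "saw": ["a"],
              "heard": ["a"], "a": ["dog", "story"]}


def propose(words):
    """Toy language model: list plausible next words for a sequence."""
    return NEXT_WORDS[words[-1] if words else None]


def log_likelihood(response, words):
    """Toy encoding model: score a response against the newest word."""
    return -(response - FEATURES[words[-1]]) ** 2


def beam_search_decode(brain_responses, beam_width=2):
    """Keep the best candidate sequences as each new word is detected."""
    beams = [([], 0.0)]  # (word sequence, cumulative log-likelihood)
    for response in brain_responses:
        candidates = []
        for words, score in beams:
            for word in propose(words):
                extended = words + [word]
                candidates.append(
                    (extended, score + log_likelihood(response, extended)))
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = candidates[:beam_width]
    return beams[0][0]


decoded = beam_search_decode([0.1, 0.62, 0.2, 0.45])
```

Keeping more than one candidate lets the decoder recover from a locally attractive but ultimately worse word choice.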

The language model is a GPT model, the kind currently at the core of AI research. The researchers fine-tuned it on a large corpus of more than 200 million words of Reddit comments and 240 autobiographical stories from The Moth Radio Hour and Modern Love. The model was trained for 50 epochs with a maximum context length of 100. Some experimental results are shown below:

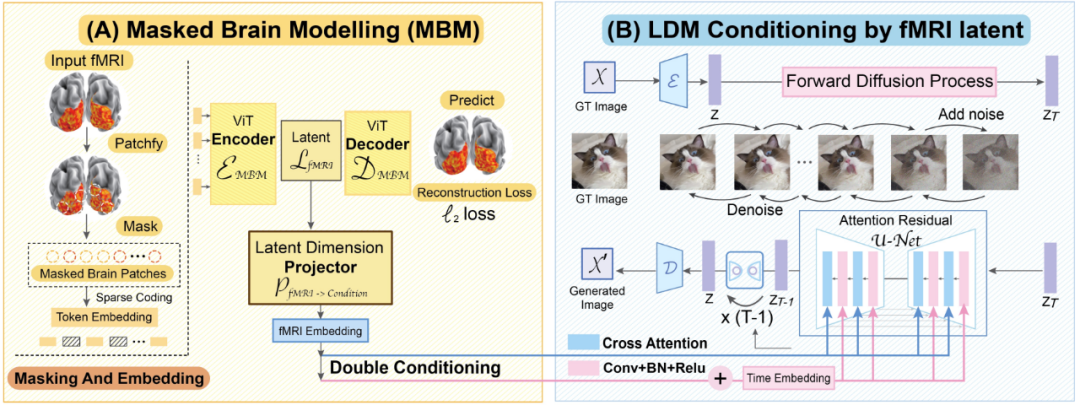

Finally, let's look at the CVPR 2023 paper "Seeing Beyond the Brain: Conditional Diffusion Model with Sparse Masked Modeling for Vision Decoding". Researchers from the National University of Singapore, the Chinese University of Hong Kong, and Stanford University claim that their MinD-Vis model is the first to decode fMRI-based brain activity signals into images that are not only rich in detail but also contain accurate semantics and image features (texture, shape, and so on).

## MinD-Vis workflow diagram

MinD-Vis works in two stages. As shown in the figure, stage A pre-trains on fMRI data using SC-MBM (Sparse Coding Masked Brain Modeling): the fMRI signals are randomly masked and tokenized into large embeddings, and an autoencoder is trained to recover the masked patches. In stage B, the pre-trained encoder is integrated with a latent diffusion model (LDM) through double conditioning. A latent dimension projector maps the fMRI latent space into the LDM conditioning space along two paths. One path feeds directly into the cross-attention heads of the LDM. The other adds the fMRI latent to the time-step embedding.
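The masking step of stage A can be sketched as follows. This covers only the data preparation, not the autoencoder. The patch size, the 0.75 mask ratio, and the function names are assumptions made for illustration.

```python
import random


def patchify(signal, patch_size):
    """Split a 1-D signal into consecutive patches, dropping any remainder."""
    usable = len(signal) - len(signal) % patch_size
    return [signal[i:i + patch_size] for i in range(0, usable, patch_size)]


def random_mask(patches, mask_ratio, rng):
    """Hide a random subset of patches.

    Returns the visible patches and the sorted indices of the masked ones.
    """
    n_masked = round(len(patches) * mask_ratio)
    masked_idx = set(rng.sample(range(len(patches)), n_masked))
    visible = [p for i, p in enumerate(patches) if i not in masked_idx]
    return visible, sorted(masked_idx)


rng = random.Random(0)  # fixed seed so the run is repeatable
voxels = [float(i) for i in range(32)]  # stand-in for a flattened fMRI signal
patches = patchify(voxels, patch_size=4)
visible, masked = random_mask(patches, mask_ratio=0.75, rng=rng)
```

During pre-training, an encoder would see only the visible patches and a decoder would be trained to reconstruct the values at the masked indices.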

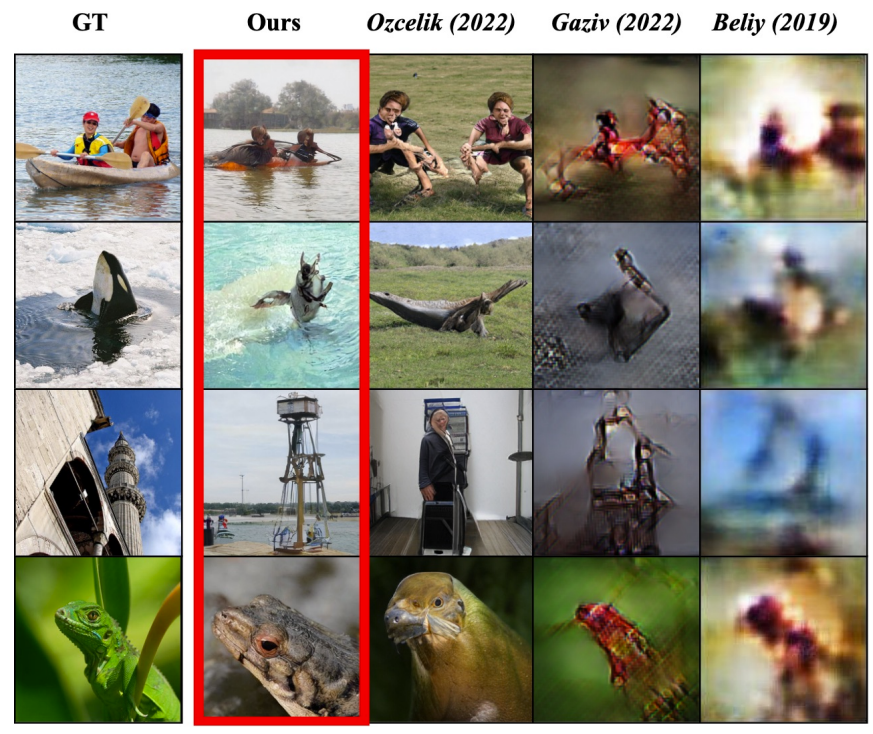

Judging from the experimental results given in the paper, the mind-reading ability of this model is indeed very good:

The left column shows the original image seen by the subject, the red box marks the reconstruction by MinD-Vis, and the next three columns are results from other methods.

As data volumes grow and algorithms improve, artificial intelligence understands our world more and more deeply. We humans, as a core part of that world, are also objects to be understood: by exploring the activity patterns of the human brain, machines are gaining the ability to understand what humans are thinking from the bottom up. Perhaps one day AI will become a true master of mind reading, and may even be able to capture human dreams with high fidelity!

The above only briefly introduces some recent research on direct mind reading with AI. In fact, some companies have begun commercializing related technologies, notably brain-computer interface and neurotechnology companies such as Neuralink and Blackrock Neurotech. Their potential future products have exciting application prospects, such as helping people who cannot express themselves because of a disability reconnect with the world, and remotely controlling robots that operate in dangerous environments such as the deep sea and space. At the same time, the development of these technologies has given many people hope of deciphering the mystery of human consciousness.

Of course, this type of technology also raises concerns about privacy, security, and ethics. After all, we have seen it used for evil purposes in many movies and novels. Further development of such technologies now seems inevitable, so how to keep them aligned with human interests has become an important question that calls for reflection and discussion among all stakeholders and policymakers.
