## Enterprise Digitalization

Enterprise digitalization has been a hot topic in recent years. It refers to using new-generation digital technologies such as artificial intelligence, big data, and cloud computing to change an enterprise's business model and thereby drive new business growth. It generally covers two areas: the digitalization of business operations and the digitalization of enterprise management. This talk focuses on digitalization at the enterprise management level.

Information digitization, simply put, means reading, writing, storing, and transmitting information in digital form. Having moved from paper documents to electronic documents and now to online collaborative documents, information digitization has become the new normal in today's offices. Alibaba currently uses DingTalk Documents and Yuque Documents for business collaboration, and the number of online documents has exceeded 20 million. Many companies also run their own internal content communities, such as Alibaba's intranet portal Alibaba Internal and External and its technical community ATA. ATA alone now hosts nearly 300,000 technical articles, all of which are valuable content assets.

Process digitization refers to using digital technology to transform service processes and improve service efficiency. Enterprises handle a large volume of transactional work in areas such as internal administration, IT, and human resources. A BPMS (business process management system) can standardize this work: it defines workflows based on business rules and executes them automatically, which greatly reduces labor costs. RPA (robotic process automation) mainly addresses switching between multiple systems within a process: because it can simulate manual clicks and input on system interfaces, it can connect different system platforms. The next direction for process digitization is intelligent processes, realized through conversational bots combined with RPA. Today, task-oriented conversational bots can already help users complete simple tasks, such as requesting leave or booking tickets, within a few rounds of dialogue.

Business digitalization aims to establish new business models through digital technology. Within an enterprise there are also business middle offices. For example, business digitalization in the procurement department digitalizes the whole chain of processes from product search and purchase requests to purchase contract drafting, payment, and order fulfillment. Another example is the legal middle office: its contract center digitalizes the entire contract life cycle, from drafting through review and signing to performance.

The massive amount of data and documents produced by digitalization ends up scattered across many business systems, so an intelligent enterprise search engine is needed to help employees quickly locate the information they are looking for. Taking Alibaba Group as an example, the main enterprise search scenarios are as follows:

(1) Unified search, also called comprehensive search, aggregates information from multiple content sites, including DingTalk Documents, Yuque Documents, and ATA. Its entry points are currently on Alibaba's intranet portal Alibaba Internal and External and in the employee-only edition of DingTalk. Together these two entry points receive about 140 QPS, which is very high traffic for a ToB scenario.

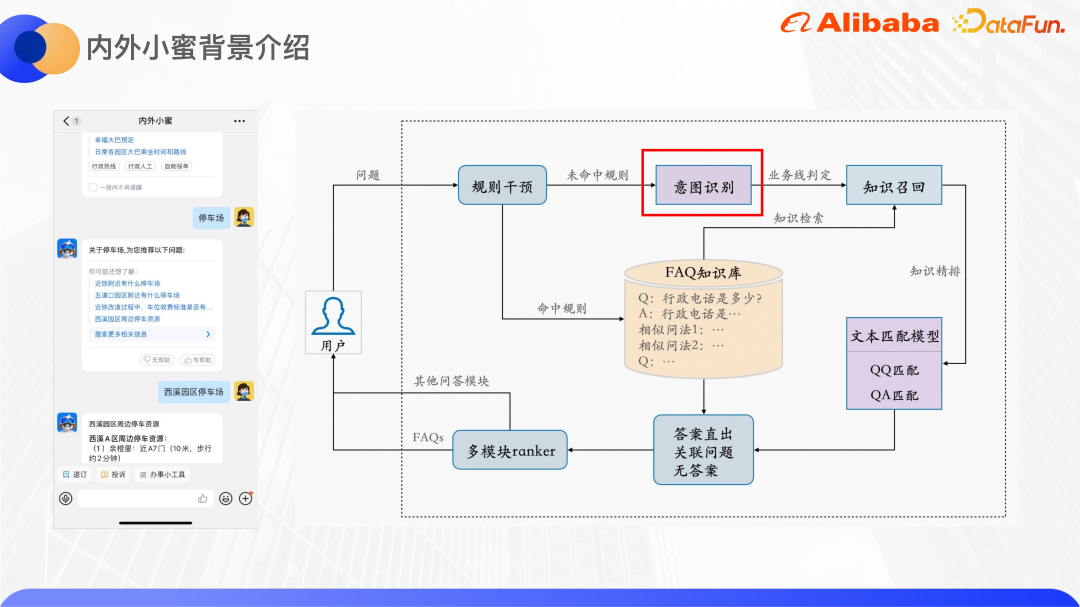

(2) The enterprise employee assistant is Internal and External Xiaomi, an intelligent service bot for Alibaba employees. It brings together many domains such as HR, administration, and IT, and provides enterprise knowledge Q&A as well as quick service channels. Counting the DingTalk entry point and several plug-in entry points, it is open to about 250,000 people in total, making it one of the Group's key traffic entry points.

(3) Industry search corresponds to the business digitalization described earlier. For example, procurement has a portal called the Procurement Mall, where buyers search for products, select them, and submit purchase requests. It works much like an e-commerce search site, except that the users are corporate buyers. The legal compliance business likewise has its own portal, where legal staff can search for contracts and carry out tasks such as contract drafting, approval, and signing.

Generally speaking, each business system or content site in an enterprise has its own search. Business systems need to be isolated from each other, but isolating content sites creates information silos. For example, an engineer who runs into a technical problem might first search ATA for related articles; if nothing turns up, they then search Zhibo, DingTalk Documents, and Yuque Documents in turn, four or five searches in all. This is clearly inefficient. We therefore want to gather this content into a single unified enterprise search, so that all relevant information can be found with one search.

In addition, industry searches with business attributes generally need to be isolated from each other. For example, the users of the Procurement Mall are the Group's buyers, and the users of the contract center are the Group's legal staff. Both scenarios have very few users, so user behavior data is sparse, and recommendation algorithms that rely on behavior data perform much worse. Annotated data in procurement and legal is also scarce: labeling requires domain experts and is expensive, so high-quality datasets are hard to collect.

The last difficulty is matching queries to documents. Search queries are mostly within a dozen or so words: they are short texts that lack context and carry limited semantic information, and short-text understanding is the subject of much academic research. The searched items, by contrast, are mostly long documents of hundreds to thousands of characters, and understanding and representing long documents is also a hard task.

Unified search currently connects more than 40 content sites of various sizes, such as ATA, DingTalk Documents, and Yuque Documents. Alibaba's self-developed Ha3 engine handles recall and coarse ranking. Before recall, the algorithm team's QP (query processing) service is called to analyze the user's query and provide word segmentation, error correction, term weighting, query expansion, NER, and intent recognition. Based on the QP results and business logic, the engine side assembles the query string used for recall. A coarse-ranking plugin on Ha3 supports lightweight ranking models such as GBDT. The fine-ranking stage can use more complex models: a relevance model mainly ensures search accuracy, while a click-through-rate (CTR) prediction model directly optimizes CTR.

Besides ranking, the system also integrates peripheral search features such as the direct-answer area in the search drop-down box, associated-word suggestions, related searches, and trending searches. The upper-layer services it currently supports are mainly unified search in Alibaba Internal and External and Alibaba DingTalk, vertical search for procurement and legal, and query understanding for the ATA, Teambition, and OKR systems.

The figure above shows the general architecture of enterprise search QP. The QP service is deployed on an online algorithm service platform called DII, which supports building and querying KV tables and index tables. DII is a chained service framework overall: complex business logic is split into relatively independent, cohesive modules. For example, the search QP service for Alibaba Internal and External is divided into functional modules such as word segmentation, error correction, query expansion, term weighting, and intent recognition. The chained framework makes collaborative development easy: each person owns their own module, and as long as the upstream and downstream interfaces are agreed on, different QP services can reuse the same module, reducing duplicate code. In addition, the underlying algorithm service is wrapped in a layer that exposes a TPP interface externally. TPP is a mature algorithm recommendation platform within Alibaba; it makes A/B experiments and elastic scaling easy, and its log management, monitoring, and alerting mechanisms are also mature.
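To make the chained-module idea concrete, here is a minimal sketch in plain Python. It assumes every module reads and writes one shared context dict; the module names and their toy logic (whitespace segmentation, a lookup-table corrector, length-based term weights) are hypothetical stand-ins, not the DII API or the real QP algorithms.

```python
from typing import Callable, Dict, List

# Each module receives the shared QP context and returns it, possibly enriched.
Module = Callable[[Dict], Dict]


def segment(ctx: Dict) -> Dict:
    # Hypothetical segmentation: a whitespace split stands in for a real word segmenter.
    ctx["terms"] = ctx["query"].split()
    return ctx


def correct(ctx: Dict) -> Dict:
    # Hypothetical error correction backed by a tiny lookup table.
    fixes = {"wfii": "wifi"}
    ctx["terms"] = [fixes.get(t, t) for t in ctx["terms"]]
    return ctx


def term_weight(ctx: Dict) -> Dict:
    # Hypothetical term weighting: longer terms weigh more, normalized by total term length.
    total = sum(len(t) for t in ctx["terms"]) or 1
    ctx["weights"] = {t: len(t) / total for t in ctx["terms"]}
    return ctx


def run_chain(query: str, modules: List[Module]) -> Dict:
    """Run the modules in order; each relies only on keys written by upstream modules."""
    ctx: Dict = {"query": query}
    for module in modules:
        ctx = module(ctx)
    return ctx


result = run_chain("intranet wfii", [segment, correct, term_weight])
print(result["terms"])  # ['intranet', 'wifi']
```

Because the only contract between modules is the keys in the context, a different QP service can reuse `segment` or `correct` in its own chain without touching their code.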

On the TPP side, the query is preprocessed, a DII request is assembled and sent to the DII algorithm service, and the result is parsed and returned to the caller.

Next, we introduce query intent recognition work in two enterprise scenarios.

Internal and External Xiaomi is built on the Yunxiaomi Q&A engine launched by DAMO Academy, which supports FAQ Q&A, multi-turn task Q&A, and knowledge graph Q&A. The right side of the figure above shows the general framework of the FAQ Q&A engine.

After the user enters a query, it first passes through a rule intervention module, which lets business and operations staff configure rules; if a rule is hit, the configured answer is returned directly. If no rule is hit, the algorithm path takes over, and the intent recognition module assigns the query to the corresponding business line. Each business line's FAQ knowledge base contains many QA pairs, and each question is configured with several similar questions. The query is used to retrieve a candidate set of QA pairs from the knowledge base, and a text matching module then re-ranks them. Based on the model score, the system decides whether to answer directly, recommend related questions, or return no answer. Besides the FAQ engine, there are other Q&A engines such as task-based Q&A and knowledge graph Q&A, so a multi-module ranker finally chooses which engine's answer to show the user.
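The FAQ decision flow above can be sketched as follows. This is a minimal illustration, assuming exact-match rules and two score thresholds; the threshold values, the `Candidate` type, and the `respond` function are hypothetical, and in the real engine the scores would come from the text matching model.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical thresholds; real values would be tuned per business line.
ANSWER_THRESHOLD = 0.8
RELATED_THRESHOLD = 0.5


@dataclass
class Candidate:
    question: str
    answer: str
    score: float  # matching-model score for (query, question)


def respond(query: str, rules: Dict[str, str], candidates: List[Candidate]) -> Tuple[str, object]:
    # 1. Rule intervention: an operator-configured rule short-circuits the model.
    if query in rules:
        return "rule", rules[query]
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    # 2. Confident top match: answer directly.
    if ranked and ranked[0].score >= ANSWER_THRESHOLD:
        return "answer", ranked[0].answer
    # 3. Moderately similar matches: recommend them as related questions.
    related = [c.question for c in ranked if c.score >= RELATED_THRESHOLD]
    if related:
        return "related", related
    # 4. Nothing close enough.
    return "no_answer", None
```

Keeping the rule check ahead of any model call mirrors the flow in the text: operations staff can override the algorithm for specific queries without retraining anything.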

The following focuses on the intent recognition module.

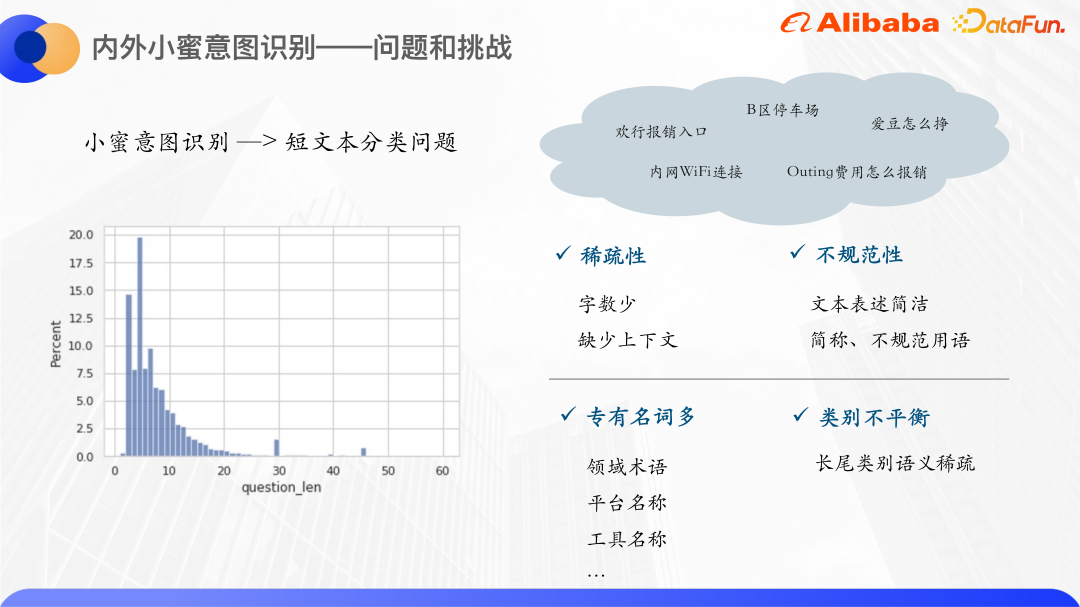

Analyzing Internal and External Xiaomi's user queries over the past year, we found that most queries are 0-20 words long and more than 80% are within 10 words. Intent recognition for Xiaomi is therefore a short-text classification problem. Short texts contain very few words, so representing them with a traditional vector space model produces sparse vectors. Short texts are also often loosely phrased, with many abbreviations and nonstandard terms, so out-of-vocabulary (OOV) words are common.

Another characteristic of Xiaomi's short-text queries is the large number of proper nouns, usually names of internal platforms and tools such as Huanxing and Idou. The text of these names carries no category-related semantic information, so it is hard to learn effective semantic representations for them. We therefore turned to knowledge enhancement to solve this problem.

Knowledge enhancement usually relies on open-source knowledge graphs, but proper nouns used inside an enterprise have no corresponding entities in them, so we looked for knowledge within the company. Alibaba happens to have a knowledge card search feature in which each knowledge card corresponds to an intranet product, which makes it highly relevant to Xiaomi's domain; for example, cards for Huanxing and Idou can be found there. We therefore use enterprise knowledge cards as the knowledge source.

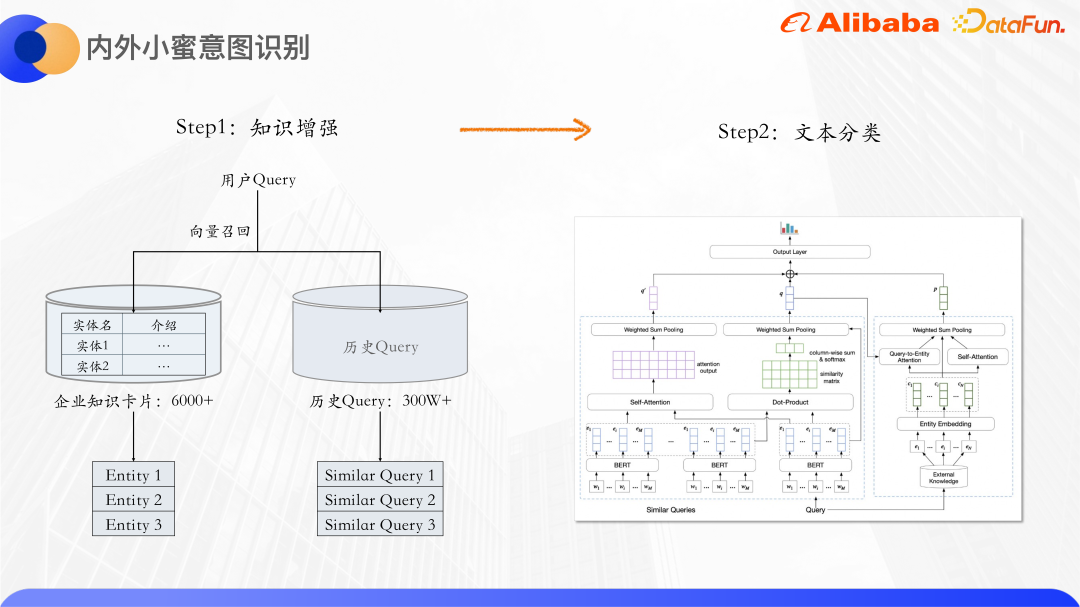

The first step is knowledge enhancement. There are more than 6,000 enterprise knowledge cards in total, each with an entity name and a text description. For each user query, the related knowledge cards are recalled. Historical queries are recalled as well, because many queries are near-duplicates of each other, such as "intranet Wifi connection" and "Wifi intranet connection"; similar queries supplement each other's semantic information and further alleviate the sparsity of short texts. The recalled knowledge card entities and similar queries, together with the original query, are then fed into the text classification model.

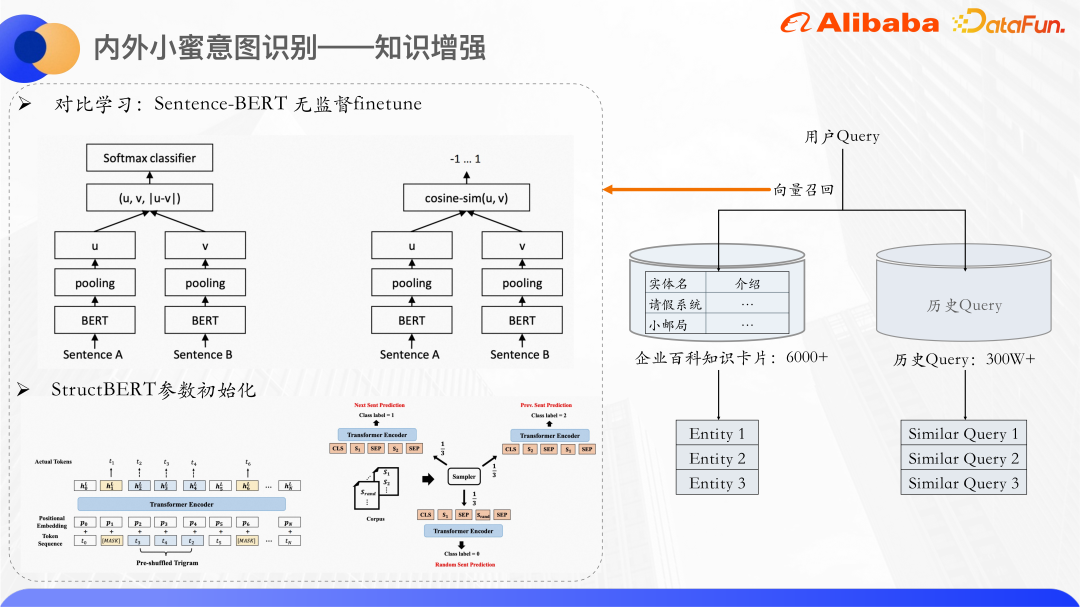

Knowledge card entities and similar queries are recalled by vector retrieval: BERT computes vectors for the query and for each knowledge card's text description. In general, BERT's CLS vector is not used directly as a sentence representation. Many papers have noted that doing so works poorly, because BERT's output vectors suffer from representation degeneration and are not suited to direct unsupervised similarity computation. We therefore apply contrastive learning, which pulls similar samples closer together and spreads dissimilar samples as uniformly as possible.

Specifically, a Sentence-BERT model is fine-tuned on the dataset; its model structure and training method produce better sentence vectors. It is a two-tower structure in which the BERT models on both sides share parameters. Each of the two sentences is fed into BERT, and pooling the hidden states output by BERT yields the two sentence vectors. The optimization objective is the contrastive learning loss InfoNCE.

Positive example: the same sample is fed into the model twice with different dropout masks, so the two output vectors differ slightly.

Negative example: All other sentences in the same batch.

Optimizing this loss yields the Sentence-BERT model used to predict sentence vectors.
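The loss described above (dropout views as positives, the rest of the batch as negatives, the same recipe as unsupervised SimCSE) can be sketched in plain Python. This is a minimal illustration, not the team's training code: the `dropout` helper is a toy stand-in for the encoder's internal dropout, and the temperature of 0.05 is an assumed value.

```python
import math
import random
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb + 1e-12)


def info_nce(view1: List[Vector], view2: List[Vector], temperature: float = 0.05) -> float:
    """Mean InfoNCE loss over a batch.

    view1[i] and view2[i] encode sentence i under two dropout masks (the positive
    pair); view2[j] for j != i are the in-batch negatives.
    """
    total = 0.0
    for i, anchor in enumerate(view1):
        logits = [cosine(anchor, other) / temperature for other in view2]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denominator - logits[i]
    return total / len(view1)


def dropout(vec: Vector, p: float, rng: random.Random) -> Vector:
    # Toy stand-in for encoder dropout: zero each unit with probability p, rescale the rest.
    return [0.0 if rng.random() < p else x / (1 - p) for x in vec]


rng = random.Random(0)
base = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(4)]  # fake sentence encodings
v1 = [dropout(b, 0.1, rng) for b in base]
v2 = [dropout(b, 0.1, rng) for b in base]
print(info_nce(v1, v2))
```

Minimizing this quantity raises the similarity of each sentence's two dropout views relative to every other sentence in the batch, which is what pulls positives together and pushes negatives apart.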

We initialize the BERT part with StructBERT parameters. StructBERT is a pre-trained model proposed by DAMO Academy; its architecture is the same as native BERT, and its core idea is to incorporate language structure information into the pre-training tasks. With the fine-tuned model we obtain sentence vectors for the query and the knowledge cards, compute the cosine similarity between vectors, and recall the top-k most similar knowledge cards and similar queries.
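The recall step is a top-k search by cosine similarity. Below is a brute-force sketch, assuming the vectors have already been produced by the fine-tuned encoder; the toy two-dimensional vectors and the `card:`/`query:` keys are hypothetical. At production scale an approximate nearest-neighbor index would normally replace the linear scan.

```python
import heapq
import math
from typing import Dict, List, Tuple

Vector = List[float]


def normalize(v: Vector) -> Vector:
    n = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / n for x in v]


def top_k(query_vec: Vector, index: Dict[str, Vector], k: int) -> List[Tuple[str, float]]:
    """Return the k keys whose vectors have the highest cosine similarity to query_vec."""
    q = normalize(query_vec)
    # Cosine similarity is the dot product of L2-normalized vectors.
    scored = ((key, sum(a * b for a, b in zip(q, normalize(vec)))) for key, vec in index.items())
    return heapq.nlargest(k, scored, key=lambda item: item[1])


# Hypothetical index mixing knowledge cards and historical queries.
index = {
    "card:Wi-Fi": [1.0, 0.0],
    "card:VPN": [0.7, 0.7],
    "query:how to reset password": [0.0, 1.0],
}
print([key for key, _ in top_k([1.0, 0.1], index, 2)])  # ['card:Wi-Fi', 'card:VPN']
```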

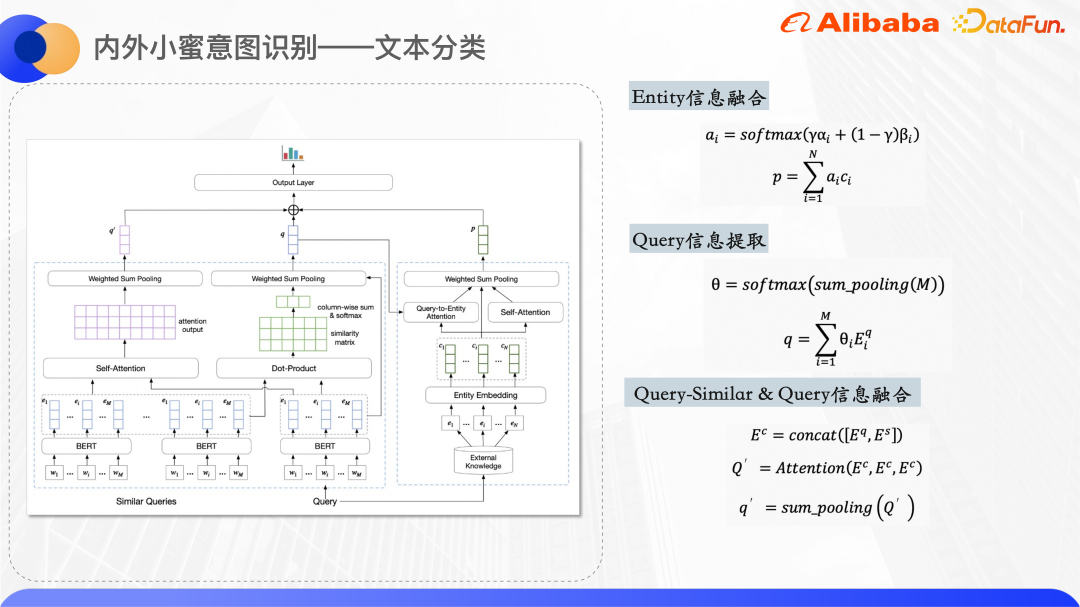

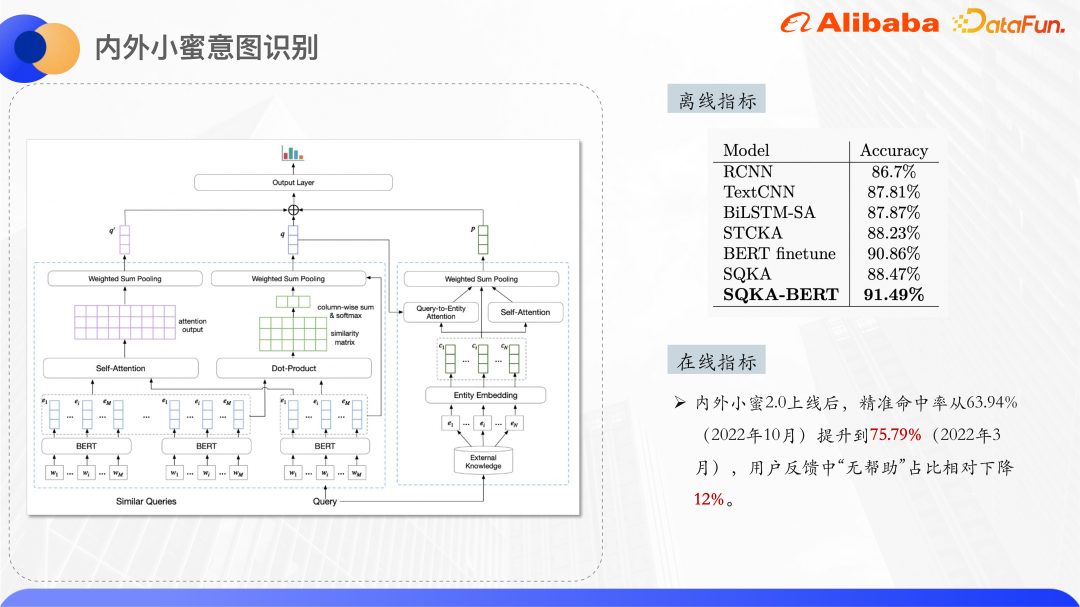

The figure above shows the structure of the text classification model. In the encoding layer, BERT extracts the representation of the original query and the word vectors of the similar queries. Each knowledge card entity has its own entity ID embedding, which is randomly initialized.

The right side of the model processes the entities recalled for the query and produces a unified entity representation. Because short texts are inherently ambiguous, the recalled knowledge card entities contain some noise, and two attention mechanisms help the model focus on the correct entities. Query-to-Entity Attention makes the model attend more to entities related to the query. Entity self-attention increases the weight of entities that are similar to one another and reduces the weight of noisy entities. Combining the two sets of attention weights yields the final entity representation.
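Here is a minimal sketch of the two attention mechanisms. The article does not give their exact form, so several assumptions are made: Query-to-Entity attention is a softmax over query-entity dot products, entity self-attention scores each entity by its summed dot-product agreement with the other recalled entities, and the two weight sets are combined by a simple average. All names are hypothetical.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_entities(query: Vector, entities: List[Vector]) -> Tuple[Vector, List[float]]:
    # Query-to-Entity attention: entities closer to the query get more weight.
    q2e = softmax([dot(query, e) for e in entities])
    # Entity self-attention (assumed form): an entity that agrees with the other
    # recalled entities gets more weight; an isolated, noisy entity gets less.
    self_scores = [
        sum(dot(e_i, e_j) for j, e_j in enumerate(entities) if j != i)
        for i, e_i in enumerate(entities)
    ]
    self_att = softmax(self_scores)
    # Combine the two weight sets (simple average, an assumption) and pool the entities.
    weights = [(a + b) / 2 for a, b in zip(q2e, self_att)]
    dim = len(entities[0])
    pooled = [sum(w * e[d] for w, e in zip(weights, entities)) for d in range(dim)]
    return pooled, weights


# Two entities agree with the query; the third is recall noise.
vec, weights = fuse_entities([1.0, 0.0], [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]])
```

In the real model the entity vectors would be the learned ID embeddings and the query vector would come from BERT; the toy vectors only show that the noisy third entity ends up with the smallest weight.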

The left side of the model processes the original query and the similar queries. We observed that the words a similar query shares with the original query characterize the query's head word to some extent. We therefore compute the dot product between every pair of words to obtain a similarity matrix, then apply sum pooling to get a weight for each word in the original query that reflects how similar it is to the similar queries. This makes the model pay more attention to the head word. The word vectors of the similar queries and the original query are then concatenated to compute the fused semantic information.
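The head-word weighting can be sketched as a word-by-word dot-product matrix followed by sum pooling. This is an illustration under assumptions: the article only says "sum pooling", so the softmax normalization and the weighted-sum readout below are added choices, and the one-hot word vectors are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def center_word_weights(query_tokens: List[Vector], similar_tokens: List[Vector]) -> List[float]:
    # Similarity matrix M[i][j] = dot(original-query word i, similar-query word j).
    matrix = [[dot(q, s) for s in similar_tokens] for q in query_tokens]
    # Sum pooling over the similar-query axis: words shared with similar queries score high.
    pooled = [sum(row) for row in matrix]
    # Normalize to weights with softmax (an assumption; the article only says sum pooling).
    m = max(pooled)
    exps = [math.exp(p - m) for p in pooled]
    total = sum(exps)
    return [e / total for e in exps]


def weighted_query_vector(query_tokens: List[Vector], similar_tokens: List[Vector]) -> Vector:
    # Re-weight the original query's word vectors so the head word dominates.
    weights = center_word_weights(query_tokens, similar_tokens)
    dim = len(query_tokens[0])
    return [sum(w * t[d] for w, t in zip(weights, query_tokens)) for d in range(dim)]


# Hypothetical one-hot word vectors: "how", "to", "wifi", "setup".
how, to, wifi, setup = [1.0, 0, 0, 0], [0, 1.0, 0, 0], [0, 0, 1.0, 0], [0, 0, 0, 1.0]
weights = center_word_weights([how, to, wifi], [wifi, setup])
```

With the original query "how to wifi" and the similar query "wifi setup", the shared word "wifi" receives the largest weight, which is the head-word signal the text describes.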

Finally, the three vectors above are concatenated, and a dense layer predicts the probability of each category.
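The output layer is a concatenation followed by a dense layer and a softmax. A minimal sketch, assuming the three inputs are already-computed vectors and that `weight` and `bias` stand in for the trained dense-layer parameters (hypothetical toy values below):

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def classify(entity_vec: Vector, query_vec: Vector, fused_vec: Vector,
             weight: Matrix, bias: Vector) -> List[float]:
    # Concatenate the three representations into one feature vector.
    features = entity_vec + query_vec + fused_vec
    # Dense layer: one row of weights plus a bias per category.
    logits = [sum(w * x for w, x in zip(row, features)) + b for row, b in zip(weight, bias)]
    # Softmax turns logits into category probabilities.
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


probs = classify([1.0], [0.0], [0.5], weight=[[1.0, 0.0, 0.0], [0.0, 0.0, 0.0]], bias=[0.0, 0.0])
```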

The figure above shows the experimental results: the model outperforms BERT fine-tuning, and even without BERT in the encoding layer it outperforms all non-BERT models.

Take the Procurement Mall as an example. The mall has its own product category system, and each product is assigned to a category before it is listed. To improve search accuracy in the mall, the query needs to be mapped to a specific category, and the search ranking is then adjusted according to that category. The predicted category can also drive sub-category navigation and related searches in the interface.

Category prediction requires a manually labeled dataset, but labeling is expensive in the procurement domain, so we approach the problem as few-shot classification.

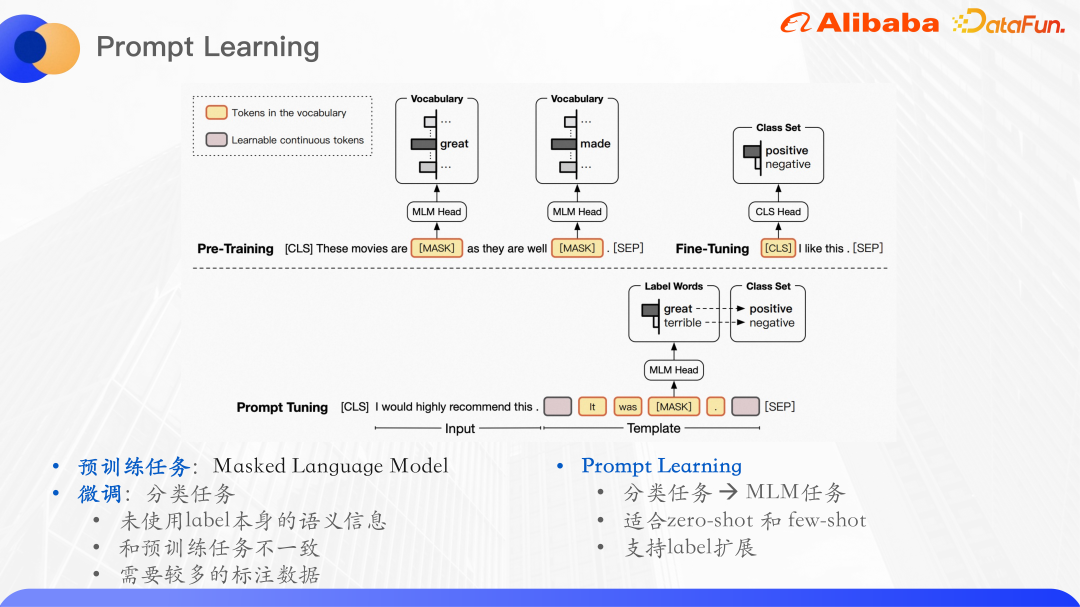

Pre-trained models have shown strong language understanding on NLP tasks. The typical paradigm is to pre-train on a large unlabeled dataset and then fine-tune on supervised downstream tasks. BERT's main pre-training task, for example, is masked language modeling: a portion of the words in a sentence is randomly masked, the masked sentence is fed into the model, and the model predicts the masked words, maximizing their likelihood.
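The masking step of masked language modeling can be sketched as below. This is a simplified illustration: it always substitutes `[MASK]`, whereas BERT's actual recipe selects about 15% of tokens and replaces 80% of them with `[MASK]`, 10% with a random token, and leaves 10% unchanged. Training would then maximize the likelihood of the original tokens at the returned positions.

```python
import random
from typing import List, Tuple

MASK = "[MASK]"


def mask_tokens(tokens: List[str], mask_prob: float, rng: random.Random) -> Tuple[List[str], List[int]]:
    """Replace a random subset of tokens with [MASK]; return the masked input and masked positions."""
    masked, positions = [], []
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            masked.append(MASK)
            positions.append(i)  # the model is trained to recover tokens[i] here
        else:
            masked.append(tok)
    return masked, positions


rng = random.Random(0)
tokens = "the cat sat on the mat".split()
masked, positions = mask_tokens(tokens, 0.15, rng)
```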

Query category prediction is essentially a text classification task. Text classification maps the input to a label ID and does not use the semantic information of the label itself. The fine-tuned classification task is therefore inconsistent with the pre-training task, and the knowledge in the pre-trained language model cannot be fully exploited. This motivated a new paradigm for using pre-trained language models.

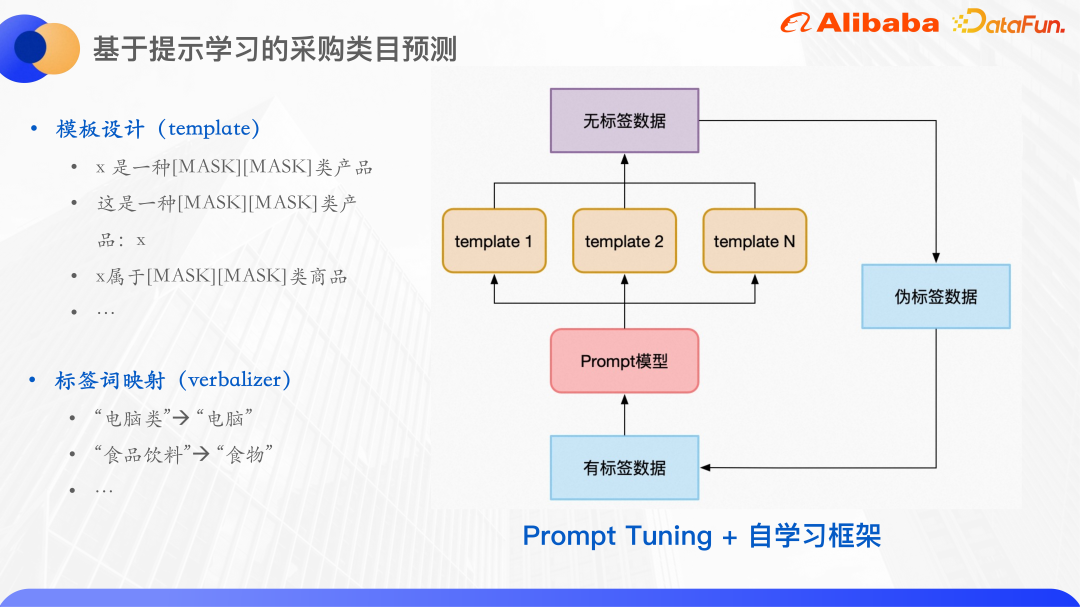

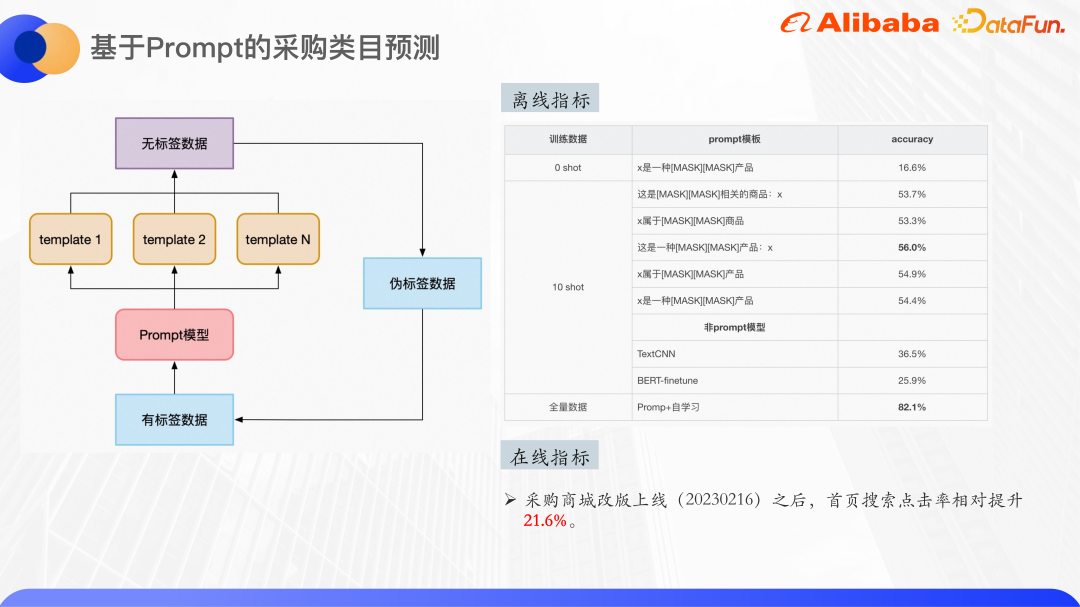

This paradigm is called prompt learning. A prompt can be understood as a cue that helps the pre-trained language model better understand the question a human is asking. Specifically, an extra piece of text is added to the input, in which the words related to the label are masked; the model then predicts the words at the mask positions, turning the classification task into a masked language modeling task. After the words at the mask positions are predicted, they usually need to be mapped to the label set. Procurement category prediction is a typical few-shot classification problem. We constructed several templates for the category prediction task, in which the masked part is the word to be predicted.

For the templates, a mapping from prediction words to label words is established.

First, the prediction words are not necessarily the label words themselves. To simplify training, every sample has the same number of mask characters, while the original label words vary in length (3 characters, 4 characters, and so on). The prediction words are therefore mapped to the label words and unified to two characters.
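The template-plus-mapping scheme can be sketched as follows. The template text, the three categories, and their two-character prediction words are hypothetical examples (Chinese, as in the original procurement setting). The masked language model is abstracted as a function returning one character distribution per `[MASK]` slot; in practice it would be a BERT-style MLM, and `toy_mlm` is a hard-coded stand-in.

```python
import math
from typing import Callable, Dict, List

# Hypothetical mapping: each category label (of varying length) maps to a
# two-character prediction word, so every template needs exactly two [MASK] slots.
VERBALIZER: Dict[str, str] = {
    "办公用品": "办公",  # office supplies
    "电脑配件": "电脑",  # computer accessories
    "食品饮料": "食品",  # food and drinks
}

# Hypothetical template: "{query}, this is a [MASK][MASK]-type product."
TEMPLATE = "{query}，这是[MASK][MASK]类商品。"

# A masked LM abstracted as: prompt -> one {character: probability} dict per [MASK] slot.
MaskedLM = Callable[[str], List[Dict[str, float]]]


def predict_category(query: str, mlm: MaskedLM) -> str:
    slot_probs = mlm(TEMPLATE.format(query=query))

    def score(word: str) -> float:
        # Log-likelihood of the prediction word, one character per mask slot.
        return sum(math.log(slot_probs[i].get(ch, 1e-9)) for i, ch in enumerate(word))

    # Pick the label whose prediction word the MLM finds most likely.
    return max(VERBALIZER, key=lambda label: score(VERBALIZER[label]))


def toy_mlm(prompt: str) -> List[Dict[str, float]]:
    # Stand-in for a real MLM; ignores the prompt and returns fixed distributions.
    return [{"电": 0.6, "办": 0.3, "食": 0.1}, {"脑": 0.7, "公": 0.2, "品": 0.1}]


print(predict_category("机械键盘", toy_mlm))  # 电脑配件
```

Scoring whole prediction words rather than single characters keeps the decision over the label set, so the MLM can never output a category that does not exist.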

In addition, on top of prompt learning we use a self-training framework. First, a model is trained on the labeled data for each template. The models are then ensembled to predict on unlabeled data, and samples with high confidence are selected as pseudo-labeled data and added to the training set. This yields more labeled data, on which another round of models is trained.
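The pseudo-labeling step of one self-training round can be sketched as below. It assumes that ensembling means averaging the per-template models' probability distributions and that "high confidence" means the averaged top probability clears a fixed threshold; both the averaging and the 0.9 threshold are assumptions, and the toy models are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Classifier = Callable[[str], Dict[str, float]]  # text -> {label: probability}


def pseudo_label(unlabeled: List[str], models: List[Classifier], threshold: float) -> List[Tuple[str, str]]:
    """Ensemble the per-template models and keep only confident predictions."""
    selected = []
    for text in unlabeled:
        # Average the probability distributions of all template models.
        avg: Dict[str, float] = {}
        for model in models:
            for label, p in model(text).items():
                avg[label] = avg.get(label, 0.0) + p / len(models)
        label, conf = max(avg.items(), key=lambda item: item[1])
        if conf >= threshold:
            selected.append((text, label))
    return selected


# Hypothetical template models: confident only when "paper" appears in the query.
def model_a(text: str) -> Dict[str, float]:
    return {"office": 0.95, "food": 0.05} if "paper" in text else {"office": 0.5, "food": 0.5}


def model_b(text: str) -> Dict[str, float]:
    return {"office": 0.9, "food": 0.1} if "paper" in text else {"office": 0.4, "food": 0.6}


new_samples = pseudo_label(["a4 paper", "unknown item"], [model_a, model_b], threshold=0.9)
```

The selected pairs would be appended to the labeled set before the next training round; the ambiguous "unknown item" is left out rather than risking a wrong label.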

The figure above shows some experimental results. The pre-trained model is BERT-base and there are 30 classes in total; in the zero-shot setting, classification already reaches 16% accuracy. Trained on the ten-shot dataset, the best templates reach up to 56% accuracy, a clear improvement. The results also show that the choice of template has some effect on performance.

On the same ten-shot dataset, TextCNN and BERT fine-tuning performed far worse than prompt-learning fine-tuning, which shows that prompt learning is very effective in few-shot scenarios.

Finally, using the full dataset (about 4,000 training samples) together with self-training, accuracy reached about 82%. Online, post-processing such as a confidence threshold keeps classification accuracy above 90%.
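A confidence threshold trades coverage for precision: the category is used only when the model is confident, and other queries fall back to ranking without a category. The sketch below shows the gating and how both quantities could be measured; the function names, labels, and threshold values are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def gated_prediction(probs: Sequence[float], labels: Sequence[str], threshold: float) -> Optional[str]:
    # Return the top category only if its probability clears the threshold.
    best = max(range(len(probs)), key=lambda i: probs[i])
    return labels[best] if probs[best] >= threshold else None


def precision_and_coverage(preds: List[Optional[str]], gold: List[str]) -> Tuple[float, float]:
    # Precision is measured only on queries that received a category.
    answered = [(p, g) for p, g in zip(preds, gold) if p is not None]
    coverage = len(answered) / len(gold)
    precision = sum(p == g for p, g in answered) / len(answered) if answered else 0.0
    return precision, coverage


precision, coverage = precision_and_coverage(["a", None, "b", "b"], ["a", "b", "b", "a"])
```

Raising the threshold typically increases precision while lowering coverage, so the threshold would be picked on held-out data to meet an accuracy target.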

Query understanding in enterprise scenarios faces two major difficulties:

(1) Insufficient domain knowledge. General short-text understanding uses knowledge graphs for knowledge enhancement, but because enterprise scenarios are special, open-source knowledge graphs rarely meet their needs, so we use semi-structured data inside the enterprise for knowledge enhancement.

(2) Labeled data is very scarce in some professional domains within the enterprise, and zero-shot and few-shot scenarios are common. The natural approach is a pre-trained model plus prompt learning. However, zero-shot results are not particularly good, because the corpora used by existing pre-trained models do not cover the domain knowledge of our enterprise scenarios.

So could we train an enterprise-level large pre-trained model? Starting from a general corpus, we would add data from the enterprise's internal vertical domains, such as Alibaba's ATA articles, contract data, and code, to train a large pre-trained model, and then use prompt learning or in-context learning to unify tasks such as text classification, NER, and text matching into a single language modeling task.

In addition, for factual tasks such as question answering and search, how to ensure the correctness of answers produced by a generative language model is another question that needs careful thought.

A1: The model is self-developed; there is no paper or public code yet.

A2: The query and similar queries are input at the token level, while knowledge cards use only ID embeddings. Many knowledge card names are internal product names that carry little meaning in terms of textual semantics, and a card's text description is a relatively long text that might introduce too much noise. So the text description is not used, only the ID embedding of each knowledge card.

A3: Ten-shot accuracy is indeed only about 50%, because the pre-trained model does not cover the rare corpora of the procurement domain, and BERT-base has a relatively small number of parameters, so ten-shot results are limited. With the full dataset, however, accuracy exceeds 80%.

A4: This area is still being explored. The main idea is to use approaches similar to reinforcement learning, adding human feedback to steer the language model's output before it is generated.

In addition, post-processing can be added after the large model's output, where knowledge graphs or other knowledge sources are used to check the accuracy of the answers.

The above is the detailed content of Query intent recognition based on knowledge enhancement and pre-trained large model.