Suppose you look at a few photos of an object: can you imagine how it looks from other angles? People can. We can guess what the parts we have not seen look like, or how the object appears from angles we have never viewed. Models can do this too: given a few pictures of a scene, they can imagine images from unseen viewpoints.

Among methods for rendering novel views, the most eye-catching recent one is NeRF (Neural Radiance Fields), which received an Honorable Mention for the ECCV 2020 Best Paper Award. It does not need the complicated 3D reconstruction process used previously. It takes only a few photos, together with the camera pose of each photo, to synthesize images from new viewpoints. NeRF's striking results attracted many vision researchers, and a series of excellent follow-up works appeared.

The difficulty is that such models are complex to build, and there is currently no unified code base for implementing them, which inevitably slows further exploration and development in this field. To address this, the OpenXRLab rendering and generation platform has built XRNeRF, a highly modular algorithm library that helps you quickly build, train and run inference with NeRF-like models.

Open source address: https://github.com/openxrlab/xrnerf

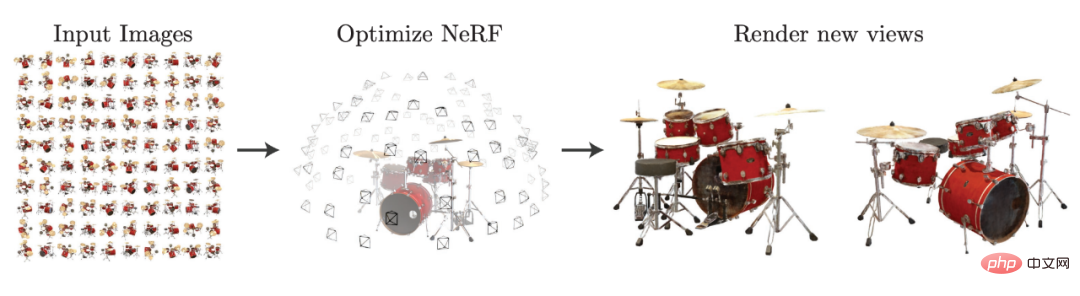

## What is a NeRF-like model

NeRF-like tasks generally take scene information captured from known viewpoints, namely the captured images plus the intrinsic and extrinsic camera parameters of each image, and use it to synthesize images from new viewpoints. The diagram from the NeRF paper illustrates this task clearly.

Figure from arXiv:2003.08934.

NeRF represents a scene as a 5-dimensional function: each input is a 3D position (x, y, z) together with a 2D viewing direction (θ, φ). A multi-layer perceptron (MLP) models the scene as a radiance field, mapping a 3D point and viewing direction to the point's volume density and view-dependent RGB color. Volume rendering then turns this radiance field into photorealistic images from virtual viewpoints.
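To make the rendering step concrete, here is a minimal sketch of the discrete volume rendering rule from the NeRF paper: pixel color C = Σ_i T_i · (1 − exp(−σ_i δ_i)) · c_i, with transmittance T_i = exp(−Σ_{j<i} σ_j δ_j), where σ_i, c_i and δ_i are the density, color and spacing of the i-th sample along a ray. It handles a single ray in plain Python and is not XRNeRF's (batched, PyTorch) implementation; the function name `composite_ray` is hypothetical.

```python
import math
from typing import Sequence, Tuple

RGB = Tuple[float, float, float]


def composite_ray(sigmas: Sequence[float], colors: Sequence[RGB],
                  deltas: Sequence[float]) -> RGB:
    """Alpha-composite the samples along one ray, front to back."""
    transmittance = 1.0  # T_i: fraction of light reaching sample i unoccluded
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for c in range(3):
            pixel[c] += weight * color[c]
        transmittance *= 1.0 - alpha            # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return (pixel[0], pixel[1], pixel[2])
```

For example, `composite_ray([0.0, 1e3], [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)], [0.5, 0.5])` treats the first sample as empty space (density 0, no contribution) and the second as an almost opaque red surface, so the pixel comes out red.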

As the figure above shows, once a radiance field has been built from a set of pictures, images of the drum set can be generated from new viewpoints. Because NeRF obtains novel views without explicit 3D reconstruction, it offers a deep-learning-based paradigm for implicit 3D representation: a deep neural network learns the 3D scene using only posed 2D images as training data.

Since NeRF, NeRF-like models have appeared one after another. Mip-NeRF casts cones instead of rays to better render fine structures; KiloNeRF replaces a single large MLP with thousands of tiny MLPs, reducing computation and enabling real-time rendering; models such as AniNeRF and Neural Body learn to render humans from new viewpoints using short video frames, achieving good view synthesis and pose-driven animation; and GNR uses sparse-view images and geometric priors to achieve human rendering that generalizes across different identities.

Figure: the generalizable implicit human-body representation proposed by GNR enables human rendering with a single model.

## Building wheels for NeRF

Although NeRF algorithms are very popular in research, they are still a relatively new approach, so implementing these models is somewhat more troublesome. With a general framework such as PyTorch or TensorFlow, you first have to find a similar NeRF implementation and then modify it.

This approach has several obvious problems. First, we have to fully understand an implementation before we can change it into what we want, which is a substantial amount of work. Second, because the official implementations of different papers are not unified, comparing the source code of different NeRF models takes a lot of effort; after all, nobody knows whether a given paper's training process hides some novel tricks. Finally, without a unified code base, verifying new ideas for new models is inevitably much slower.

To solve these problems, OpenXRLab built XRNeRF, a unified and highly modular code base for NeRF-like models.

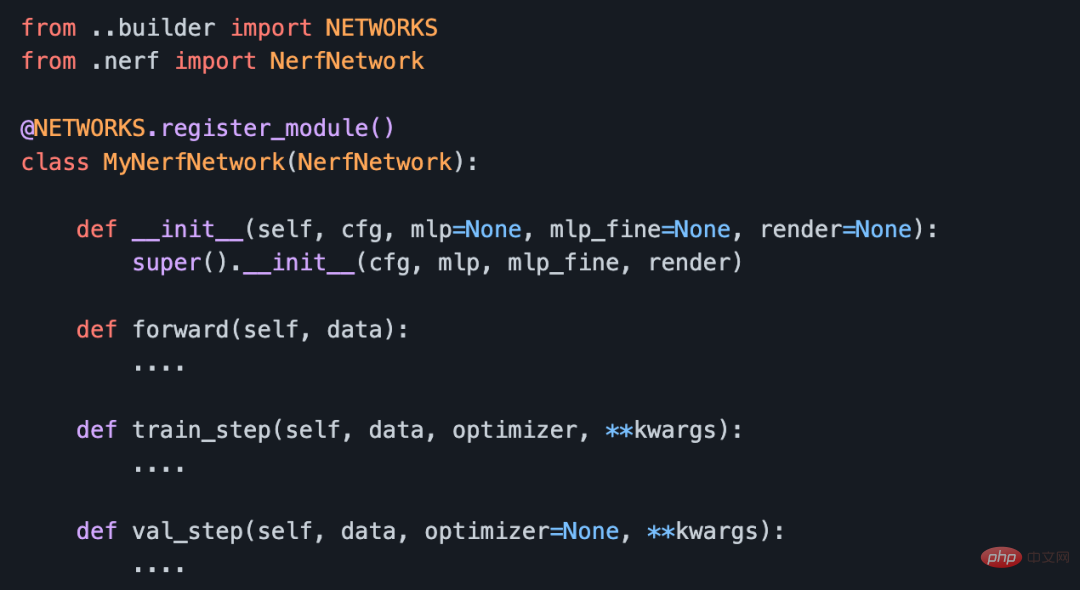

XRNeRF implements many NeRF models, which makes it easy to get started and to reproduce the experimental results of the corresponding papers. XRNeRF divides these models into five modules: datasets, mlp, network, embedder and render. Its ease of use comes from the config mechanism, which assembles different modules into a complete model. This is simple to use and also greatly improves code reuse.

Beyond ease of use, a framework also needs flexibility. Through a separate registry mechanism, XRNeRF lets users customize the specific behavior or implementation of each module, which decouples the modules further and makes the code easier to understand.
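The config-plus-registry idea can be sketched in a few lines of plain Python. This sketch assumes a registry pattern similar to the one popularized by OpenMMLab's MMCV: classes register themselves under their name, and the `type` key of a config dict selects which class to build. `Registry`, `EMBEDDERS` and the two embedder classes are hypothetical names for illustration, not XRNeRF's actual API.

```python
from typing import Any, Dict, Type


class Registry:
    """Maps class names to classes so that configs can refer to modules by name."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._modules: Dict[str, Type] = {}

    def register_module(self, cls: Type) -> Type:
        if cls.__name__ in self._modules:
            raise KeyError(f"{cls.__name__} is already registered in {self.name}")
        self._modules[cls.__name__] = cls
        return cls  # returning the class lets this method be used as a decorator

    def build(self, cfg: Dict[str, Any]) -> Any:
        args = dict(cfg)  # copy so the caller's config is not mutated
        cls_name = args.pop("type")
        if cls_name not in self._modules:
            raise KeyError(f"{cls_name} is not registered in {self.name}")
        return self._modules[cls_name](**args)


EMBEDDERS = Registry("embedder")  # hypothetical registry for embedder modules


@EMBEDDERS.register_module
class PositionalEmbedder:
    def __init__(self, num_freqs: int) -> None:
        self.num_freqs = num_freqs


@EMBEDDERS.register_module
class IdentityEmbedder:
    def __init__(self) -> None:
        pass


# Swapping modules only means editing the config dict, not the model code.
cfg = dict(type="PositionalEmbedder", num_freqs=10)
embedder = EMBEDDERS.build(cfg)
```

The design choice here is that model code never imports concrete classes directly; it only calls `build` on a config, so a new module becomes available as soon as it is registered.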

In addition, every algorithm in XRNeRF follows a pipeline pattern. The data pipeline reads the raw data and, after a series of processing steps, produces the model's input; the model pipeline then processes that input to produce the corresponding output. These pipelines tie the config mechanism and the registry mechanism together into a complete architecture.

XRNeRF implements many core NeRF models and strings them together through the above three mechanisms to build a highly modular code framework that is both easy to use and flexible.

XRNeRF is a PyTorch-based algorithm library for NeRF-like models. It reproduces 8 classic papers covering both scene and human-body rendering. Compared with building models from scratch, XRNeRF markedly improves modeling efficiency, cost and flexibility, and it comes with complete documentation, examples and an issue feedback channel. In summary, XRNeRF has the following five core features.

1. Implements many mainstream, core algorithms

These include the pioneering NeRF, Neural Body (CVPR 2021 Best Paper Candidate), Mip-NeRF (ICCV 2021 Best Paper Honorable Mention) and Instant NGP (SIGGRAPH 2022 Best Paper).

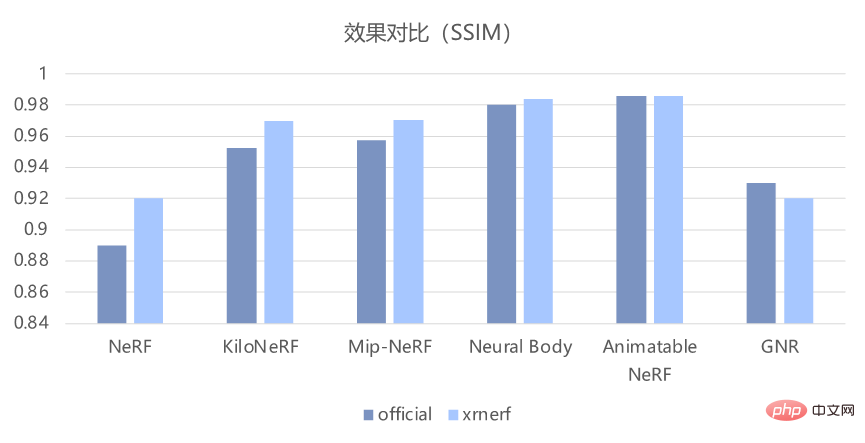

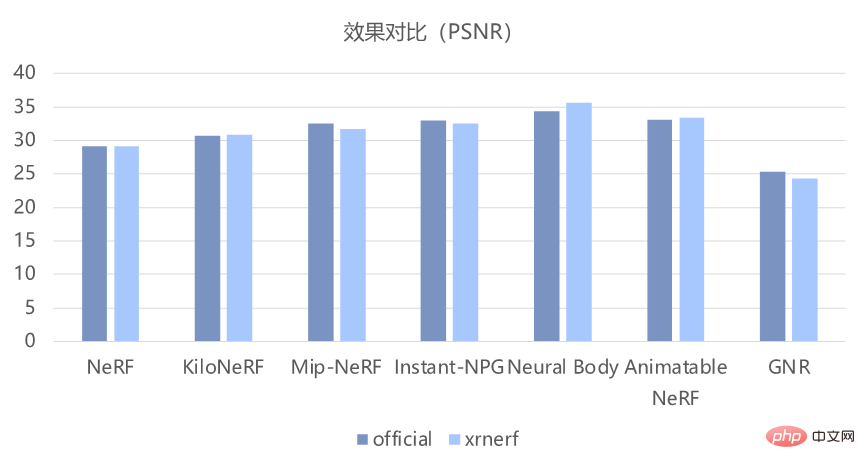

While implementing these models, XRNeRF also keeps the reproduced results essentially consistent with those reported in the papers. As the figures show, judging by the objective PSNR and SSIM metrics, it reproduces the results of the original code well.
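For reference, PSNR is derived from the mean squared error (MSE) between a rendered image and the ground truth: PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value (1.0 for images scaled to [0, 1]). Below is a minimal sketch over flattened pixel lists; the function name is illustrative. SSIM, which compares local luminance, contrast and structure, is more involved and is usually taken from a library such as scikit-image.

```python
import math
from typing import Sequence


def psnr(pred: Sequence[float], target: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the target."""
    if len(pred) != len(target) or len(pred) == 0:
        raise ValueError("inputs must be non-empty and have the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0.0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)
```

For instance, every pixel being off by 0.1 on a [0, 1] scale gives an MSE of 0.01 and a PSNR of 20 dB.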

2. Modular design

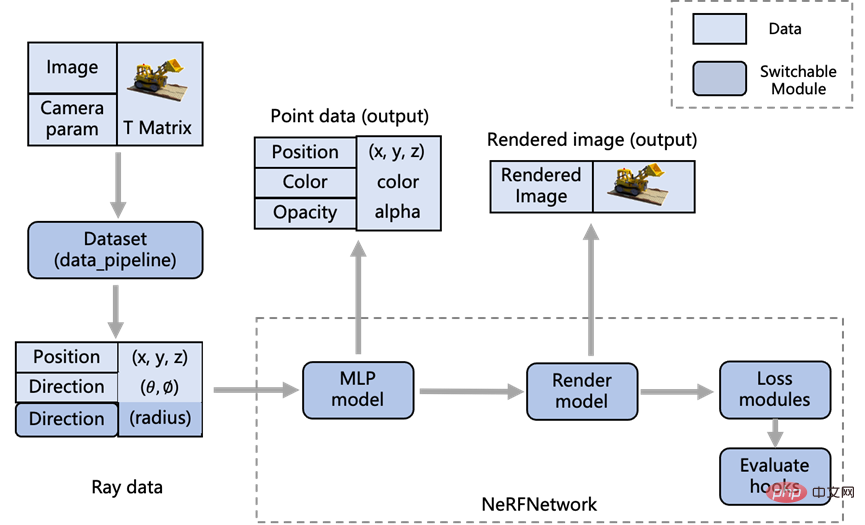

XRNeRF modularizes the entire code framework to maximize code reuse and make it easy for researchers to read and modify existing code. Based on an analysis of existing NeRF-like methods, XRNeRF's module design is shown in the following figure:

The advantage of modularity is that to change the data format, we only need to modify the logic in the Dataset module; to change how images are rendered, we only need to modify the render module.

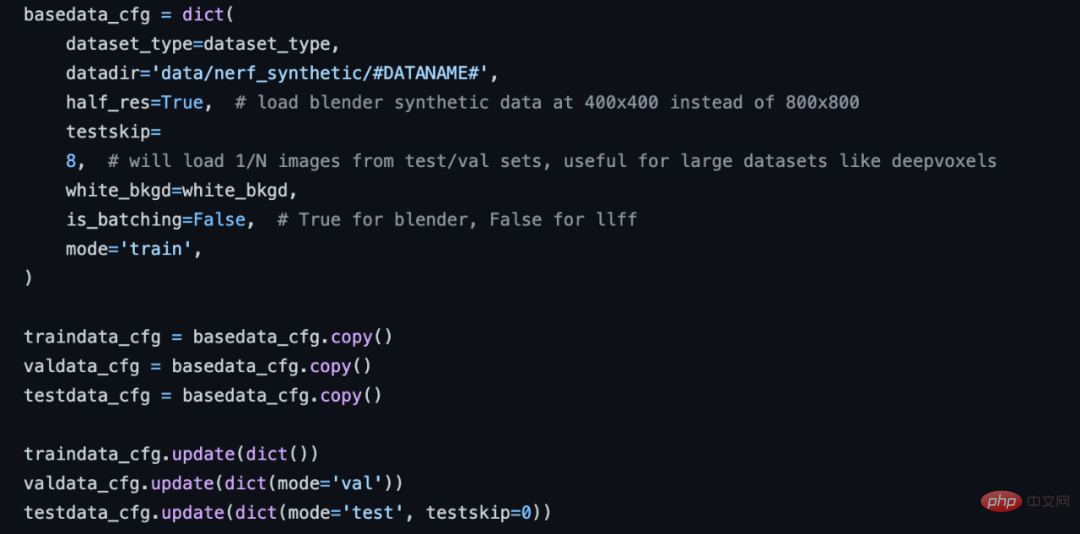

3. Standard data processing pipeline

Data preprocessing for NeRF algorithms involves many complex and varied problems, so XRNeRF provides a standard data processing pipeline built by chaining multiple data processing operations in series. You only need to edit the data pipeline part of the config file to set up data processing.

Figure: the data pipeline section of a NeRF config.

XRNeRF already implements the data processing ops required by multiple datasets; you only need to list these ops in order in the config to build the data processing pipeline. To add a new op later, you implement it in the corresponding folder, and a single line in the config adds it to the pipeline.
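A config-driven data pipeline of this kind can be sketched as a list of op configs that are built in order and applied one after another. The op names (`NormalizeImage`, `SelectKeys`), the sample keys, `register_op` and `Compose` are hypothetical stand-ins, not XRNeRF's real ops; the point is that adding a new op means writing one class and adding one line to the list.

```python
from typing import Any, Dict, List

Sample = Dict[str, Any]
PIPELINE_OPS: Dict[str, type] = {}  # hypothetical op registry: name -> class


def register_op(cls: type) -> type:
    """Class decorator that makes an op addressable by name from a config."""
    PIPELINE_OPS[cls.__name__] = cls
    return cls


@register_op
class NormalizeImage:
    """Hypothetical op: divide 8-bit pixel values by `scale` to map them to [0, 1]."""

    def __init__(self, scale: float = 255.0) -> None:
        self.scale = scale

    def __call__(self, sample: Sample) -> Sample:
        sample = dict(sample)  # avoid mutating the caller's dict
        sample["image"] = [v / self.scale for v in sample["image"]]
        return sample


@register_op
class SelectKeys:
    """Hypothetical op: keep only the fields the model needs."""

    def __init__(self, keys: List[str]) -> None:
        self.keys = keys

    def __call__(self, sample: Sample) -> Sample:
        return {k: sample[k] for k in self.keys}


class Compose:
    """Builds each op from its config dict and applies the ops in list order."""

    def __init__(self, op_cfgs: List[Dict[str, Any]]) -> None:
        self.ops = []
        for cfg in op_cfgs:
            args = dict(cfg)  # copy so the config list is left untouched
            self.ops.append(PIPELINE_OPS[args.pop("type")](**args))

    def __call__(self, sample: Sample) -> Sample:
        for op in self.ops:
            sample = op(sample)
        return sample


# The pipeline is declared as data, in the order the ops should run.
train_pipeline = [
    dict(type="NormalizeImage", scale=255.0),
    dict(type="SelectKeys", keys=["image", "pose"]),
]
pipeline = Compose(train_pipeline)
```

Calling `pipeline({"image": [0, 255], "pose": "c2w", "path": "r_0.png"})` normalizes the pixels and drops the unused `path` field.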

4. Modular network construction method

In XRNeRF, a model consists mainly of an embedder, an MLP and a render model, connected through a network module. These components are decoupled from one another, so modules can be swapped between different algorithms.

The embedder takes a point's position and viewing direction and outputs embedded features; the MLP takes the embedder's output and predicts the density and RGB color of each sample point; the render model takes the MLP's output and integrates along the sample points on each ray to obtain the RGB value of one image pixel. A standard network module connects these three modules into a complete model.
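The embedder-to-MLP-to-renderer flow can be sketched with three interchangeable callables joined by a network object. In this sketch the embedder is the positional encoding γ(p) from the NeRF paper (the raw coordinates plus sin and cos of 2^k·π·p for k = 0 … L−1), applied to positions only; the viewing direction is omitted for brevity. `ToyMLP` is a hypothetical untrained stand-in, and the renderer applies standard alpha compositing along the ray. None of these class names are XRNeRF's.

```python
import math
from typing import Callable, List, Sequence, Tuple

RGB = Tuple[float, float, float]
Sample = Tuple[float, RGB]  # (density, color) predicted for one point


class PositionalEmbedder:
    """gamma(p): raw coordinates followed by sin/cos at L octave-spaced frequencies."""

    def __init__(self, num_freqs: int) -> None:
        self.num_freqs = num_freqs

    def __call__(self, p: Sequence[float]) -> List[float]:
        feats = list(p)
        for k in range(self.num_freqs):
            freq = (2.0 ** k) * math.pi
            feats += [math.sin(freq * x) for x in p]
            feats += [math.cos(freq * x) for x in p]
        return feats  # length d + 2 * L * d for a d-dimensional point


class ToyMLP:
    """Hypothetical stand-in for a trained MLP: grey color, density from the raw z value."""

    def __call__(self, feats: List[float]) -> Sample:
        return max(0.0, feats[2]), (0.5, 0.5, 0.5)


class VolumeRenderer:
    """Alpha-composites (density, color) samples along one ray into a pixel color."""

    def __call__(self, samples: List[Sample], deltas: Sequence[float]) -> RGB:
        transmittance, rgb = 1.0, [0.0, 0.0, 0.0]
        for (sigma, color), delta in zip(samples, deltas):
            alpha = 1.0 - math.exp(-sigma * delta)
            for c in range(3):
                rgb[c] += transmittance * alpha * color[c]
            transmittance *= 1.0 - alpha
        return (rgb[0], rgb[1], rgb[2])


class Network:
    """Wires the three modules together; each can be swapped independently."""

    def __init__(self, embedder: Callable, mlp: Callable, renderer: Callable) -> None:
        self.embedder, self.mlp, self.renderer = embedder, mlp, renderer

    def render_ray(self, points: Sequence[Sequence[float]], deltas: Sequence[float]) -> RGB:
        samples = [self.mlp(self.embedder(p)) for p in points]
        return self.renderer(samples, deltas)


net = Network(PositionalEmbedder(num_freqs=4), ToyMLP(), VolumeRenderer())
```

Because `Network` only calls its three members, replacing, say, the positional encoding with a different embedder requires no change to the MLP or renderer.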

Figure: code structure of a custom network module.

5. Good reproduction effect

XRNeRF can train a network in as little as 60 seconds and render in real time at 30 frames per second, and it supports high-definition, anti-aliased, multi-scale rendering of both scenes and human bodies. Judged by either the objective PSNR and SSIM metrics or the subjective demo results, XRNeRF reproduces the results of the original code well.

## Using XRNeRF

XRNeRF's features look very good, and it is also simple and convenient to use. Installation, for example, depends on many components, such as PyTorch, a CUDA environment and vision-processing libraries, but XRNeRF provides a Docker environment, so the image can be built directly from its Dockerfile.

We tried it. Compared with setting up each runtime environment and package step by step, a single docker build command is clearly much more convenient. In addition, the Dockerfile is configured with mirror sources inside China, so the image builds quickly there and network problems are largely a non-issue.

After building the image and starting a container from it, we can copy the project code and data into the container with the docker cp command, or map the project directory into the container directly with the -v option when creating the container. Note that the dataset must be placed in a specific location, such as the data folder under the XRNeRF project; otherwise the config file has to be changed.

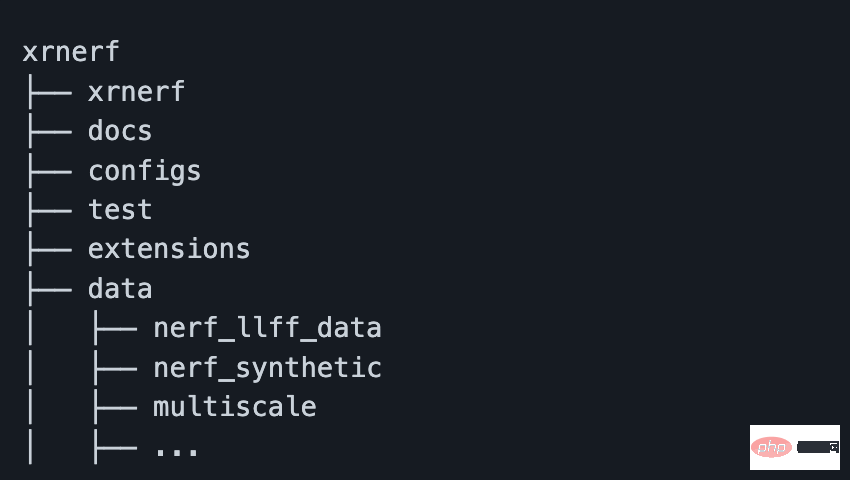

Generally speaking, after downloading the data, the approximate folder structure is as shown below:

Now the environment, data and code are all ready. A single short command trains and validates a NeRF model:

python run_nerf.py --config configs/nerf/nerf_blender_base01.py --dataname lego

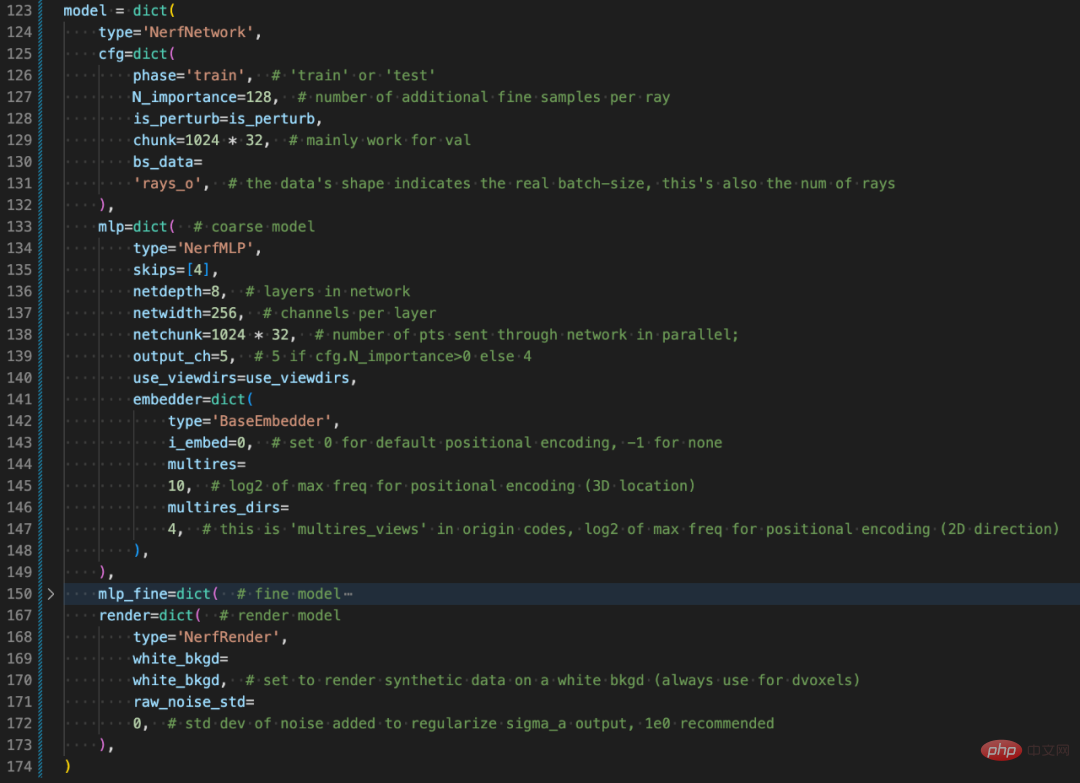

Here, dataname selects a specific dataset under the data directory, and config specifies the model's configuration file. Because XRNeRF is highly modular, its configs are built from dictionaries. This may look a little cumbersome at first glance, but once you understand XRNeRF's design, the configs are easy to read.

In our view, the config file (nerf_blender_base01.py) contains everything needed to train the model, including the optimizer, distributed strategy, model architecture, data preprocessing and iteration settings, and even many image-processing options. In short, apart from the concrete code implementation, the config file describes the entire training and inference process.
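To illustrate the dictionary-based style, here is a hypothetical skeleton of such a config file. Every key, `type` name and path below is an assumption chosen for illustration; none of it is copied from nerf_blender_base01.py, whose actual fields should be checked in the repository.

```python
# Hypothetical skeleton of a dict-style training config (illustrative names only).

# Optimization and schedule
optimizer = dict(type="Adam", lr=5e-4, betas=(0.9, 0.999))
max_iters = 200000

# Distributed strategy
dist_params = dict(backend="nccl")

# Model: each sub-dict selects a registered module by its "type" name
model = dict(
    type="ExampleNetwork",
    embedder=dict(type="ExampleEmbedder", num_freqs=10),
    mlp=dict(type="ExampleMLP", depth=8, width=256),
    render=dict(type="ExampleRender", white_background=True),
)

# Data: dataset location (hypothetical path) and the ordered preprocessing ops
data = dict(
    train=dict(
        type="ExampleBlenderDataset",
        datadir="data/lego",
        pipeline=[
            dict(type="ExampleLoadImage"),
            dict(type="ExampleSampleRays", n_rays=1024),
        ],
    ),
)
```

Keeping every setting as plain Python data means a training script can load the file, pass each sub-dict to the matching registry, and reproduce a run from the config alone.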

Figure: the config section describing the model structure.

Overall, our experience with XRNeRF was fairly smooth, from setting up the basic environment to running the training task. Editing the config file or implementing specific ops also gives a high degree of modeling flexibility. Compared with building models directly on a deep learning framework, XRNeRF removes a good deal of development work, letting researchers and algorithm engineers spend more time on model and task innovation.

NeRF-like models remain a research focus in computer vision. Like Hugging Face's Transformers library, a unified code base such as XRNeRF can gather a growing body of excellent research work, new code and new ideas. In turn, XRNeRF can greatly accelerate researchers' exploration of NeRF-like models, make it easier to apply this new field to new scenarios and tasks, and help realize NeRF's potential sooner.
