After open-sourcing SAM, the “segment anything” model, Meta is pushing further down the road toward “visual foundation models”.

This time, they have open-sourced a set of models called DINOv2. These models produce high-performance visual representations that can be used, without fine-tuning, for downstream tasks such as classification, segmentation, image retrieval, and depth estimation.

## This set of models has the following characteristics:

Learning task-agnostic pre-trained representations has become the standard in natural language processing. These features can be used "as-is" (no fine-tuning required), and they perform significantly better on downstream tasks than task-specific models. This success comes from pre-training on large amounts of raw text with auxiliary objectives that require no supervision, such as language modeling or word vectors.

As this paradigm shift takes hold in NLP, similar "foundation" models are expected to emerge in computer vision. Such models should produce visual features that work "out of the box" on any task, whether at the image level (e.g., image classification) or at the pixel level (e.g., segmentation).
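Using features "out of the box" means the pre-trained encoder stays frozen and a downstream task only fits something cheap on top of its outputs. As an illustration, here is a minimal k-nearest-neighbor classifier over precomputed embeddings. The random vectors are a hypothetical stand-in for features from a frozen encoder, and `knn_predict` is an illustrative helper, not part of any released code.

```python
import numpy as np

def knn_predict(train_feats, train_labels, query_feats, k=5):
    """Classify query embeddings by majority vote among the k most
    cosine-similar training embeddings. The encoder that produced the
    features is assumed frozen: nothing here updates it."""
    # L2-normalize so that a dot product equals cosine similarity
    train = train_feats / np.linalg.norm(train_feats, axis=1, keepdims=True)
    query = query_feats / np.linalg.norm(query_feats, axis=1, keepdims=True)
    sims = query @ train.T                      # (n_query, n_train)
    topk = np.argsort(-sims, axis=1)[:, :k]     # indices of the k nearest neighbors
    neighbor_labels = train_labels[topk]        # (n_query, k)
    # Majority vote per query row
    return np.array([np.bincount(row).argmax() for row in neighbor_labels])

rng = np.random.default_rng(0)
# Hypothetical stand-in for frozen features: two well-separated classes
centers = rng.normal(size=(2, 64)) * 5
train_labels = np.repeat([0, 1], 50)
train_feats = centers[train_labels] + rng.normal(size=(100, 64))
query_labels = np.repeat([0, 1], 10)
query_feats = centers[query_labels] + rng.normal(size=(20, 64))

pred = knn_predict(train_feats, train_labels, query_feats, k=5)
print("accuracy:", (pred == query_labels).mean())
```

No gradient ever flows into the feature extractor here, which is what makes this kind of evaluation a test of the features themselves.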

The most promising efforts toward such foundation models have focused on text-guided pre-training, that is, using some form of textual supervision to guide feature learning. This form of pre-training limits the information about the image that can be retained, because a caption only approximates the rich information in an image, and finer, complex pixel-level information may not be captured by this supervision. Furthermore, these image encoders require already-aligned text-image corpora and lack the flexibility of their text counterparts, i.e., they cannot learn from raw data alone.

An alternative to text-guided pre-training is self-supervised learning, in which features are learned from images alone. These methods are conceptually closer to pretext tasks such as language modeling and can capture information at both the image and the pixel level. However, despite their potential to learn general-purpose features, most advances in self-supervised learning have been made with pre-training on ImageNet-1k, a small curated dataset. Some researchers have tried to scale these methods beyond ImageNet-1k, but they focused on uncurated datasets, which often led to a significant drop in feature quality. This is due to a lack of control over data quality and diversity, both of which are critical to producing good results.

In this work, the researchers explore whether self-supervised learning can learn general-purpose visual features when pre-trained on a large amount of curated data. They revisit existing discriminative self-supervised methods that learn features at both the image and patch level, such as iBOT, and reconsider some of their design choices for larger datasets. Most of their technical contributions are aimed at stabilizing and accelerating discriminative self-supervised learning when scaling up model and data size. These improvements make their method about 2x faster and let it use only about one-third of the memory of similar discriminative self-supervised methods, which allows longer training runs and larger batch sizes.

For the pre-training data, they built an automated pipeline that filters and rebalances a dataset drawn from a large collection of unfiltered images. It is inspired by pipelines used in NLP: it relies on data similarity instead of external metadata and requires no manual annotation. A major difficulty when working with images is rebalancing concepts and avoiding overfitting to a few dominant modes. In this work, a naive clustering approach handles this problem well, and the researchers assembled a small but diverse corpus of 142M images to validate their approach.
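The article only says that a naive clustering method solves the rebalancing problem. One simple way clustering can rebalance a collection is to run k-means on image embeddings and then cap how many images each cluster contributes. The sketch below does exactly that on toy 2-D data; the function names, the per-cluster cap, and the farthest-point initialization are all assumptions for illustration, not the authors' pipeline.

```python
import numpy as np

def farthest_point_init(x, k, seed=0):
    """Pick k starting centroids, each as far as possible from those already chosen."""
    rng = np.random.default_rng(seed)
    chosen = [int(rng.integers(len(x)))]
    dist = ((x - x[chosen[0]]) ** 2).sum(axis=1)
    for _ in range(k - 1):
        nxt = int(dist.argmax())
        chosen.append(nxt)
        dist = np.minimum(dist, ((x - x[nxt]) ** 2).sum(axis=1))
    return x[chosen].astype(float)

def kmeans(x, k, iters=20, seed=0):
    """Plain Lloyd's k-means; returns one cluster id per row of x."""
    centroids = farthest_point_init(x, k, seed)
    for _ in range(iters):
        # Squared Euclidean distance from every point to every centroid
        d = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        assign = d.argmin(axis=1)
        for c in range(k):
            members = x[assign == c]
            if len(members):  # keep the old centroid if a cluster empties
                centroids[c] = members.mean(axis=0)
    return assign

def balanced_sample(x, k, per_cluster, seed=0):
    """Cluster the embeddings, then keep at most `per_cluster` items per
    cluster so that a dominant mode cannot swamp rarer concepts."""
    rng = np.random.default_rng(seed)
    assign = kmeans(x, k, seed=seed)
    keep = []
    for c in range(k):
        idx = np.flatnonzero(assign == c)
        keep.extend(rng.choice(idx, size=min(per_cluster, len(idx)), replace=False))
    return np.sort(np.array(keep, dtype=int)), assign

rng = np.random.default_rng(1)
modes = np.array([[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]])
sizes = [900, 50, 50]  # one dominant concept, two rare ones
x = np.concatenate([m + rng.normal(size=(n, 2)) for m, n in zip(modes, sizes)])
keep, assign = balanced_sample(x, k=3, per_cluster=50)
print(len(x), "->", len(keep), "images after rebalancing")
```

After rebalancing, the dominant mode no longer makes up 90% of the data; each concept contributes equally.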

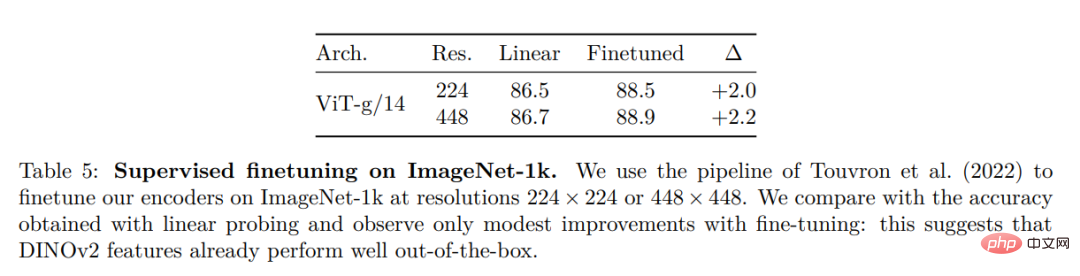

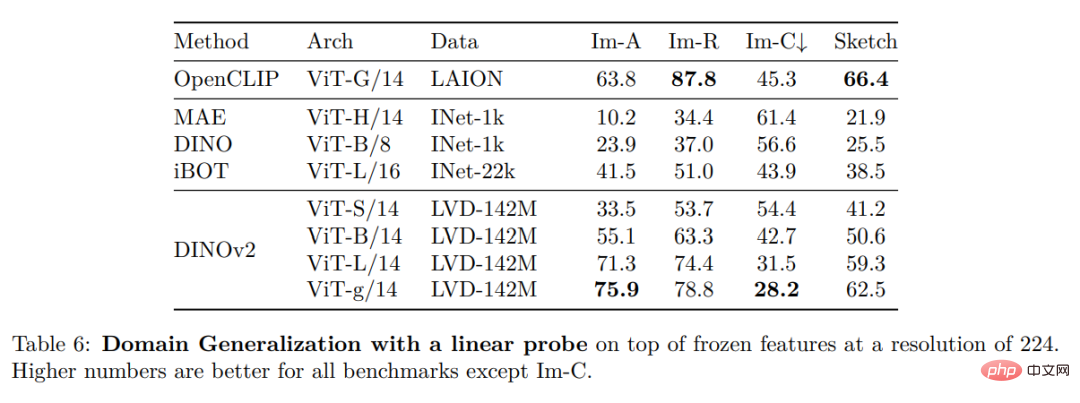

Finally, the researchers provide a variety of pre-trained vision models, called DINOv2, trained on their data with different Vision Transformer (ViT) architectures. They release all models and the code to retrain DINOv2 on any data. As they scale up, they validate the quality of DINOv2 on a variety of image-level and pixel-level computer vision benchmarks, as shown in Figure 2. They conclude that self-supervised pre-training alone is a good candidate for learning transferable frozen features, comparable to the best publicly available weakly supervised models.

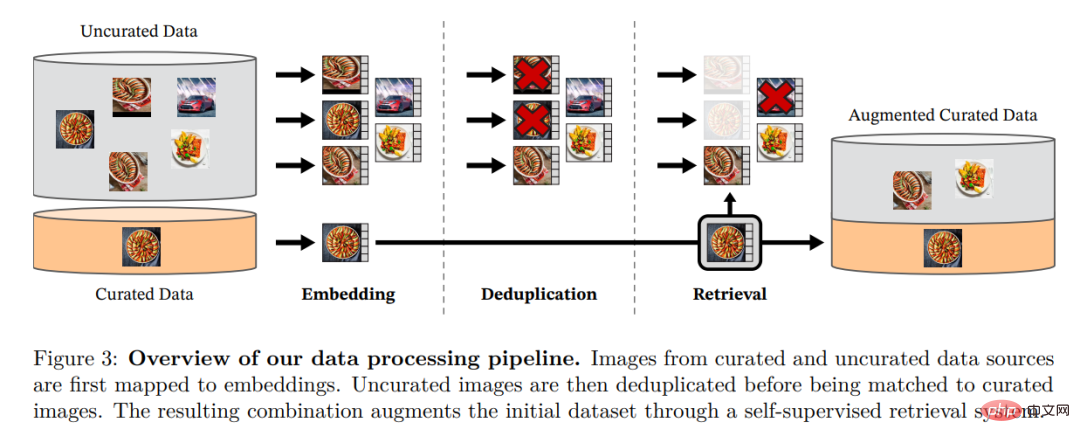

The researchers assembled their curated LVD-142M dataset by retrieving, from a large pool of unfiltered data, images that are close to images in several curated datasets. In their paper, they describe the main components of the data pipeline: the curated and uncurated data sources, the image deduplication step, and the retrieval system. The entire pipeline requires no metadata or text and works directly on images, as shown in Figure 3. Readers are referred to Appendix A of the paper for further details on the methodology.

Figure 3: Overview of the data processing pipeline. Images from curated and uncurated data sources are first mapped to embeddings. The uncurated images are then deduplicated before being matched to the curated images. The resulting combination augments the initial dataset through a self-supervised retrieval system.
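The last two stages of the caption (deduplicate, then retrieve) can be sketched on precomputed embeddings. This is a minimal brute-force version under stated assumptions: cosine similarity as the metric, a hypothetical 0.95 near-duplicate threshold, and k=4 retrieved neighbors per curated image. At the 142M-image scale of the real pipeline, an approximate nearest-neighbor index would be needed instead of the exhaustive comparisons used here.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def deduplicate(emb, threshold=0.95):
    """Greedy near-duplicate removal: keep an image only if its cosine
    similarity to every already-kept image is below `threshold`."""
    emb = l2_normalize(emb)
    kept = []
    for i in range(len(emb)):
        if not kept or (emb[kept] @ emb[i]).max() < threshold:
            kept.append(i)
    return np.array(kept)

def retrieve(curated, uncurated, k=4):
    """For each curated embedding, find its k nearest uncurated embeddings
    by cosine similarity; return the union of those indices."""
    sims = l2_normalize(curated) @ l2_normalize(uncurated).T
    nearest = np.argsort(-sims, axis=1)[:, :k]
    return np.unique(nearest)

rng = np.random.default_rng(0)
uncurated = rng.normal(size=(200, 32))
# Append near-copies of the first 20 images to simulate duplicates
uncurated = np.concatenate([uncurated, uncurated[:20] + 1e-3 * rng.normal(size=(20, 32))])
curated = rng.normal(size=(5, 32))

kept = deduplicate(uncurated)
pool = uncurated[kept]
selected = kept[retrieve(curated, pool, k=4)]  # indices into the original uncurated array
print(len(kept), "unique images,", len(selected), "retrieved")
```

Deduplicating before retrieval keeps the curated "seed" images from pulling in many copies of the same picture.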

The researchers learn their features with a discriminative self-supervised method that can be seen as a combination of the DINO and iBOT losses with SwAV-style centering. They also add a regularizer that spreads features out and a short high-resolution training phase.
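To make two of these ingredients concrete, here is a NumPy sketch under simplifying assumptions: an image-level DINO-style cross-entropy whose teacher targets are centered with the Sinkhorn-Knopp procedure used by SwAV, and a nearest-neighbor spreading regularizer in the spirit of KoLeo, the regularizer named in the DINOv2 paper. It omits the iBOT masked-patch term, multi-crop, the EMA teacher, and the projection heads, and the temperatures are illustrative values rather than the paper's settings.

```python
import numpy as np

def log_softmax(x, temp):
    z = x / temp
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    return z - np.log(np.exp(z).sum(axis=1, keepdims=True))

def sinkhorn_knopp(teacher_logits, temp=0.05, iters=3):
    """SwAV-style centering: turn teacher scores (B x K prototypes) into soft
    assignments whose prototype usage is roughly uniform across the batch."""
    q = np.exp((teacher_logits - teacher_logits.max()) / temp).T  # K x B
    q /= q.sum()
    k, b = q.shape
    for _ in range(iters):
        q /= q.sum(axis=1, keepdims=True)
        q /= k  # each prototype gets total mass 1/K
        q /= q.sum(axis=0, keepdims=True)
        q /= b  # each sample gets total mass 1/B
    return (q * b).T  # B x K, each row sums to 1

def dino_loss(student_logits, teacher_logits, student_temp=0.1):
    """Cross-entropy between centered teacher targets and student predictions."""
    targets = sinkhorn_knopp(teacher_logits)
    return -(targets * log_softmax(student_logits, student_temp)).sum(axis=1).mean()

def koleo(features, eps=1e-8):
    """Spreading regularizer: minus the mean log distance from each L2-normalized
    feature to its nearest neighbor in the batch (lower = more spread out)."""
    f = features / np.linalg.norm(features, axis=1, keepdims=True)
    d = np.linalg.norm(f[:, None, :] - f[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)  # ignore each point's distance to itself
    return -np.log(d.min(axis=1) + eps).mean()

rng = np.random.default_rng(0)
student = rng.normal(size=(8, 16))
teacher = rng.normal(size=(8, 16))
print("DINO-style loss:", dino_loss(student, teacher))

spread = np.eye(8, 16)  # orthogonal unit vectors: well spread
collapsed = np.ones((8, 16)) + 1e-3 * rng.normal(size=(8, 16))  # nearly identical
print("KoLeo spread vs collapsed:", koleo(spread), koleo(collapsed))
```

The centering step prevents the teacher from assigning every image to the same prototype, while the spreading term penalizes features that collapse onto each other; both target the collapse failure mode of self-distillation.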

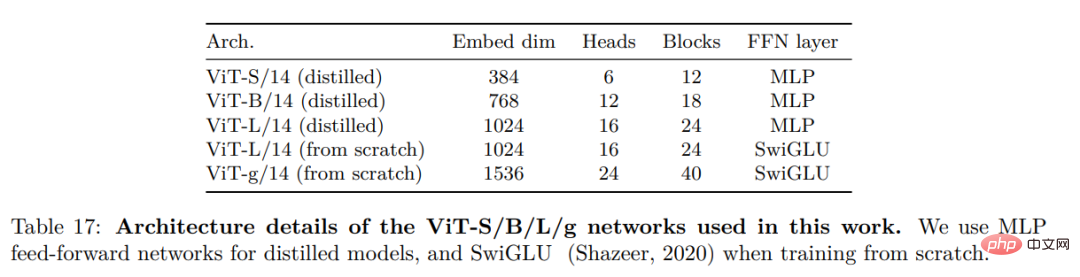

They consider several improvements for training models at a larger scale. The models are trained on A100 GPUs with PyTorch 2.0, and the released code can also be used with the pre-trained models for feature extraction. Model details are given in Appendix Table 17. On the same hardware, the DINOv2 code uses only about one-third of the memory and runs about 2x faster than the iBOT implementation.

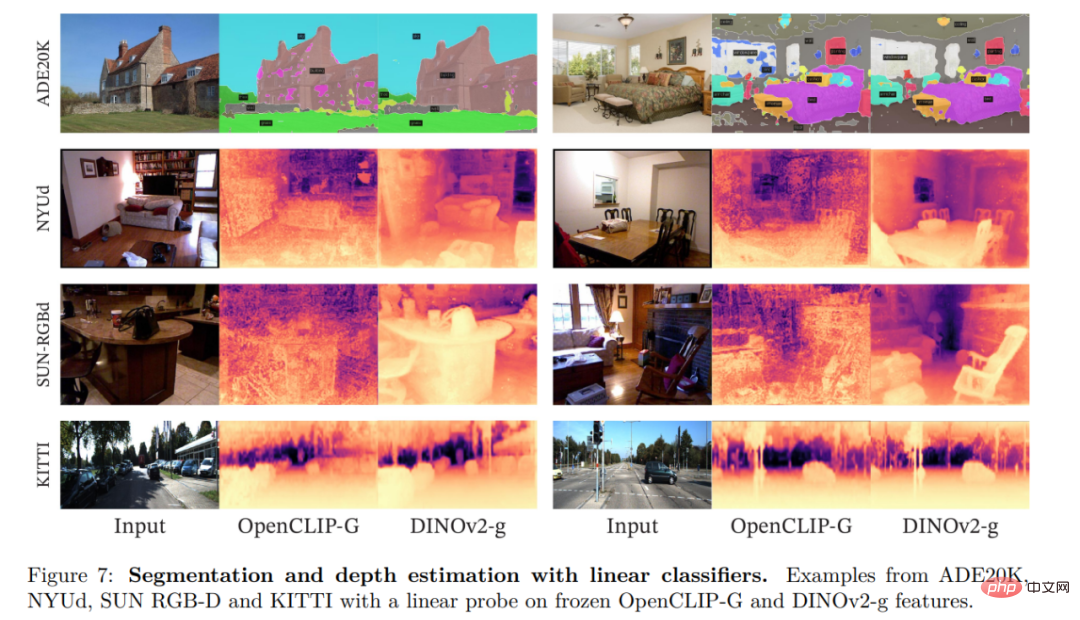

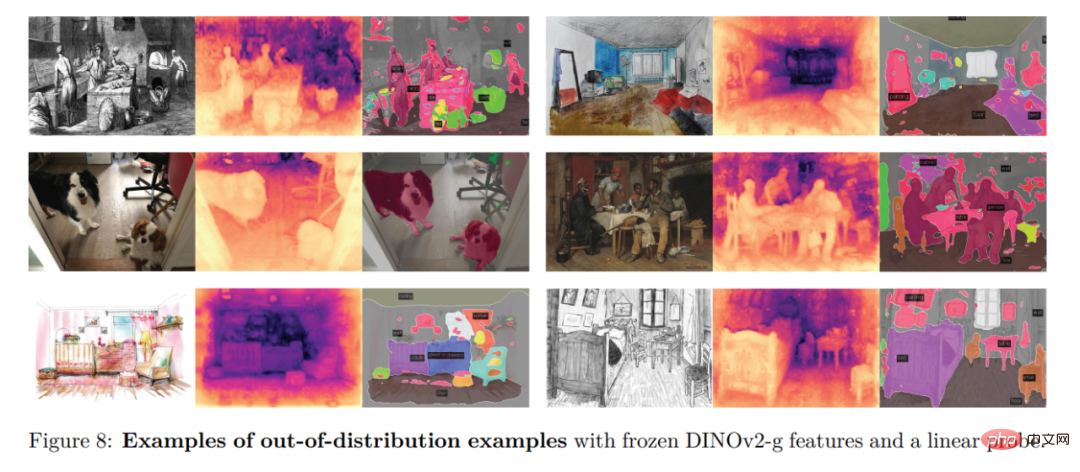

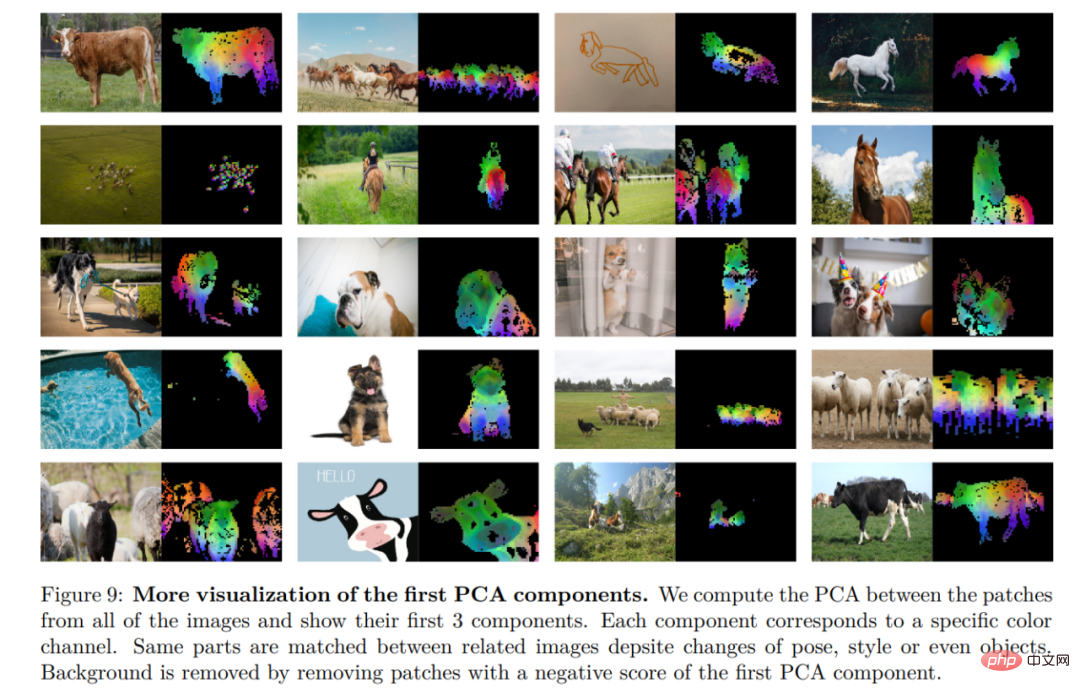

In this section, the researchers present an empirical evaluation of the new models on many image understanding tasks. They evaluate both global and local image representations on category-level and instance-level recognition, semantic segmentation, monocular depth prediction, and action recognition.
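Evaluations of frozen features like these are commonly run by training only a linear classifier on top of the features (a "linear probe"). A minimal sketch, assuming L2-normalized features, full-batch gradient descent on softmax cross-entropy, and synthetic data in place of real DINOv2 embeddings; `train_linear_probe` and `predict` are hypothetical helpers.

```python
import numpy as np

def l2_normalize(x):
    return x / np.linalg.norm(x, axis=1, keepdims=True)

def train_linear_probe(feats, labels, num_classes, lr=0.5, epochs=200):
    """Fit a softmax (multinomial logistic regression) classifier on frozen,
    L2-normalized features with full-batch gradient descent."""
    x = l2_normalize(feats)
    n, d = x.shape
    w = np.zeros((d, num_classes))
    b = np.zeros(num_classes)
    onehot = np.eye(num_classes)[labels]
    for _ in range(epochs):
        logits = x @ w + b
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        probs = np.exp(logits)
        probs /= probs.sum(axis=1, keepdims=True)
        grad = (probs - onehot) / n  # gradient of mean cross-entropy w.r.t. logits
        w -= lr * x.T @ grad
        b -= lr * grad.sum(axis=0)
    return w, b

def predict(feats, w, b):
    return (l2_normalize(feats) @ w + b).argmax(axis=1)

rng = np.random.default_rng(0)
centers = rng.normal(size=(3, 32)) * 3  # hypothetical class centers
train_y = rng.integers(0, 3, size=300)
train_x = centers[train_y] + rng.normal(size=(300, 32))
test_y = rng.integers(0, 3, size=60)
test_x = centers[test_y] + rng.normal(size=(60, 32))

w, b = train_linear_probe(train_x, train_y, num_classes=3)
acc = (predict(test_x, w, b) == test_y).mean()
print(f"linear-probe accuracy: {acc:.2f}")
```

Because only `w` and `b` are learned, a high probe accuracy indicates that the class structure is already linearly accessible in the frozen features.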

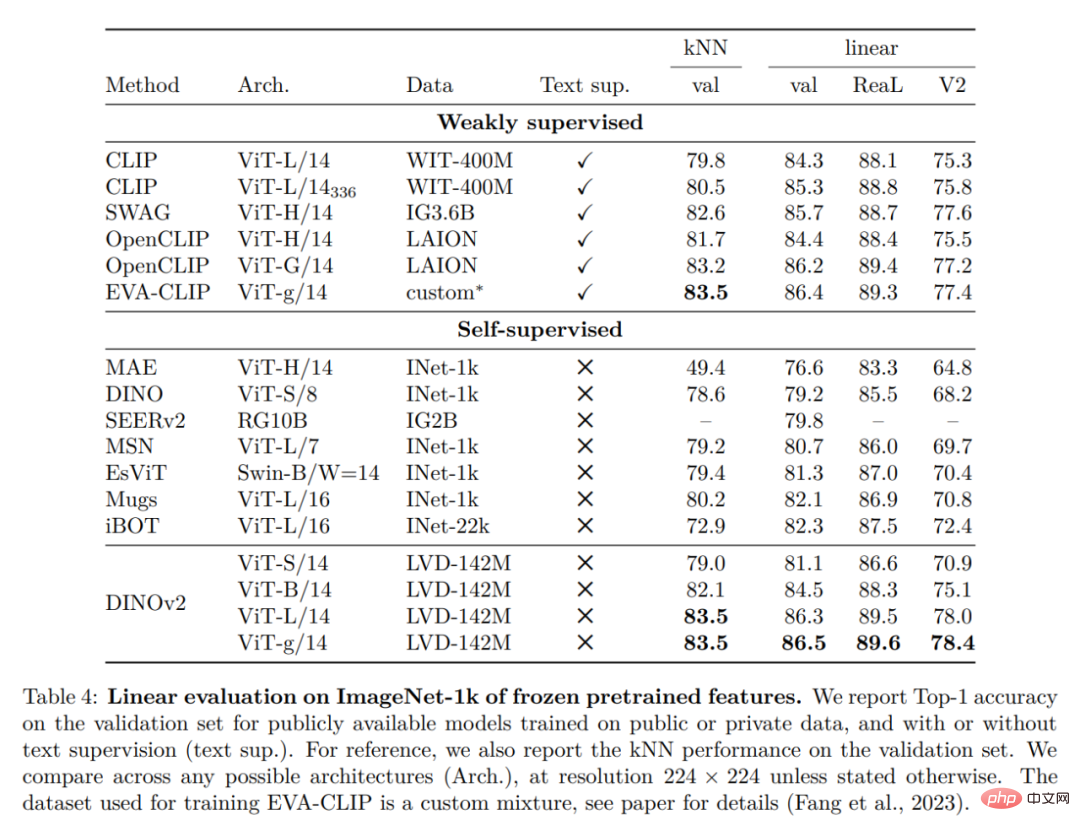

## ImageNet Classification

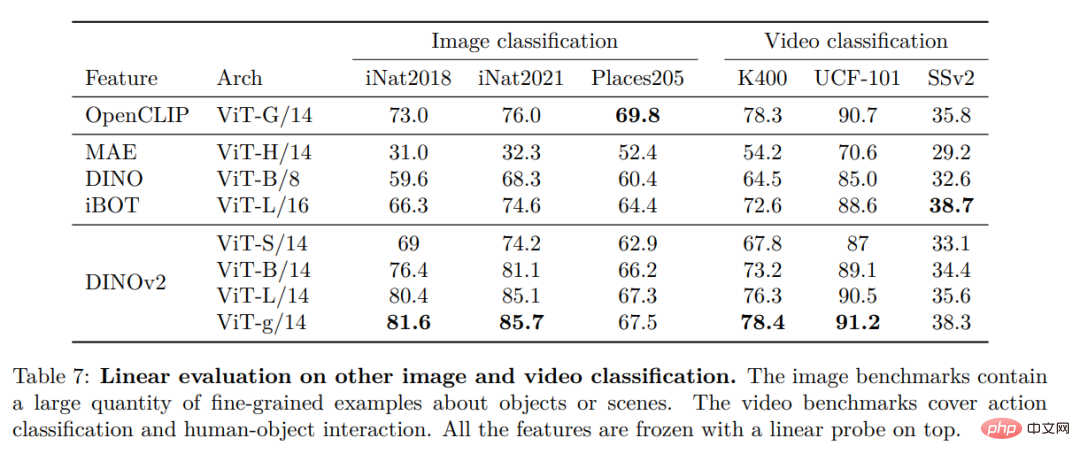

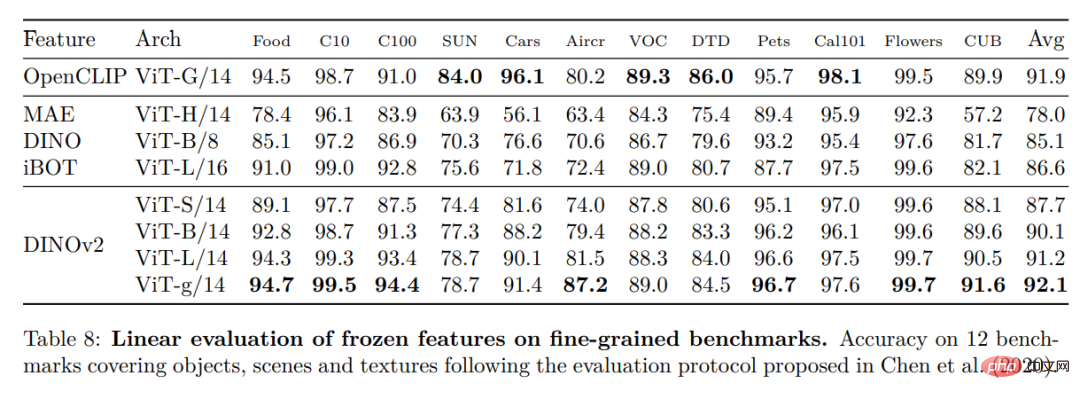

## Other Image and Video Classification Benchmarks

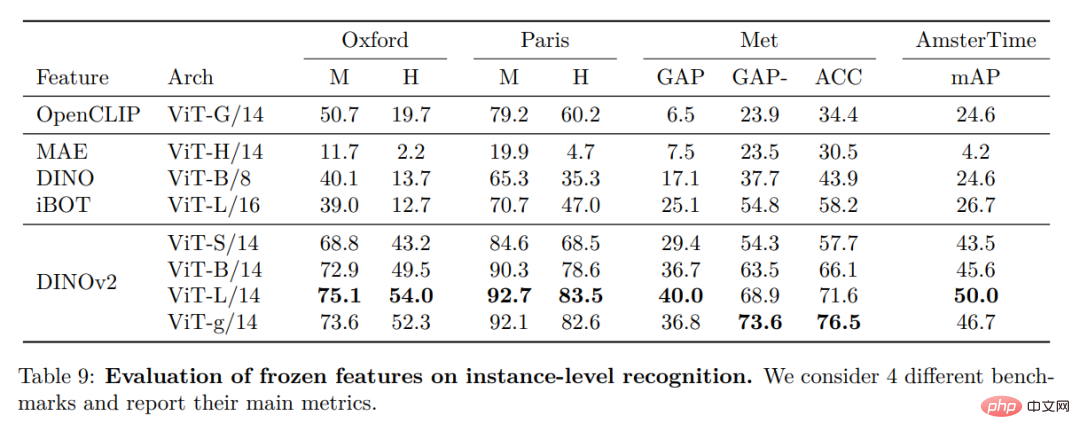

## Instance Recognition

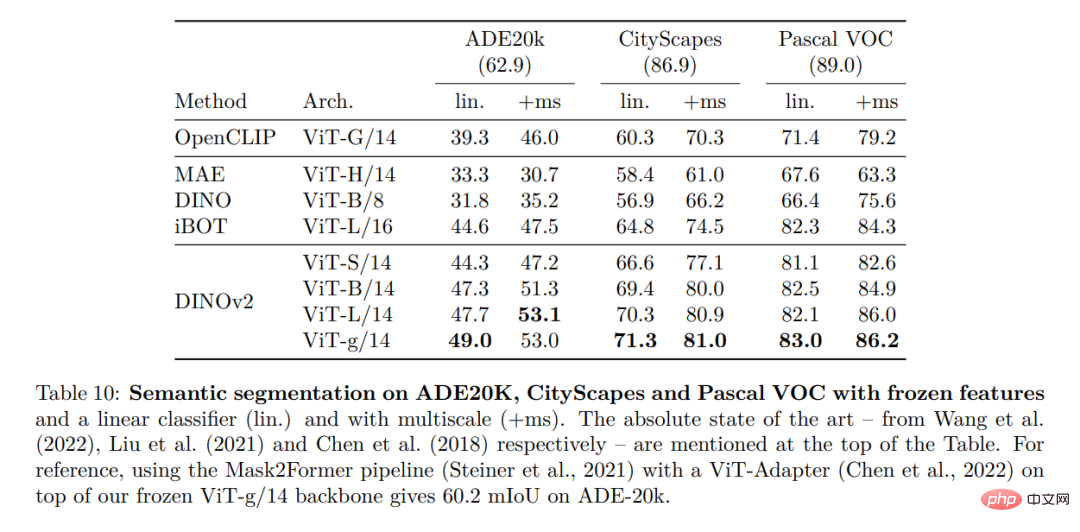

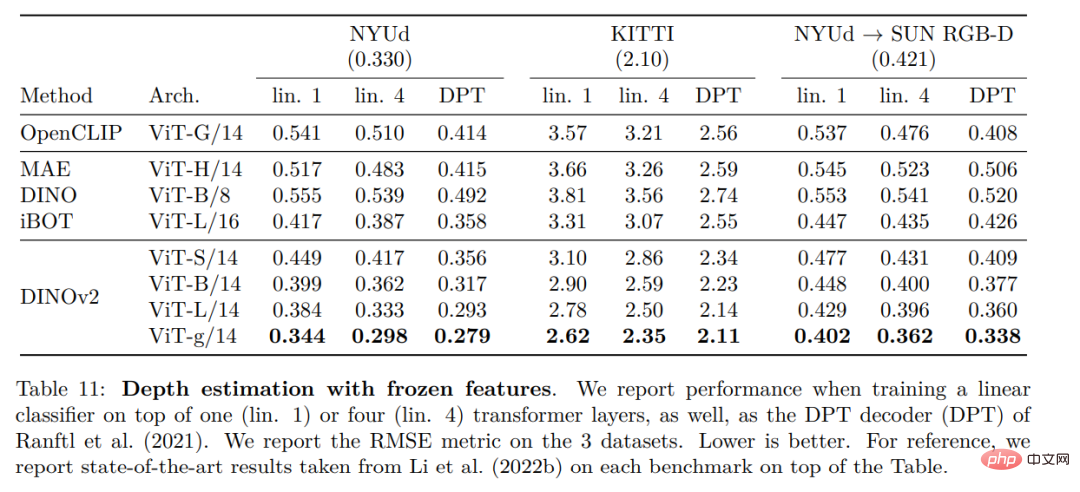

## Dense Recognition Tasks

## Qualitative Results
