In the era of intelligent driving, the automobile is being reshaped. Automotive software and hardware, internal vehicle architecture, the competitive landscape, and the distribution of value along the industrial chain will all change profoundly. We believe intelligent driving will pass through three successive stages: wider adoption of assisted driving, mature autonomous driving solutions, and a more complete autonomous driving ecosystem. These stages will bring three waves of opportunity, for hardware, software systems, and commercial operations respectively.

Among these elements, the HD map (high-definition map, also called a high-precision map below), a key factor in navigation and positioning, will also undergo major design changes. This is mainly reflected in the following aspects:

An ordinary navigation map provides the length of a road and the approximate road conditions along a journey. A high-precision map provides far more detail, such as road signs, slope, lane lines, and the exact position of each lane line, all of which are marked on the map. Even the location of an individual traffic light is recorded with high-precision GPS coordinates. Therefore, once global path planning has produced a route and that route has been converted into lane-level paths, an unmanned vehicle can follow the paths marked on the map and drive along the center line of each lane.
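As a rough illustration of converting a global route into lane-level paths, the sketch below stores each lane with its center line and stitches the center lines of the lanes on a route into one list of waypoints. The class names, the field names, and the use of a local metric frame are assumptions made for this example, not a real HD map format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (x, y) in metres in a local map frame (assumption)


@dataclass
class Lane:
    lane_id: str
    centerline: List[Point]  # ordered points along the lane centre
    successors: List[str] = field(default_factory=list)  # lanes reachable next


@dataclass
class HDMap:
    lanes: Dict[str, Lane]

    def lane_level_path(self, lane_ids: List[str]) -> List[Point]:
        """Turn a global route (a list of lane ids) into centre-line waypoints."""
        path: List[Point] = []
        for lane_id in lane_ids:
            for point in self.lanes[lane_id].centerline:
                # Skip a point that duplicates the end of the previous lane.
                if not path or path[-1] != point:
                    path.append(point)
        return path


# Two hypothetical lanes that join at (10, 0).
hd_map = HDMap(lanes={
    "a": Lane("a", [(0.0, 0.0), (10.0, 0.0)], successors=["b"]),
    "b": Lane("b", [(10.0, 0.0), (20.0, 5.0)]),
})
waypoints = hd_map.lane_level_path(["a", "b"])
print(waypoints)
```

A real map would also carry lane boundaries, signs, and traffic lights; only the center line is kept here because it is what the vehicle follows.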

The high-precision map is connected to the other modules of an unmanned vehicle. Positioning, prediction, perception, planning, safety, simulation, control, and human-computer interaction all benefit from it. It is not that these modules cannot work without a high-precision map, but with one they obtain more accurate information and can make decisions better suited to the traffic conditions at that moment. I will not go into the technical details here and will only explain the general ideas.

The main role of the high-precision map in positioning is to provide information about static objects whose positions are already known. The unmanned vehicle can then find its position relative to the whole map from these static objects. If the objects have high-precision latitude and longitude coordinates, the vehicle can work backward from them to its own latitude and longitude, which gives a positioning method based on the high-precision map and the fusion of lidar and camera data. This removes the dependence on GPS, whose data becomes very noisy when the signal is blocked. At this stage, this kind of sensor-fusion positioning is not as accurate as differential GPS, but it is still a usable method: without a GPS signal the vehicle has no position of its own and cannot drive, so it must rely on other positioning methods.
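The idea of working backward from known static objects to the vehicle's own position can be sketched as a 2D rigid alignment. The example assumes the landmarks have already been matched to map entries, that map coordinates are in a local metric frame rather than latitude and longitude, and that the sensors report landmark positions in the vehicle frame. The function name `estimate_pose` is hypothetical, and a production system would fuse this estimate with other sources.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def estimate_pose(map_pts: List[Point], observed_pts: List[Point]) -> Tuple[float, float, float]:
    """Least-squares vehicle pose (x, y, heading) such that
    map_point ~= rotate(observed_point, heading) + (x, y)."""
    n = len(map_pts)
    if n < 2 or n != len(observed_pts):
        raise ValueError("need at least two matched landmarks")

    # Centroids of both point sets.
    mcx = sum(p[0] for p in map_pts) / n
    mcy = sum(p[1] for p in map_pts) / n
    vcx = sum(p[0] for p in observed_pts) / n
    vcy = sum(p[1] for p in observed_pts) / n

    # Accumulate cross and dot products of the centred points.
    cross = 0.0
    dot = 0.0
    for (mx, my), (vx, vy) in zip(map_pts, observed_pts):
        mx, my = mx - mcx, my - mcy
        vx, vy = vx - vcx, vy - vcy
        cross += vx * my - vy * mx
        dot += vx * mx + vy * my

    heading = math.atan2(cross, dot)
    cos_h, sin_h = math.cos(heading), math.sin(heading)
    # Translation that maps the observed centroid onto the map centroid.
    x = mcx - (cos_h * vcx - sin_h * vcy)
    y = mcy - (sin_h * vcx + cos_h * vcy)
    return x, y, heading


# Three landmarks seen from a vehicle at (5, 2) heading 90 degrees.
pose = estimate_pose(
    map_pts=[(5.0, 12.0), (-5.0, 2.0), (-5.0, 12.0)],
    observed_pts=[(10.0, 0.0), (0.0, 10.0), (10.0, 10.0)],
)
print(pose)
```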

The relationship between the high-precision map and the decision-making module is even simpler. If the vehicle knows the route it will take, along with the road signs, traffic lights, and road information along that route, the decision-making module can make decisions that better match the road conditions. In the same way, if we know what is going to happen, we can adjust our current behavior in time to deal with it.
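A minimal sketch of this look-ahead, assuming map features have already been projected onto the planned route and are described by their distance along it. `MapFeature`, `next_feature`, and the horizon value are hypothetical names and numbers chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class MapFeature:
    kind: str          # e.g. "traffic_light" or "speed_limit"
    station_m: float   # distance along the planned route, in metres


def next_feature(features: List[MapFeature], vehicle_station_m: float,
                 kind: str, horizon_m: float) -> Optional[MapFeature]:
    """Return the nearest feature of the given kind within the look-ahead horizon."""
    ahead = [
        f for f in features
        if f.kind == kind and 0.0 <= f.station_m - vehicle_station_m <= horizon_m
    ]
    return min(ahead, key=lambda f: f.station_m, default=None)


route_features = [
    MapFeature("speed_limit", 60.0),
    MapFeature("traffic_light", 120.0),
    MapFeature("traffic_light", 400.0),
]
upcoming = next_feature(route_features, vehicle_station_m=50.0,
                        kind="traffic_light", horizon_m=150.0)
print(upcoming)
```

The decision module could start slowing down as soon as `upcoming` is not `None`, well before the camera can see the light.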

The relationship between the high-definition map and the simulation module is easier to understand. As long as we localize the vehicle or verify other algorithms on a map built to high-precision map standards, the information the vehicle obtains in real use is the same as the information we obtain in simulation. In other words, code built in the simulation environment can to a large extent be reused in the real environment.

The perception module is one of the more complex modules in driverless driving because it deals with so many real-world problems. In fact, much of the environment we perceive is static, and for that static part there is no need to spend extra computing power on things that can be stored in a database in advance. For example, a building at a given location is in the same place every time the vehicle drives past, and its position does not change with the perception method. A high-precision map collection vehicle can record the exact location of such objects, and the data can be stored on the unmanned vehicle's local hard drive. The vehicle then knows from this database that the building is there each time it passes, without having to recognize it again. As with the positioning module, if we know the building's high-precision coordinates, we can work backward to our own position. Moreover, because the building's shape and physical characteristics have been prepared in advance, computing power can be concentrated on identifying the dynamic objects around it.
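One simple way to picture this division of labor is to compare each detection with the static objects stored in the map and pass only the unmatched ones to the more expensive dynamic-object pipeline. The sketch assumes detections and map objects are 2D points in the same metric frame; the function name and the 1 m tolerance are arbitrary choices for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def split_static_dynamic(detections: List[Point], static_objects: List[Point],
                         tolerance_m: float = 1.0) -> Tuple[List[Point], List[Point]]:
    """Separate detections that coincide with known map objects from the rest."""
    static: List[Point] = []
    dynamic: List[Point] = []
    for det in detections:
        known = any(math.dist(det, obj) <= tolerance_m for obj in static_objects)
        (static if known else dynamic).append(det)
    return static, dynamic


map_buildings = [(10.0, 5.0)]
seen = [(10.2, 5.1), (3.0, 4.0)]
known_static, needs_tracking = split_static_dynamic(seen, map_buildings)
print(known_static, needs_tracking)
```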

Control involves a great deal of detail, and I am not very familiar with it. But for controlling the steering angle, the lane center line data provided by the high-precision map is essential. A camera can identify the lane lines and derive the center line from them, but the result is still not as accurate as the map data. Camera-based lane line recognition runs in real time and will occasionally make mistakes, and when lane lines have faded after long periods without maintenance, the camera cannot recognize them at all. In these cases a high-precision map is needed. Lane lines are also very important in human driving, which is why current camera-based autonomous driving is largely limited to highways: only there are the lane lines maintained well enough to be identified relatively easily. Lane lines in urban environments are less well maintained, so camera-based autonomous driving on urban roads is not yet advisable.
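To show how a map center line can feed steering control, here is a sketch of pure pursuit, a common geometric path-tracking method. It is used as an illustration only and is not claimed to be the controller the author has in mind. The sketch assumes the center line is ordered from near the vehicle onward, that the heading is measured counter-clockwise from the x axis, and that a positive result means steering left.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def pure_pursuit_steering(pose: Tuple[float, float, float], centerline: List[Point],
                          wheelbase_m: float, lookahead_m: float) -> float:
    """Steering angle (radians) that arcs the vehicle toward a look-ahead point."""
    x, y, heading = pose

    # Use the first centre-line point at least lookahead_m away,
    # falling back to the last point if the line is shorter than that.
    target = centerline[-1]
    for px, py in centerline:
        if math.hypot(px - x, py - y) >= lookahead_m:
            target = (px, py)
            break

    dx, dy = target[0] - x, target[1] - y
    distance = math.hypot(dx, dy)
    alpha = math.atan2(dy, dx) - heading  # bearing of the target relative to the heading
    return math.atan2(2.0 * wheelbase_m * math.sin(alpha), distance)


lane_center = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
on_center = pure_pursuit_steering((0.0, 0.0, 0.0), lane_center, wheelbase_m=2.7, lookahead_m=5.0)
right_of_center = pure_pursuit_steering((0.0, -1.0, 0.0), lane_center, wheelbase_m=2.7, lookahead_m=5.0)
print(on_center, right_of_center)
```

A vehicle already on the center line gets zero steering, while one that has drifted to the right gets a positive (leftward) correction.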

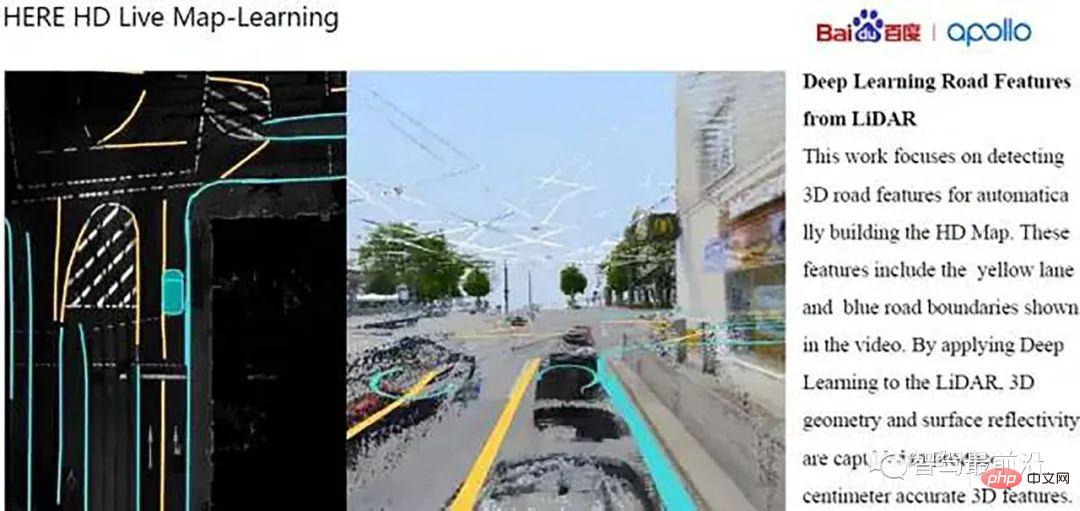

To be honest, I have not worked on map production myself and only heard about it from my teacher. HD maps start with vehicles equipped with various sensors sweeping the streets. After such a vehicle has scanned each street, it has the relevant point cloud data, camera data, and high-precision longitude and latitude data. Staff then edit this data offline. The work involves point cloud stitching and the road information recognized by cameras, such as lane lines, zebra crossings, and traffic lights; these static objects need to be confirmed and labeled by staff. The camera on the collection vehicle does perform preliminary feature recognition to extract these road features, but because it relies on computer vision, the extracted information is not 100% correct: some of it may be wrong, and some annotations may be poorly marked. The last step therefore still requires staff to make the final confirmation and labeling.

The iterative map update process described above can be applied to realize L4/L5 driverless functions and to generate the relevant robot control modes. It can also be applied to commercial vehicles, ultimately enabling driverless operation and even remote driving.

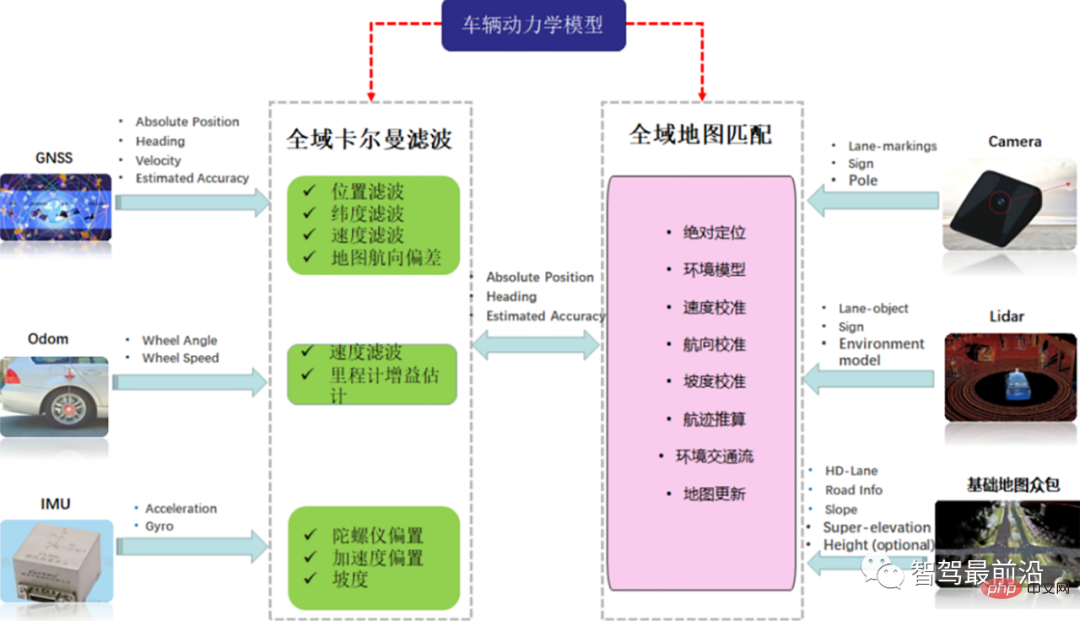

Obviously, to achieve precise positioning and keep improving its functional performance, a high-precision map system must continuously optimize its integrated positioning solution. This involves two main software algorithms. The first performs dynamic optimal estimation of the vehicle pose with a full-state extended Kalman filter. The second uses vision sensors to obtain semantic information about the road environment and derives a precise position through a map matching algorithm. Economy, fit, and overall performance also need to improve. One option is an industrial-grade vehicle-mounted RTK terminal: it uses a high-performance industrial-grade 32-bit processor with a built-in high-precision RTK board, establishes a channel with the Qianxun platform over 3G/4G/5G, sends GGA messages to the differential server, receives differential correction data in return, and then outputs the precise position over RS232.
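The GGA message mentioned above is a standard NMEA 0183 sentence carrying the receiver's position and fix quality. The sketch below validates the checksum and extracts a few fields. The helper names and the sample values are made up for illustration, and nothing here is specific to the Qianxun platform.

```python
from functools import reduce


def nmea_checksum(body: str) -> str:
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def _to_degrees(value: str, hemisphere: str) -> float:
    """Convert NMEA (d)ddmm.mmmm to signed decimal degrees."""
    dot = value.index(".")
    degrees = float(value[:dot - 2])
    minutes = float(value[dot - 2:])
    result = degrees + minutes / 60.0
    return -result if hemisphere in ("S", "W") else result


def parse_gga(sentence: str) -> dict:
    """Parse latitude, longitude, fix quality and altitude from a GGA sentence."""
    body, _, checksum = sentence.strip().lstrip("$").partition("*")
    if checksum.upper() != nmea_checksum(body):
        raise ValueError("bad NMEA checksum")
    fields = body.split(",")
    if not fields[0].endswith("GGA"):
        raise ValueError("not a GGA sentence")
    return {
        "lat": _to_degrees(fields[2], fields[3]),
        "lon": _to_degrees(fields[4], fields[5]),
        "fix_quality": int(fields[6]),  # 4 = RTK fixed, 5 = RTK float
        "altitude_m": float(fields[9]),
    }


# Build a sample sentence so that its checksum is computed, not hard-coded.
sample_body = "GPGGA,123519,4807.038,N,01131.000,E,4,08,0.9,545.4,M,46.9,M,,"
sample_sentence = f"${sample_body}*{nmea_checksum(sample_body)}"
fix = parse_gga(sample_sentence)
print(fix)
```

In a full positioning stack, a fix like this would be one measurement input to the extended Kalman filter described above.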

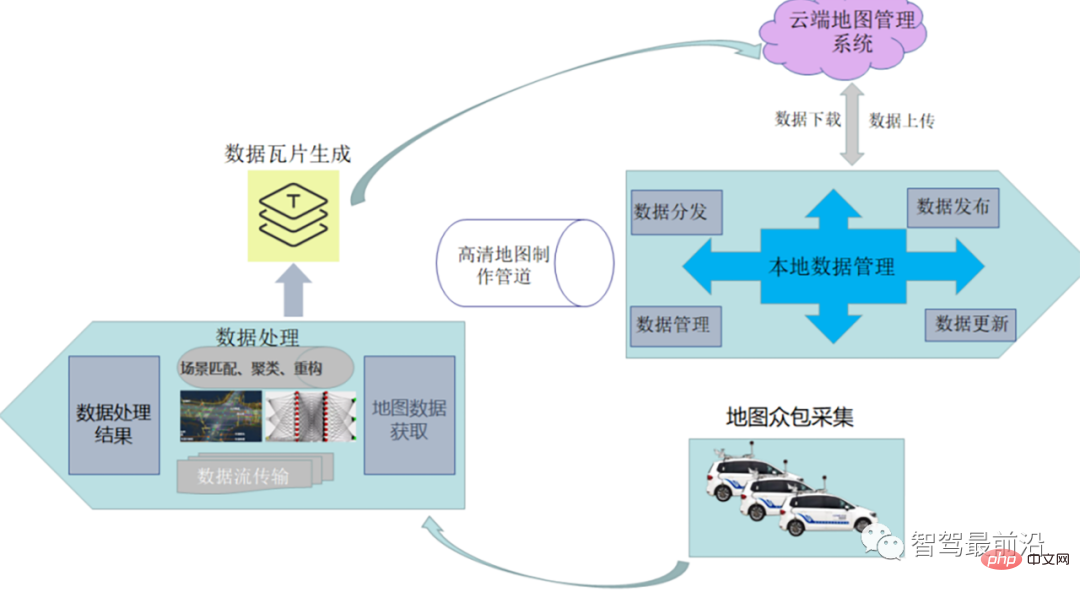

The most important process for high-precision maps is the crowdsourced collection and distribution of map data. Crowdsourced collection means that road data gathered by users, through the self-driving vehicle's own sensors or other low-cost sensor hardware, is transmitted to the cloud for fusion, and aggregating the data improves its accuracy until a high-precision map can be produced. The full crowdsourcing process includes physical sensor reporting, map scene matching, scene clustering, change detection, and updating.
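The aggregation and change-detection steps can be illustrated with a small sketch. It assumes the scene matching step has already assigned each report to a landmark id, takes a plain mean as the fused position, and flags a change when the fused position differs from the stored map by more than a threshold. All names and the 0.5 m threshold are hypothetical.

```python
import math
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Point = Tuple[float, float]


def aggregate_reports(reports: Iterable[Tuple[str, float, float]]) -> Dict[str, Point]:
    """Average all crowdsourced (landmark_id, x, y) reports per landmark."""
    grouped: Dict[str, List[Point]] = defaultdict(list)
    for landmark_id, x, y in reports:
        grouped[landmark_id].append((x, y))
    return {
        landmark_id: (mean(p[0] for p in pts), mean(p[1] for p in pts))
        for landmark_id, pts in grouped.items()
    }


def detect_changes(current_map: Dict[str, Point], aggregated: Dict[str, Point],
                   threshold_m: float = 0.5) -> List[str]:
    """List landmarks that are new or have moved by more than threshold_m."""
    return [
        landmark_id for landmark_id, pos in aggregated.items()
        if landmark_id not in current_map
        or math.dist(current_map[landmark_id], pos) > threshold_m
    ]


reports = [
    ("sign_1", 10.1, 5.0), ("sign_1", 9.9, 5.0),
    ("light_7", 3.0, 4.0), ("light_7", 3.0, 6.0),
]
stored_map = {"sign_1": (10.0, 5.0), "light_7": (3.0, 4.0)}
fused = aggregate_reports(reports)
changed = detect_changes(stored_map, fused)
print(fused, changed)
```

Averaging many noisy low-cost observations is what lets the aggregated position become more accurate than any single report.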

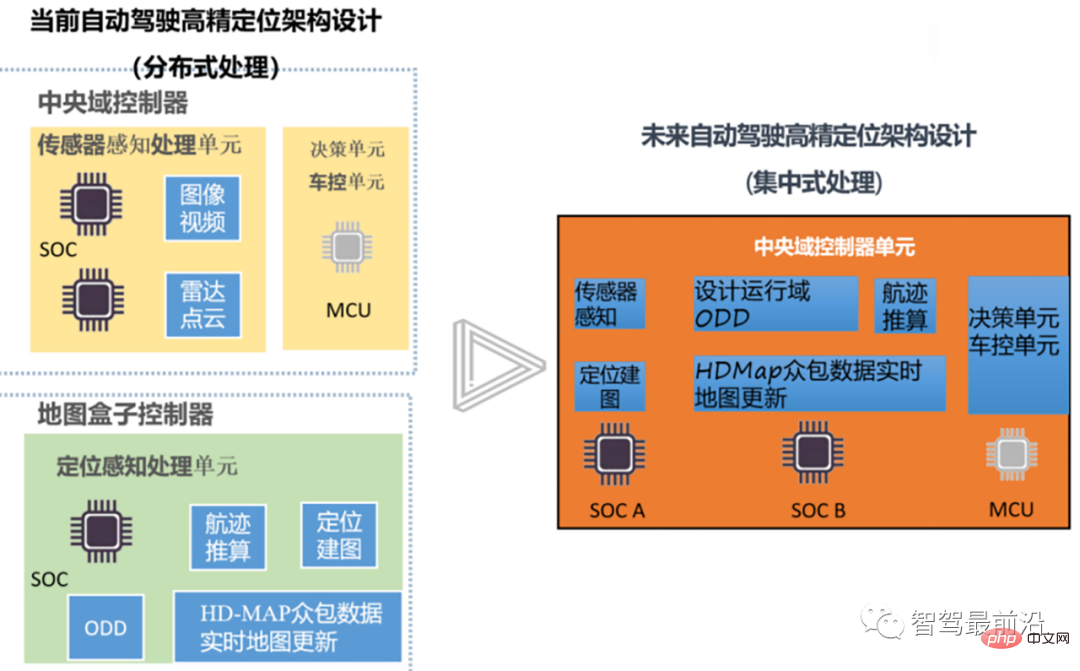

The current high-precision map architecture of autonomous driving systems is still distributed. Its key concerns include crowdsourced map collection, the map box's parsing of the raw high-precision map information, and how the map is fused with input data from other sensors. Note that future autonomous driving system architectures will continue to evolve from distributed to centralized. The move to a centralized approach can be seen as three steps:

The first step integrates the intelligent driving system (ADS) and the intelligent parking system (AVP) under fully centralized control, using a central pre-processing device to fuse, predict, and plan on the information from both systems. The processing of all sensing and data units related to smart driving and smart parking (high-precision maps, lidar, fully distributed cameras, millimeter-wave radar, and so on) is integrated into the central domain control unit accordingly.

The second step toward a fully centralized approach integrates all functions covered by the intelligent driving domain controller (such as autonomous driving and automatic parking) with all functions covered by the smart cockpit domain (including driver monitoring DMS, the audio-visual entertainment system iHU, and the instrument display system IP).

The third step is fully integrated control spanning the intelligent driving, intelligent cockpit, and intelligent chassis domains. The three main functions are integrated into the vehicle's central control unit, and the later processing of this data will place greater performance requirements (computing power, bandwidth, storage, and so on) on the domain controller.

The high-precision map positioning development we are concerned with here will lean more toward centralized design in the future, which we elaborate on below.

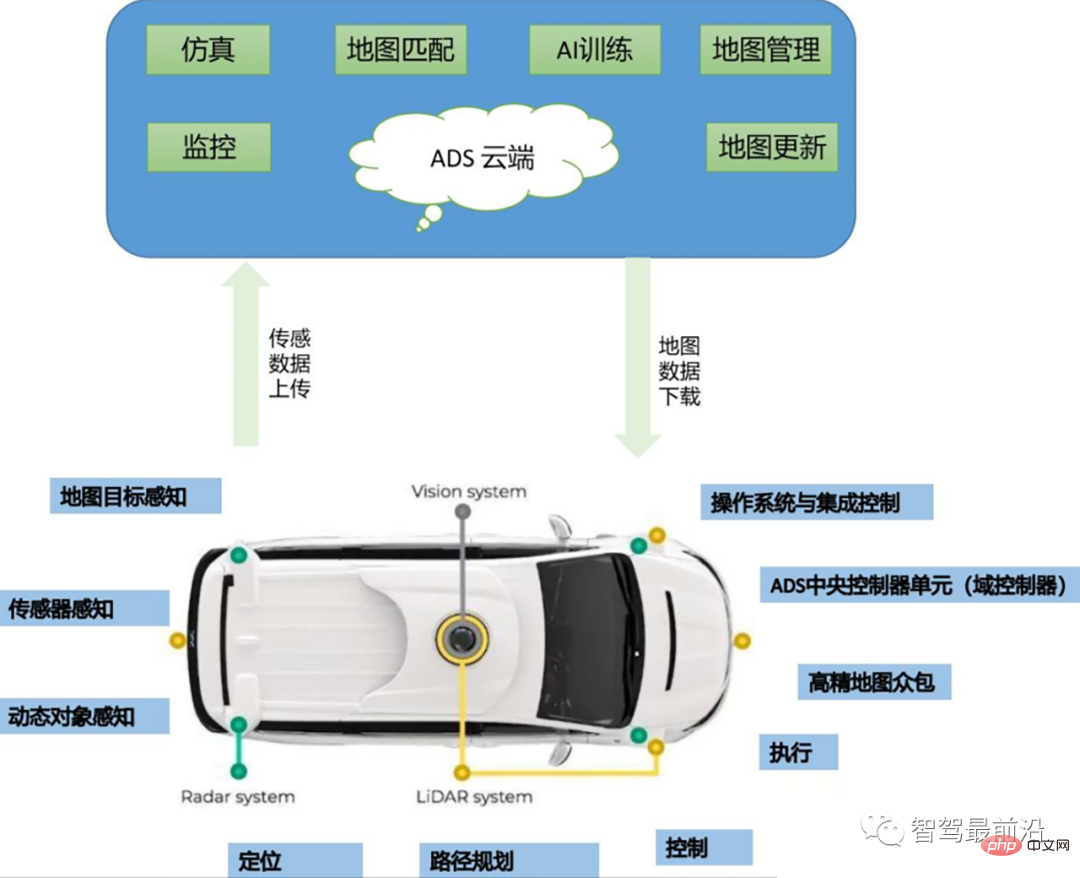

The figure above shows the architectural trend for high-precision maps in the control of future autonomous driving systems. Future systems will aim to integrate the sensing unit, decision-making unit, and map positioning unit into the central domain control unit, reducing the dependence on a separate high-precision map box from the bottom up. The domain controller design therefore has to account for the full integration of the AI computing chip (SoC), the logic computing chip (MCU), and the high-precision map box.

The figure above shows the high-precision map services under the overall cloud control logic: sensor data collection, data learning, AI training, map services, simulation, and others. At the same time, while the vehicle is moving and being verified, the map data is continuously updated through physical sensing, dynamic data sensing, map target sensing, positioning, path planning, and so on, and is uploaded to the cloud over OTA to update the overall crowdsourced data.

The previous article described how high-precision map data is turned into data that the autonomous driving controller can process. The raw data handled by the high-precision map is EHP data, which mainly contains the following:

1: External GPS location information;

2: Location information matched to the map;

3: Road network topology information;

4: Data sent over CAN;

5: Fused partial navigation data.

The data is generally sent from the HD map sensing end over Gigabit Ethernet directly to the high-precision map central processing unit, which we call the "high-precision map box". After further processing in the map box (we will explain this processing in detail in a subsequent article), it is converted into EHR data (in practice carried over CAN FD) that the autonomous driving controller can process.
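To give a feel for what fitting map output into a CAN FD frame involves, the sketch below packs a map-matched position into a compact binary payload and checks it against the 64-byte CAN FD payload limit. The field layout is entirely hypothetical and is not a real EHP or EHR wire format; it only illustrates fixed-point encoding into a small frame.

```python
import struct
from typing import Tuple

# Hypothetical layout, little-endian:
#   uint32 path id, int32 latitude * 1e7, int32 longitude * 1e7,
#   uint16 offset along the path in decimetres, uint8 lane index
POSITION_LAYOUT = struct.Struct("<IiiHB")
CAN_FD_MAX_PAYLOAD = 64  # bytes; classic CAN frames carry at most 8


def pack_position(path_id: int, lat_deg: float, lon_deg: float,
                  offset_m: float, lane_index: int) -> bytes:
    """Encode a map-matched position as fixed-point integers."""
    payload = POSITION_LAYOUT.pack(
        path_id,
        round(lat_deg * 1e7),
        round(lon_deg * 1e7),
        round(offset_m * 10),
        lane_index,
    )
    if len(payload) > CAN_FD_MAX_PAYLOAD:
        raise ValueError("payload does not fit in one CAN FD frame")
    return payload


def unpack_position(payload: bytes) -> Tuple[int, float, float, float, int]:
    """Decode the payload back into engineering units."""
    path_id, lat, lon, offset_dm, lane_index = POSITION_LAYOUT.unpack(payload)
    return path_id, lat / 1e7, lon / 1e7, offset_dm / 10, lane_index


frame_payload = pack_position(7, 48.1173, 11.5166667, 123.4, 2)
decoded = unpack_position(frame_payload)
print(len(frame_payload), decoded)
```

This layout needs 15 bytes, which is more than a classic CAN frame can carry and is one reason CAN FD or Ethernet is preferred for map data.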

For the next generation of autonomous driving systems, we are committed to integrating high-precision map information into the autonomous driving domain controller for overall processing. This means the domain controller must take over all the data parsing work now performed by the map box, so we need to focus on the following points:

1) Can the AI chip of the autonomous driving domain controller process all the sensor data required for a high-precision map?

2) Does the logical operation unit of the high-precision positioning map have enough computing power to perform sensor data information fusion?

3) Does the entire underlying operating system meet functional safety requirements?

4) Which connection should be used between the AI chip and the logic chip to ensure reliable data transmission: Ethernet or CAN FD?

In order to answer the above questions we need to analyze the way the controller processes high-precision map data as shown in the figure below.

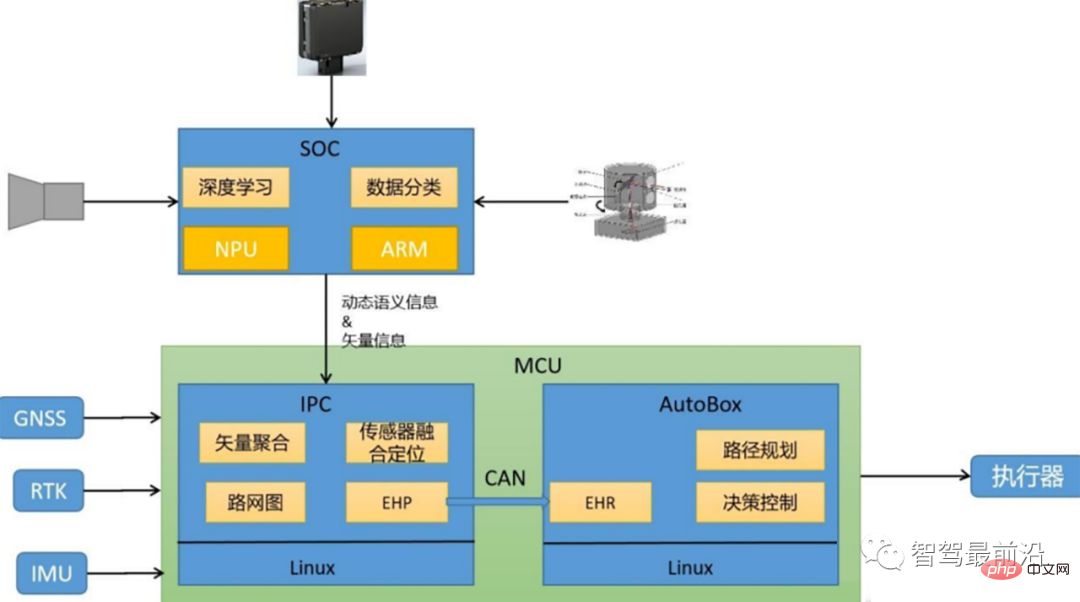

The SoC is the AI chip of the autonomous driving system. In future high-precision map data processing it will mainly handle the basic processing of sensor data, including camera, lidar, and millimeter-wave radar data. Besides basic point cloud fusion and clustering, the processing methods also include commonly used deep learning algorithms, and ARM cores are generally used for the central computation.

As the logical operation unit of the autonomous driving domain controller, the MCU will take over all the logical calculations previously performed by the high-precision map box. These include front-end vector aggregation, sensor fusion positioning, and building the road network map. Most importantly, it replaces the original map box function of converting EHP information into EHR signals (how the MCU can do this conversion effectively will be detailed in a later article) and transmits the signals over CAN lines. Finally, AutoBox, a logical operation unit, is used for path planning, decision-making control, and other operations.

Future autonomous driving will tend to move all data processing for high-precision maps from the original map box into the autonomous driving domain controller, aiming for true central processing integration with the vehicle domain controller as the integrated unit. This approach not only saves computing resources but also lets the AI data processing algorithms be applied more effectively to high-precision positioning, ensuring that the two share a consistent understanding of the environment. In the future we need to pay more attention to the integration of high-precision sensor data and put more effort into chip computing power, interface design, bandwidth design, and functional safety design.
