I recently finished a paper that I am very happy with. Not only was the whole process enjoyable and memorable, it also genuinely delivered "academic impact and industrial output." I believe this paper will change the paradigm of differentially private (DP) deep learning.

Because the experience was so full of coincidences (both in the process and in how neatly the conclusion fell out), I would like to share the complete path with fellow students: observation --> conception --> empirical evidence --> theory --> large-scale experiments. I will try to keep this article lightweight and avoid too many technical details.

Paper address: https://arxiv.org/abs/2206.07136

The order here differs from the order of presentation in the paper. A paper sometimes deliberately puts the conclusion first to attract readers, or introduces a simplified theorem first and leaves the complete theorem to the appendix. In this article I want to write down my experience in chronological order (that is, as a running account), including the detours I took and the surprises I ran into during the research, as a reference for students who have just started doing research.

1. Literature Reading

It all started with a paper from Stanford, which has since been accepted at ICLR:

Paper address: https://arxiv.org/abs/2110.05679

The paper is very well written. In summary, it makes three main contributions:

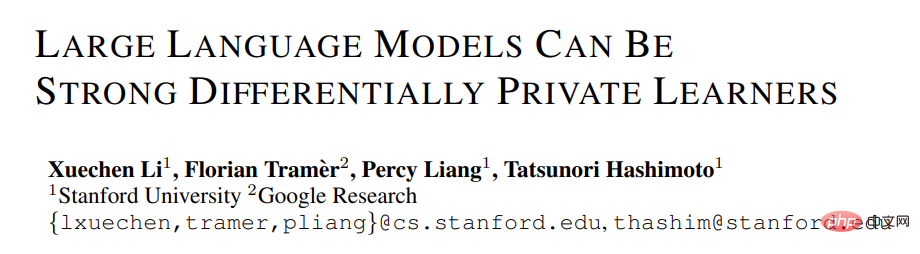

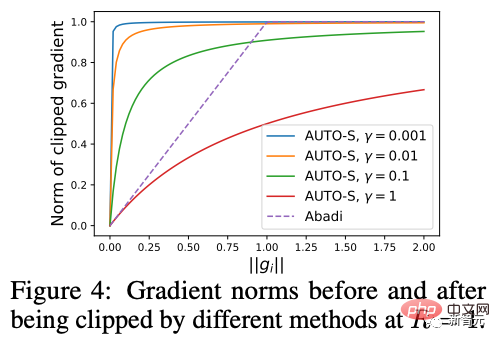

1. In NLP tasks, DP models reach very high accuracy, which encourages the use of privacy in language models. (In contrast, DP in CV causes a very large accuracy drop. For example, CIFAR10 currently reaches about 80% accuracy under DP without pre-training, but easily reaches 95% without DP; the best DP accuracy on ImageNet at the time was below 50%.)

2. For language models, the larger the model, the better the performance. For example, the improvement of GPT2 from 400 million to 800 million parameters is clear, and it sets many SOTAs. (In CV and recommendation systems, by contrast, larger models often perform very poorly under DP, sometimes close to random guessing. For example, the best DP accuracy on CIFAR10 was previously obtained by a four-layer CNN, not a ResNet.)

In NLP tasks, the larger the DP model, the better the performance [Xuechen et al. 2021]

3. The hyperparameters that reach SOTA are consistent across tasks: the clipping threshold must be small enough and the learning rate must be larger. (Previous papers all tuned a clipping threshold per task, which is time-consuming and laborious. No one had used a single clipping threshold such as 0.1 across all tasks and still gotten such good performance.)

The summary above is what I understood right after reading the paper. The content in parentheses is not from that paper; it comes from impressions built up over many earlier readings. Making these associations and comparisons quickly relies on long-term reading and a strong ability to generalize.

In fact, many students find it hard to start writing papers because they can only see the content of a single paper and cannot connect it to the other knowledge points of the field. On the one hand, students who are just starting out have not read enough and have not yet mastered enough of these knowledge points. This is especially true for students who have long been handed projects by their advisors and have never proposed one independently. On the other hand, some students read enough but do not summarize regularly, so the information never condenses into knowledge, or the knowledge never gets connected.

Here is the background knowledge of DP deep learning. I will skip the definition of DP for now and it will not affect reading.

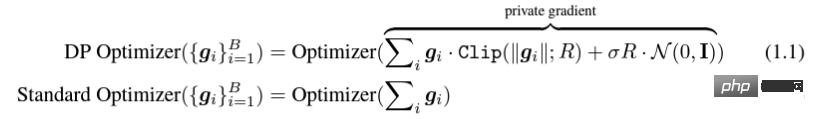

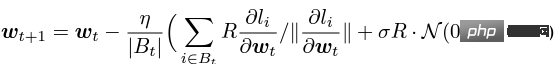

From an algorithmic point of view, DP deep learning just means two extra steps: per-sample gradient clipping and Gaussian noise addition. In other words, as long as you process the gradient with these two steps (the processed gradient is called the private gradient), you can use any optimizer you like, including SGD and Adam.

As for how private the final algorithm is, it is a question in another sub-field, called privacy accounting theory. This field is relatively mature and requires a strong theoretical foundation. Since this article focuses on optimization, it will not be mentioned here.

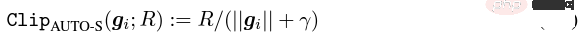

g_i is the gradient of a data point (per-sample gradient), R is the clipping threshold, and sigma is the noise multiplier.

Clip is called the clipping function, just like regular gradient clipping: if a gradient's norm is larger than R it is scaled down to norm R, and if it is smaller than R it is left unchanged.

For example, the DP version of SGD used in all current papers takes its clipping function from the pioneering work on private deep learning (Abadi, Martin, et al. "Deep learning with differential privacy."), which is why it is also known as Abadi's clipping:

$$\mathrm{Clip}(g_i) = \min\left(\frac{R}{\|g_i\|}, 1\right).$$

But this particular form is not necessary. Going back to first principles and starting from privacy accounting theory, the clipping function only needs to ensure that the norm of Clip(g_i)*g_i is at most R. In other words, Abadi's clipping is just one function that satisfies this condition, and by no means the only one.
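The two steps and the norm condition can be sketched in a few lines of NumPy. This is a minimal illustration, not the code of any DP library: it assumes the private gradient is the sum of scaled per-sample gradients plus Gaussian noise with standard deviation sigma * R, and it uses the standard form of Abadi's clipping, min(1, R / ||g_i||). The function names `abadi_factor` and `private_gradient` are made up for this example.

```python
import numpy as np

def abadi_factor(per_sample_grads, R):
    """Per-sample scaling factor min(1, R / ||g_i||) (Abadi's clipping)."""
    norms = np.linalg.norm(per_sample_grads, axis=1)
    # Guard against division by zero for an all-zero gradient.
    return np.minimum(1.0, R / np.maximum(norms, 1e-12))

def private_gradient(per_sample_grads, R, sigma, clip_fn=abadi_factor, rng=None):
    """Assumed form: sum_i Clip(g_i) * g_i + sigma * R * N(0, I)."""
    rng = np.random.default_rng() if rng is None else rng
    factors = clip_fn(per_sample_grads, R)
    clipped = factors[:, None] * per_sample_grads
    noise = sigma * R * rng.standard_normal(per_sample_grads.shape[1])
    return clipped.sum(axis=0) + noise, clipped

# Toy batch: 8 per-sample gradients of dimension 5 with very different norms.
rng = np.random.default_rng(0)
grads = rng.standard_normal((8, 5)) * rng.uniform(0.1, 10.0, size=(8, 1))
R = 1.0
noisy_sum, clipped = private_gradient(grads, R, sigma=1.0, rng=rng)

# The only property privacy accounting needs: every scaled gradient has norm <= R.
clipped_norms = np.linalg.norm(clipped, axis=1)
```

Any other `clip_fn` whose output keeps `clipped_norms` at or below R could be passed in instead, which is exactly the freedom the paragraph above points out.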

A paper has many highlights, but not all of them are useful to me. I have to judge which contribution matters most based on my own needs and expertise.

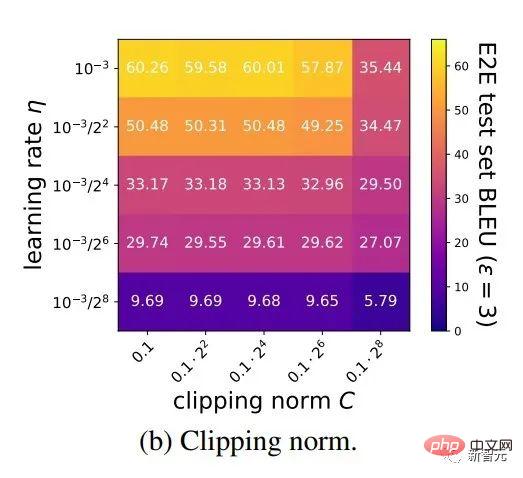

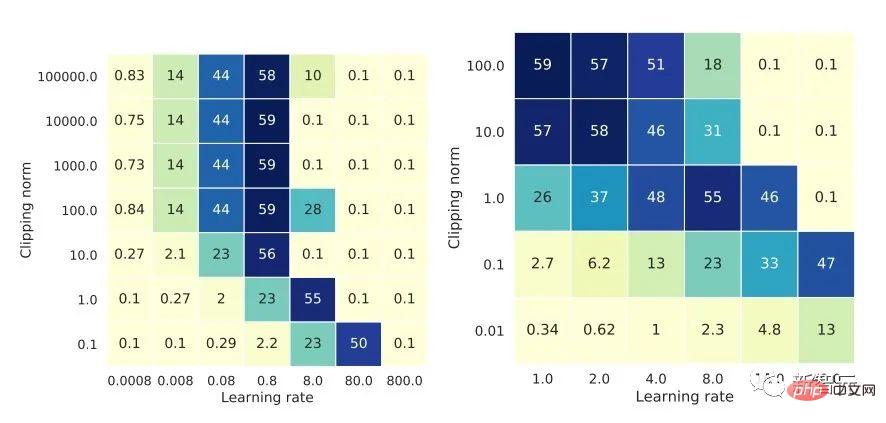

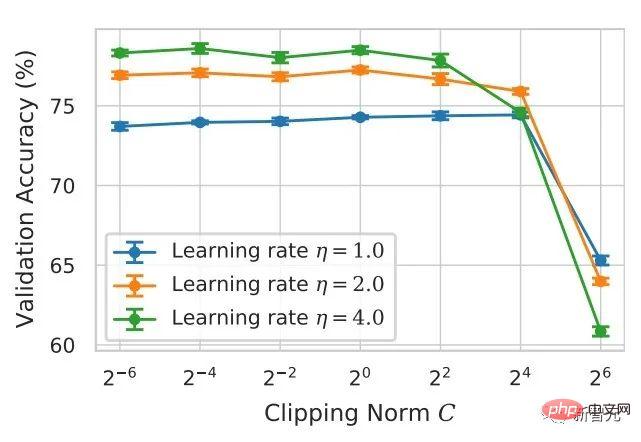

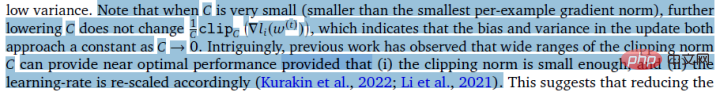

The first two contributions of that paper are very empirical and hard to dig into. The last one is very interesting. I looked carefully at the hyperparameter ablation study and noticed something the original authors had not: when the clipping threshold is small enough, the clipping threshold (the clipping norm C in their notation, which is the same variable as R in the formula above) has no effect at all.

Reading down the columns, C = 0.1, 0.4, 1.6 makes no difference for DP-Adam [Xuechen et al. 2021].

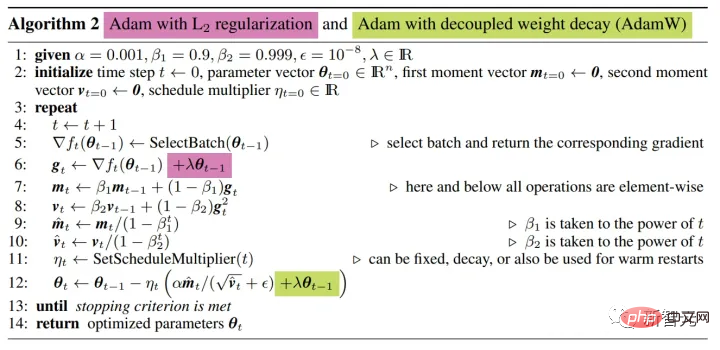

This caught my interest, and I felt there must be some principle behind it. So I wrote out by hand the DP-Adam they used to see why. It turns out to be very simple:

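If R is small enough, clipping is actually equivalent to normalization! Substituting into the private gradient (1.1), R can be factored out of both the clipping part and the noise part:

$$\sum_i \min\left(\frac{R}{\|g_i\|}, 1\right) g_i + \sigma R \cdot \mathcal{N}(0, I) = R \left( \sum_i \frac{g_i}{\|g_i\|} + \sigma \cdot \mathcal{N}(0, I) \right) \quad \text{when } R \le \min_i \|g_i\|.$$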

Moreover, the form of Adam makes R appear in both the gradient (the numerator) and the adaptive step size (the denominator). Once the two cancel, R is gone, and there is the idea!

Both m and v depend on the gradient, and replacing them with private gradients results in DP-AdamW.

This simple substitution proves my first theorem: in DP-AdamW, all sufficiently small clipping thresholds are equivalent to each other, so R needs no tuning.
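The cancellation can be checked numerically. The sketch below is a toy verification under stated assumptions, not the experiment from either paper: it uses a hand-written Adam with eps = 0 (a real Adam has a small positive eps, so the equivalence is then only approximate), reuses the same noise draws for both values of R, and picks toy gradients whose norms are all far above R. The helper names `adam_updates` and `private_grads` are hypothetical.

```python
import numpy as np

def adam_updates(grads_seq, lr=1e-3, beta1=0.9, beta2=0.999, eps=0.0):
    """Run Adam on a sequence of gradients and return the per-step updates."""
    m = np.zeros_like(grads_seq[0])
    v = np.zeros_like(grads_seq[0])
    updates = []
    for t, g in enumerate(grads_seq, start=1):
        m = beta1 * m + (1 - beta1) * g          # first moment, scales like R
        v = beta2 * v + (1 - beta2) * g ** 2     # second moment, scales like R^2
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        updates.append(-lr * m_hat / (np.sqrt(v_hat) + eps))
    return np.array(updates)

def private_grads(per_sample_seq, noise_seq, R, sigma):
    """Abadi-clipped sum plus noise: sum_i min(1, R/||g_i||) g_i + sigma * R * z."""
    out = []
    for G, z in zip(per_sample_seq, noise_seq):
        norms = np.linalg.norm(G, axis=1, keepdims=True)
        out.append((np.minimum(1.0, R / norms) * G).sum(axis=0) + sigma * R * z)
    return out

rng = np.random.default_rng(0)
steps, batch, dim = 5, 16, 4
# Shifted by 3.0 so that every per-sample norm is well above both values of R.
per_sample_seq = [rng.standard_normal((batch, dim)) + 3.0 for _ in range(steps)]
noise_seq = [rng.standard_normal(dim) for _ in range(steps)]

updates_small = adam_updates(private_grads(per_sample_seq, noise_seq, R=0.1, sigma=1.0))
updates_large = adam_updates(private_grads(per_sample_seq, noise_seq, R=0.4, sigma=1.0))
same = np.allclose(updates_small, updates_large)
```

With every gradient clipped, the private gradient for R = 0.4 is exactly 4 times the one for R = 0.1, so m grows by 4, the square root of v grows by 4, and the ratio that Adam actually applies is unchanged.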

This is without doubt a succinct and interesting observation, but on its own it is not significant enough, so I needed to think about what practical use it has.

In practice, it means DP training needs far less hyperparameter tuning: if the learning rate and R are each tuned over 5 values (as in the figure above), 25 combinations must be tested to find the best hyperparameters. Now only the 5 learning rates need to be tried, so tuning becomes 5 times cheaper. This addresses a real pain point for industry.

The motivation is strong enough and the mathematics concise enough: a good idea had begun to take shape.

If the result held only for Adam, the work would still be too limited, so I quickly extended it to AdamW and other adaptive optimizers such as AdaGrad. In fact, for all adaptive optimizers one can prove that the clipping threshold cancels out, so it needs no tuning, which makes the theorem much richer.

There is another interesting detail here. As is well known, Adam with weight decay is different from AdamW, which uses decoupled weight decay. An ICLR paper was published on this difference.

Adam has two ways to add weight decay.

This difference also exists in DP optimizers. Take Adam again: with decoupled weight decay, scaling R does not change the strength of the weight decay, but with ordinary (coupled) weight decay, doubling R is equivalent to halving the weight decay.
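A small numerical check makes the two cases concrete. This is a sketch under simplifying assumptions: the private gradient is written directly as R * g_hat (the small-R regime, with g_hat standing for the normalized sum plus noise), the g_hat vectors are fixed random draws rather than gradients of a real loss, and Adam uses eps = 0. The function `run_adam` is hypothetical.

```python
import numpy as np

def run_adam(g_hats, R, weight_decay, decoupled,
             lr=0.1, beta1=0.9, beta2=0.999, eps=0.0):
    """Run Adam from w = 1 on private gradients R * g_hat and return the final w."""
    w = np.ones_like(g_hats[0])
    m = np.zeros_like(w)
    v = np.zeros_like(w)
    for t, g_hat in enumerate(g_hats, start=1):
        g = R * g_hat
        if not decoupled:
            g = g + weight_decay * w            # coupled (L2) decay enters m and v
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g ** 2
        step = (m / (1 - beta1 ** t)) / (np.sqrt(v / (1 - beta2 ** t)) + eps)
        if decoupled:
            step = step + weight_decay * w      # decoupled decay bypasses m and v
        w = w - lr * step
    return w

rng = np.random.default_rng(0)
g_hats = [rng.standard_normal(4) for _ in range(5)]

# Coupled decay: doubling R matches halving the weight decay.
coupled_equiv = np.allclose(
    run_adam(g_hats, R=0.2, weight_decay=0.1, decoupled=False),
    run_adam(g_hats, R=0.1, weight_decay=0.05, decoupled=False),
)
# Decoupled decay: changing R alone leaves the trajectory unchanged.
decoupled_equiv = np.allclose(
    run_adam(g_hats, R=0.2, weight_decay=0.1, decoupled=True),
    run_adam(g_hats, R=0.1, weight_decay=0.1, decoupled=True),
)
```

In the coupled case the gradient fed to Adam is R * g_hat + lambda * w, which is R times (g_hat + (lambda / R) * w); Adam ignores the overall factor R, so only the ratio lambda / R matters.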

Sharp-eyed students may have noticed that I keep emphasizing adaptive optimizers. Why not talk about SGD? The answer is that right after I had written up the theory for DP adaptive optimizers, Google published a paper on DP-SGD in CV that also included an ablation study. However, the pattern was completely different from the one found for Adam, and it left me with the impression of a diagonal.

For DP-SGD, when R is small enough, increasing lr by 10 times is equivalent to increasing R by 10 times [https://arxiv.org/abs/2201.12328].

I was very excited when I saw this article, because it was another paper that proved the effectiveness of small clipping threshold.

In the scientific world, there are often hidden patterns behind consecutive coincidences.

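After a simple substitution, I found that SGD is even easier to analyze than Adam. Writing w_t for the parameters and η for the learning rate, when R is small enough the DP-SGD update (1.3) can be approximated as:

$$w_{t+1} = w_t - \eta \left( \sum_i \min\left(\frac{R}{\|g_i\|}, 1\right) g_i + \sigma R \cdot \mathcal{N}(0, I) \right) \approx w_t - \eta R \left( \sum_i \frac{g_i}{\|g_i\|} + \sigma \cdot \mathcal{N}(0, I) \right).$$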

Clearly R can be factored out again and merged with the learning rate, which proves Google's observation theoretically.

「Specifically, when the clipping norm is decreased k times, the learning rate should be increased k times to maintain similar accuracy.」

It's a pity that Google only observed the phenomenon and did not turn it into theory. There is another coincidence here: in the figure above they plotted the ablation study at two scales. The diagonal pattern is visible only at the left scale; looking at the right one alone, no conclusion can be drawn...

Since there is no adaptive step size, SGD does not ignore R the way Adam does. Instead it treats R as part of the learning rate, so R again needs no separate tuning: the learning rate has to be tuned anyway, and R is tuned along with it.
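The diagonal pattern can be reproduced on a toy batch. The sketch below assumes the DP-SGD step is -lr times (sum of Abadi-clipped gradients plus sigma * R times noise) and reuses the same noise vector for both settings; `dp_sgd_update` is a hypothetical helper, and the toy gradients are chosen so that every norm is far above both values of R.

```python
import numpy as np

def dp_sgd_update(per_sample_grads, noise, lr, R, sigma):
    """One DP-SGD step: -lr * (sum of Abadi-clipped gradients + sigma * R * noise)."""
    norms = np.linalg.norm(per_sample_grads, axis=1, keepdims=True)
    clipped = np.minimum(1.0, R / norms) * per_sample_grads
    return -lr * (clipped.sum(axis=0) + sigma * R * noise)

rng = np.random.default_rng(0)
grads = rng.standard_normal((16, 4)) + 3.0   # all norms well above R
noise = rng.standard_normal(4)

# R is 10 times smaller in the first setting and lr is 10 times larger,
# so the product lr * R is 0.01 in both.
update_a = dp_sgd_update(grads, noise, lr=1.0, R=0.01, sigma=1.0)
update_b = dp_sgd_update(grads, noise, lr=0.1, R=0.1, sigma=1.0)
same_update = np.allclose(update_a, update_b)
```

Only the product lr * R enters the update in this regime, which is why a grid over (lr, R) shows equal accuracy along its diagonals.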

Extending the SGD theory to momentum then covers all the optimizers supported by PyTorch.

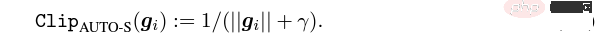

That is one innovation, but strictly speaking Abadi's clipping is only approximately a normalization, not exactly one, so there is no way to analyze convergence conclusively.

Following the principle of Doraemon's Iron Man Corps, I directly adopted normalization as a new per-sample gradient clipping function, replacing the Abadi clipping that the entire field had used for 6 years. This is my second innovation.

By the proof above, the new clipping strictly does not need R, so it is called automatic clipping (AUTO-V; V for vanilla).

Since its form differs from Abadi's clipping, the accuracy will differ too, and my clipping might turn out to be worse.

So I needed to write code to test the new method, which takes only a one-line change (after all, it is just $g_i / \|g_i\|$).

In fact, there are three main clipping functions in DP per-sample gradient clipping. Besides Abadi's clipping, the other two were proposed by me: one is global clipping, and the other is this automatic clipping. From my previous work I already knew how to change the clipping in the popular libraries. I put the modification method in the appendix at the end of the article.

In my tests I found that, in the Stanford paper's GPT2 experiments, every per-sample gradient was clipped in every iteration of training. In other words, at least in this experiment, Abadi's clipping is completely equivalent to automatic clipping. Although later experiments did fall short of SOTA, this already showed that my new method has real value: a clipping function with no clipping threshold to tune, sometimes without sacrificing accuracy.

The Stanford paper has two major types of language model experiments: generation tasks with GPT2 as the model, and classification tasks with RoBERTa as the model. Although automatic clipping is equivalent to Abadi's clipping on the generation tasks, its accuracy on the classification tasks was always a few points worse.

Because of my own academic habits, I would not switch datasets at this point and publish only the experiments where we win, let alone add tricks (such as data augmentation or heavily modified models). I wanted a completely fair comparison that isolates per-sample gradient clipping, and the best possible result with no padding.

In discussions with my collaborators, we realized that, compared with Abadi's clipping, pure normalization completely discards the gradient magnitude information. That is, with automatic clipping every gradient has norm R after clipping regardless of its original size, whereas Abadi's clipping retains the magnitude information for gradients smaller than R.

Based on this idea, we made a small but extremely clever change, called AUTO-S clipping (S stands for stable):

$$\mathrm{Clip}_{\text{AUTO-S}}(g_i) = \frac{R}{\|g_i\| + \gamma}.$$

After merging R into the learning rate, it becomes

$$\frac{1}{\|g_i\| + \gamma}.$$

This small γ (generally set to 0.01, though any other positive number works; it is very robust) retains the gradient magnitude information:
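To see what the stability constant does, the sketch below compares the two automatic clipping factors on a handful of gradient norms. It assumes, as in the paper, that AUTO-V scales each gradient by 1 / ||g_i|| and AUTO-S by 1 / (||g_i|| + γ), with R already merged into the learning rate; the function names are made up for this example.

```python
import numpy as np

def auto_v_factor(norms):
    """AUTO-V: pure normalization, 1 / ||g_i||."""
    return 1.0 / norms

def auto_s_factor(norms, gamma=0.01):
    """AUTO-S: 1 / (||g_i|| + gamma), with a small stability constant gamma."""
    return 1.0 / (norms + gamma)

# Per-sample gradient norms spanning four orders of magnitude.
norms = np.array([0.001, 0.01, 0.1, 1.0, 10.0])

# Norm of each gradient after scaling.
auto_v_norms = auto_v_factor(norms) * norms   # all identical: magnitude is lost
auto_s_norms = auto_s_factor(norms) * norms   # increasing in ||g_i||, always below 1
```

Under AUTO-V every scaled gradient has the same norm, while under AUTO-S the scaled norm is ||g_i|| / (||g_i|| + γ): tiny gradients stay small (a gradient whose norm equals γ ends up with norm 1/2) and large gradients approach 1. The scaled norm never exceeds 1, so the condition required by privacy accounting still holds.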

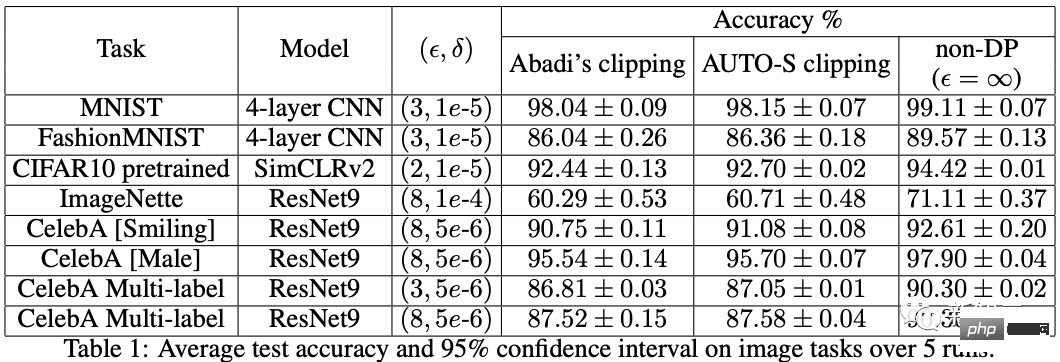

With this algorithm, again changing only one line and re-running the Stanford code, you get SOTA on six NLP datasets.

On the E2E generation task, AUTO-S surpasses all other clipping functions, and the same holds on the SST2/MNLI/QNLI/QQP classification tasks.

One limitation of the Stanford paper is that it focuses only on NLP. Then came another coincidence: two months after Google set a new DP SOTA on ImageNet, DeepMind, a subsidiary of Google, released a paper on how DP can shine in CV, raising the ImageNet accuracy directly from 48% to 84%!

Paper address: https://arxiv.org/abs/2204.13650

In this article, I first looked at the choice of optimizer and clipping threshold until I turned to this picture in the appendix:

DP-SGD’s SOTA on ImageNet also requires the clipping threshold to be small enough.

Once again, a small clipping threshold works best! With three high-quality papers supporting automatic clipping, I already had strong motivation, and I became more and more convinced that my work would stand out.

Coincidentally, the DeepMind paper is also purely experimental, with no theory, which is why they came close to realizing, but never quite realized, that in theory R is not needed. In fact, they came really close to my idea: they had even discovered that R can be factored out and merged with the learning rate (interested students can look at their formulas (2) and (3)). But the inertia of Abadi's clipping was too great... Even though they had worked out the pattern, they did not go any further.

DeepMind also found that small clipping threshold works best, but did not understand why.

Inspired by this new work, I started running CV experiments so that my algorithm could be used by all DP researchers, rather than having one set of methods for NLP and another for CV.

A good algorithm should be universal and easy to use. Facts have also proved that automatic clipping can also achieve SOTA on CV data sets.

Looking across all the papers above, SOTA has improved significantly and the engineering results are abundant, but the theory is completely blank.

By the time I finished all the experiments, the contribution of this work already exceeded what a top conference requires: I greatly simplified the hyperparameter effects that small clipping thresholds empirically produce in DP-SGD and DP-Adam; proposed a new clipping function that sacrifices neither computational efficiency nor privacy and needs no tuning; used the small γ to repair the loss of gradient magnitude information caused by Abadi's clipping and normalization; and reached SOTA accuracy in extensive NLP and CV experiments.

I was still not satisfied. An optimizer without theoretical support cannot make a substantial contribution to deep learning. Dozens of new optimizers are proposed every year, and nearly all of them are discarded the following year. Only a few are officially supported by PyTorch and actually used in industry.

For this reason, my collaborators and I spent an additional two months doing automatic DP-SGD convergence analysis. The process was difficult but the final proof was simplified to the extreme. The conclusion is also very simple: the impact of batch size, learning rate, model size, sample size and other variables on convergence is quantitatively expressed, and it is consistent with all known DP training behaviors.

Specifically, we proved that although DP-SGD converges more slowly than standard SGD, as the number of iterations tends to infinity the two convergence rates are of the same order. This gives confidence in private computation: the DP model does converge, albeit later.

At last, the paper I had been writing for 7 months was finished. Unexpectedly, the coincidences did not stop there. We submitted to NeurIPS in May, finished the internal revisions on June 14, and released the paper on arXiv. Then, on June 27, I saw that Microsoft Research Asia (MSRA) had published a paper that collided with ours. The clipping they proposed was exactly the same as our automatic clipping:

Their clipping is exactly the same as our AUTO-S.

On a closer look, even the convergence proof is almost the same. Yet our two groups have no connection with each other. You could say a coincidence was born across the Pacific Ocean.

Let me briefly describe the differences between the two papers. The other paper is more theoretical: for example, it additionally analyzes the convergence of Abadi's DP-SGD (I only proved convergence for automatic clipping, which their paper calls DP-NSGD; maybe I just do not know how to handle DP-SGD), and the assumptions it uses are somewhat different. Our experiments are more numerous and larger (more than a dozen datasets), and we establish more explicitly the equivalence between Abadi's clipping and normalization, for example in Theorems 1 and 2, which explain why R needs no tuning.

Since this is concurrent work, I am very happy that others arrived at the same view, so that the two papers can complement each other and jointly promote this algorithm, and the whole community can trust the result and benefit from it sooner. Of course, selfishly, I also remind myself to move faster on the next paper!

Looking back on how this paper came about: as a starting point, solid fundamentals are a prerequisite, and another important prerequisite is that I had always kept the pain of hyperparameter tuning in mind. After a long drought, reading the right paper is like finding rain. As for the process, the core is the habit of turning observations into mathematical theory. In this work the ability to implement code was not the most important thing; I will write another column about a different, hard-core coding project. The final convergence analysis relied on my collaborators and on my own persistence. Fortunately, a good meal is worth the wait, so keep going!
