The human brain has roughly 100 billion neurons, each with about 8,000 synapses, and its complex structure inspires artificial intelligence research.

Currently, most deep learning models are built on artificial neural networks, an architecture inspired by the neurons of the biological brain.

Generative AI has exploded in popularity, and deep learning algorithms are increasingly capable of generating, summarizing, translating and classifying text.

However, these language models still cannot match human language capabilities.

Predictive coding theory provides a preliminary explanation for this difference:

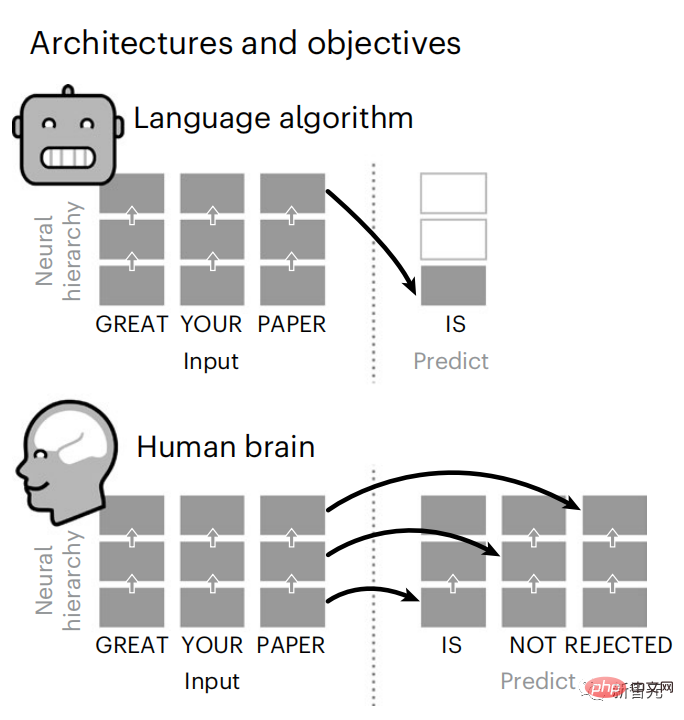

While language models predict nearby words, the human brain constantly predicts a hierarchy of representations spanning multiple time scales.

To test this hypothesis, scientists at Meta AI analyzed the fMRI brain signals of 304 people as they listened to short stories.

The study concludes that hierarchical predictive coding plays a crucial role in language processing.

The research also illustrates how synergies between neuroscience and artificial intelligence can reveal the computational basis of human cognition.

The work has been published in Nature Human Behaviour, a Nature sub-journal.

Paper address: //m.sbmmt.com/link/7eab47bf3a57db8e440e5a788467c37f

It is worth mentioning that GPT-2 was used in the experiments. Perhaps this research can inspire the models that OpenAI has not open-sourced.

Wouldn’t ChatGPT be even stronger by then?

Brain Predictive Coding Hierarchy

In less than three years, deep learning has made significant progress in text generation and translation, thanks to a well-trained algorithm that predicts words from nearby context.

Notably, the activations of these models have been shown to map linearly onto brain responses to speech and text.

Furthermore, this mapping depends primarily on the algorithms' ability to predict future words, suggesting that this objective is sufficient for them to converge to brain-like computations.

However, a gap still exists between these algorithms and the brain: despite large amounts of training data, current language models struggle with generating long stories, summarizing, holding coherent conversations and retrieving information.

This is because the algorithms fail to capture some syntactic structures and semantic properties, and their understanding of language remains superficial.

For example, the algorithms tend to incorrectly assign verbs to subjects in nested phrases such as:

"the keys that the man holds ARE here"

Similarly, when text generation is optimized only for next-word prediction, deep language models can produce bland, incoherent sequences or get stuck in endlessly repeating loops.

Predictive coding theory offers a potential explanation for these shortcomings:

Although deep language models are mainly trained to predict the next word, this framework suggests that the human brain makes predictions at multiple time scales and at multiple levels of cortical representation.

Previous research has demonstrated speech prediction in the brain: how predictable a word or phoneme is correlates with signals recorded by functional magnetic resonance imaging (fMRI), electroencephalography, magnetoencephalography and electrocorticography.

A model trained to predict the next word or phoneme can have its output reduced to a single number, the probability of the next symbol.

However, the nature and time scale of these predictive representations are largely unknown.

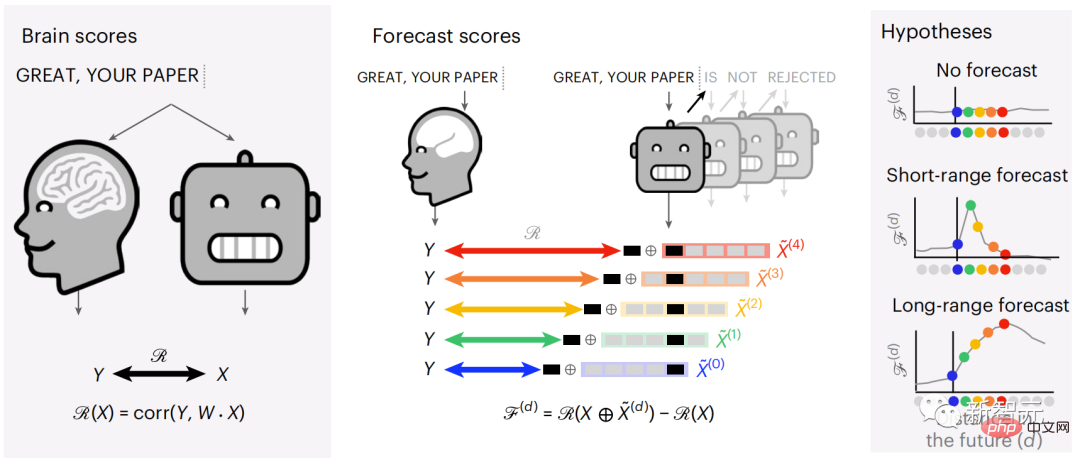

In this study, the researchers collected fMRI signals (Y) from 304 people, each of whom listened to short stories for about 26 minutes, and fed the same content to a language algorithm to obtain its activations (X).

The similarity between X and Y is then quantified by the "brain score", that is, the Pearson correlation coefficient (R) after the best linear mapping W.

To test whether adding representations of predicted words improves this correlation, the network's activations (black rectangle X) are concatenated with a prediction window (colored rectangle ~X), and PCA is used to reduce the dimensionality of the prediction window to that of X.

Finally, F quantifies the gain in brain score obtained by augmenting the language algorithm's activations with this prediction window. This analysis is repeated with windows at different distances (d).

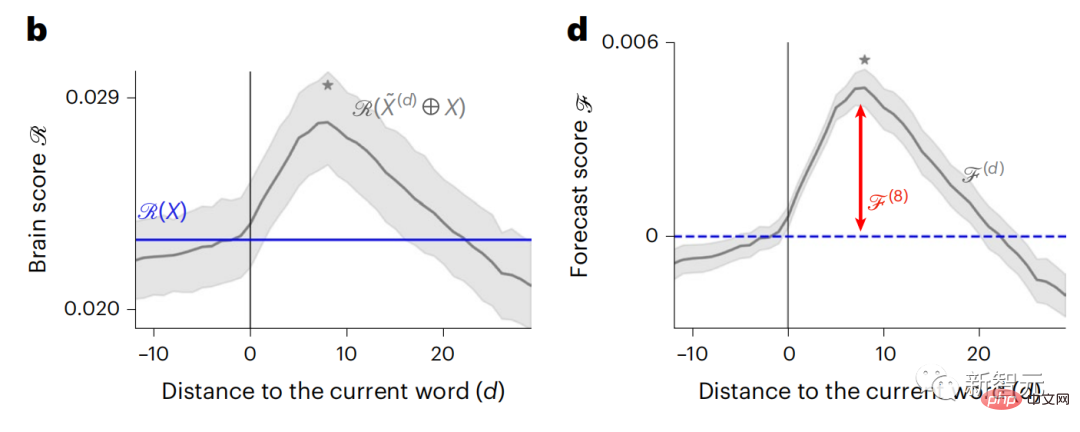

The researchers found that this brain mapping could be improved by augmenting the algorithms with predictions spanning multiple time scales, namely long-range predictions and hierarchical predictions.

Finally, the results show that these predictions are hierarchically organized: the frontal cortex predicts higher-level, longer-range and more contextual representations than the temporal cortex.

Deep language model maps to brain activity

Researchers quantitatively studied the similarity between deep language models and the brain when the input content is the same.

Using the Narratives dataset, the fMRI (functional magnetic resonance imaging) of 304 people who listened to short stories was analyzed.

For each voxel and each participant, an independent linear ridge regression was fitted to predict the fMRI signal from the activations of several deep language models.

Using held-out data, the corresponding "brain score" was then computed, that is, the correlation between the fMRI signal and the ridge regression prediction obtained from the specified language model's activations for the same stimulus.
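The brain score described above can be sketched in a few lines. This is only an illustration on synthetic data, not the study's actual pipeline: the function name `brain_score`, the regularization strength `alpha` and the closed-form ridge solution are assumptions made for the example.

```python
import numpy as np

def brain_score(X_train, Y_train, X_test, Y_test, alpha=1.0):
    """Fit a ridge map from activations X to fMRI signals Y and return
    the per-voxel Pearson correlation on held-out data.
    (Hypothetical helper; alpha is an assumed hyperparameter.)"""
    # Closed-form ridge solution: W = (X^T X + alpha * I)^-1 X^T Y
    n_features = X_train.shape[1]
    gram = X_train.T @ X_train + alpha * np.eye(n_features)
    W = np.linalg.solve(gram, X_train.T @ Y_train)
    Y_pred = X_test @ W
    # Pearson correlation, computed column by column (one column per voxel)
    pred_c = Y_pred - Y_pred.mean(axis=0)
    true_c = Y_test - Y_test.mean(axis=0)
    denom = np.sqrt((pred_c ** 2).sum(axis=0) * (true_c ** 2).sum(axis=0))
    return (pred_c * true_c).sum(axis=0) / denom

# Synthetic stand-ins: 200 time samples, 5 activation features, 3 voxels
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
W_true = rng.standard_normal((5, 3))
Y = X @ W_true + 0.1 * rng.standard_normal((200, 3))

# Train on the first 150 samples, score on the 50 held-out samples
scores = brain_score(X[:150], Y[:150], X[150:], Y[150:])
```

Each entry of `scores` plays the role of one voxel's brain score. In the study the regression is fitted separately for every voxel and participant; here the voxels are handled as columns of one matrix, which gives the same per-voxel fit when they share one `alpha`.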

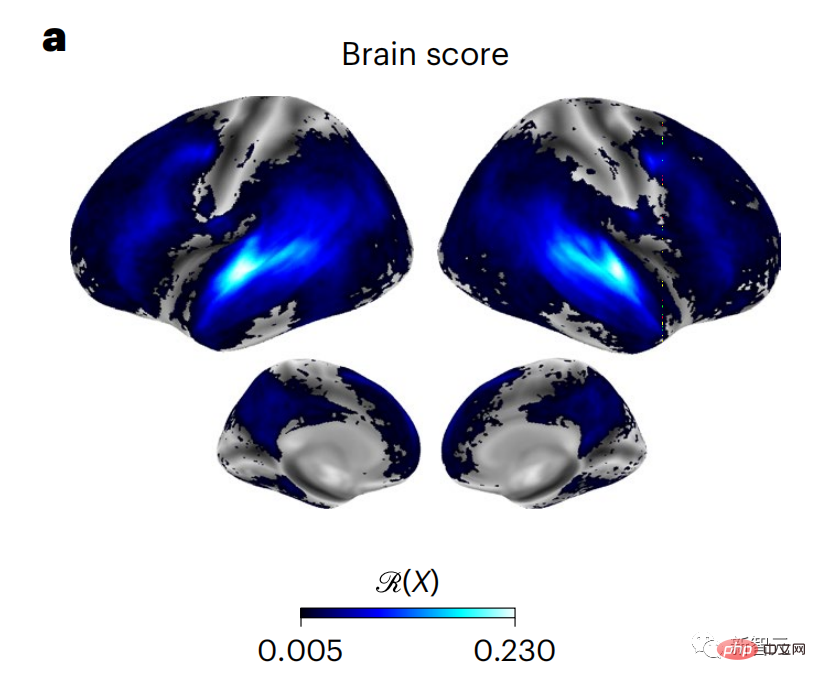

For clarity, the analysis first focuses on the activations of the eighth layer of GPT-2, a 12-layer causal deep neural network provided by HuggingFace, because this layer is the most predictive of brain activity.

Consistent with previous studies, GPT-2 activations accurately mapped onto a distributed set of bilateral brain regions, with brain scores peaking in the auditory cortex and in the anterior and superior temporal regions.

The Meta team then tested whether augmenting the language model's activations with long-range predictions leads to higher brain scores.

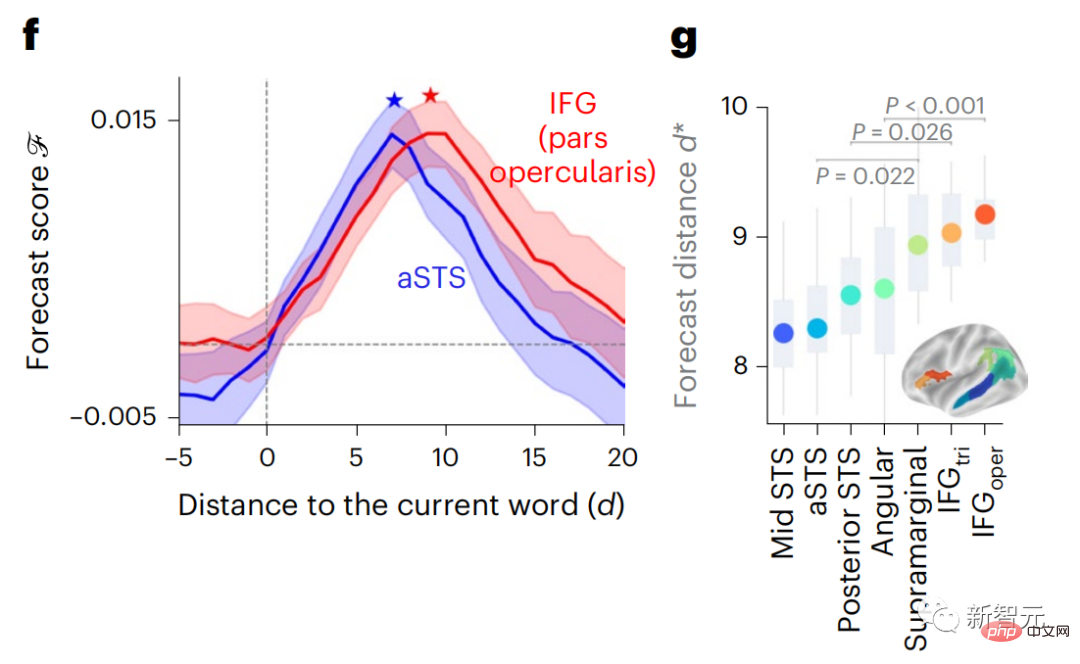

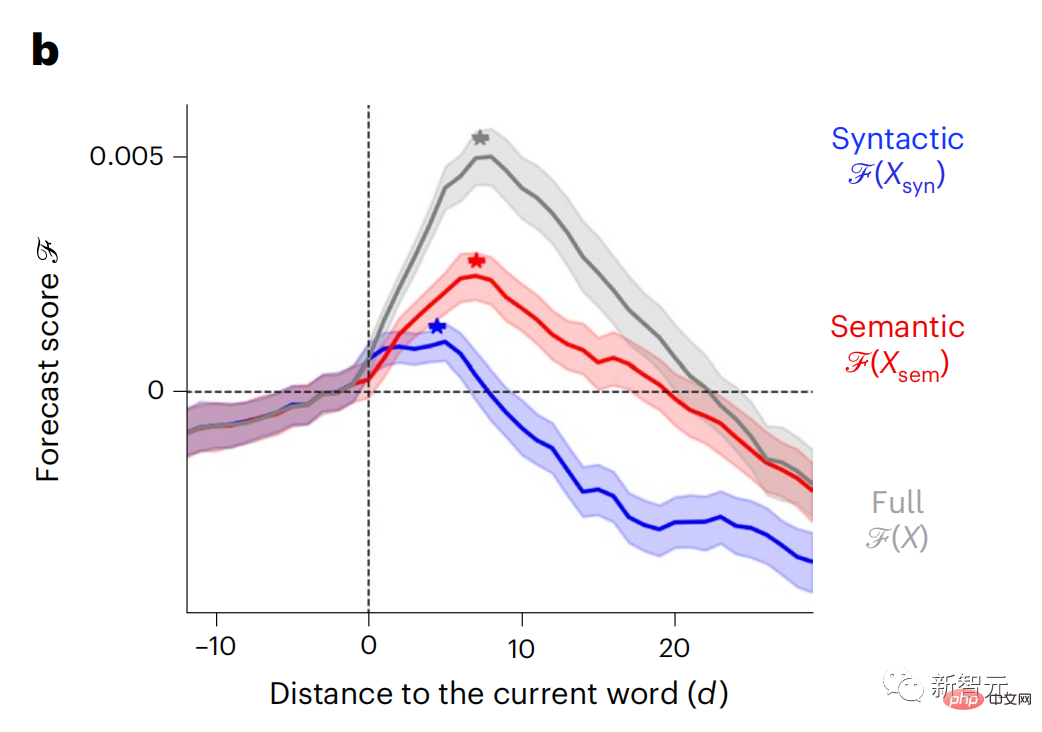

For each word, the researchers concatenated the model activations for the current word with a "prediction window" consisting of future words. The prediction window is parameterized by d, the distance between the current word and the last future word in the window, and w, the number of concatenated words. For each d, the brain scores with and without the predictive representation are compared to calculate the "prediction score".
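A minimal sketch of how such a window could be assembled is given below. The function names and the toy activations are hypothetical, and the PCA step that the study applies to the window is omitted for brevity.

```python
def forecast_window(activations, n, d, w):
    """Concatenate the activation vectors of the w words whose last word
    sits at distance d from word n, i.e. words n + d - w + 1 to n + d."""
    start, end = n + d - w + 1, n + d
    if start < 0 or end >= len(activations):
        return None  # the window runs past the boundaries of the text
    window = []
    for vec in activations[start:end + 1]:
        window.extend(vec)
    return window

def augmented_features(activations, n, d, w):
    """Current-word activations followed by the prediction window."""
    window = forecast_window(activations, n, d, w)
    if window is None:
        return None
    return list(activations[n]) + window

def prediction_score(score_with_window, score_without_window):
    """Gain in brain score obtained by adding the prediction window."""
    return score_with_window - score_without_window

# Toy activations: 12 words, 2 features per word
acts = [[float(i), float(i) + 0.5] for i in range(12)]
features = augmented_features(acts, n=2, d=4, w=3)
# Words 4, 5 and 6 form the window for word 2
```

With `n=2`, `d=4` and `w=3`, the window covers words 4 to 6, so `features` holds the two values of word 2 followed by the six values of the window.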

The results show that the prediction score is the highest when d=8, and the peak value appears in the brain area related to language processing.

d=8 corresponds to 3.15 seconds of audio, which is the time of two consecutive fMRI scans. Prediction scores were distributed bilaterally in the brain, except in the inferior frontal and supramarginal gyri.

Through supplementary analyses, the team also found that: (1) each future word at a distance of 0 to 10 from the current word contributes significantly to the prediction result; (2) predictive representations are best captured with a window size of around 8 words; (3) random predictive representations do not improve brain scores; and (4) words generated by GPT-2 achieve results similar to those of the true future words, but with lower scores.

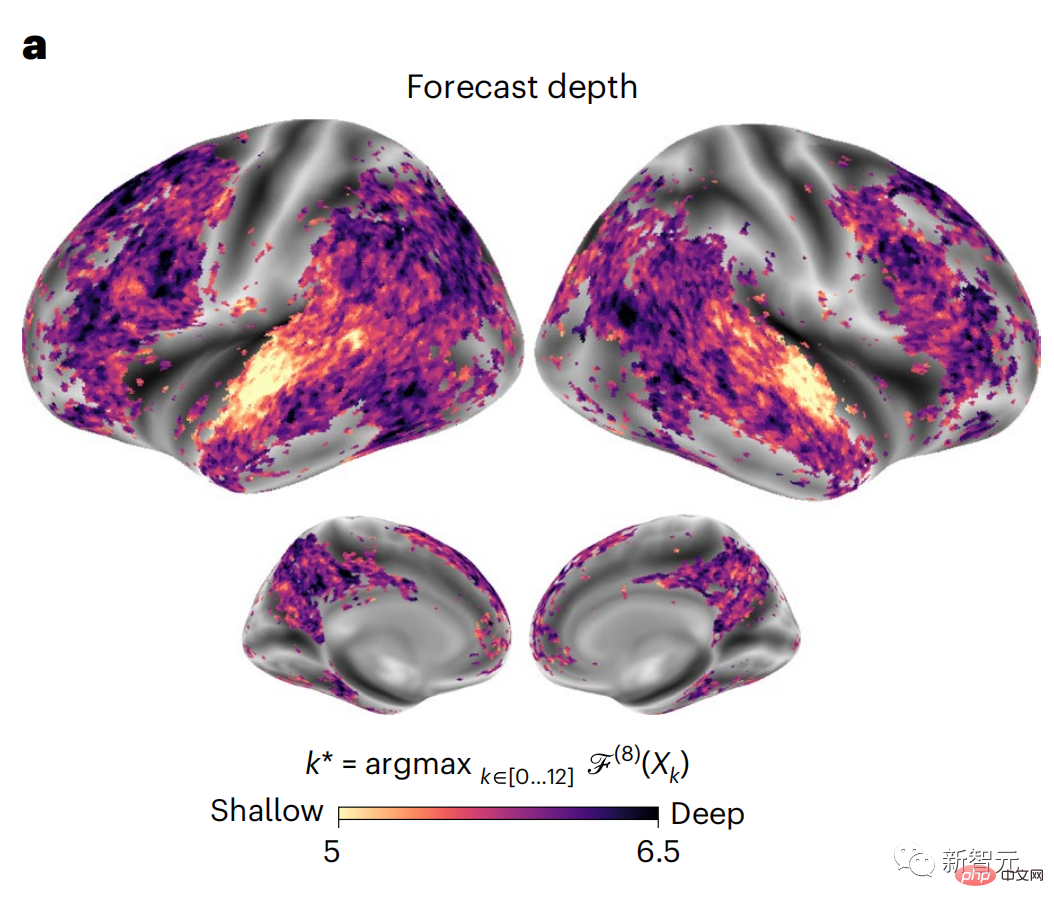

The prediction time range varies along the brain hierarchy

Anatomical and functional studies have shown that the cerebral cortex is hierarchically organized. Is the prediction time window the same at different levels of the cortex?

The researchers estimated the peak prediction score of each voxel and denoted the corresponding distance as d.

The results showed that the d corresponding to the prediction peak was larger on average in the prefrontal areas than in the temporal lobe areas (Figure 2e), and that d was larger in the inferior temporal gyrus than in the superior temporal sulcus.

The variation of the best prediction distance along the temporal-parietal-frontal axis is largely symmetrical across the two hemispheres of the brain.
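The per-voxel peak estimate described in this section amounts to an argmax over distances. The sketch below uses made-up scores and a hypothetical helper name, purely to show the bookkeeping.

```python
def peak_distance(scores_by_distance):
    """Return, for each voxel, the distance d at which its prediction
    score is highest. scores_by_distance maps each d to a list holding
    one prediction score per voxel."""
    distances = sorted(scores_by_distance)
    n_voxels = len(scores_by_distance[distances[0]])
    peaks = []
    for v in range(n_voxels):
        best_d = max(distances, key=lambda d: scores_by_distance[d][v])
        peaks.append(best_d)
    return peaks

# Invented scores for two voxels at three distances
toy_scores = {
    2: [0.010, 0.004],
    5: [0.015, 0.006],
    8: [0.012, 0.009],
}
peaks = peak_distance(toy_scores)
```

Here the first voxel peaks at d = 5 and the second at d = 8, the kind of regional difference the study reports between temporal and prefrontal areas.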

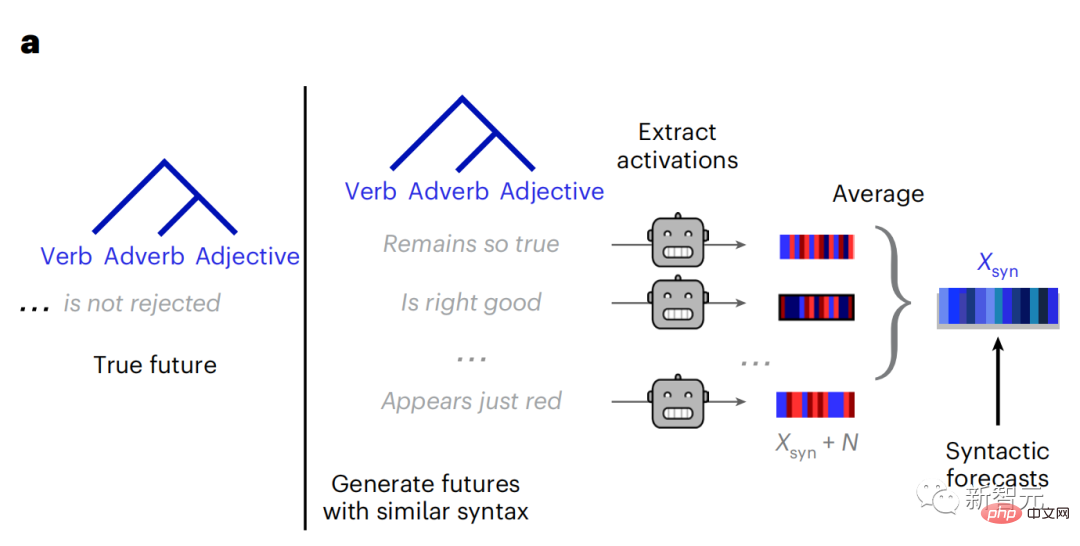

For each word and its preceding context, ten possible future words are generated that match the syntax of the true future words. For each possible future word, the corresponding GPT-2 activations are extracted, and these activations are then averaged. This approach makes it possible to decompose a given language model's activations into syntactic and semantic components and to compute a prediction score for each.
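One way to picture this decomposition is sketched below. Taking the average over the syntax-matched alternatives as the syntactic component follows the description above; treating the remainder of the full activation as the semantic component is an assumption of this sketch, and the function name and vectors are invented.

```python
def decompose(full_activation, matched_activations):
    """Split an activation vector into a syntactic part (the mean over
    activations of syntax-matched alternative words) and a semantic part
    (assumed here to be the residual of the full activation)."""
    k = len(matched_activations)
    dim = len(full_activation)
    syntactic = [
        sum(vec[i] for vec in matched_activations) / k for i in range(dim)
    ]
    semantic = [full_activation[i] - syntactic[i] for i in range(dim)]
    return syntactic, semantic

# Toy example: 2 alternatives instead of the 10 used in the study
full = [1.5, 1.0, 3.0]
matched = [[0.0, 2.0, 2.0], [2.0, 2.0, 4.0]]
syntactic, semantic = decompose(full, matched)
```

Averaging over words that share the same syntax but differ in meaning keeps what the alternatives have in common, which is why the mean is read as the syntactic part.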

The results show that semantic prediction is long-range (d = 8) and involves a distributed network peaking in the frontal and parietal lobes, while syntactic prediction is shorter-range (d = 5) and concentrated in the superior temporal and left frontal regions.

These results reveal multiple levels of prediction in the brain, in which the superior temporal cortex mainly predicts short-term, superficial and syntactic representations, while the inferior frontal and parietal regions mainly predict long-term, contextual, high-level and semantic representations.

The predictive context becomes more complex along the brain hierarchy

The prediction score is computed as before, but the GPT-2 layer used is varied in order to determine, for each voxel, k, the depth at which the prediction score is maximized.

The results show that the optimal prediction depth varies along the expected cortical hierarchy, with associative cortices best modeled by deeper predictions than lower-level language areas. Although small on average, the differences between regions are clearly noticeable across individuals.

In general, the long-range predictions of the frontal cortex are more contextual and higher-level than the short-term predictions of lower-level brain areas.

Adapting GPT-2 to a predictive coding architecture

Concatenating GPT-2's representations of the current word and of future words yields a better model of brain activity, especially in the frontal areas.

Can fine-tuning GPT-2 to predict representations at greater distances, with richer backgrounds, and higher levels of hierarchy improve brain mapping of these regions?

During fine-tuning, the model is trained not only on language modeling but also on a high-level, long-range objective. The high-level target here is layer 8 of the pre-trained GPT-2 model.
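A combined objective of this kind can be illustrated as a weighted sum of two losses. Everything specific in the sketch below is an assumption made for illustration: the weighting parameter `alpha`, the use of mean squared error for the high-level term, and the function names are not taken from the article.

```python
import math

def language_loss(next_word_probs, target_index):
    """Standard next-word objective: negative log-probability of the
    word that actually follows."""
    return -math.log(next_word_probs[target_index])

def high_level_loss(predicted, target):
    """Assumed stand-in for the high-level objective: mean squared error
    between a predicted vector and the layer-8 activation of a distant
    future word."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target)) / len(target)

def combined_loss(lm, hl, alpha):
    """Weighted mix of the two objectives; alpha = 0 recovers plain
    language modeling."""
    return (1 - alpha) * lm + alpha * hl

lm = language_loss([0.25, 0.5, 0.25], target_index=1)
hl = high_level_loss([1.0, 2.0], [0.0, 2.0])
total = combined_loss(lm, hl, alpha=0.5)
```

Setting `alpha` to 0 gives ordinary next-word training, so the weight controls how strongly the long-range, high-level target shapes the fine-tuned model.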

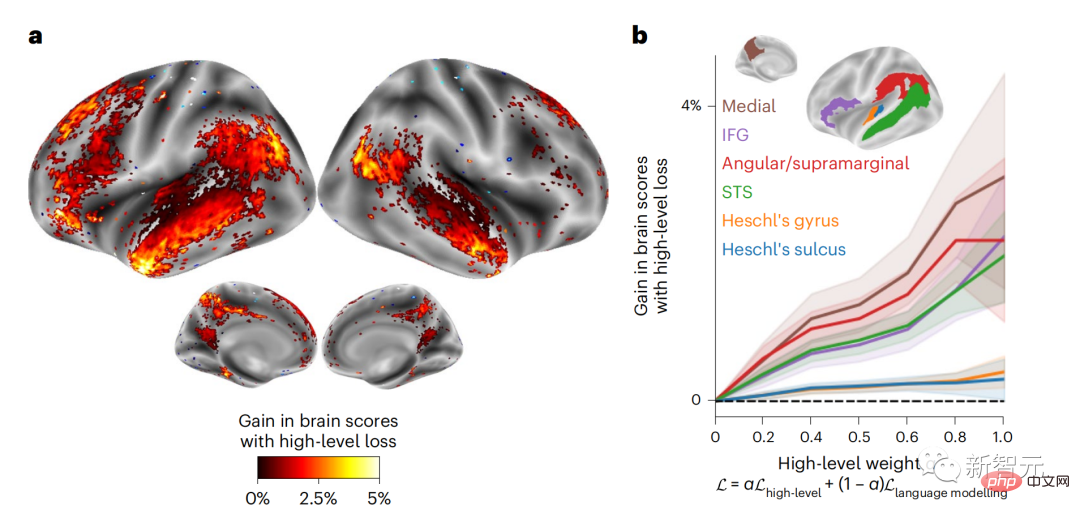

The results showed that fine-tuning GPT-2 with the high-level, long-range objective improved frontal lobe responses the most, while the auditory areas and lower-level brain regions did not benefit significantly from such high-level targets, further reflecting the role of frontal regions in predicting long-range, contextual and high-level representations of language.

Reference: https://m.sbmmt.com/link/7eab47bf3a57db8e440e5a788467c37f
