Hinton, Turing Award winner and deep learning pioneer, once predicted, "People should stop training radiologists now. It is obvious that in five years, deep learning will do better than radiologists. It may take 10 years, but we already have enough radiologists."

"I think if you work as a radiologist, you are like the coyote that has already run off the edge of the cliff but has not yet looked down."

Nearly seven years have passed, and artificial intelligence has taken over only part of the technical work of radiologists. It still suffers from problems such as single-purpose functionality and insufficient training data, so radiologists continue to hold their jobs firmly.

But since the release of ChatGPT-class foundation models, the capabilities of artificial intelligence models have improved dramatically. They can handle multimodal data and, through in-context learning, adapt to new tasks without fine-tuning. The rapid development of highly flexible and reusable artificial intelligence models may introduce new capabilities in the medical field.

Recently, researchers from top universities and medical institutions, including Harvard University, Stanford University, and Yale School of Medicine in the United States and the University of Toronto in Canada, jointly proposed in Nature a new paradigm of medical artificial intelligence, namely "generalist medical artificial intelligence (GMAI)".

Paper link: https://www.nature.com/articles/s41586-023-05881-4

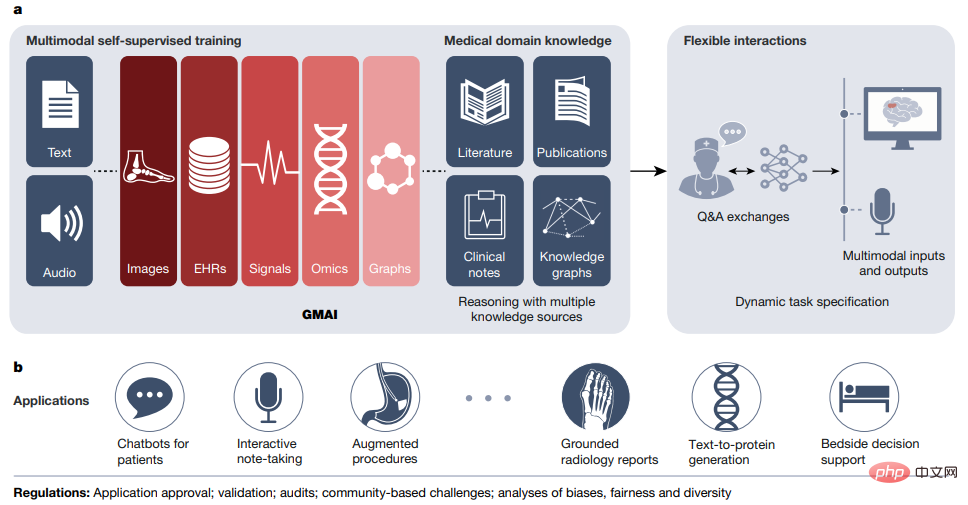

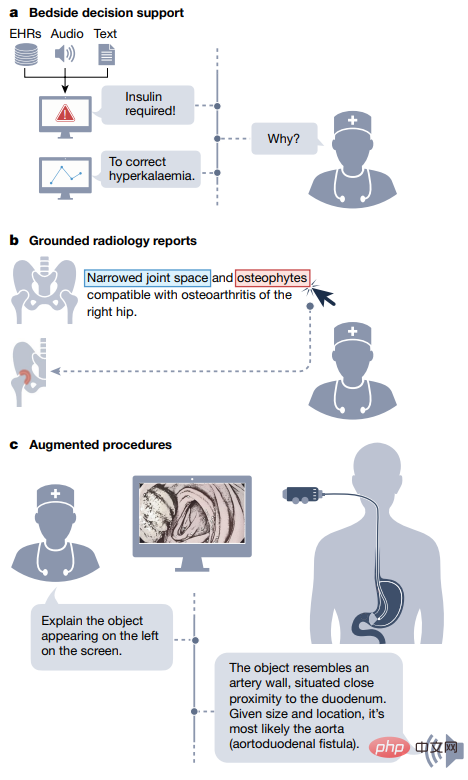

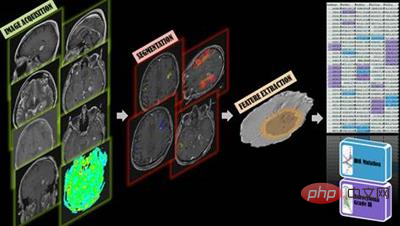

GMAI models will be able to perform a wide variety of tasks using little or no task-specific labeled data. Through self-supervised training on large, diverse datasets, GMAI can flexibly interpret different combinations of medical modalities, including data from imaging, electronic health records, lab results, genomics, charts, or medical text.

In turn, models can also generate expressive outputs such as free-text explanations, verbal recommendations, or image annotations, demonstrating advanced medical reasoning capabilities.

In the article, the researchers identified a set of high-impact potential application scenarios for GMAI and listed specific technical capabilities and training data sets.

The author team anticipates that GMAI applications will challenge current strategies for regulating and validating medical AI devices and will change practices associated with the collection of large medical data sets.

Potential of General Models for Medical AI

GMAI models are expected to solve more diverse and challenging tasks than current medical AI models, even when few or no labels are available for a specific task.

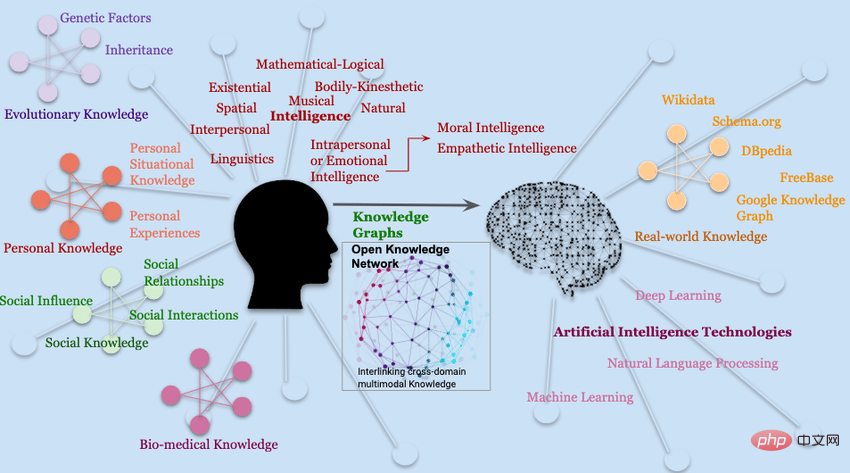

Among the three defining capabilities of GMAI, "the ability to perform dynamically specified tasks" and "the ability to support flexible combinations of data modalities" allow for flexible interaction between GMAI models and users; the third capability requires the GMAI model to formally represent knowledge in the medical field and be able to perform advanced medical reasoning.

Some recently released foundation models have already demonstrated some of the capabilities of GMAI: by flexibly combining multiple modalities, they can be given new tasks dynamically. However, building a GMAI model with all three capabilities still requires further development. For example, existing models that show medical reasoning (such as GPT-3 or PaLM) are not multimodal and cannot reliably generate factual statements.

Flexible interactions

GMAI gives users the ability to interact with models through custom queries, which makes AI insights more accessible to different audiences and provides greater flexibility across tasks and settings.

Current artificial intelligence models can handle only a very limited set of tasks and generate a rigid, predetermined set of outputs. For example, a model built to detect a specific disease accepts one kind of image and outputs the likelihood of that disease.

In contrast, custom queries let users ask whatever questions come to mind, such as "Explain this mass that appears on the head MRI scan. Is it more likely to be a tumor or an abscess?".

In addition, queries allow users to customize the format of the output, such as "This is a follow-up MRI scan of a glioblastoma patient. Highlight the parts that may be tumors in red."

Custom queries enable two key capabilities, namely "Dynamic Tasks" and "Multimodal Input and Output".

Custom queries can teach AI models to solve new problems on the fly, dynamically specifying new tasks without having to retrain the model.

For example, GMAI can answer highly specific, previously unseen questions, such as "Based on this ultrasound result, how thick is the gallbladder wall in millimeters?"

GMAI models may have difficulty completing new tasks involving unknown concepts or pathologies, but in-context learning allows users to teach GMAI new concepts with very few examples, such as "Here are the medical histories of 10 patients who previously suffered from a newly emerging disease, namely infection with Langya henipavirus. How likely is it that this current patient is also infected with Langya henipavirus?".
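The in-context learning idea described above amounts to specifying the task inside the input rather than by retraining. A minimal sketch of how such a few-shot query might be assembled as text follows; the function `build_few_shot_prompt` and the placeholder histories are hypothetical and are not taken from the paper or from any real GMAI system, and no model is actually called.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a few-shot prompt: instruction, labeled examples, then the new case.

    `examples` is a list of (case_text, label) pairs. No model is called here;
    the point is that the task is specified in the input, not by retraining.
    """
    lines = [instruction, ""]
    for i, (case_text, label) in enumerate(examples, start=1):
        lines.append(f"Example {i}: {case_text}")
        lines.append(f"Answer {i}: {label}")
        lines.append("")
    lines.append(f"New case: {query}")
    lines.append("Answer:")
    return "\n".join(lines)


# Placeholder strings only; real histories would replace the bracketed text.
examples = [
    ("<medical history of patient 1>", "infected"),
    ("<medical history of patient 2>", "not infected"),
]
prompt = build_few_shot_prompt(
    "Decide whether each patient is likely infected with Langya henipavirus.",
    examples,
    "<medical history of the current patient>",
)
print(prompt)
```

The resulting string would be sent to the model unchanged; adding or swapping examples changes the task without touching any model weights.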

Custom queries can also accept complex medical information containing multiple modalities. For example, when a clinician asks about a diagnosis, he or she may enter reports, waveform signals, laboratory results, genomic profiles, imaging studies, and so on. GMAI models can also flexibly incorporate different modalities into their answers; for example, users may ask for a textual answer with accompanying visual information.

Medical field knowledge

In sharp contrast to clinicians, traditional medical artificial intelligence models usually lack background knowledge of the medical field (such as pathophysiological processes) before they are trained for their specific tasks, and must rely entirely on statistical correlations between the features of the input data and the prediction target.

Lack of background information can make it difficult to train a model for a specific medical task, especially when task data is scarce.

GMAI models can address these deficiencies by formally representing medical knowledge. Structures such as knowledge graphs allow a model to reason about medical concepts and the relationships between them; in addition, building on retrieval-based methods, GMAI can retrieve relevant background from existing databases in the form of articles, images, or previous cases.
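The retrieval step described above can be illustrated with a toy example. This is only a sketch under simplifying assumptions: word overlap stands in for the learned similarity a real system would use, and the functions `tokenize` and `retrieve` and the three-snippet corpus are hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, corpus, k=2):
    """Return up to k documents sharing the most word tokens with the query.

    Word overlap is a stand-in for the learned similarity a real system
    would use; documents with zero overlap are dropped.
    """
    q = tokenize(query)
    scored = [(len(q & tokenize(doc)), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


# Hypothetical snippets standing in for articles or previous cases.
corpus = [
    "Chest trauma can precede acute respiratory distress syndrome.",
    "Gallbladder wall thickness is measured on ultrasound.",
    "Glioblastoma follow-up relies on repeated MRI scans.",
]
context = retrieve("patient admitted with severe chest trauma", corpus, k=1)
print(context)
```

The retrieved snippets would then be placed in the model's input alongside the clinician's question, so that the answer can draw on them.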

The resulting model can issue self-explanatory warnings, such as "This patient may develop acute respiratory distress syndrome, because the patient was recently admitted with severe chest trauma and the partial pressure of oxygen in the patient's arterial blood continues to decrease even though the fraction of inspired oxygen has been increased."

Because a GMAI model may even be asked to provide treatment recommendations, despite being trained mostly on observational data, its ability to infer and exploit causal relationships between medical concepts and clinical findings will play a key role in clinical applicability.

Finally, by accessing rich molecular and clinical knowledge, GMAI models can solve data-limited tasks by drawing on knowledge of related problems.

GMAI has the potential to impact the actual process of medical care by improving care and reducing clinician workload.

Controllability

GMAI allows users to finely control the format of its output, making complex medical information easier to access and understand. This requires GMAI models that can restate their output according to the needs of the audience.

The visualizations provided by GMAI also need to be carefully tailored, for example by changing the viewpoint or labeling important features with text. The model can also potentially adjust the level of domain-specific detail in its output, or translate the output into multiple languages to communicate effectively with different users.

Finally, GMAI's flexibility enables it to be adapted to a specific region or hospital, following local customs and policies. Users may need formal guidance on how to query a GMAI model and how to use its output effectively.

Adaptability

Existing medical artificial intelligence models struggle to cope with distribution shifts, yet the distribution of data can change dramatically due to ongoing changes in technology, procedures, environments, or populations.

GMAI can keep up with the pace of change through in-context learning. For example, a hospital can teach a GMAI model to interpret X-rays from a brand-new scanner simply by providing a prompt and a few examples.

In other words, GMAI can adapt to a new data distribution on the fly, while traditional medical artificial intelligence models need to be retrained on new data sets. However, in-context learning has so far been observed mainly in large language models.

To ensure that GMAI can adapt to changes in context, GMAI models need to be trained on diverse data from multiple complementary data sources.

For example, to adapt to new variants of coronavirus disease 2019, a successful model can retrieve the characteristics of past variants and update them when given new context in a query. A clinician might simply type "Check these chest X-rays for Omicron".

The model can then draw a comparison with the Delta variant, treating bronchial and perivascular infiltrates as key signals.

While users can manually adjust model behavior via prompts, new techniques can also play a role in automatically incorporating human feedback.

Users can rate or comment on each output of the GMAI model, and this feedback can change the model's behavior, much like the reinforcement learning from human feedback technique used by ChatGPT.

Applicability

Large-scale artificial intelligence models have become the foundation for downstream applications. GPT-3, for example, was providing technical support for more than 300 applications in different industries within a few months of its release.

Among medical foundation models, CheXzero can be used to detect dozens of diseases in chest X-rays and does not require training on explicit labels for these diseases.

The paradigm shift towards GMAI will drive the development and release of large-scale medical AI models with broad capabilities that can serve as the basis for a variety of downstream clinical applications, which either use GMAI's output directly or treat GMAI's results as an intermediate representation that is subsequently fed into a small domain-specific model.

It should be noted that this flexible applicability is also a double-edged sword: any faults in the foundation model will propagate to downstream applications.

Although GMAI models have many advantages, the safety stakes in the medical field are particularly high compared with other fields, so challenges remain in ensuring safe deployment.

Validation

Because of their unprecedented versatility, GMAI models are also very difficult to validate.

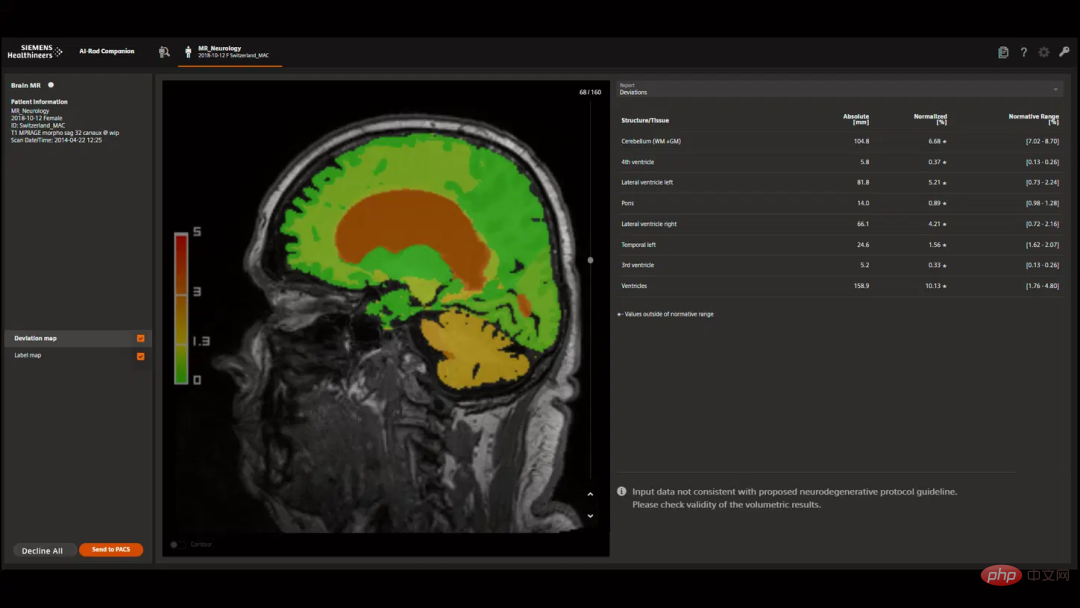

Current AI models are designed for specific tasks, so they only need to be validated in those predefined use cases, such as diagnosing certain types of cancer from brain MRIs.

But GMAI models can also perform previously unseen tasks first proposed by end users (such as diagnosing other diseases in brain MRIs), and anticipating all of their failure modes is a much harder problem.

Developers and regulators need to be responsible for explaining how GMAI models have been tested and what use cases they are approved for. The GMAI interface itself should be designed to warn of "off-label use" when entering unknown territory, rather than confidently fabricating inaccurate information.
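One simple way to picture an "off-label use" warning is a wrapper that compares the requested task against a list of validated use cases. The sketch below is purely illustrative: `APPROVED_USE_CASES`, `answer_with_guard`, and `fake_model` are hypothetical names, and a real interface would have to recognize tasks from free-form queries rather than from fixed labels.

```python
APPROVED_USE_CASES = {"brain_mri_tumor_detection"}  # hypothetical approved list


def answer_with_guard(task, run_model):
    """Run the model, attaching an off-label warning for unapproved tasks.

    `run_model` is any callable taking the task name and returning text.
    """
    output = run_model(task)
    if task in APPROVED_USE_CASES:
        return {"output": output, "warning": None}
    return {
        "output": output,
        "warning": f"Off-label use: '{task}' has not been validated.",
    }


def fake_model(task):
    """Placeholder standing in for a real model call."""
    return f"result for {task}"


approved = answer_with_guard("brain_mri_tumor_detection", fake_model)
off_label = answer_with_guard("brain_mri_stroke_detection", fake_model)
print(approved)
print(off_label)
```

Returning the warning alongside the output, instead of silently answering, keeps the decision about whether to trust the result with the clinician.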

More broadly, GMAI’s unique breadth of capabilities requires regulatory foresight and requires institutional and government policies to adapt to a new paradigm that will also reshape insurance arrangements and the allocation of responsibilities.

Verification

Compared with traditional artificial intelligence models, GMAI models can handle unusually complex inputs and outputs, making it more difficult for clinicians to determine whether they are correct.

For example, a traditional model may consider only the results of one imaging study when classifying a patient's cancer, so a single radiologist or pathologist is enough to verify whether the model's output is correct.

A GMAI model, by contrast, may consider more than one kind of input and may output an initial classification, treatment recommendations, and a multimodal justification involving visualizations, statistical analyses, and literature references.

In this case, a multidisciplinary team (composed of radiologists, pathologists, oncologists, and other experts) may be needed to judge whether the output of GMAI is correct.

Therefore, fact-checking GMAI output is a serious challenge, both during validation and after model deployment.

Developers can make GMAI output easier to verify by incorporating explainability techniques, for example by having GMAI output include clickable links to the literature and to specific evidence passages, so that clinicians can verify GMAI's predictions more efficiently.

Finally, it is crucial that GMAI models accurately express uncertainty and prevent misleading users with overconfident statements.

Social bias

Medical AI models may perpetuate societal biases and cause harm to marginalized groups.

These risks may be even more pronounced when developing GMAI, where the need for massive amounts of data and the complexity involved make it difficult to ensure that a model is free of undesirable biases.

GMAI models must be thoroughly validated to ensure they do not perform poorly in specific populations, such as minority groups.

Even after deployment, models require ongoing auditing and monitoring, because new problems may arise as a model encounters new tasks and environments, and these need to be quickly identified and fixed. Addressing bias must be a priority for developers, vendors, and regulators.

Privacy

The development and use of GMAI models poses serious risks to patient privacy, because these models may have access to rich patient characteristics, including clinical measurements and signals, molecular signatures and demographic information, and behavioral and sensory tracking data.

In addition, GMAI models may use larger architectures, which are more prone to memorizing training data and repeating it directly to users, potentially exposing sensitive patient data from the training data set.

The damage caused by exposed data can be reduced by de-identifying data and limiting the amount of information collected on individual patients.
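Both mitigations mentioned above, de-identification and collecting less, can be pictured as an allow-list filter applied to each record before it enters a training set. This is a deliberately simplified sketch: `ALLOWED_FIELDS`, `deidentify`, and the sample record are made up for illustration, and real de-identification also has to deal with dates, free text, and re-identification through combinations of fields.

```python
ALLOWED_FIELDS = {"age_band", "diagnosis_code", "lab_result"}  # hypothetical allow-list


def deidentify(record):
    """Keep only allow-listed fields, dropping names, IDs, and anything else."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


# Made-up record for illustration only.
record = {
    "name": "Jane Doe",
    "patient_id": "000000",
    "age_band": "40-49",
    "diagnosis_code": "EXAMPLE-1",
    "lab_result": 7.2,
}
clean = deidentify(record)
print(clean)
```

An allow-list is used here rather than a block-list so that any newly added field is excluded by default until someone explicitly approves it.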

Privacy issues are not limited to training data. A deployed GMAI model may also expose current patient data. For example, prompts can trick models such as GPT-3 into ignoring previous instructions; malicious users could force a model to ignore "do not expose information" instructions in order to extract sensitive data.
