Microsoft recently published a paper on HuggingGPT, titled HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face[1]. This article is an interpretation of the paper.

Who are the "friends" in the title? Judging from the paper, they appear to be the large language models represented by GPT-4 together with various expert models. An expert model, as opposed to a general-purpose model, is a model for a specific field, such as medicine or finance.

Hugging Face is an open source machine learning community and platform.

The following questions and answers give a quick overview of the paper's main content.

- What is the idea behind HuggingGPT and how does it work?

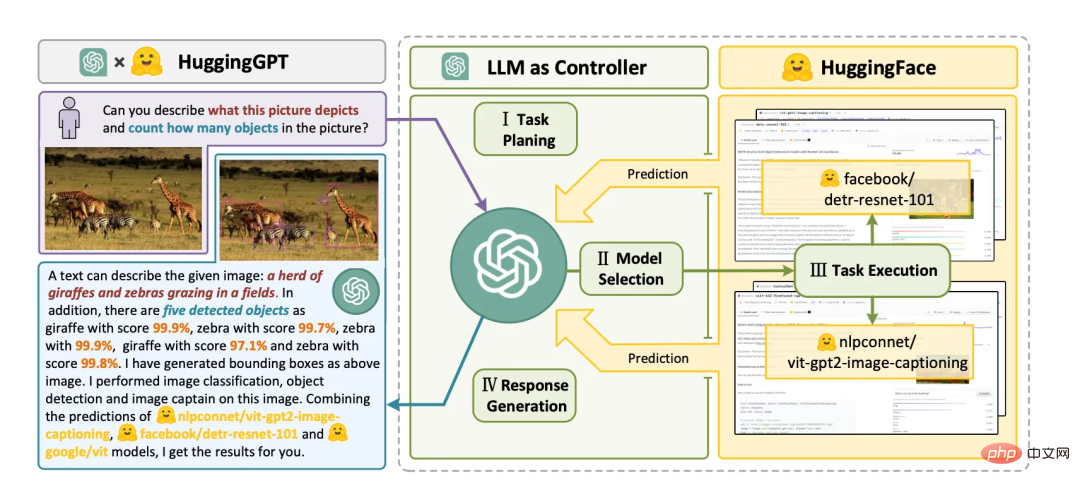

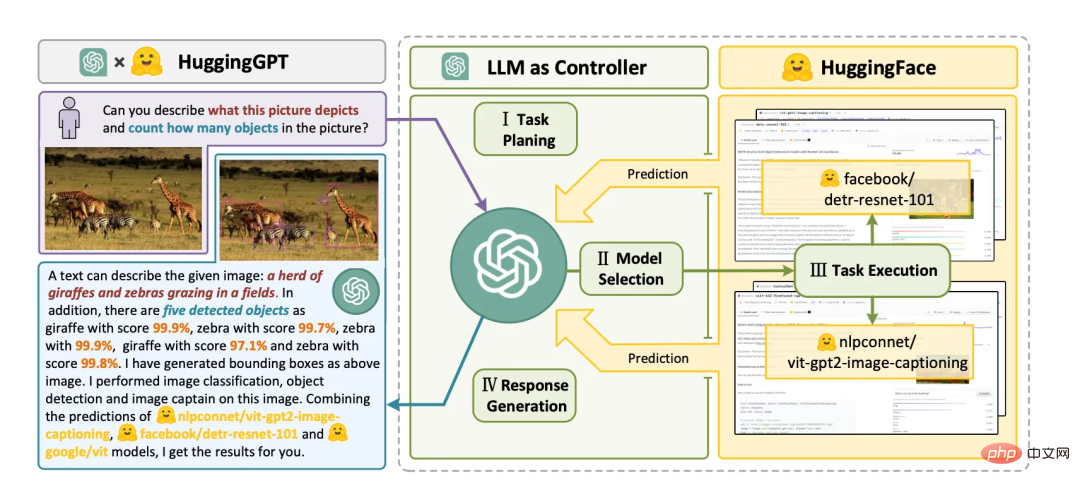

The idea behind HuggingGPT is to use a large language model (LLM) as the controller that manages AI models and solves complex AI tasks. HuggingGPT leverages the LLM's strengths in understanding and reasoning to analyze user requests and decompose them into multiple subtasks. Then, based on the descriptions of the expert models, HuggingGPT assigns the most suitable model to each subtask and integrates the results of the different models. The workflow has four stages: task planning, model selection, task execution, and response generation. (Pages 4 and 16)

- How does HuggingGPT use language as a common interface to enhance AI models?

HuggingGPT uses language as a common interface by having a large language model (LLM) serve as the controller that manages AI models. The LLM understands and reasons about the user's natural language request, then decomposes the task into multiple subtasks. Based on the descriptions of the expert models, HuggingGPT assigns the most suitable model to each subtask and integrates the results of the different models. This approach lets HuggingGPT cover complex AI tasks across many modalities and domains, including language, vision, speech, and other challenging tasks. (Pages 1 and 16)

- How does HuggingGPT use large language models to manage existing AI models?

HuggingGPT uses the large language model as an interface that routes user requests to expert models, effectively combining the language understanding of the LLM with the expertise of the expert models. The large language model acts as the brain for planning and decision-making, while the smaller models act as executors of each specific task. This collaboration protocol between models offers a new way to design general AI models. (Pages 3-4)

- What kind of complex AI tasks can HuggingGPT solve?

HuggingGPT can solve a wide range of tasks across modalities such as language, image, audio, and video, in forms such as detection, generation, classification, and question answering. Examples from the 24 tasks that HuggingGPT can solve include text classification, object detection, semantic segmentation, image generation, question answering, text-to-speech, and text-to-video. (Page 3)

- Can HuggingGPT be used with different types of AI models, or is it limited to specific models?

HuggingGPT is not limited to specific AI models or to visual perception tasks. It can solve tasks in any modality or domain by using a large language model to organize cooperation between models. Under the LLM's planning, task procedures can be specified effectively and more complex problems can be solved. HuggingGPT takes a more open approach, assigning and organizing tasks according to model descriptions. (Page 4)
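To make "assigning tasks according to model descriptions" concrete, here is a minimal sketch. It is not the paper's method: in HuggingGPT the LLM reads the descriptions and chooses, whereas this example substitutes a simple word-overlap score. The `ModelCard` type, the `select_model` function, and the catalog entries are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ModelCard:
    # Hypothetical record: a model name plus its free-text description.
    name: str
    description: str


def select_model(task: str, candidates: list[ModelCard]) -> ModelCard:
    """Pick the candidate whose description shares the most words with the task.

    Word overlap is only a stand-in for the LLM's judgment.
    """
    task_words = set(task.lower().split())

    def overlap(card: ModelCard) -> int:
        return len(task_words & set(card.description.lower().split()))

    return max(candidates, key=overlap)


# Made-up catalog; a real system would read descriptions from a model hub.
CATALOG = [
    ModelCard("detector-a", "object detection in images"),
    ModelCard("speaker-b", "text to speech synthesis"),
    ModelCard("classifier-c", "text classification for short documents"),
]

chosen = select_model("object detection", CATALOG)
print(chosen.name)
```

Because selection depends only on the descriptions, adding a new expert model to the catalog requires no change to the selection logic, which is the "open" property the paper emphasizes.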

HuggingGPT can be understood by analogy with microservice and cloud-native architectures, which are very popular now. HuggingGPT is the controller, which can be implemented with GPT-4 and is responsible for processing natural language input: decomposition, planning, and scheduling. Scheduling here means dispatching work to workers, that is, other large language models (LLMs) and expert models (domain-specific models). Finally, the workers return their results to the controller, which integrates them, converts them into natural language, and returns that to the user.

HuggingGPT’s workflow includes four stages:

- Task planning: Use ChatGPT to analyze the user's request, understand its intent, and decompose it into solvable tasks.

- Model selection: To solve the planned tasks, ChatGPT selects AI models hosted on Hugging Face based on their descriptions.

- Task execution: Call and execute each selected model and return the results to ChatGPT.

- Response generation: Finally, use ChatGPT to integrate the predictions of all models and generate a response.
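The four stages can be sketched as a small pipeline. This is an illustrative toy under stated assumptions, not the paper's implementation: the planner uses keyword checks where HuggingGPT would prompt ChatGPT, and the workers are lambdas standing in for models hosted on Hugging Face. Every name here (`WORKERS`, `plan_tasks`, `handle`, the model names, `photo.jpg`) is hypothetical.

```python
from typing import Callable

# Hypothetical worker registry: task type -> (model name, callable).
# The lambdas are placeholders for real expert models.
WORKERS: dict[str, tuple[str, Callable[[str], str]]] = {
    "image-captioning": ("captioner-x", lambda arg: f"caption of {arg}"),
    "text-to-speech": ("speaker-y", lambda arg: f"audio of {arg}"),
}


def plan_tasks(request: str) -> list[dict]:
    """Stage 1: task planning (keyword rules stand in for the LLM)."""
    tasks = []
    if "describe" in request:
        tasks.append({"type": "image-captioning", "arg": "photo.jpg"})
    if "read aloud" in request:
        tasks.append({"type": "text-to-speech", "arg": "the caption"})
    return tasks


def select_models(tasks: list[dict]) -> list[dict]:
    """Stage 2: model selection, attaching a model name to each task."""
    return [{**t, "model": WORKERS[t["type"]][0]} for t in tasks]


def execute_tasks(tasks: list[dict]) -> list[dict]:
    """Stage 3: task execution, running each selected worker."""
    return [{**t, "result": WORKERS[t["type"]][1](t["arg"])} for t in tasks]


def generate_response(results: list[dict]) -> str:
    """Stage 4: response generation (a plain join instead of an LLM summary)."""
    return "\n".join(f"{r['model']}: {r['result']}" for r in results)


def handle(request: str) -> str:
    """Controller: run the four stages in order."""
    return generate_response(execute_tasks(select_models(plan_tasks(request))))


print(handle("describe this photo and read aloud the description"))
```

Keeping each stage as its own function mirrors the controller and worker split: only `plan_tasks` and `generate_response` would need an LLM, while `execute_tasks` only calls whatever models were selected.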

Reference link

[1] HuggingGPT: Solving AI Tasks with ChatGPT and its Friends in Hugging Face: https://arxiv.org/pdf/2104.06674.pdf
