AI often behaves like a black box: it makes decisions on its own, but people cannot see why. We build a model, feed it data, and get results, yet we cannot explain how the model reached its conclusion. We need to understand the reasoning behind a prediction rather than accept an output that comes without context or explanation.

Interpretability is designed to help people understand:

In this article, I will introduce 6 Python frameworks for interpretability.

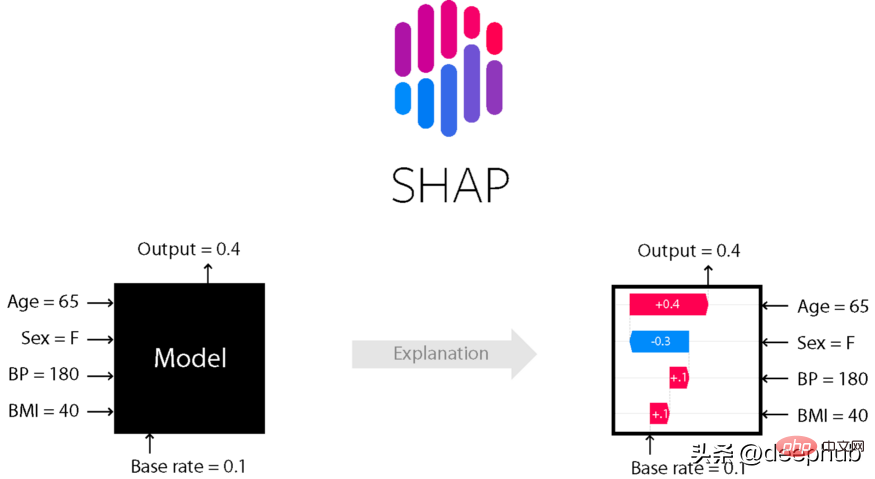

SHAP (SHapley Additive exPlanations) is a game-theoretic method for explaining the output of any machine learning model. It uses the classic Shapley value from game theory and its related extensions to connect optimal credit allocation with local explanations (see the paper for details and citations).

The Shapley value explains how much each feature in the dataset contributes to a model prediction. Lundberg and Lee published the SHAP algorithm in 2017, and it has since been widely adopted by the community in many different fields.
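
To make the idea of per-feature contributions concrete, here is a minimal sketch that computes exact Shapley values by brute force. It is not the SHAP library's implementation (which relies on faster approximations); `shapley_values`, `toy_model`, and the all-zero baseline are hypothetical names and choices made only for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance `x`, relative to `baseline`."""
    n = len(x)
    features = range(n)

    def value(subset):
        # Features in `subset` keep their value from x; the rest use the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in features]
        return predict(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(v):
    # Hypothetical model: two linear terms plus an interaction between v[0] and v[2].
    return 2.0 * v[0] + 3.0 * v[1] + v[0] * v[2]


x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, baseline)
print(phi)  # approximately [4.0, 6.0, 2.0]
```

The contributions add up to the difference between the prediction for `x` and the prediction for the baseline, and the interaction term is split evenly between the two features involved. The brute-force loop visits every feature subset, so it is only practical for a handful of features.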

Use pip or conda to install the shap library.

    # install with pip
    pip install shap

    # install with conda
    conda install -c conda-forge shap

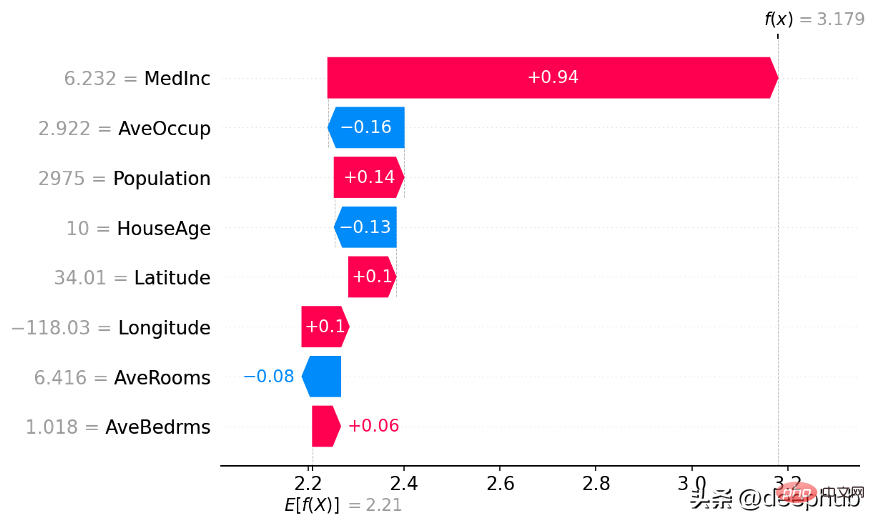

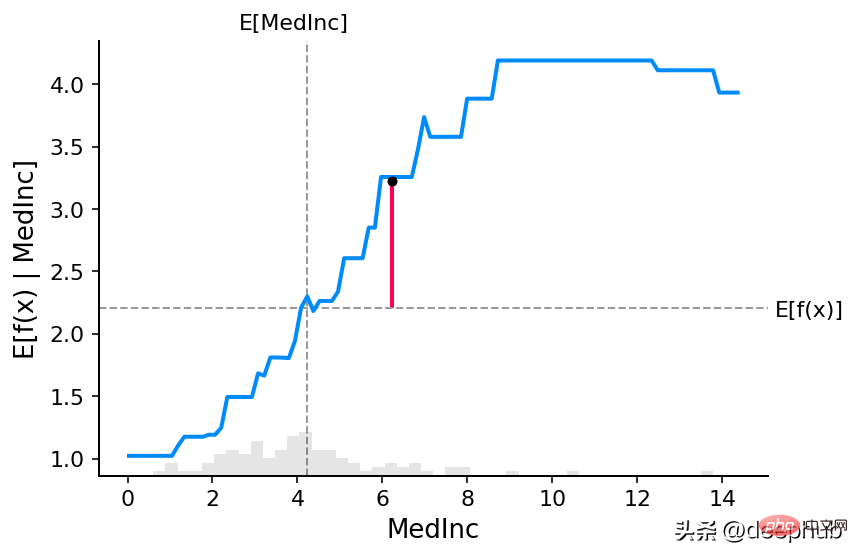

Waterfall chart built with the SHAP library

LIME

Use pip to install the lime library.

    pip install lime

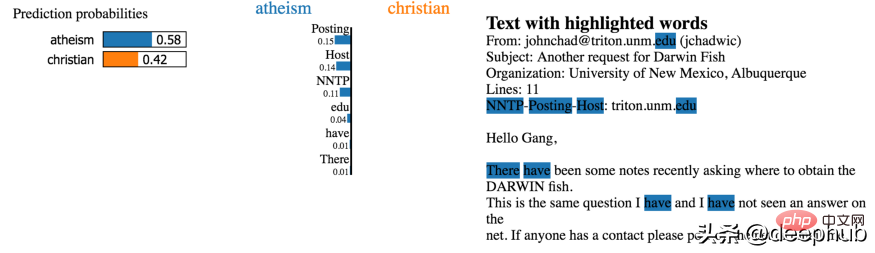

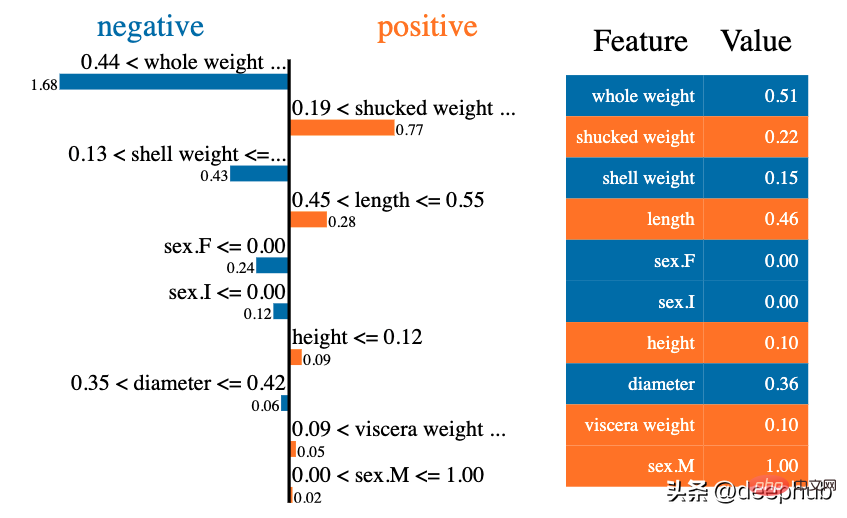

Local explanation plot built with LIME


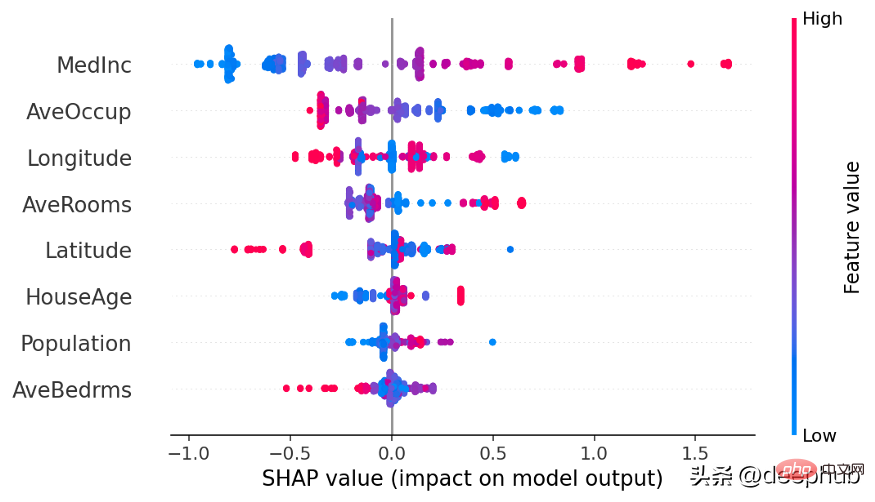

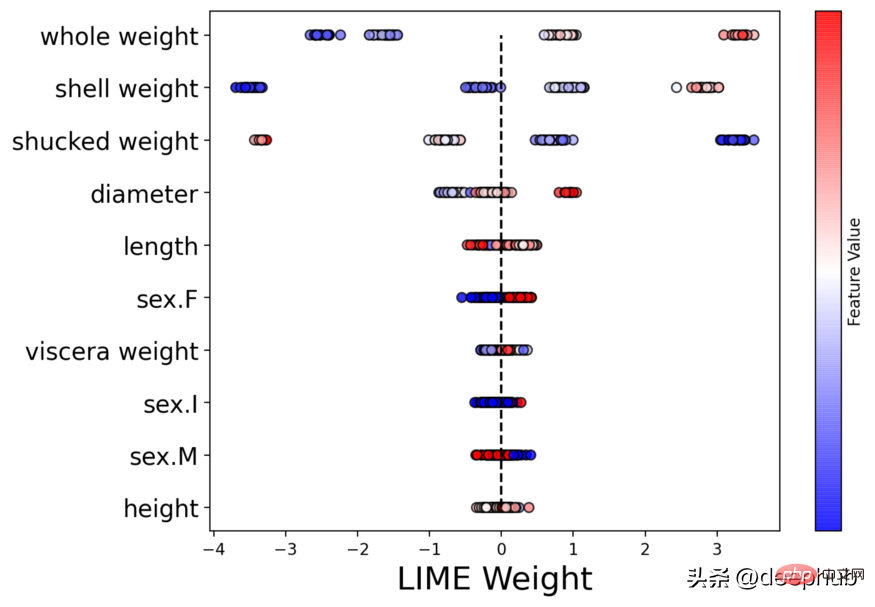

Beeswarm graph built by LIME


Shapash

To communicate the stories, insights, and model findings in your data, interactivity and attractive charts are essential. The best way for business users and data scientists/analysts to present and interact with AI/ML results is to visualize them and put them on the web. The Shapash library can generate interactive dashboards and includes a collection of visualization charts for SHAP/LIME-style interpretability. It can use SHAP or LIME as the backend, so its main contribution is clearer, better-looking charts rather than a new explanation method.

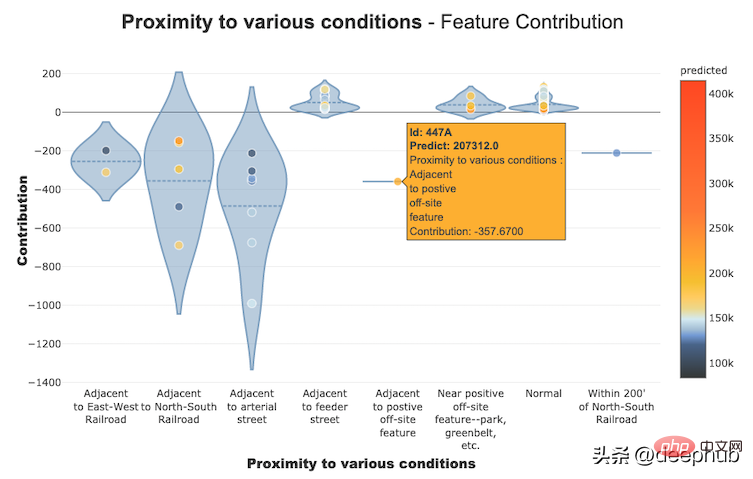

Feature contribution plot built with Shapash


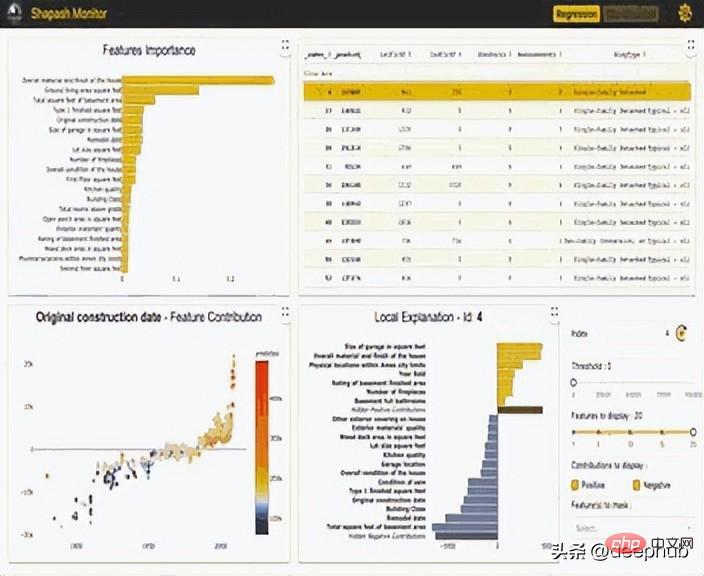

Interactive dashboard created using Shapash library


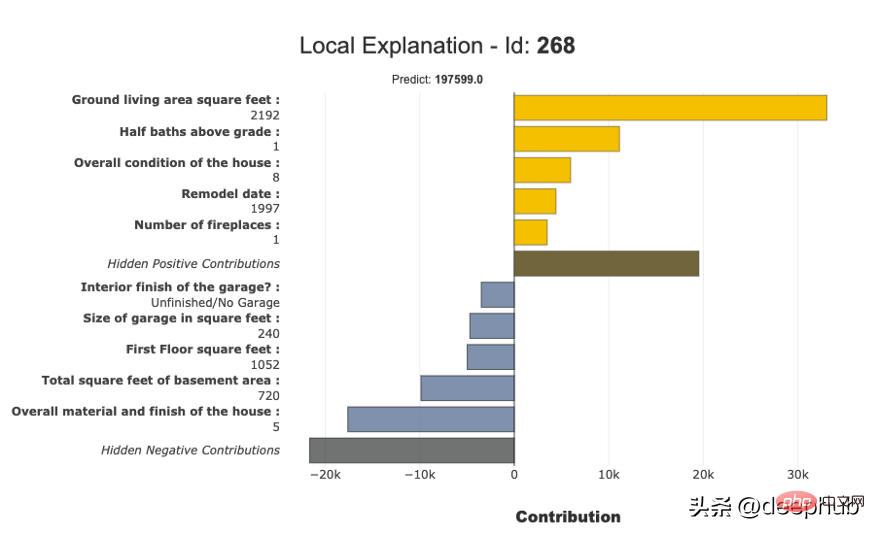

Local explanation plot built with Shapash


InterpretML

InterpretML offers two types of interpretability: glassbox models, which are machine learning models designed to be interpretable (e.g. linear models, rule lists, generalized additive models), and black-box interpretability techniques, which are used to explain existing systems (e.g. partial dependence, LIME). With a unified API that wraps multiple methods and a built-in, extensible visualization platform, the package lets researchers easily compare interpretability algorithms. InterpretML also includes the first implementation of the Explainable Boosting Machine (EBM), a powerful, interpretable glassbox model that can be as accurate as many black-box models.

Local explanation interactive graph built using InterpretML

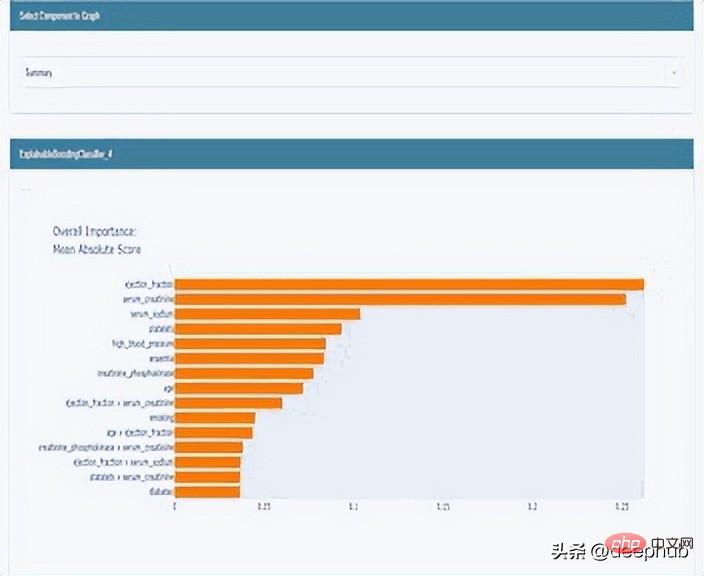

Global explanation graph built using InterpretML

ELI5 is a Python library that can help debug machine learning classifiers and interpret their predictions. Currently the following machine learning frameworks are supported:

ELI5 has two main ways to explain a classification or regression model:

Global weights generated with the ELI5 library

Local weights generated with the ELI5 library
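
The difference between global and local weights can be illustrated with a hand-written linear classifier. This sketch does not call ELI5; it only mirrors the idea for a linear model, where global weights are the coefficients and local weights are each feature's contribution to one prediction. All names and numbers are hypothetical.

```python
import math

# Hypothetical, hand-set logistic regression model.
feature_names = ["age", "income", "num_purchases"]
weights = [0.8, -0.5, 1.2]
bias = -0.3


def global_weights():
    """Global view: features ranked by the absolute size of their coefficient."""
    return sorted(zip(feature_names, weights), key=lambda p: abs(p[1]), reverse=True)


def local_contributions(x):
    """Local view: what each feature adds to the score of one instance."""
    contribs = [(name, w * v) for name, w, v in zip(feature_names, weights, x)]
    contribs.append(("<BIAS>", bias))
    return contribs


x = [1.0, 2.0, 0.5]  # one (already scaled) instance
score = sum(c for _, c in local_contributions(x))
probability = 1 / (1 + math.exp(-score))

print(global_weights())
print(local_contributions(x))
print(f"score={score:.2f}, probability={probability:.3f}")
```

The global ranking is the same for every input, while the local contributions depend on the feature values of the instance being explained.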

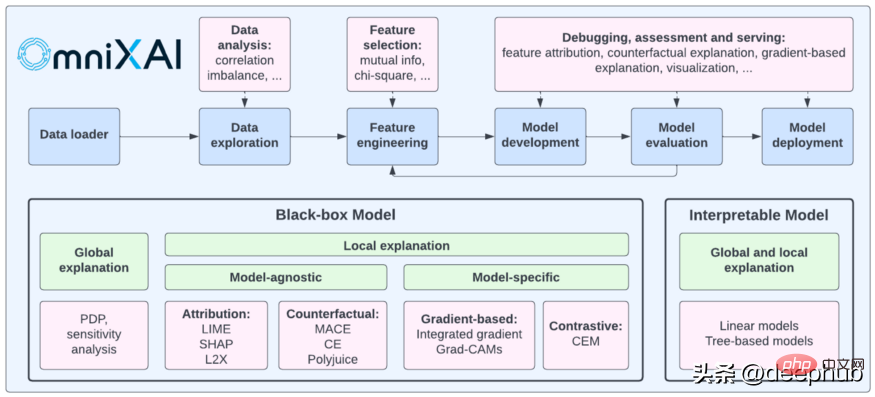

OmniXAI (short for Omni eXplainable AI) is a Python library recently developed and open-sourced by Salesforce. It offers a broad range of explainable AI and interpretable machine learning capabilities to address common pain points in understanding the decisions made by machine learning models in practice. For data scientists and ML researchers who need to explain various types of data, models, and explanation techniques at different stages of the ML process, OmniXAI aims to be a one-stop, comprehensive library that makes explainable AI easy.

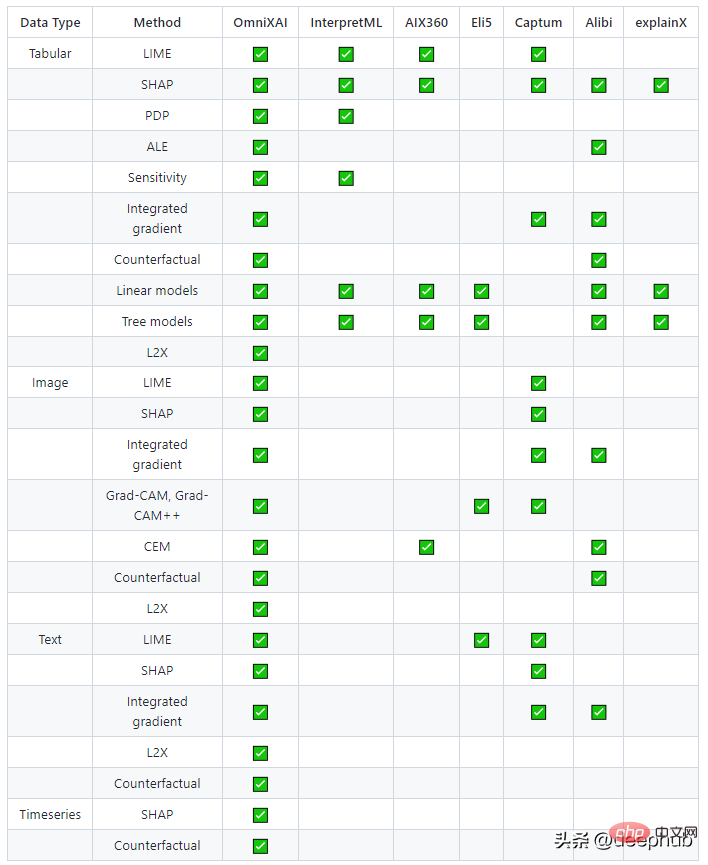

The following is a comparison between what OmniXAI provides and other similar libraries
