Multimodal learning aims to understand and analyze information from multiple modalities, and it has made substantial progress in the supervised regime in recent years.

However, heavy reliance on data combined with expensive manual annotation hinders model scaling. At the same time, given the availability of large-scale unlabeled data in the real world, self-supervised learning has become an attractive strategy to alleviate the labeling bottleneck.

Building on these two directions, self-supervised multimodal learning (SSML) provides ways to exploit supervision from raw multimodal data.

Paper address: https://arxiv.org/abs/2304.01008

Project address: https://github.com/ys-zong/awesome-self-supervised-multimodal-learning

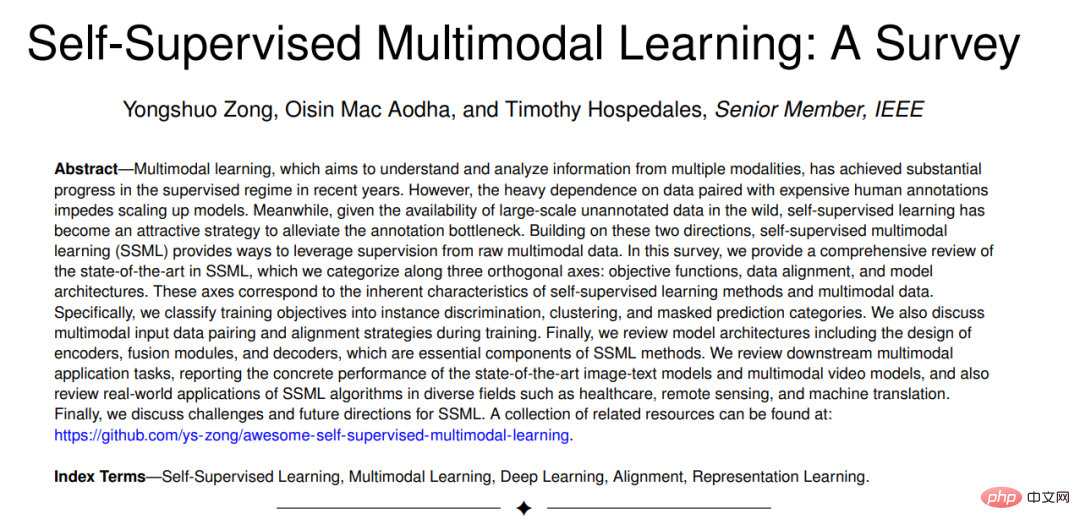

In this survey, we provide a comprehensive review of state-of-the-art SSML techniques, which we classify along three orthogonal axes: objective function, data alignment, and model architecture. These axes correspond to the inherent characteristics of self-supervised learning methods and multimodal data.

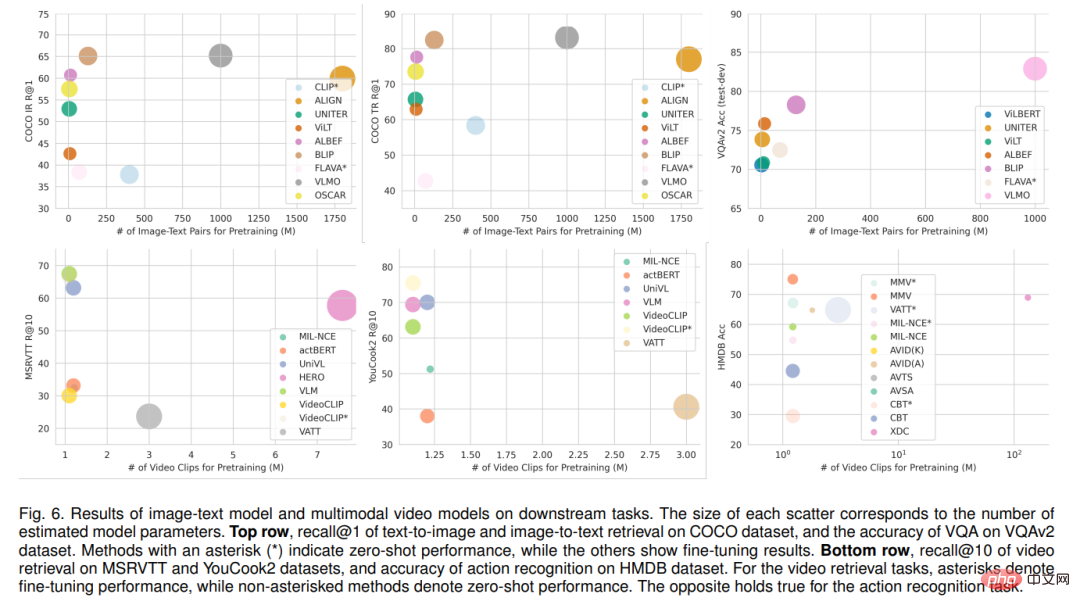

Specifically, we divide the training objectives into instance discrimination, clustering, and masked prediction categories. We also discuss multimodal input data pairing and alignment strategies during training. Model architectures are then reviewed, including the design of encoders, fusion modules, and decoders, which are important components of SSML methods. We further review downstream multimodal application tasks, report the performance of state-of-the-art image-text and multimodal video models, and review practical applications of SSML algorithms in different fields such as healthcare, remote sensing, and machine translation. Finally, challenges and future directions for SSML are discussed.

1. Introduction

Multimodal algorithms often still require expensive manual annotation for effective training, which hinders their scaling. Recently, self-supervised learning (SSL) [9], [10] has begun to alleviate this problem by generating supervision from readily available unannotated data. Self-supervision in single-modal learning is fairly well defined: it depends only on the training objective and on whether human annotation is used for supervision. In the context of multimodal learning, however, its definition is more nuanced, because one modality often acts as a supervisory signal for another. Given the goal of scaling up by eliminating the manual annotation bottleneck, a key issue in defining the scope of self-supervision is whether cross-modal pairings are freely available.

Self-supervised multimodal learning (SSML) significantly enhances the capabilities of multimodal models by leveraging freely available multimodal data and self-supervised objectives.

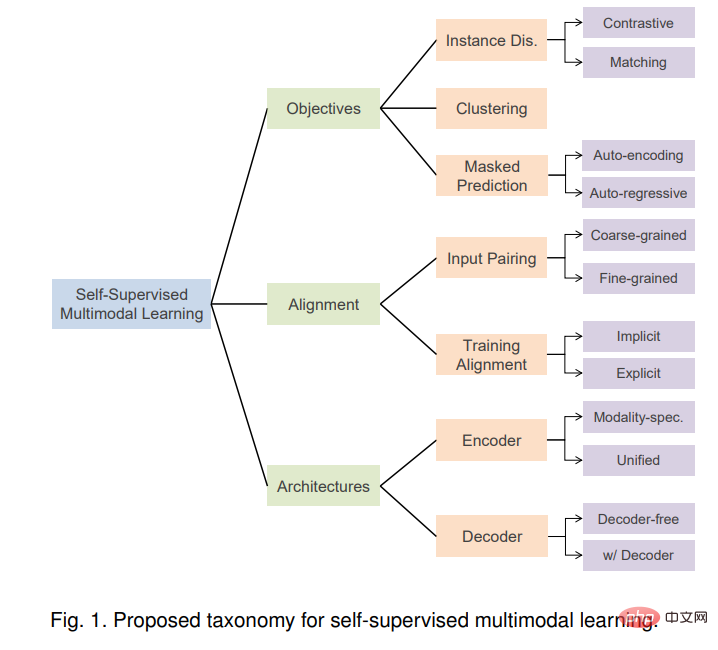

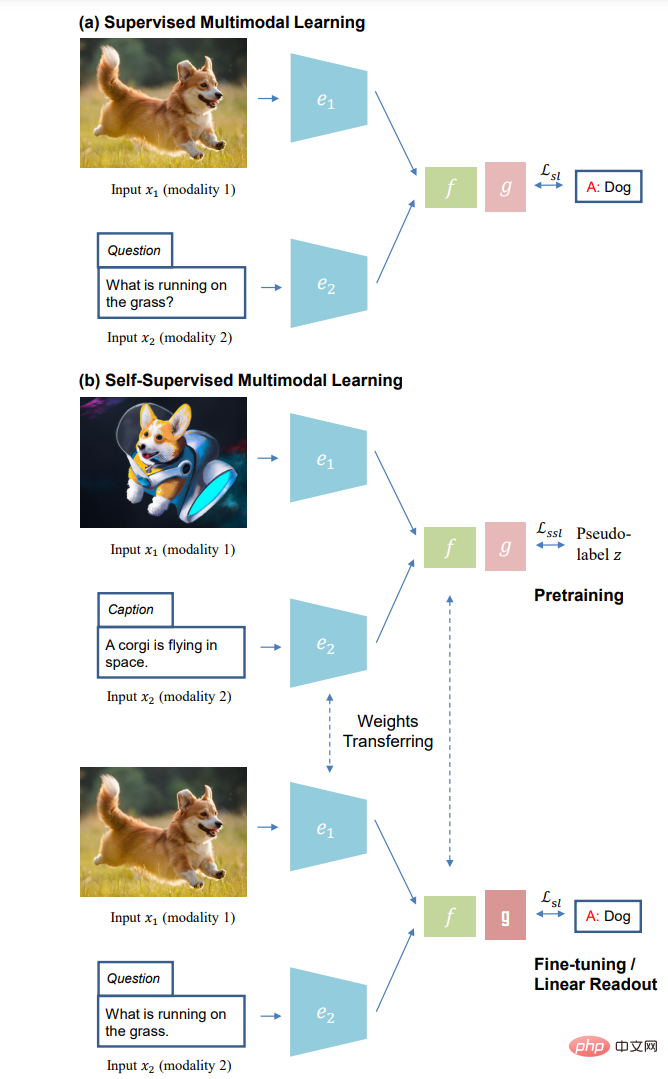

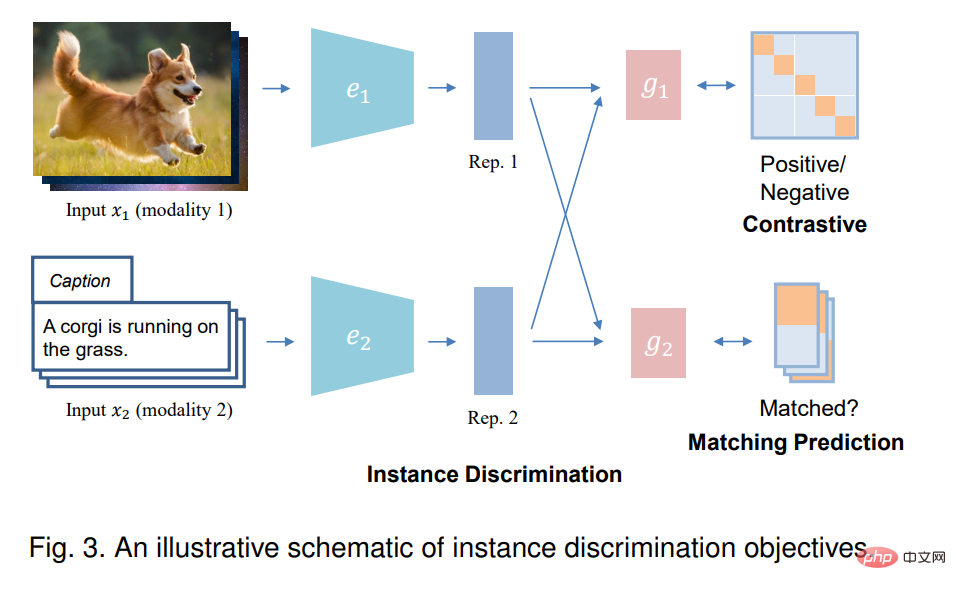

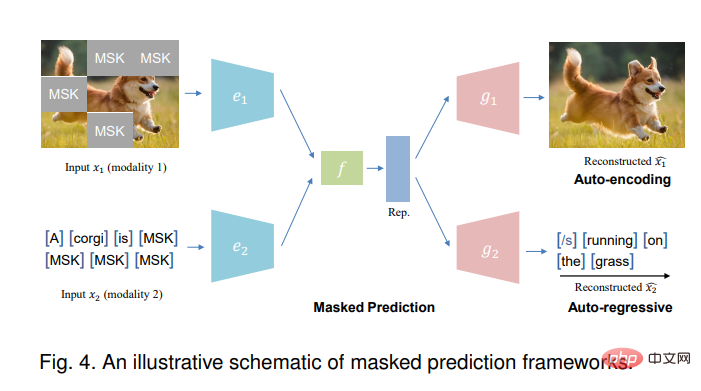

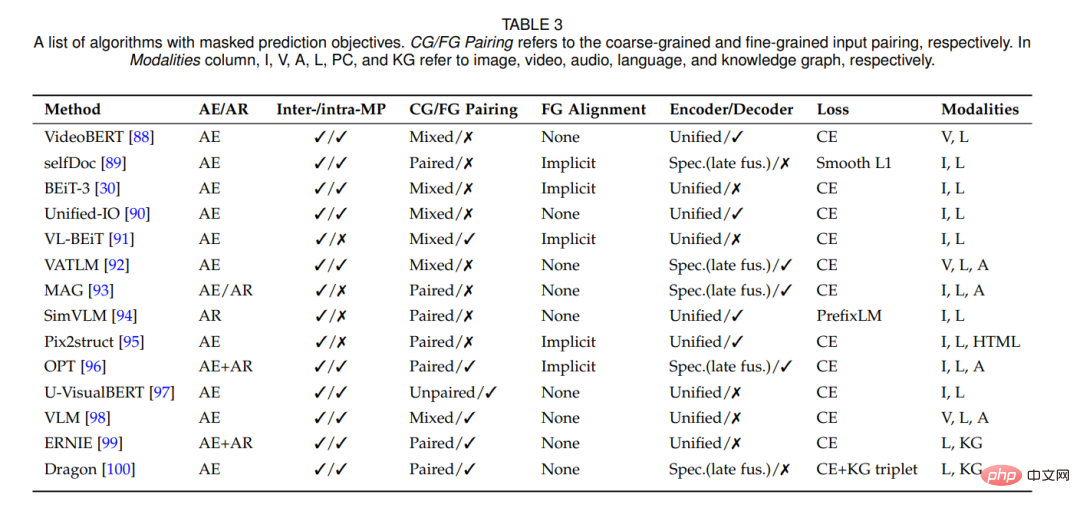

In this survey, we review SSML algorithms and their applications. We decompose the various methods along three orthogonal axes: objective function, data alignment, and model architecture. These axes correspond to the characteristics of self-supervised learning algorithms and the specific considerations required for multimodal data. Figure 1 provides an overview of the proposed taxonomy. Based on the pretext task, we divide the training objectives into instance discrimination, clustering, and masked prediction categories. Hybrid approaches that combine two or more of these objectives are also discussed.

Unique to multimodal self-supervision is the problem of multimodal data pairing. Pairings, or more generally alignments, between modalities can be exploited by SSML algorithms as input (e.g., when one modality is used to provide supervision for another), but can also be produced as output (e.g., when a model learns from unpaired data and induces pairing as a by-product). We discuss the different roles of alignment: coarse-grained alignment, which is often assumed to be freely available in multimodal self-supervision (e.g., web-crawled images and captions [11]), and fine-grained alignment, which is sometimes explicitly or implicitly induced (e.g., correspondence between caption words and image patches [12]). Additionally, we explore the intersection of objective functions and data alignment assumptions.

We also analyze the design of contemporary SSML model architectures. Specifically, we consider the design space of encoder and fusion modules, comparing modality-specific encoders (without fusion or with late fusion) and unified encoders with early fusion. We also examine architectures with specific decoder designs and discuss the impact of these design choices.

Finally, we discuss the applications of these algorithms in multiple real-world domains, including healthcare, remote sensing, and machine translation, and we discuss in depth the technical challenges and social impacts of SSML, indicating potential future research directions. We summarize recent advances in methods, datasets, and implementations to provide a starting point for researchers and practitioners in the field.

Existing survey papers focus only on supervised multimodal learning [1], [2], [13], [14], on single-modality self-supervised learning [9], [10], [15], or on a certain sub-area of SSML, such as vision-language pre-training [16]. The most relevant survey is [17], but it focuses more on temporal data and ignores alignment and architecture, which are key considerations in multimodal self-supervision. In contrast, we provide a comprehensive and up-to-date overview of SSML algorithms and a new taxonomy covering algorithms, data, and architecture.

2. Background knowledge

Self-supervision in multi-modal learning

We first describe the scope of SSML considered in this survey, as this term has been used inconsistently in previous literature. Defining self-supervision in a single-modal context is more straightforward: one can invoke the label-free nature of different pretext tasks, e.g., the well-known instance discrimination [20] or masked prediction [21] objectives. In contrast, the situation in multimodal learning is more complicated because the roles of modality and label become blurred. For example, in supervised image captioning [22], text is usually treated as a label, but in self-supervised multimodal vision-and-language representation learning [11], text is treated as an input modality.

In the multimodal context, the term self-supervision has been used to refer to at least four situations:

(1) Label-free learning from automatically paired multimodal data, such as movies with video and audio tracks [23], or image and depth data from RGBD cameras [24].

(2) Learning from multimodal data in which one modality has been manually annotated, or two modalities have been manually paired, but this annotation was created for a different purpose and can therefore be considered free for SSML pre-training. For example, matching image-caption pairs scraped from the web, as used in the seminal CLIP [11], is actually an example of supervised metric learning [25], [26] where the pairing is the supervision. However, since both the modalities and the pairings are freely available at scale, it is often described as self-supervised. This uncurated, incidentally created data is often of lower quality and noisier than specially curated datasets such as COCO [22] and Visual Genome [27].

(3) Learning from high-quality, purpose-annotated multimodal data (e.g., manually captioned images in COCO [22]), but with a self-supervised-style objective, as in Pixel-BERT [28].

(4) Finally, there are “self-supervised” methods that use a mixture of free and manually labeled multimodal data [29], [30].

For the purpose of this survey, we follow the spirit of self-supervision, which aims to scale up by breaking the manual annotation bottleneck. Therefore, we include the first two categories and the fourth category of methods, insofar as they can be trained on freely available data. We exclude methods shown only on manually curated datasets, because they merely apply typical “self-supervision” objectives (e.g., masked prediction) to curated data.

Figure caption: Learning paradigms of (a) supervised multimodal learning and (b) self-supervised multimodal learning: self-supervised pre-training without manual annotation (top); supervised fine-tuning on downstream tasks (bottom).

3. Objective function

In this section, we introduce the objective functions used to train three types of self-supervised multimodal algorithms: instance discrimination, clustering, and masked prediction. Finally, we also discuss hybrid objectives.

3.1 Instance discrimination

In single-modal learning, instance discrimination (ID) treats each instance in the raw data as a separate class, and the model is trained to distinguish between different instances. In the context of multimodal learning, instance discrimination usually aims to determine whether samples from two input modalities are from the same instance, i.e., paired. By doing so, it attempts to align the representation spaces of paired modalities while pushing the representations of different instance pairs further apart. There are two types of instance discrimination objectives, contrastive prediction and matching prediction, depending on how the input is sampled.

3.2 Clustering

Clustering methods assume that applying end-to-end trained clustering will group data by semantically salient features. In practice, these methods iteratively predict cluster assignments of encoded representations and use these predictions (also known as pseudo-labels) as supervisory signals to update the feature representations. Multimodal clustering provides the opportunity to learn multimodal representations and also to improve traditional clustering by using each modality's pseudo-labels to supervise the other modalities.

3.3 Mask prediction

The masked prediction task can be performed either in an auto-encoding manner (similar to BERT [101]) or in an auto-regressive manner (similar to GPT [102]).
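To make the contrastive flavor of instance discrimination described above more concrete, here is a minimal NumPy sketch. It assumes a symmetric InfoNCE-style loss over a batch of paired embeddings, which is one common way to implement contrastive prediction and not necessarily the formulation of any particular method in the survey. The function names, the temperature value, and the toy "image" and "text" embeddings are all illustrative assumptions.

```python
import numpy as np

def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))

def contrastive_id_loss(emb_a, emb_b, temperature=0.07):
    """Symmetric InfoNCE-style loss (assumed formulation).

    Row i of emb_a and row i of emb_b are treated as a positive pair;
    every other row in the batch acts as a negative.
    """
    a = emb_a / np.linalg.norm(emb_a, axis=1, keepdims=True)
    b = emb_b / np.linalg.norm(emb_b, axis=1, keepdims=True)
    logits = a @ b.T / temperature          # cosine similarities, scaled
    idx = np.arange(len(a))
    loss_a2b = -log_softmax(logits, axis=1)[idx, idx].mean()  # a retrieves b
    loss_b2a = -log_softmax(logits, axis=0)[idx, idx].mean()  # b retrieves a
    return 0.5 * (loss_a2b + loss_b2a)

# Toy data: "text" embeddings are noisy copies of the "image" embeddings.
rng = np.random.default_rng(0)
image_emb = rng.normal(size=(8, 16))
text_emb_aligned = image_emb + 0.01 * rng.normal(size=(8, 16))
# Reversing the batch order breaks every pairing (8 is even, so no row stays put).
text_emb_shuffled = text_emb_aligned[::-1].copy()

loss_aligned = contrastive_id_loss(image_emb, text_emb_aligned)
loss_shuffled = contrastive_id_loss(image_emb, text_emb_shuffled)
print(f"aligned pairs: {loss_aligned:.4f}, broken pairs: {loss_shuffled:.4f}")
```

The loss is low when paired rows are close and unpaired rows are far apart, which is the alignment behavior the text describes. In a real system the embeddings would come from trainable modality encoders and the loss would be minimized by gradient descent.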

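The cross-modal pseudo-labeling idea behind multimodal clustering can be sketched in the same spirit. This is a deliberately simplified illustration, not the procedure of any specific method: it assumes fixed features, plain k-means on one modality, and a linear softmax head on the other modality trained on the resulting pseudo-labels. All names (`kmeans_assign`, `pseudo_label_step`), the learning rate, and the toy data are hypothetical.

```python
import numpy as np

def kmeans_assign(features, k, n_iters=10, seed=0):
    """Plain k-means; returns one cluster index (pseudo-label) per sample."""
    rng = np.random.default_rng(seed)
    centroids = features[rng.choice(len(features), size=k, replace=False)].copy()
    for _ in range(n_iters):
        dists = ((features[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
        labels = dists.argmin(axis=1)
        for c in range(k):
            if np.any(labels == c):            # skip empty clusters
                centroids[c] = features[labels == c].mean(axis=0)
    return labels

def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)

def pseudo_label_step(feat_b, pseudo_labels, weights, lr=0.1):
    """One gradient step of a linear softmax head on modality B,
    supervised by pseudo-labels obtained from modality A."""
    n = len(pseudo_labels)
    probs = softmax(feat_b @ weights)
    loss = -np.log(probs[np.arange(n), pseudo_labels]).mean()
    one_hot = np.eye(weights.shape[1])[pseudo_labels]
    grad = feat_b.T @ (probs - one_hot) / n
    return weights - lr * grad, loss

# Toy paired data: both modalities share a hidden two-way grouping.
rng = np.random.default_rng(0)
cluster_id = rng.integers(0, 2, size=64)
centers_a = np.array([[-1.0, -1.0, -1.0, -1.0], [1.0, 1.0, 1.0, 1.0]])
centers_b = np.array([[1.0, -1.0, 1.0, -1.0], [-1.0, 1.0, -1.0, 1.0]])
feat_a = centers_a[cluster_id] + 0.3 * rng.normal(size=(64, 4))
feat_b = centers_b[cluster_id] + 0.3 * rng.normal(size=(64, 4))

pseudo_labels = kmeans_assign(feat_a, k=2)     # cluster modality A
weights = np.zeros((4, 2))                     # linear head on modality B
losses = []
for _ in range(20):
    weights, loss = pseudo_label_step(feat_b, pseudo_labels, weights)
    losses.append(loss)
print(f"loss before training: {losses[0]:.4f}, after: {losses[-1]:.4f}")
```

In an actual SSML pipeline the features would come from encoders that are updated together with the head, and the clustering would be recomputed as the representations change. That outer loop is the iterative part of the description above, and it is omitted here to keep the sketch short.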
